Ubuntu 18.04: Setting Up a Single-Machine Pseudo-Distributed Hadoop 2.7.7 + HBase 2.0.5 Environment

System: Ubuntu 18.04

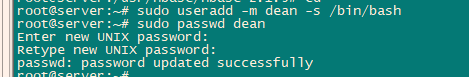

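I. Add a regular user

1. Log in to Ubuntu as root.

2. Create a regular user named dean with /bin/bash as the login shell:

sudo useradd -m dean -s /bin/bash

3. Set the password:

sudo passwd dean

Enter the password twice when prompted.

4. Grant administrator (sudo) privileges:

sudo adduser dean sudo

5. Switch to the new user:

su dean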

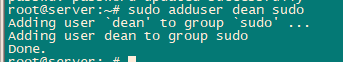

6. Update apt

sudo apt-get update

This must be run with sudo; without it, apt reports a permission error.


II. Install Java

1. Install with apt:

sudo apt install openjdk-8-jdk

2. Check that the installation succeeded:

java -version

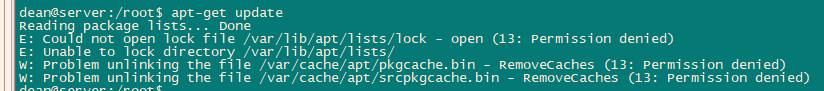

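3. Configure environment variables

(1) Find the Java directory:

ls -la /etc/alternatives/java

Copy the JDK directory from the symlink target shown in the output: /usr/lib/jvm/java-8-openjdk-amd64

(2) Set the environment variables

Edit the .bashrc file:

vim ~/.bashrc

If vim is not installed, install it with sudo apt install vim.

Add the environment variables at the top of the file: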

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

Save the file:

Press Esc, then type :wq

Apply the environment variables to the current shell:

source ~/.bashrc
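The JDK path in step (1) can also be derived instead of copied by hand. The following is a minimal sketch, assuming GNU readlink (the Ubuntu default) and the usual OpenJDK 8 layout in which the java binary sits under jre/bin or bin; the function name java_home_from_bin is made up for this example.

```shell
# Strip the trailing /bin/java (and an optional /jre before it) from the
# resolved path of the java binary to get a JAVA_HOME candidate.
java_home_from_bin() {
    resolved=$1
    resolved=${resolved%/bin/java}
    resolved=${resolved%/jre}
    printf '%s\n' "$resolved"
}

# Follow the /etc/alternatives symlink chain to the real binary, if java is installed.
if command -v java >/dev/null 2>&1; then
    java_home_from_bin "$(readlink -f "$(command -v java)")"
fi
```

If the printed path differs from the one exported in .bashrc, the value in .bashrc is probably the one to correct.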

III. Install Hadoop

1. Configure passwordless SSH login

1. Install the SSH server:

sudo apt-get install openssh-server

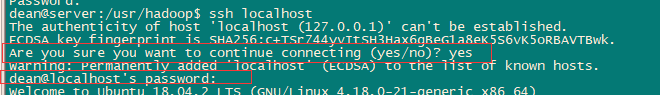

2. Log in over SSH:

ssh localhost

On the first login, type yes at the prompt shown below, then enter your password.

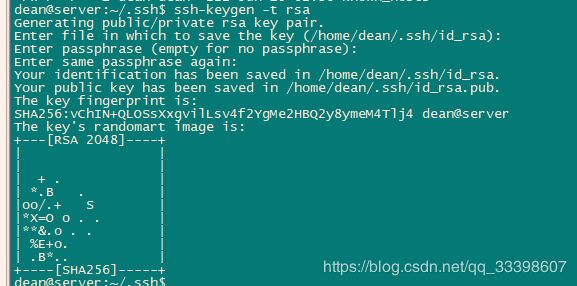

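3. Set up passwordless login

cd ~/.ssh/

If this directory does not exist, run ssh localhost once first.

Generate the key pair:

ssh-keygen -t rsa

Press Enter at every prompt to accept the defaults.

Add the public key to the authorized keys:

cat ./id_rsa.pub >> ./authorized_keys

ssh localhost should now log in without asking for a password.

If it still asks for one, repeat the steps above.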
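One common reason passwordless login keeps failing is file permissions: with its default StrictModes setting, sshd ignores keys when the .ssh directory or authorized_keys is writable by other users. The sketch below wraps the authorization step so that it can be re-run safely; the function name authorize_key is an assumption for illustration, and it expects the key pair to have been generated already.

```shell
# Append the public key to authorized_keys once, then tighten permissions.
authorize_key() {
    ssh_dir=$1
    pub_key="$ssh_dir/id_rsa.pub"
    auth_file="$ssh_dir/authorized_keys"

    [ -f "$pub_key" ] || { echo "missing $pub_key; run ssh-keygen -t rsa first" >&2; return 1; }

    touch "$auth_file"
    # Only append if this exact key line is not already present.
    grep -qxF -e "$(cat "$pub_key")" "$auth_file" || cat "$pub_key" >> "$auth_file"

    # Owner-only access for the directory and the key list.
    chmod 700 "$ssh_dir"
    chmod 600 "$auth_file"
}

# Usage: authorize_key ~/.ssh
```

Because the key is appended only when it is missing, running the function twice does not leave duplicate entries in authorized_keys.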

2. Edit the hosts file

sudo vim /etc/hosts

Add the line 127.0.0.1 localhost

Add the line 127.0.1.1 followed by your hostname (the hostname can be found in /etc/hostname)

3. Install Hadoop

Note: all of the following steps are performed as the regular user created earlier, not as root!

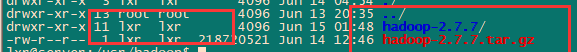

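1. Download the Hadoop 2.7.7 binary release, hadoop-2.7.7.tar.gz, from the official website.

2. Upload it to the Ubuntu machine; here it was uploaded to the /usr/hadoop/ folder.

3. Extract the archive in the directory it was uploaded to:

tar -zxvf hadoop-2.7.7.tar.gz

After extraction, a hadoop-2.7.7 folder appears in the current directory.

4. Change the ownership of the files:

sudo chown -R lxr:lxr ./hadoop-2.7.7

Replace lxr with your own username. The change succeeded if a listing of the directory shows that username as the owner.

5. Set the Java environment variable for Hadoop

Edit the hadoop-env.sh file in the hadoop-2.7.7/etc/hadoop folder:

vim hadoop-env.sh

Add JAVA_HOME: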

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

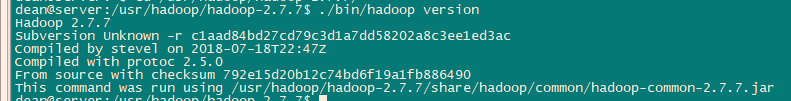

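6. Check that Hadoop runs:

./bin/hadoop version

4. Hadoop pseudo-distributed configuration

Pseudo-distributed mode requires changes to two configuration files, both in the hadoop-2.7.7/etc/hadoop/ folder: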

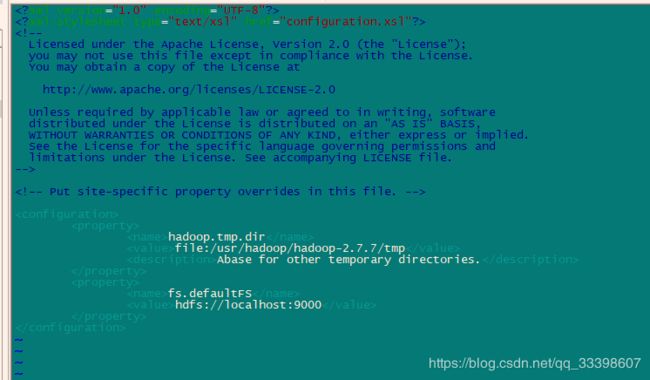

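1. core-site.xml

vim core-site.xml

Add the following properties inside the configuration element, each as a property entry with a name and a value:

name: hadoop.tmp.dir

value: file:/usr/hadoop/hadoop-2.7.7/tmp

name: fs.defaultFS

value: hdfs://localhost:9000

hadoop.tmp.dir sets the directory for temporary files.

fs.defaultFS sets the address of the HDFS file system as host:port.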

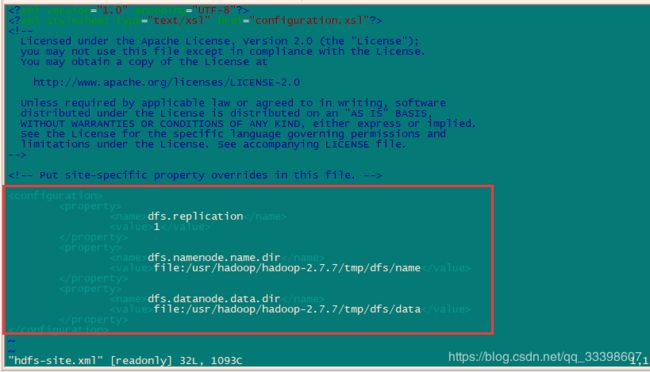
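Written out as XML, the two core-site.xml properties take the standard Hadoop property form. The following is a minimal sketch that writes the file from the shell. The CONF_DIR variable is an assumption of this example, and it defaults to a scratch directory so the snippet is safe to try; note that the command overwrites any core-site.xml already in that directory.

```shell
# Directory to write into. Defaults to a scratch directory; to apply it for
# real, run with CONF_DIR=/usr/hadoop/hadoop-2.7.7/etc/hadoop
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Base directory for Hadoop's temporary files -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/hadoop/hadoop-2.7.7/tmp</value>
  </property>
  <!-- Address (host:port) of the HDFS file system -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

echo "wrote $CONF_DIR/core-site.xml"
```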

2. hdfs-site.xml

vim hdfs-site.xml

Add the following properties inside the configuration element:

name: dfs.replication

value: 1

name: dfs.namenode.name.dir

value: file:/usr/hadoop/hadoop-2.7.7/tmp/dfs/name

name: dfs.datanode.data.dir

value: file:/usr/hadoop/hadoop-2.7.7/tmp/dfs/data
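The three hdfs-site.xml properties above can be written in the same XML property form. This sketch again uses a made-up CONF_DIR variable that defaults to a scratch directory, and it overwrites any hdfs-site.xml already present there.

```shell
# Directory to write into. Defaults to a scratch directory; to apply it for
# real, run with CONF_DIR=/usr/hadoop/hadoop-2.7.7/etc/hadoop
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- One copy of each block is enough on a single machine -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- Where the NameNode keeps its metadata -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/hadoop/hadoop-2.7.7/tmp/dfs/name</value>
  </property>
  <!-- Where the DataNode keeps its blocks -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/hadoop/hadoop-2.7.7/tmp/dfs/data</value>
  </property>
</configuration>
EOF

echo "wrote $CONF_DIR/hdfs-site.xml"
```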

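The user must be able to modify the folders named in the configuration; the chown step earlier already gave our new user ownership of the hadoop folder.

If another user starts Hadoop or modifies the contents of the hadoop folder, ownership can change and cause the NameNode format to fail: the first user to start Hadoop creates the tmp folder and owns it, so other users cannot modify it. Deleting the tmp folder and formatting again resolves this.

3. With configuration complete, format the NameNode

From the hadoop-2.7.7 directory:

./bin/hdfs namenode -format

The format succeeded if the last few lines of output contain "successfully formatted" and "Exitting with status 0".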

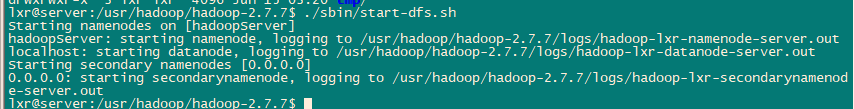

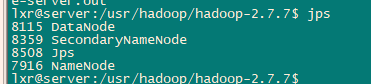

4.启动hadoop

./sbin/start-dfs.sh

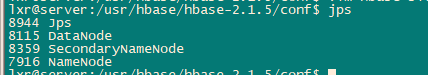

使用jps命令查看状态,datanode,namenode,secondarynamenode均启动即成功。

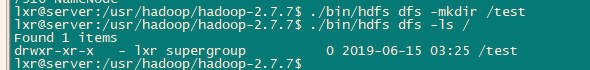
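The jps check can be scripted rather than read by eye. The following is a small sketch, assuming jps prints one line per JVM in the form of a process ID followed by the main class name; the function name check_hdfs_daemons is invented for this example.

```shell
# Read jps output on stdin and report any of the three HDFS daemons that
# are missing. Returns non-zero if at least one is absent.
check_hdfs_daemons() {
    jps_output=$(cat)
    missing=0
    for daemon in NameNode DataNode SecondaryNameNode; do
        # Match the class name as a whole field so that NameNode does not
        # also match SecondaryNameNode.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "not running: $daemon"
            missing=1
        fi
    done
    return "$missing"
}

# Usage: jps | check_hdfs_daemons
```

The function prints nothing and returns 0 when all three daemons are present, so it can be used directly in an if condition.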

To test, create a test folder in HDFS and list the root directory:

./bin/hdfs dfs -mkdir /test

./bin/hdfs dfs -ls /

5. Stop Hadoop

./sbin/stop-dfs.sh

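IV. Install ZooKeeper

HBase's built-in ZooKeeper kept failing to start. I tried several HBase versions and none stayed up: it would start, then die shortly afterwards with a flood of errors.

Setting up the whole environment took three days, two of them spent hunting for a fix to this problem without success. Synchronizing the clock, changing the configuration, disabling ufw, and switching Hadoop and HBase versions did not help. Since every error pointed at ZooKeeper, I gave up on the built-in one and installed a standalone ZooKeeper, which resolved everything. The error log is below; if you hit the same problem, switching to a standalone ZooKeeper may fix it.

Reference: https://community.hortonworks.com/questions/86429/cannot-connect-to-zookeeper-server-from-zookeeper.html

First, many repeated "Connection refused" errors: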

2019-06-17 13:15:54,429 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2019-06-17 13:15:54,429 WARN [main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1141)

Eventually the other services go down as well:

2019-06-17 13:16:09,962 ERROR [main] zookeeper.RecoverableZooKeeper: ZooKeeper create failed after 4 attempts

2019-06-17 13:16:10,962 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2019-06-17 13:16:11,063 INFO [main] zookeeper.ZooKeeper: Session: 0x0 closed

2019-06-17 13:16:11,063 INFO [main-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x0

2019-06-17 13:16:11,063 ERROR [main] regionserver.HRegionServer: Failed construction RegionServer

org.apache.hadoop.hbase.ZooKeeperConnectionException: master:160000x0, quorum=localhost:2181, baseZNode=/hbase Unexpected KeeperException creating base node

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.createBaseZNodes(ZKWatcher.java:193)

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.<init>(ZKWatcher.java:167)

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.<init>(ZKWatcher.java:119)

at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:623)

at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:492)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3099)

at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:236)

at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:140)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)

at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3117)

Caused by: org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /hbase

at org.apache.zookeeper.KeeperException.create(KeeperException.java:99)

at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)

at org.apache.zookeeper.ZooKeeper.create(ZooKeeper.java:783)

at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.createNonSequential(RecoverableZooKeeper.java:549)

at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.create(RecoverableZooKeeper.java:528)

at org.apache.hadoop.hbase.zookeeper.ZKUtil.createWithParents(ZKUtil.java:1200)

at org.apache.hadoop.hbase.zookeeper.ZKUtil.createWithParents(ZKUtil.java:1178)

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.createBaseZNodes(ZKWatcher.java:183)

... 14 more

2019-06-17 13:16:11,065 ERROR [main] master.HMasterCommandLine: Master exiting

java.lang.RuntimeException: Failed construction of Master: class org.apache.hadoop.hbase.master.HMaster.

at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3106)

at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:236)

at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:140)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)

at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3117)

Caused by: org.apache.hadoop.hbase.ZooKeeperConnectionException: master:160000x0, quorum=localhost:2181, baseZNode=/hbase Unexpected KeeperException creating base node

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.createBaseZNodes(ZKWatcher.java:193)

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.<init>(ZKWatcher.java:167)

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.<init>(ZKWatcher.java:119)

at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:623)

at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:492)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3099)

... 5 more

Caused by: org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /hbase

at org.apache.zookeeper.KeeperException.create(KeeperException.java:99)

at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)

at org.apache.zookeeper.ZooKeeper.create(ZooKeeper.java:783)

at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.createNonSequential(RecoverableZooKeeper.java:549)

at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.create(RecoverableZooKeeper.java:528)

at org.apache.hadoop.hbase.zookeeper.ZKUtil.createWithParents(ZKUtil.java:1200)

at org.apache.hadoop.hbase.zookeeper.ZKUtil.createWithParents(ZKUtil.java:1178)

at org.apache.hadoop.hbase.zookeeper.ZKWatcher.createBaseZNodes(ZKWatcher.java:183)

... 14 more

1. Install ZooKeeper

sudo apt install zookeeper

Check the version; it should be 3.4.x.

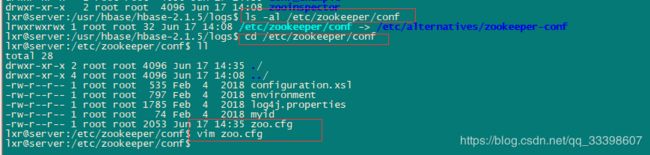

2.配置zookeeper

找到conf文件位置,修改配置文件

ls -al /etc/zookeeper

cd /etc/zookeeper/conf

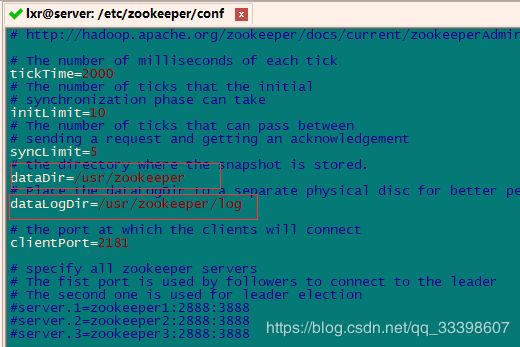

vim zoo.cfg

配置dataDir和dataLogDir,启动zookeeper的用户需要有这两个文件夹的管理员权限,否则会启动失败。

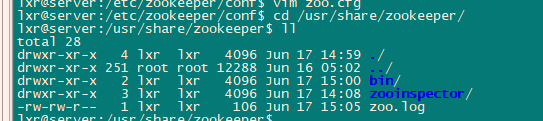
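Since missing permissions on these two folders are a typical cause of a failed start, it can help to check them before launching ZooKeeper. The following is a rough sketch, assuming zoo.cfg contains plain dataDir=... and dataLogDir=... lines with no spaces around the equals sign; the function name check_zk_dirs is made up for illustration.

```shell
# Print each directory named by dataDir/dataLogDir in a zoo.cfg file that is
# missing or not writable by the current user. Prints nothing when all is well.
check_zk_dirs() {
    cfg=$1
    # Keep only the path part of the dataDir= and dataLogDir= lines.
    sed -n -e 's/^dataDir=//p' -e 's/^dataLogDir=//p' "$cfg" |
    while IFS= read -r dir; do
        if [ ! -d "$dir" ] || [ ! -w "$dir" ]; then
            echo "not writable: $dir"
        fi
    done
}

# Usage: check_zk_dirs /etc/zookeeper/conf/zoo.cfg
```

Run it as the user that will start ZooKeeper; any directory it prints needs to be created or have its ownership fixed with chown first.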

3.启动

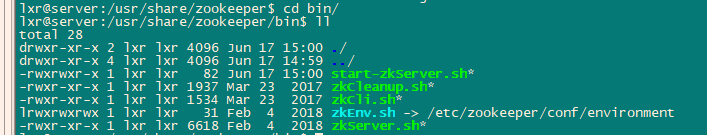

使用apt安装的zookeeper在/usr/share/zookeeper文件夹中

进入bin目录,创建后台启动脚本start-zkServer.sh,或者直接启动./zkServer.sh start

start-zkServer.sh:

nohup /usr/share/zookeeper/bin/zkServer.sh start > /usr/share/zookeeper/zoo.log &

给权限:sudo chmod +x start-zkServer.sh

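V. Install HBase

Note: all steps below are performed as the regular user (lxr), not root! HBase's built-in ZooKeeper is not used; see Part IV for installing the standalone one.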

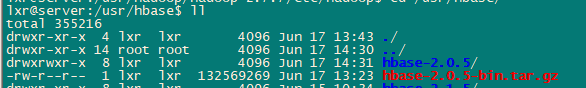

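1. Download and extract

HBase 2.0.5 is used here (the Hadoop and HBase version compatibility table is in the documentation on the HBase website).

After downloading, upload the archive to the /usr/hbase directory and extract it:

tar -zxvf hbase-2.0.5-bin.tar.gz

Change the ownership:

sudo chown -R lxr:lxr ./hbase-2.0.5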

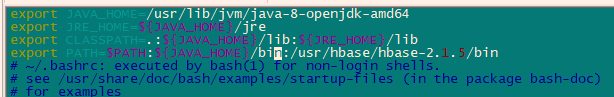

2. Configure system environment variables

1. Edit the configuration file:

vim ~/.bashrc

Append /usr/hbase/hbase-2.0.5/bin to PATH, separated by a colon.

Full configuration:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=$PATH:${JAVA_HOME}/bin:/usr/hbase/hbase-2.0.5/bin

2. Apply the changes:

source ~/.bashrc

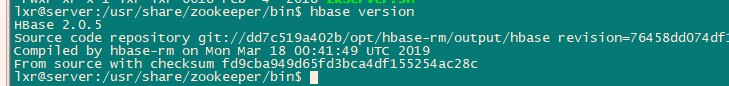

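3. Check the HBase version. Like Hadoop, HBase works straight after extraction; this confirms that it runs.

hbase version

On success the version number is printed: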

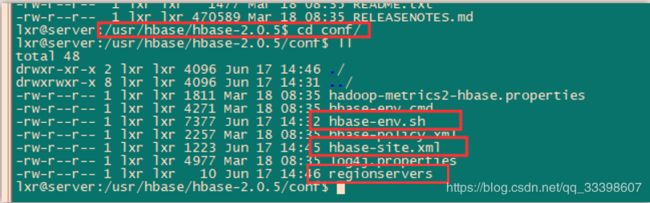

3. HBase pseudo-distributed configuration

In pseudo-distributed mode, HBase stores its data in HDFS.

Three files need to be changed, all in the hbase-2.0.5/conf folder:

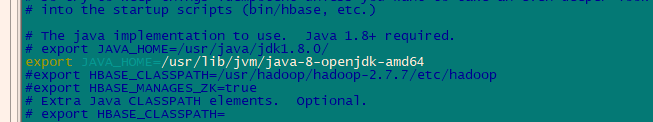

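1. hbase-env.sh

vim hbase-env.sh

Add:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HBASE_MANAGES_ZK=false

Setting HBASE_MANAGES_ZK=true would make HBase start and manage its own bundled ZooKeeper.

Because this guide uses the standalone ZooKeeper from Part IV instead of the built-in one, it is set to false.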

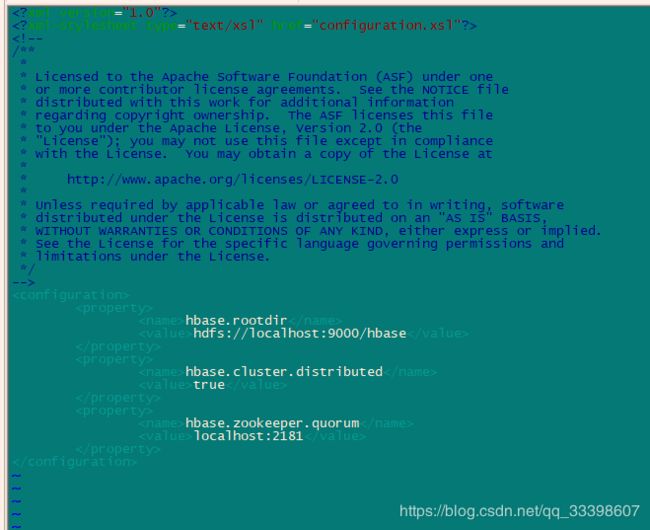

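2. hbase-site.xml

vim hbase-site.xml

Add the following properties inside the configuration element:

name: hbase.rootdir

value: hdfs://localhost:9000/hbase

name: hbase.cluster.distributed

value: true

name: hbase.zookeeper.quorum

value: localhost:2181

Notes:

hbase.rootdir: the HDFS location where HBase stores its data, in the form hdfs://host:port/hbase. HBase creates the hbase folder in HDFS automatically on startup.

hbase.cluster.distributed: runs HBase in distributed mode.

hbase.zookeeper.quorum: the host:port of the ZooKeeper service configured earlier (localhost:2181).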

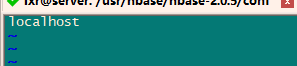
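In XML form, the three hbase-site.xml properties look as follows. This is a minimal sketch that writes the file from the shell; the CONF_DIR variable is an assumption of this example and defaults to a scratch directory, and the command overwrites any hbase-site.xml already in that directory.

```shell
# Directory to write into. Defaults to a scratch directory; to apply it for
# real, run with CONF_DIR=/usr/hbase/hbase-2.0.5/conf
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/hbase-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- HBase data lives in HDFS; must match fs.defaultFS in core-site.xml -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://localhost:9000/hbase</value>
  </property>
  <!-- Run the master and region server as separate processes -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <!-- The standalone ZooKeeper service -->
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>localhost:2181</value>
  </property>
</configuration>
EOF

echo "wrote $CONF_DIR/hbase-site.xml"
```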

3. regionservers

vim regionservers

For a single-machine setup the file should list only localhost, which is its default content.

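4. Start HBase

1. Start Hadoop

From the Hadoop root directory, run ./sbin/start-dfs.sh

Skip this if Hadoop is already running; use jps to check whether it started successfully.

jps

2. Start HBase

Make sure the standalone ZooKeeper from Part IV is running, then change to the HBase root directory.

Start HBase:

./bin/start-hbase.sh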

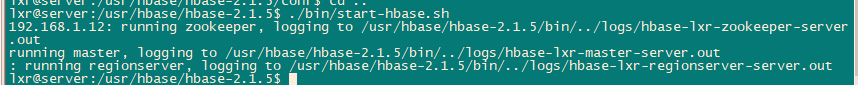

HBase started successfully:

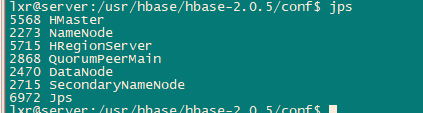


Note: the warning below may appear at startup. Hadoop and HBase each ship an SLF4J binding jar; I deleted the one bundled with HBase:

rm /usr/hbase/lib/slf4j-log4j12-1.6.4.jar

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hbase/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

Reference: https://issues.apache.org/jira/browse/HBASE-11408

5. Use the HBase shell
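A short smoke test confirms that the HBase shell can reach the cluster. The sketch below assumes hbase is on PATH (as configured in .bashrc earlier) and uses the shell's non-interactive -n mode; the table name smoke_test, the column family cf, and the function name hbase_smoke_commands are all made up for this example.

```shell
# Print a short HBase shell session: create a table, write one cell,
# read it back, then drop the table again.
hbase_smoke_commands() {
    cat <<'EOF'
create 'smoke_test', 'cf'
put 'smoke_test', 'row1', 'cf:a', 'value1'
scan 'smoke_test'
disable 'smoke_test'
drop 'smoke_test'
EOF
}

# Feed the commands to the shell in non-interactive mode when hbase is on PATH.
if command -v hbase >/dev/null 2>&1; then
    hbase_smoke_commands | hbase shell -n
else
    echo "hbase not found on PATH; commands that would be run:"
    hbase_smoke_commands
fi
```

The same commands can be typed one at a time after starting an interactive session with hbase shell; type exit to leave it.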

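Summary

1. Pay attention to user permissions

The user that starts Hadoop needs permission on the tmp folder; otherwise formatting HDFS throws an exception.

The user that starts ZooKeeper needs permission on ZooKeeper's data and log folders; otherwise startup fails.

2. The built-in ZooKeeper occasionally started successfully, but most of the time it died shortly after starting.

After switching to a standalone ZooKeeper, I started and stopped Hadoop and HBase many times and it never went down again.

References:

Hadoop 2.7.7: Setting up a Single Node Cluster: https://hadoop.apache.org/docs/r2.7.7/hadoop-project-dist/hadoop-common/SingleCluster.html

HBase 2.0.5: Pseudo-Distributed Local Install: https://hbase.apache.org/2.0/book.html#quickstart

Setting up a big data lab environment on Alibaba Cloud, Lin Ziyu: http://dblab.xmu.edu.cn/blog/1952-2/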