Installing and using Kafka Connect

Here we use the Kafka that ships with HDP. Use the HDP version to find the matching open-source component versions:

HDP 2.6.3 -> Kafka 0.10.1 -> Kafka Connect 0.10.1

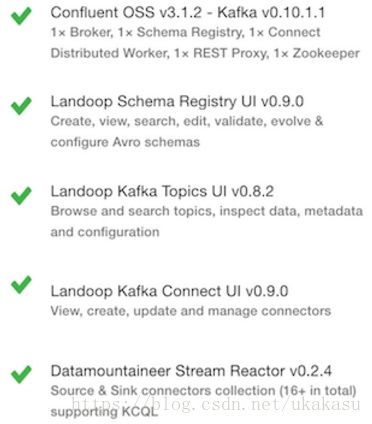

Confluent Open Source

Download: https://www.confluent.io/previous-versions/

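Install (Confluent 3.1.2 is the release built on Kafka 0.10.1; 2.11 is the Scala version):

Extract: tar -zxf confluent-oss-3.1.2-2.11.tar.gz

```
confluent-3.1.2/bin/         # Driver scripts for starting/stopping services
confluent-3.1.2/etc/         # Configuration files
confluent-3.1.2/share/java/  # Jars
```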

Kafka Connect (distributed mode)

Configuration:

vi etc/schema-registry/connect-avro-distributed.properties

```properties
# Kafka brokers; HDP's default broker port is 6667. Separate multiple brokers with commas
bootstrap.servers=localhost:6667
# Must not be the same as any consumer group name
group.id=connect-cluster

# Avro converters (Schema Registry must be running)
key.converter=io.confluent.connect.avro.AvroConverter
key.converter.schema.registry.url=http://localhost:8081
value.converter=io.confluent.connect.avro.AvroConverter
value.converter.schema.registry.url=http://localhost:8081

# JSON converters: use either these or the Avro ones above, not both.
# If both sets are active, the later keys silently override the earlier ones.
#key.converter=org.apache.kafka.connect.json.JsonConverter
#key.converter.schemas.enable=true
#value.converter=org.apache.kafka.connect.json.JsonConverter
#value.converter.schemas.enable=true

internal.key.converter=org.apache.kafka.connect.json.JsonConverter
internal.value.converter=org.apache.kafka.connect.json.JsonConverter
internal.key.converter.schemas.enable=false
internal.value.converter.schemas.enable=false

# Create these topics in advance
config.storage.topic=connect-configs
offset.storage.topic=connect-offsets
status.storage.topic=connect-statuses

# Cross-origin (CORS) access to the REST API
access.control.allow.methods=GET,POST,PUT,OPTIONS,DELETE
access.control.allow.origin=*
```

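Create the topics in advance. Their names must match config.storage.topic, offset.storage.topic and status.storage.topic above. The status topic is connect-statuses. All three topics should be compacted:

```bash
bin/kafka-topics.sh --create --zookeeper localhost:2181 --topic connect-configs --replication-factor 3 --partitions 1 --config cleanup.policy=compact
bin/kafka-topics.sh --create --zookeeper localhost:2181 --topic connect-offsets --replication-factor 3 --partitions 50 --config cleanup.policy=compact
bin/kafka-topics.sh --create --zookeeper localhost:2181 --topic connect-statuses --replication-factor 3 --partitions 10 --config cleanup.policy=compact
```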
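The worker config and the pre-created topics can easily drift apart. This Python sketch derives the three kafka-topics.sh commands from the worker properties so that the names always match.

- The partition counts, replication factor and ZooKeeper address mirror the commands above.
- The minimal properties parser is only an illustration. It is not a full java.util.Properties implementation and handles no escapes or line continuations.

```python
# Derive kafka-topics.sh commands for Connect's internal topics from the
# worker properties, so topic names cannot drift from the config.

WORKER_PROPS = """
config.storage.topic=connect-configs
offset.storage.topic=connect-offsets
status.storage.topic=connect-statuses
"""

# (property key, partition count); the config topic must have one partition
TOPIC_SETTINGS = [
    ("config.storage.topic", 1),
    ("offset.storage.topic", 50),
    ("status.storage.topic", 10),
]


def parse_properties(text):
    """Minimal key=value parser: skips blank lines and #/! comments,
    splits on the first '=' and strips surrounding whitespace."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def topic_commands(props, zookeeper="localhost:2181", replication=3):
    """Return one compacted-topic creation command per internal topic."""
    cmds = []
    for key, partitions in TOPIC_SETTINGS:
        cmds.append(
            "bin/kafka-topics.sh --create --zookeeper {zk} --topic {t} "
            "--replication-factor {r} --partitions {p} "
            "--config cleanup.policy=compact".format(
                zk=zookeeper, t=props[key], r=replication, p=partitions
            )
        )
    return cmds


if __name__ == "__main__":
    for cmd in topic_commands(parse_properties(WORKER_PROPS)):
        print(cmd)
```

To use it, paste the real worker file contents into WORKER_PROPS, or read the file from disk, before generating the commands.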

Start:

```bash
nohup bin/connect-distributed etc/schema-registry/connect-avro-distributed.properties >>connect.log &
```
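Once the worker is running, its REST API can confirm that it started. This is a minimal Python sketch built on these assumptions:

- The worker listens on the default REST port 8083, since rest.port is not set in the config above.
- GET / returns a JSON object with a version field.
- GET /connectors returns the list of deployed connectors.

```python
import json
import urllib.request

# Assumption: default Connect REST port; change it if rest.port is set.
CONNECT_URL = "http://localhost:8083"


def parse_version(body):
    """Extract the worker version from the JSON body returned by GET /."""
    return json.loads(body)["version"]


def get_body(url, timeout=5):
    """GET a URL and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    try:
        print("worker version:", parse_version(get_body(CONNECT_URL + "/")))
        print("connectors:", json.loads(get_body(CONNECT_URL + "/connectors")))
    except OSError as exc:
        # URLError (refused connection, DNS failure, timeout) is an OSError subclass
        print("Connect worker not reachable:", exc)
```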

Schema Registry

Configuration (etc/schema-registry/schema-registry.properties):

```properties
kafkastore.bootstrap.servers=PLAINTEXT://p5.ambari:6667,PLAINTEXT://p6.ambari:6667
listeners=http://p5.ambari:8081
kafkastore.connection.url=p5.ambari:2181
kafkastore.topic=_schemas
debug=false
access.control.allow.methods=GET,POST,PUT,OPTIONS
access.control.allow.origin=*
```

Start:

```bash
nohup bin/schema-registry-start etc/schema-registry/schema-registry.properties >>schema.log &
```
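A quick way to check that Schema Registry accepts schemas is to register one and list the subjects. This Python sketch targets the listener configured above (http://p5.ambari:8081). The subject name demo-value and the record schema are hypothetical and only for illustration.

One detail trips people up: the Avro schema is sent as a JSON-encoded string inside a {"schema": ...} object, not as a nested object.

```python
import json
import urllib.request

# Listener from schema-registry.properties above.
REGISTRY_URL = "http://p5.ambari:8081"
CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"


def register_request(subject, avro_schema):
    """Build POST /subjects/<subject>/versions; the schema travels as a string."""
    body = json.dumps({"schema": json.dumps(avro_schema)}).encode("utf-8")
    return urllib.request.Request(
        "{}/subjects/{}/versions".format(REGISTRY_URL, subject),
        data=body,
        headers={"Content-Type": CONTENT_TYPE},
        method="POST",
    )


# Hypothetical schema, for illustration only.
EXAMPLE_SCHEMA = {
    "type": "record",
    "name": "Demo",
    "fields": [{"name": "id", "type": "int"}],
}

if __name__ == "__main__":
    req = register_request("demo-value", EXAMPLE_SCHEMA)
    try:
        with urllib.request.urlopen(req, timeout=5) as resp:
            print("registered:", json.loads(resp.read().decode("utf-8")))
        with urllib.request.urlopen(REGISTRY_URL + "/subjects", timeout=5) as resp:
            print("subjects:", json.loads(resp.read().decode("utf-8")))
    except OSError as exc:
        print("Schema Registry not reachable:", exc)
```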

Kafka REST

Configuration:

vi etc/kafka-rest/kafka-rest.properties

```properties
bootstrap.servers=PLAINTEXT://p5.ambari:6667
id=kafka-rest-test-server
schema.registry.url=http://p5.ambari:8081
zookeeper.connect=p5.ambari:2181
listeners=http://p5.ambari:8082
access.control.allow.methods=GET,POST,PUT,DELETE,OPTIONS
access.control.allow.origin=*
host.name=pcs5.ambari
debug=true
```

Start:

```bash
nohup bin/kafka-rest-start etc/kafka-rest/kafka-rest.properties >>rest.log &
```
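To smoke-test the REST Proxy, you can produce a JSON record through its v1 API and then list the topics. This Python sketch assumes the following:

- It uses the listener configured above (http://p5.ambari:8082).
- The topic name rest_test is hypothetical.
- Whether producing to a missing topic creates that topic depends on the broker's auto.create.topics.enable setting.

```python
import json
import urllib.request

# Listener from kafka-rest.properties above.
REST_URL = "http://p5.ambari:8082"
# REST Proxy v1 content type for JSON-embedded records.
JSON_V1 = "application/vnd.kafka.json.v1+json"


def produce_request(topic, values):
    """Build POST /topics/<topic> carrying one record per value."""
    body = json.dumps({"records": [{"value": v} for v in values]}).encode("utf-8")
    return urllib.request.Request(
        "{}/topics/{}".format(REST_URL, topic),
        data=body,
        headers={"Content-Type": JSON_V1},
        method="POST",
    )


if __name__ == "__main__":
    # "rest_test" is a hypothetical topic used only for illustration.
    req = produce_request("rest_test", [{"id": 1, "msg": "hello"}])
    try:
        with urllib.request.urlopen(req, timeout=5) as resp:
            print("offsets:", json.loads(resp.read().decode("utf-8")))
        with urllib.request.urlopen(REST_URL + "/topics", timeout=5) as resp:
            print("topics:", json.loads(resp.read().decode("utf-8")))
    except OSError as exc:
        print("REST Proxy not reachable:", exc)
```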

kafka-topics-ui

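(Could not get this working with this version; Kafka probably needs to be upgraded.)

Demo: kafka-topics-ui.landoop.com

Download: https://github.com/Landoop/kafka-topics-ui/releases

Install: extract the archive, then edit its configuration.

Start: http-server -p 9091 .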

schema-registry-ui

Demo: schema-registry-ui.landoop.com

Download: https://github.com/Landoop/schema-registry-ui/releases

Start: http-server -p 9092 .

kafka-connect-ui

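Demo: kafka-connect-ui.landoop.com

Download: https://github.com/Landoop/kafka-connect-ui/releases

Install: extract the archive, then edit its configuration.

Start: http-server -p 8081 . (Schema Registry above also listens on port 8081. Run this on a different host or choose a free port.)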

Installing connectors:

plugin.path: a comma-separated list of directories that contain connector plugins

https://docs.confluent.io/current/connect/userguide.html#connect-installing-plugins

https://www.confluent.io/product/connectors/

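Kafka 0.10.x does not support plugin.path, which was introduced in Kafka 0.11.0. With this version, add the connector jars to the CLASSPATH instead.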
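After you put a connector's jars on the CLASSPATH and restart the worker, you can confirm the worker actually loaded it. This Python sketch makes three assumptions:

- The worker exposes GET /connector-plugins, which returns a JSON list of objects with a class field. Check that your Connect version has this endpoint.
- The worker uses the default REST port 8083.
- The two class names checked are the connectors used later in these notes.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083"  # assumption: default REST port


def installed_classes(body):
    """Return the set of connector class names in a GET /connector-plugins body."""
    return {plugin["class"] for plugin in json.loads(body)}


def is_installed(body, connector_class):
    """True if the worker reports the given connector class."""
    return connector_class in installed_classes(body)


if __name__ == "__main__":
    try:
        url = CONNECT_URL + "/connector-plugins"
        with urllib.request.urlopen(url, timeout=5) as resp:
            body = resp.read().decode("utf-8")
        for cls in ("io.confluent.connect.jdbc.JdbcSourceConnector",
                    "io.confluent.connect.hdfs.HdfsSinkConnector"):
            print(cls, "installed" if is_installed(body, cls) else "MISSING")
    except OSError as exc:
        print("Connect worker not reachable:", exc)
```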

Stream Reactor (a collection of connectors)

https://lenses.stream/connectors/download-connectors.html

CONFLUENT HUB

https://www.confluent.io/hub/

JDBC connector

```properties
name=timestampJdbcSourceConnector
connector.class=io.confluent.connect.jdbc.JdbcSourceConnector
tasks.max=1
topic.prefix=mysql_
connection.url=jdbc:mysql://10.8.7.96:3306/connect_test?user=root&password=123456
timestamp.column.name=download_time
table.whitelist=file_download
mode=timestamp
```
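In distributed mode, connectors are submitted through the worker's REST API rather than passed on the command line. This Python sketch turns the properties above into the {"name": ..., "config": {...}} body that POST /connectors expects.

- It assumes the default REST port 8083.
- The parser splits each line on the first "=", so the "=" signs inside connection.url survive.
- The connector should then write to the topic mysql_file_download, which is topic.prefix followed by the table name.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083"  # assumption: default REST port

JDBC_SOURCE = """
name=timestampJdbcSourceConnector
connector.class=io.confluent.connect.jdbc.JdbcSourceConnector
tasks.max=1
topic.prefix=mysql_
connection.url=jdbc:mysql://10.8.7.96:3306/connect_test?user=root&password=123456
timestamp.column.name=download_time
table.whitelist=file_download
mode=timestamp
"""


def parse_properties(text):
    """Minimal key=value parser (splits on the first '='), skipping # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            props[key.strip()] = value.strip()
    return props


def create_request(props):
    """Build POST /connectors with a {"name": ..., "config": {...}} body."""
    body = json.dumps({"name": props["name"], "config": props}).encode("utf-8")
    return urllib.request.Request(
        CONNECT_URL + "/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = create_request(parse_properties(JDBC_SOURCE))
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            print(json.loads(resp.read().decode("utf-8")))
    except OSError as exc:
        print("request failed:", exc)
```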

When writing to HDFS, the data format is JSON or Avro.

MySQL -> MySQL

JDBC source

```properties
name=JdbcSourceConnector4
connector.class=io.confluent.connect.jdbc.JdbcSourceConnector
tasks.max=1
topic.prefix=mysql4_
connection.url=jdbc:mysql://10.8.7.96:3306/connect_test?user=root&password=123456
timestamp.column.name=download_time
validate.non.null=true
batch.max.rows=100
table.whitelist=file_download
mode=timestamp
value.converter=org.apache.kafka.connect.json.JsonConverter
key.converter=org.apache.kafka.connect.json.JsonConverter
```
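This is a simplified Python simulation of what mode=timestamp with timestamp.column.name=download_time does conceptually. It uses the built-in sqlite3 instead of MySQL, and the id column is hypothetical. The real connector's query and offset handling are more involved.

The model works as follows:

- Each poll fetches rows whose timestamp is greater than the last stored offset, in timestamp order.
- The largest timestamp seen becomes the new offset.

```python
import sqlite3


def poll_since(conn, last_ts):
    """One poll: rows with download_time > last_ts, oldest first.
    Returns (rows, new_offset); the offset only moves forward."""
    rows = conn.execute(
        "SELECT id, download_time FROM file_download "
        "WHERE download_time > ? ORDER BY download_time",
        (last_ts,),
    ).fetchall()
    new_offset = rows[-1][1] if rows else last_ts
    return rows, new_offset


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE file_download (id INTEGER, download_time TEXT)")
    conn.execute("INSERT INTO file_download VALUES (1, '2018-01-01 10:00:00')")
    rows, offset = poll_since(conn, "")  # first poll: everything so far
    print("poll 1:", rows)
    conn.execute("INSERT INTO file_download VALUES (2, '2018-01-01 10:05:00')")
    rows, offset = poll_since(conn, offset)  # second poll: only the newer row
    print("poll 2:", rows)


if __name__ == "__main__":
    demo()
```

The model also shows a known limitation. A row inserted later with a timestamp equal to the stored offset is never fetched. This is why the JDBC connector also offers a timestamp+incrementing mode.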

JDBC sink

```properties
name=JdbcSinkConnector
connector.class=io.confluent.connect.jdbc.JdbcSinkConnector
topics=TopicName_JdbcSinkConnector
tasks.max=1
connection.url=jdbc:mysql://10.8.7.96:3306/connect_test?user=root&password=123456
value.converter=org.apache.kafka.connect.json.JsonConverter
key.converter=org.apache.kafka.connect.json.JsonConverter
auto.create=true
```
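This sink uses JsonConverter and leaves schemas.enable at its default (true), so each message must carry its schema. auto.create=true builds the target table from that schema.

This Python sketch wraps a flat record in the {"schema": ..., "payload": ...} envelope that JsonConverter expects. The Python-to-Connect type mapping, the struct name and the example row are assumptions made for illustration.

```python
import json

# Assumed mapping from Python types to Connect schema types (a small subset).
CONNECT_TYPES = {bool: "boolean", int: "int64", float: "float64", str: "string"}


def to_connect_json(record, name="file_download"):
    """Wrap a flat dict in JsonConverter's schema/payload envelope."""
    fields = [
        # exact type() lookup, so True maps to boolean rather than int64
        {"field": key, "type": CONNECT_TYPES[type(value)], "optional": False}
        for key, value in record.items()
    ]
    schema = {"type": "struct", "name": name, "optional": False, "fields": fields}
    return json.dumps({"schema": schema, "payload": record})


if __name__ == "__main__":
    # Hypothetical row; one JSON document per line for a console producer.
    print(to_connect_json({"id": 1, "file_name": "a.txt"}))
```

To feed such lines to the sink, pipe them into `bin/kafka-console-producer.sh --broker-list localhost:6667 --topic TopicName_JdbcSinkConnector`.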

MySQL -> Hive

JDBC source

```properties
name=testJdbcSourceConnector
connector.class=io.confluent.connect.jdbc.JdbcSourceConnector
tasks.max=1
topic.prefix=test_
connection.url=jdbc:mysql://10.8.7.96:3306/connect_test?user=root&password=123456
value.converter=io.confluent.connect.avro.AvroConverter
key.converter=io.confluent.connect.avro.AvroConverter
key.converter.schema.registry.url=http://p6.ambari:7788
timestamp.column.name=download_time
validate.non.null=true
batch.max.rows=100
table.whitelist=file_download
mode=timestamp
value.converter.schema.registry.url=http://p6.ambari:7788
```
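This source writes to test_file_download, which is the topic the HDFS sink consumes. Before wiring up the sink, it helps to confirm the source is actually running.

This Python sketch reads GET /connectors/<name>/status and summarizes the connector and task states. It assumes the following:

- The worker uses the default REST port 8083.
- The response has a connector.state field and a tasks list, where each task has an id, a state and, on failure, a trace.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083"  # assumption: default REST port


def summarize_status(body):
    """Return (connector_state, [(task_id, task_state), ...]) from a status body."""
    status = json.loads(body)
    tasks = [(t["id"], t["state"]) for t in status.get("tasks", [])]
    return status["connector"]["state"], tasks


def all_running(body):
    """True only if the connector and every task report RUNNING."""
    state, tasks = summarize_status(body)
    return state == "RUNNING" and all(s == "RUNNING" for _, s in tasks)


if __name__ == "__main__":
    url = CONNECT_URL + "/connectors/testJdbcSourceConnector/status"
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            body = resp.read().decode("utf-8")
        verdict = "OK" if all_running(body) else "check tasks[].trace"
        print(summarize_status(body), verdict)
    except OSError as exc:
        print("status request failed:", exc)
```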

HDFS sink

```properties
connector.class=io.confluent.connect.hdfs.HdfsSinkConnector
filename.offset.zero.pad.width=10
topics.dir=topics
flush.size=2
tasks.max=1
hive.home=/usr/hdp/2.6.3.0-235/hive
hive.database=default
rotate.interval.ms=60000
retry.backoff.ms=5000
hadoop.home=/usr/hdp/2.6.3.0-235/hadoop
logs.dir=logs
schema.cache.size=1000
format.class=io.confluent.connect.hdfs.avro.AvroFormat
hive.integration=true
hive.conf.dir=/usr/hdp/2.6.3.0-235/hive/conf
value.converter=io.confluent.connect.avro.AvroConverter
key.converter=io.confluent.connect.avro.AvroConverter
partition.duration.ms=-1
hadoop.conf.dir=/usr/hdp/2.6.3.0-235/hadoop/conf
schema.compatibility=BACKWARD
topics=test_file_download
hdfs.url=
hdfs.authentication.kerberos=false
hive.metastore.uris=thrift://p6.ambari:9083
partition.field.name=name_id
value.converter.schema.registry.url=http://p6.ambari:7788
partitioner.class=io.confluent.connect.hdfs.partitioner.FieldPartitioner
name=testHdfsSinkConnector
storage.class=io.confluent.connect.hdfs.storage.HdfsStorage
key.converter.schema.registry.url=http://p6.ambari:7788
```

With HDFS HA configured, point the connector at the Hadoop conf directory (hadoop.conf.dir) and leave hdfs.url empty.
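This Python sketch shows roughly where committed files land under the sink settings above, relative to hdfs.url. It combines topics.dir, the topic name, FieldPartitioner's <field>=<value> directory for name_id, and filename.offset.zero.pad.width=10.

- The naming scheme follows my understanding of the HDFS connector: <topic>+<kafka partition>+<start offset>+<end offset>.avro. Verify it against the files your connector actually writes.
- The name_id value 7 and the offsets 0 to 1 are hypothetical. They stand for one file holding flush.size=2 records.

```python
def committed_path(topics_dir, topic, field, value, kafka_partition,
                   start_offset, end_offset, width=10, ext=".avro"):
    """Sketch of a committed file's path relative to hdfs.url."""
    # FieldPartitioner encodes the directory as <field>=<value>
    partition_dir = "{}={}".format(field, value)
    # <topic>+<kafka partition>+<start>+<end><ext>, offsets zero-padded to width
    filename = "{}+{}+{:0{w}d}+{:0{w}d}{}".format(
        topic, kafka_partition, start_offset, end_offset, ext, w=width)
    return "/".join([topics_dir, topic, partition_dir, filename])


if __name__ == "__main__":
    # Hypothetical name_id value and offsets, for illustration only.
    print(committed_path("topics", "test_file_download", "name_id", 7, 0, 0, 1))
```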