Netty: Sticky Packets and Packet Splitting

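TCP is a "stream" protocol. A stream is a sequence of data with no boundaries: think of water flowing in a river, continuous and with no dividing lines. TCP knows nothing about the meaning of the application data it carries and divides the data into packets according to the actual state of its buffers. From the application's point of view, one complete message may therefore be split by TCP into several packets, and several small messages may be packed into one larger packet. This is the so-called TCP sticky-packet and packet-splitting problem.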

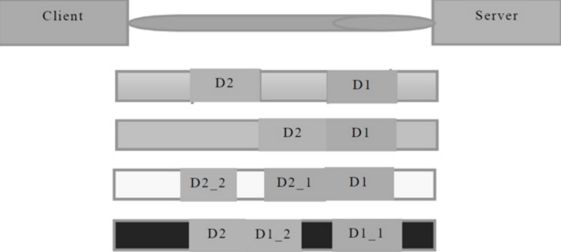

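Suppose the client sends two packets, D1 and D2, to the server. Because the number of bytes the server gets from a single read is not fixed, four cases are possible.

(1) The server reads two independent packets in two reads, D1 and then D2. Nothing is stuck together or split.

(2) The server receives both packets in a single read, with D1 and D2 stuck together. This is a TCP sticky packet.

(3) The server needs two reads. The first read returns all of D1 plus part of D2, and the second returns the rest of D2. This is TCP packet splitting.

(4) The server needs two reads. The first read returns part of D1 (D1_1), and the second returns the rest of D1 (D1_2) together with all of D2.

If the server's TCP receive window is very small while D1 and D2 are fairly large, a fifth case is likely: the server needs many reads to receive D1 and D2 completely, and the packets are split several times along the way.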
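The four cases can be reproduced without a network. The sketch below is only an illustration: the class `StreamReadDemo`, the helper `split`, and the payloads `D1-HELLO` and `D2-WORLD` are made up for this example. It treats the two packets as one continuous byte sequence and shows what each read returns for different read sizes.

```java
import java.util.ArrayList;
import java.util.List;

final class StreamReadDemo {

    // Cuts the stream into consecutive reads of the given sizes and
    // returns what each individual read would deliver.
    static List<String> split(String stream, int... readSizes) {
        List<String> reads = new ArrayList<>();
        int pos = 0;
        for (int size : readSizes) {
            if (pos >= stream.length()) {
                break; // nothing left to read
            }
            int end = Math.min(pos + size, stream.length());
            reads.add(stream.substring(pos, end));
            pos = end;
        }
        return reads;
    }

    public static void main(String[] args) {
        // The sender wrote D1 and then D2; on the wire there is no boundary between them.
        String stream = "D1-HELLO" + "D2-WORLD";
        System.out.println(split(stream, 8, 8));  // case 1: [D1-HELLO, D2-WORLD]
        System.out.println(split(stream, 16));    // case 2: [D1-HELLOD2-WORLD]
        System.out.println(split(stream, 11, 5)); // case 3: [D1-HELLOD2-, WORLD]
        System.out.println(split(stream, 5, 11)); // case 4: [D1-HE, LLOD2-WORLD]
    }
}
```

Only in case 1 do the read boundaries happen to coincide with the message boundaries. The receiver cannot rely on that.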

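Causes of TCP sticky packets and packet splitting

There are three causes, listed below.

(1) The application writes more bytes in one write call than the socket send buffer can hold.

(2) TCP segments the data according to the MSS.

(3) The IP datagram exceeds the MTU (the maximum payload of an Ethernet frame) and is fragmented at the IP layer.

These three explain splitting. Sticking typically happens in the opposite situation: several small writes accumulate in the send buffer and leave together, or more than one message has already arrived by the time the receiver reads.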
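To make cause (2) concrete, the arithmetic below estimates how many TCP segments a single write needs. The figures are assumptions typical of Ethernet with IPv4, not values from the text above: an MTU of 1500 bytes and 20-byte IP and TCP headers without options. The class name `SegmentMath` is likewise hypothetical.

```java
final class SegmentMath {

    // Assumed values: standard Ethernet MTU, IPv4 and TCP headers without options.
    static final int MTU = 1500;
    static final int IP_HEADER = 20;
    static final int TCP_HEADER = 20;

    // Maximum segment size: the largest TCP payload that fits in one IP datagram.
    static int mss() {
        return MTU - IP_HEADER - TCP_HEADER;
    }

    // Number of TCP segments needed for a payload (ceiling division by the MSS).
    static int segmentsFor(int payloadBytes) {
        return (payloadBytes + mss() - 1) / mss();
    }

    public static void main(String[] args) {
        System.out.println(mss());             // 1460
        System.out.println(segmentsFor(18));   // 1: a small message fits in one segment
        System.out.println(segmentsFor(4000)); // 3: a large message is split
    }
}
```

Under these assumptions, any message longer than 1460 bytes must be split across segments, whatever the application does.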

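Strategies for solving the sticky-packet problem

Because TCP cannot understand the application data above it, the lower layer cannot guarantee that messages will not be split or merged. The problem can only be solved in the design of the application-layer protocol. The approaches used by mainstream protocols can be summarized as follows.

(1) Fixed-length messages: for example, every message is exactly 200 bytes, and shorter ones are padded with spaces.

(2) A carriage return and line feed appended to each message as a delimiter, as in the FTP protocol.

(3) A message header plus a message body, where the header contains a field giving the total message length (or the body length). A common design makes the first header field an int32 holding the total length.

(4) A more complex application-layer protocol.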
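Strategy (3) can be sketched in plain Java. This is a minimal illustration under stated assumptions, not a production decoder: the frame layout (a 4-byte big-endian body length followed by a UTF-8 body) and the class `LengthPrefixedFraming` with its methods `encode` and `feed` are choices made for this example.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class LengthPrefixedFraming {

    // Assumed frame layout: 4-byte big-endian body length, then the body bytes.
    static byte[] encode(String body) {
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + bytes.length).putInt(bytes.length).put(bytes).array();
    }

    // Bytes received so far that do not yet form a complete frame.
    private final ByteArrayOutputStream pending = new ByteArrayOutputStream();

    // Feeds the bytes of one network read and returns every message it completes.
    // A real decoder should also reject negative or oversized length values.
    List<String> feed(byte[] chunk) {
        pending.write(chunk, 0, chunk.length);
        ByteBuffer buf = ByteBuffer.wrap(pending.toByteArray());
        List<String> messages = new ArrayList<>();
        while (buf.remaining() >= 4) {
            buf.mark();
            int length = buf.getInt();
            if (buf.remaining() < length) {
                buf.reset(); // body incomplete: rewind to the length field and wait
                break;
            }
            byte[] body = new byte[length];
            buf.get(body);
            messages.add(new String(body, StandardCharsets.UTF_8));
        }
        // Keep only the unconsumed tail for the next read.
        pending.reset();
        pending.write(buf.array(), buf.position(), buf.remaining());
        return messages;
    }

    public static void main(String[] args) {
        byte[] d1 = encode("D1");
        byte[] d2 = encode("D2");
        LengthPrefixedFraming decoder = new LengthPrefixedFraming();
        // D1 arrives split in two, and its second half is stuck to D2.
        byte[] first = new byte[] {d1[0], d1[1], d1[2]};
        byte[] second = ByteBuffer.allocate(3 + d2.length).put(d1, 3, 3).put(d2).array();
        System.out.println(decoder.feed(first));  // []
        System.out.println(decoder.feed(second)); // [D1, D2]
    }
}
```

The decoder never assumes that one read equals one message. It acts only when the length field says a full body is available, so it works however the bytes were split or merged in transit.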

Simulating sticky packets and packet splitting with Netty

- Server:

TimeServer

    import io.netty.bootstrap.ServerBootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioServerSocketChannel;

    public class TimeServer {

        public static void main(String[] args) {
            EventLoopGroup bossGroup = new NioEventLoopGroup();
            EventLoopGroup workerGroup = new NioEventLoopGroup();
            try {
                ServerBootstrap b = new ServerBootstrap();
                b.group(bossGroup, workerGroup)
                        .channel(NioServerSocketChannel.class)
                        // No frame decoder is installed, so the handler sees raw TCP reads.
                        .childHandler(new ChannelInitializer<SocketChannel>() {
                            @Override
                            public void initChannel(SocketChannel ch) throws Exception {
                                ch.pipeline().addLast(new TimeServerHandler());
                            }
                        })
                        .option(ChannelOption.SO_BACKLOG, 128)
                        .childOption(ChannelOption.SO_KEEPALIVE, true);

                // Bind and start to accept incoming connections.
                ChannelFuture f = b.bind(8080).sync();
                System.out.println("server start");
                // Block until the server socket is closed.
                f.channel().closeFuture().sync();
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                workerGroup.shutdownGracefully();
                bossGroup.shutdownGracefully();
            }
        }
    }

TimeServerHandler

    import io.netty.buffer.ByteBuf;
    import io.netty.buffer.Unpooled;
    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;

    public class TimeServerHandler extends ChannelInboundHandlerAdapter {

        // Counts channelRead calls, i.e. how many reads the server actually saw.
        private int counter;

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            ByteBuf buf = (ByteBuf) msg;
            byte[] req = new byte[buf.readableBytes()];
            buf.readBytes(req);
            // The inbound buffer is not passed on, so release it here.
            buf.release();
            // Deliberately naive: assumes each read is exactly one order
            // terminated by a line separator, and strips that separator.
            String body = new String(req, "UTF-8")
                    .substring(0, req.length - System.getProperty("line.separator").length());
            System.out.println("The time server receive order : " + body
                    + " ; the counter is : " + ++counter);
            String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body)
                    ? new java.util.Date(System.currentTimeMillis()).toString()
                    : "BAD ORDER";
            currentTime = currentTime + System.getProperty("line.separator");
            ByteBuf resp = Unpooled.copiedBuffer(currentTime.getBytes());
            ctx.writeAndFlush(resp);
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
            ctx.close();
        }
    }

- Client

TimeClient

    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioSocketChannel;

    public class TimeClient {

        public static void main(String[] args) {
            EventLoopGroup workerGroup = new NioEventLoopGroup();
            try {
                Bootstrap b = new Bootstrap();
                b.group(workerGroup);
                b.channel(NioSocketChannel.class);
                b.option(ChannelOption.SO_KEEPALIVE, true);
                b.handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    public void initChannel(SocketChannel ch) throws Exception {
                        ch.pipeline().addLast(new TimeClientHandler());
                    }
                });

                // Start the client.
                ChannelFuture f = b.connect("127.0.0.1", 8080).sync();
                System.out.println("connect ");
                // Wait until the connection is closed.
                f.channel().closeFuture().sync();
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                System.out.println("disconnect ");
                workerGroup.shutdownGracefully();
            }
        }
    }

TimeClientHandler

    import io.netty.buffer.ByteBuf;
    import io.netty.buffer.Unpooled;
    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;

    public class TimeClientHandler extends ChannelInboundHandlerAdapter {

        // Counts channelRead calls, i.e. how many reads the client actually saw.
        private int counter;

        // One request: the order text followed by a line separator.
        private byte[] req;

        public TimeClientHandler() {
            req = ("QUERY TIME ORDER" + System.getProperty("line.separator")).getBytes();
        }

        @Override
        public void channelActive(ChannelHandlerContext ctx) {
            System.out.println("channelActive");
            ByteBuf message = null;
            // Send 100 separate requests back to back as soon as the connection is up.
            for (int i = 0; i < 100; i++) {
                message = Unpooled.buffer(req.length);
                message.writeBytes(req);
                ctx.writeAndFlush(message);
            }
        }

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            ByteBuf buf = (ByteBuf) msg;
            byte[] req = new byte[buf.readableBytes()];
            buf.readBytes(req);
            // The inbound buffer is not passed on, so release it here.
            buf.release();
            String body = new String(req, "UTF-8");
            System.out.println("Now is : " + body + " ; the counter is : " + ++counter);
        }

        // @Override
        // public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        //     // Release resources
        //     ctx.close();
        // }
    }

- Result analysis

Server output:

    The time server receive order : QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORD ; the counter is : 1
    The time server receive order :
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER
    QUERY TIME ORDER ; the counter is : 2

Client output:

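    channelActive
    connect
    Now is : BAD ORDER
    BAD ORDER
    ; the counter is : 1

The server's counter stops at 2: the 100 requests arrived in only two reads, neither of which equals a single QUERY TIME ORDER, so the server answered BAD ORDER twice. The client's counter stops at 1: both replies arrived in one read. Sticky packets occurred in both directions.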
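Since every request in this demo ends with a line separator, delimiter-based framing (strategy 2) is enough to recover the individual orders. The sketch below shows the idea in plain Java. The class `LineFramer` and its method `feed` are hypothetical names for this example, and it works on strings for brevity, whereas a real decoder would split the raw bytes before decoding them. Netty ships handlers for this purpose, `LineBasedFrameDecoder` together with `StringDecoder`, which would normally be added to the pipeline ahead of the business handler.

```java
import java.util.ArrayList;
import java.util.List;

final class LineFramer {

    // Characters received so far that do not yet end in a line terminator.
    private final StringBuilder pending = new StringBuilder();

    // Feeds the content of one read and returns every complete line it finishes.
    // Accepts both "\n" and "\r\n" as terminators.
    List<String> feed(String chunk) {
        pending.append(chunk);
        List<String> lines = new ArrayList<>();
        int nl;
        while ((nl = pending.indexOf("\n")) >= 0) {
            int end = (nl > 0 && pending.charAt(nl - 1) == '\r') ? nl - 1 : nl;
            lines.add(pending.substring(0, end));
            pending.delete(0, nl + 1);
        }
        return lines;
    }

    public static void main(String[] args) {
        // 100 requests written back to back, as the client above does.
        StringBuilder stream = new StringBuilder();
        for (int i = 0; i < 100; i++) {
            stream.append("QUERY TIME ORDER\n");
        }
        // Pretend the bytes arrive in two reads, cut at an arbitrary position.
        String first = stream.substring(0, 1024);
        String second = stream.substring(1024);

        LineFramer framer = new LineFramer();
        List<String> a = framer.feed(first);
        List<String> b = framer.feed(second);
        System.out.println(a.size() + " + " + b.size()); // 60 + 40
    }
}
```

With framing in place, the handler would see 100 separate orders, each equal to QUERY TIME ORDER, regardless of how many reads the bytes arrived in.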