python 爬取猫眼TOP100

文章目录

- 用到的库

- 分析HTML

- 编写代码

- 完整代码

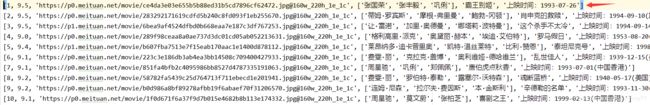

- 结果

用到的库

lxml

requests

beautifulsoup4

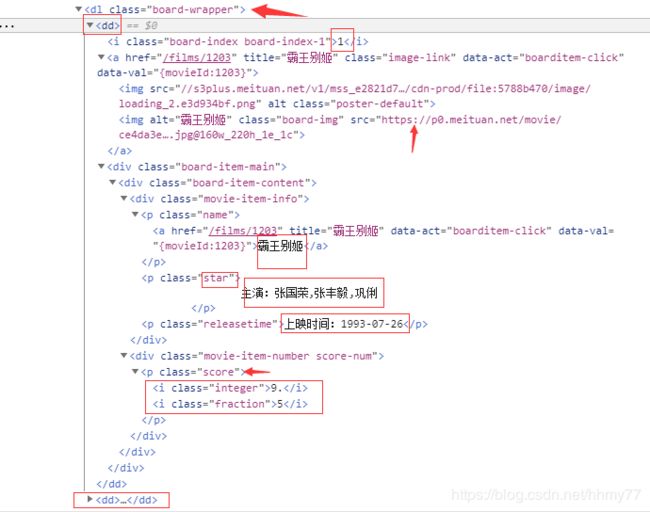

分析HTML

最外面一层是class为board-wrapper的dl

里面每个电影都用dd标签包裹

dd标签里面包括排名,海报,名称,主演,上映时间,评分

分别在i img a p p p这几个标签里面

这几个标签有class,可以很方便的定位

每部电影对应一个dd标签

链接的第一页链接是这个:

https://maoyan.com/board/4?offset=0

展示1~10的电影,共有10页

第二页的链接变为:

https://maoyan.com/board/4?offset=10

可以看到offset是偏移量,范围是0~90,步长是10

那么初始的url是这个https://maoyan.com/board/4,我们请求的时候加上offset字段就行了

编写代码

我们大概处理成这种情况就够了

排名,评分,海报链接,主演,名称,上映时间

发送request请求

我们要构造一个request请求,包含url和offset字段,headers也要加上,不然爬不了

# 请求头

self.headers = {

'User-Agent': 'Mozilla/5.o (Macintosh; Intel Mac OS X 10_13_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.162 Safari/537.36'

}

# 初始的url

self.url = 'https://maoyan.com/board/4'

# 页码

p = {

'offset': str(10 * index)

}

return requests.get(self.url, headers=self.headers, params=p)

处理request中的html

这一部分就是找到对应的标签

# 解析器使用lxml

soup = BeautifulSoup(html, 'lxml')

# 基本思路:先选择到最外层的dl标签,里面有多个dd

dl = soup.find(class_='board-wrapper')

# 遍历dd标签,分别取出排名,海报,名称,主演,上映时间,分数

for dd in dl.find_all(name='dd'):

# 选择i标签,包含排名,和分数

rank_detail_tag = dd.find_all(name='i')

# 海报链接

poster_tag = dd.find(class_='board-img')

# 演员

actors_tag = dd.find(class_='star')

# 时间

release_time_tag = dd.find(class_='releasetime')

name_tag = dd.find(class_='name').find(name='a')

进一步处理数据

我们根据上一步取得的标签,把需要的数据读出来,然后返回

def process_data(self, rank_detail_tag, poster_tag, actors_tag, release_time_tag, name_tag):

rank_lst = []

for it in rank_detail_tag:

rank_lst.append(it.string)

# 处理排名和分数

rank, score = int(rank_lst[0]), float(rank_lst[1] + rank_lst[2])

# 处理海报链接

poster_link = poster_tag.attrs['data-src']

# 处理演员

actors_str = actors_tag.string.strip()[3:]

# 演员列表

actors_lst = actors_str.split(',')

# 电影名称

movie_name = name_tag.string

# 上映时间

movie_time = release_time_tag.string

return [rank, score, poster_link, actors_lst, movie_name, movie_time]

保存数据

def save_data(self, info):

with open('movie_rank_top_100.txt', 'a+', encoding='utf-8') as fb:

fb.write(str(info) + '\n')

完整代码

我写成类,run是调用的开始

import requests

from bs4 import BeautifulSoup

import time

class Model:

def __init__(self):

# 请求头

self.headers = {

'User-Agent': 'Mozilla/5.o (Macintosh; Intel Mac OS X 10_13_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.162 Safari/537.36'

}

# 初始的url

self.url = 'https://maoyan.com/board/4'

# 爬取的页数

self.page_nums = 10

# 获得一页

def get_one_page(self, index):

p = {

'offset': str(10 * index)

}

return requests.get(self.url, headers=self.headers, params=p)

# 处理一个request

def process_html(self, html):

# 解析器使用lxml

soup = BeautifulSoup(html, 'lxml')

# 基本思路:先选择到最外层的dl标签,里面有多个dd

dl = soup.find(class_='board-wrapper')

# 遍历dd标签,分别取出排名,海报,名称,主演,上映时间,分数

for dd in dl.find_all(name='dd'):

# 选择i标签,包含排名,和分数

rank_detail_tag = dd.find_all(name='i')

# 海报链接

poster_tag = dd.find(class_='board-img')

# 演员

actors_tag = dd.find(class_='star')

# 时间

release_time_tag = dd.find(class_='releasetime')

name_tag = dd.find(class_='name').find(name='a')

info = self.process_data(rank_detail_tag, poster_tag, actors_tag, release_time_tag, name_tag)

self.save_data(info)

print('process html success')

def process_data(self, rank_detail_tag, poster_tag, actors_tag, release_time_tag, name_tag):

rank_lst = []

for it in rank_detail_tag:

rank_lst.append(it.string)

# 处理排名和分数

rank, score = int(rank_lst[0]), float(rank_lst[1] + rank_lst[2])

# 处理海报链接

poster_link = poster_tag.attrs['data-src']

# 处理演员

actors_str = actors_tag.string.strip()[3:]

# 演员列表

actors_lst = actors_str.split(',')

# 电影名称

movie_name = name_tag.string

# 上映时间

movie_time = release_time_tag.string

return [rank, score, poster_link, actors_lst, movie_name, movie_time]

def save_data(self, info):

with open('movie_rank_top_100.txt', 'a+', encoding='utf-8') as fb:

fb.write(str(info) + '\n')

def run(self):

time.sleep(3)

# 10页

for i in range(0, self.page_nums):

request = self.get_one_page(i)

print(request.url)

self.process_html(request.content)

if __name__ == '__main__':

s = Model()

s.run()