Installing Hadoop 3.2.1 + JDK 1.8 on Deepin 15.11: The Complete Walkthrough

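Contents

Preface

Preparation

Installing Deepin 15.11

Installing JDK 1.8

Installing SSH

Installing and configuring standalone Hadoop

Pseudo-distributed Hadoop

Setting up Eclipse

Example program

Done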

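Preface

I need to install Hadoop again. I did it once last year and found it quite a hassle. With the pandemic leaving me some spare time this year, I'm writing the process down as a blog post so it is easy to find later.

The plan is to use the latest version of each component, except for the JDK: VMware Workstation Pro 15.5 + Deepin 15.11 + Hadoop 3.2.1 + JDK 1.8.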

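Preparation

Download Deepin 15.11, to support a domestic distribution (I will certainly not admit that I tried two versions of Ubuntu and got stuck both times at switching the interface to Chinese).

Download VMware Workstation Pro 15. Downloading from the official site may require logging in, but the trial version does not. The link in this post is the trial download link and can be used directly, though it may be slow. You can also get the installer from another source; that should not affect the later steps.

Download Hadoop: pick a version on the website, click "binary" (shown in the figure below) to reach the download links, then choose a suitable mirror. This post uses Hadoop 3.2.1. The "source" download is uncompiled and has to be built by hand.

Download JDK 1.8. On the "no zuo no die" principle I did not try JDK 13; feel free to try it if you are curious. Oracle has also changed its download process and now requires registration, which is a real nuisance, so take your time. Download the tar.gz file.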

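Installing Deepin 15.11

After many attempts with Ubuntu in which the Chinese interface never showed up, I switched to the domestic distribution Deepin, which is both good-looking and easy to use.

First install VMware Workstation, then create the Deepin virtual machine. I won't describe the process here: plenty of websites and blogs have tutorials, and this step should not be a problem. If you don't want to search, you can refer to this blog post.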

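Installing JDK 1.8

After downloading the JDK, extract it with the graphical archive tool, open a terminal in the same folder, and move the extracted folder under /usr/lib:

sudo mv jdk1.8/ /usr/lib/jdk

Here jdk1.8 is the name of the extracted directory. You can of course rename the extracted folder to something shorter first, which is what I always do.

The destination /usr/lib/jdk means the folder is renamed to jdk as part of the move. Likewise, every jdk in the configuration below refers to that folder name; if yours is named differently, adjust the paths to match.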

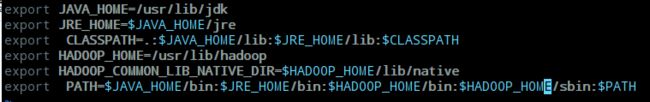

Configuring Java

Run sudo vim /etc/profile

and add the following lines:

export JAVA_HOME=/usr/lib/jdk

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

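export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

Finally, run source /etc/profile to reload the file.

Then run java -version and javac -version in the terminal; you should see output like the following. If a newly opened terminal cannot find java, try rebooting: /etc/profile is only read at login, so new terminals may not see the change until you log in again. That is what happened to me, and a reboot fixed it.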

goodman@goodman-PC:~/Desktop$ java -version

java version "1.8.0_241"

Java(TM) SE Runtime Environment (build 1.8.0_241-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

goodman@goodman-PC:~/Desktop$ javac -version

javac 1.8.0_241
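If java still cannot be found, it may help to check the path itself before blaming the environment variables. The snippet below is only a sketch: looks_like_jdk is a hypothetical helper of my own, and /usr/lib/jdk is simply the location used in this post.

```shell
# Hypothetical helper: succeed only if the directory contains
# executable bin/java and bin/javac, i.e. it looks like a JDK home.
looks_like_jdk() {
    [ -x "$1/bin/java" ] && [ -x "$1/bin/javac" ]
}

# /usr/lib/jdk is the location used in this post; override JDK_DIR if yours differs.
JDK_DIR="${JDK_DIR:-/usr/lib/jdk}"

if looks_like_jdk "$JDK_DIR"; then
    echo "JDK found at $JDK_DIR"
else
    echo "No JDK at $JDK_DIR: check where the extracted folder was moved"
fi
```

If the check fails, the folder most likely ended up somewhere other than where JAVA_HOME points, for example nested one level deeper.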

Installing SSH

Install the SSH server with the command below.

sudo apt-get install openssh-server

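After installing, connect to the local machine with ssh localhost. The first login shows a prompt; type yes, then enter the current user's login password.

Type exit to close the SSH connection.

Setting up passwordless login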

cd ~/.ssh/

ssh-keygen -t rsa

cat ./id_rsa.pub >> ./authorized_keys

Whenever ssh-keygen asks for input, just press Enter.

Run ssh localhost again to check that no password is required, as shown below:

goodman@goodman-PC:~/Documents$ ssh localhost

Welcome to Deepin 15.11 GNU/Linux

* Homepage:https://www.deepin.org/

* Bugreport:https://feedback.deepin.org/feedback/

* Community:https://bbs.deepin.org/

Last login: Fri Feb 28 14:30:25 2020 from 127.0.0.1
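The key setup above can also be done without any prompts. The following is a rough sketch, not the only way to do it: setup_passwordless_ssh is a hypothetical helper, and the chmod calls are my addition, on the assumption that sshd may ignore key files with loose permissions.

```shell
# Hypothetical helper: create an RSA key pair without prompts (if none exists)
# and authorize it for logins to this machine. $1 is the ssh directory.
setup_passwordless_ssh() {
    ssh_dir="$1"
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    if [ ! -f "$ssh_dir/id_rsa" ]; then
        # -N "" sets an empty passphrase, -f names the key file, -q keeps it quiet
        ssh-keygen -q -t rsa -N "" -f "$ssh_dir/id_rsa" || return 1
    fi
    key=$(cat "$ssh_dir/id_rsa.pub") || return 1
    # Append the public key only if that exact line is not already authorized
    grep -qxF -- "$key" "$ssh_dir/authorized_keys" 2>/dev/null ||
        printf '%s\n' "$key" >> "$ssh_dir/authorized_keys"
    # sshd can reject an authorized_keys file that other users may write to
    chmod 600 "$ssh_dir/authorized_keys"
}

# Usage (not run automatically here): setup_passwordless_ssh "$HOME/.ssh"
```

Running it twice should leave a single entry in authorized_keys, whereas repeating the cat command by hand appends a duplicate each time.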

Installing and configuring standalone Hadoop

Extract the Hadoop archive, then move it to /usr/lib/hadoop, the same way as for Java:

sudo mv hadoop-3.2.1/ /usr/lib/hadoop

Go into the Hadoop directory and test it; a successful run looks like this:

goodman@goodman-PC:/usr/lib/hadoop$ ./bin/hadoop version

Hadoop 3.2.1

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842

Compiled by rohithsharmaks on 2019-09-10T15:56Z

Compiled with protoc 2.5.0

From source with checksum 776eaf9eee9c0ffc370bcbc1888737

This command was run using /usr/lib/hadoop/share/hadoop/common/hadoop-common-3.2.1.jar

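Give your user ownership of the folder (yonghuming, pinyin for "username", is a placeholder; substitute your own username, which is goodman on this machine):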

goodman@goodman-PC:/usr/lib$ sudo chown -R yonghuming ./hadoop

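That completes the standalone Hadoop setup; add the path configuration described later and you're done.

Pseudo-distributed Hadoop

This mode imitates a multi-server cluster on a single machine. See the reference blog post.

Edit two files, core-site.xml and hdfs-site.xml, in etc/hadoop under the Hadoop directory (here /usr/lib/hadoop/etc/hadoop).

Edit core-site.xml and add the following properties inside its <configuration> element: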

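<property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/lib/hadoop/tmp</value>
    <description>Abase for other temporary directories.</description>
</property>
<property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
</property>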

Edit hdfs-site.xml and add the following properties inside its <configuration> element:

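<property>
    <name>dfs.replication</name>
    <value>1</value>
</property>
<property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/lib/hadoop/tmp/dfs/name</value>
</property>
<property>
    <name>dfs.datanode.data.dir</name>
    <value>/usr/lib/hadoop/tmp/dfs/data</value>
</property>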

Adjust the file paths to match your own installation.
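To double-check that Hadoop actually picks up the edited values, the hdfs getconf -confKey command can print what it resolves for a given key. A small sketch, where show_hdfs_conf is a hypothetical wrapper of my own and the install directory is passed in as an argument:

```shell
# Hypothetical helper: print the effective value of a few HDFS settings.
# $1 is the Hadoop install directory (this post uses /usr/lib/hadoop).
show_hdfs_conf() {
    hdfs_bin="$1/bin/hdfs"
    if [ ! -x "$hdfs_bin" ]; then
        echo "hdfs not found under $1"
        return 1
    fi
    for key in fs.defaultFS dfs.replication dfs.namenode.name.dir dfs.datanode.data.dir; do
        # 'hdfs getconf -confKey' prints the value Hadoop resolves for one key
        printf '%s = %s\n' "$key" "$("$hdfs_bin" getconf -confKey "$key")"
    done
}

# Usage (not run automatically here): show_hdfs_conf /usr/lib/hadoop
```

If a value still shows a default rather than what you typed, the property most likely sits outside the configuration element or in the wrong file.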

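In the Hadoop directory, format the NameNode. The command and its output are shown below; the output is very long, so just look at the beginning and the end.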

goodman@goodman-PC:/usr/lib/hadoop$ ./bin/hdfs namenode -format

WARNING: /usr/lib/hadoop/logs does not exist. Creating.

2020-02-28 16:07:24,225 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = goodman-PC/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.2.1

STARTUP_MSG: classpath = /usr/lib/hadoop/etc/hadoop:/usr/lib/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:[... remaining jar paths under /usr/lib/hadoop/share/hadoop omitted ...]:/usr/lib/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.2.1.jar

STARTUP_MSG: build = https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842; compiled by 'rohithsharmaks' on 2019-09-10T15:56Z

STARTUP_MSG: java = 1.8.0_241

************************************************************/

2020-02-28 16:07:24,239 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2020-02-28 16:07:24,341 INFO namenode.NameNode: createNameNode [-format]

2020-02-28 16:07:25,164 INFO common.Util: Assuming 'file' scheme for path /usr/lib/hadoop/tmp/dfs/name in configuration.

2020-02-28 16:07:25,165 INFO common.Util: Assuming 'file' scheme for path /usr/lib/hadoop/tmp/dfs/name in configuration.

Formatting using clusterid: CID-2b050f19-fc84-4098-ae30-ec7a62219d65

2020-02-28 16:07:25,213 INFO namenode.FSEditLog: Edit logging is async:true

2020-02-28 16:07:25,238 INFO namenode.FSNamesystem: KeyProvider: null

2020-02-28 16:07:25,241 INFO namenode.FSNamesystem: fsLock is fair: true

2020-02-28 16:07:25,241 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2020-02-28 16:07:25,253 INFO namenode.FSNamesystem: fsOwner = goodman (auth:SIMPLE)

2020-02-28 16:07:25,253 INFO namenode.FSNamesystem: supergroup = supergroup

2020-02-28 16:07:25,253 INFO namenode.FSNamesystem: isPermissionEnabled = true

2020-02-28 16:07:25,275 INFO namenode.FSNamesystem: HA Enabled: false

2020-02-28 16:07:25,333 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2020-02-28 16:07:25,350 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2020-02-28 16:07:25,350 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2020-02-28 16:07:25,355 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2020-02-28 16:07:25,356 INFO blockmanagement.BlockManager: The block deletion will start around 2020 二月 28 16:07:25

2020-02-28 16:07:25,358 INFO util.GSet: Computing capacity for map BlocksMap

2020-02-28 16:07:25,358 INFO util.GSet: VM type = 64-bit

2020-02-28 16:07:25,361 INFO util.GSet: 2.0% max memory 873 MB = 17.5 MB

2020-02-28 16:07:25,361 INFO util.GSet: capacity = 2^21 = 2097152 entries

2020-02-28 16:07:25,420 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled

2020-02-28 16:07:25,420 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2020-02-28 16:07:25,431 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2020-02-28 16:07:25,432 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2020-02-28 16:07:25,432 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2020-02-28 16:07:25,432 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: defaultReplication = 1

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: maxReplication = 512

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: minReplication = 1

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2020-02-28 16:07:25,433 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2020-02-28 16:07:25,502 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

2020-02-28 16:07:25,502 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

2020-02-28 16:07:25,502 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

2020-02-28 16:07:25,502 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

2020-02-28 16:07:25,516 INFO util.GSet: Computing capacity for map INodeMap

2020-02-28 16:07:25,516 INFO util.GSet: VM type = 64-bit

2020-02-28 16:07:25,516 INFO util.GSet: 1.0% max memory 873 MB = 8.7 MB

2020-02-28 16:07:25,516 INFO util.GSet: capacity = 2^20 = 1048576 entries

2020-02-28 16:07:25,516 INFO namenode.FSDirectory: ACLs enabled? false

2020-02-28 16:07:25,516 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2020-02-28 16:07:25,517 INFO namenode.FSDirectory: XAttrs enabled? true

2020-02-28 16:07:25,517 INFO namenode.NameNode: Caching file names occurring more than 10 times

2020-02-28 16:07:25,524 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2020-02-28 16:07:25,528 INFO snapshot.SnapshotManager: SkipList is disabled

2020-02-28 16:07:25,532 INFO util.GSet: Computing capacity for map cachedBlocks

2020-02-28 16:07:25,533 INFO util.GSet: VM type = 64-bit

2020-02-28 16:07:25,533 INFO util.GSet: 0.25% max memory 873 MB = 2.2 MB

2020-02-28 16:07:25,533 INFO util.GSet: capacity = 2^18 = 262144 entries

2020-02-28 16:07:25,542 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2020-02-28 16:07:25,542 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2020-02-28 16:07:25,542 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2020-02-28 16:07:25,548 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2020-02-28 16:07:25,549 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2020-02-28 16:07:25,551 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2020-02-28 16:07:25,551 INFO util.GSet: VM type = 64-bit

2020-02-28 16:07:25,552 INFO util.GSet: 0.029999999329447746% max memory 873 MB = 268.2 KB

2020-02-28 16:07:25,552 INFO util.GSet: capacity = 2^15 = 32768 entries

2020-02-28 16:07:25,584 INFO namenode.FSImage: Allocated new BlockPoolId: BP-2096700243-127.0.1.1-1582877245575

2020-02-28 16:07:25,610 INFO common.Storage: Storage directory /usr/lib/hadoop/tmp/dfs/name has been successfully formatted.

2020-02-28 16:07:25,685 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/lib/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2020-02-28 16:07:25,927 INFO namenode.FSImageFormatProtobuf: Image file /usr/lib/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 402 bytes saved in 0 seconds .

2020-02-28 16:07:25,963 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2020-02-28 16:07:25,981 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2020-02-28 16:07:25,981 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at goodman-PC/127.0.1.1

************************************************************/

Start Hadoop; the following errors appear:

goodman@goodman-PC:/usr/lib/hadoop$ ./sbin/start-dfs.sh

Starting namenodes on [localhost]

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting datanodes

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [goodman-PC]

goodman-PC: Warning: Permanently added 'goodman-pc' (ECDSA) to the list of known hosts.

goodman-PC: ERROR: JAVA_HOME is not set and could not be found.

In hadoop-env.sh under /usr/lib/hadoop/etc/hadoop, add

export JAVA_HOME=/usr/lib/jdk

Try again and check the processes with jps, as shown below. Done.

goodman@goodman-PC:/usr/lib/hadoop$ ./sbin/start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [goodman-PC]

goodman@goodman-PC:/usr/lib/hadoop$ jps

8354 NameNode

8822 Jps

8471 DataNode

8681 SecondaryNameNode
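The JAVA_HOME error most likely appears because start-dfs.sh launches the daemons over ssh, where the settings from /etc/profile are not loaded, so Hadoop only sees what hadoop-env.sh exports. The edit can be scripted so that it is safe to repeat; ensure_line below is a hypothetical helper, and the paths in the usage comment are the ones from this post.

```shell
# Hypothetical helper: append a line to a file unless that exact line is already there.
ensure_line() {
    file="$1"
    line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (not run automatically here; paths are the ones from this post):
#   ensure_line /usr/lib/hadoop/etc/hadoop/hadoop-env.sh 'export JAVA_HOME=/usr/lib/jdk'
```

Because the line is only added when missing, running the helper again after a reinstall or a second attempt does not pile up duplicate exports.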

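Configuring Hadoop environment variables for easier start and stop

Add the Hadoop paths to /etc/profile, as shown below.

After that, Hadoop can be started and stopped from any directory.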
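For reference, lines like these in /etc/profile would do it. This is a sketch under assumptions: HADOOP_HOME is the conventional variable name, and /usr/lib/hadoop is the install path used in this post, so adjust it if yours differs.

```shell
# Assumed install location from this post; adjust if yours differs.
export HADOOP_HOME=/usr/lib/hadoop
# bin holds hadoop and hdfs; sbin holds start-dfs.sh and stop-dfs.sh
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```

As with the Java settings, run source /etc/profile (or log in again) before expecting start-dfs.sh and stop-dfs.sh to resolve from other directories.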

That concludes the pseudo-distributed configuration.

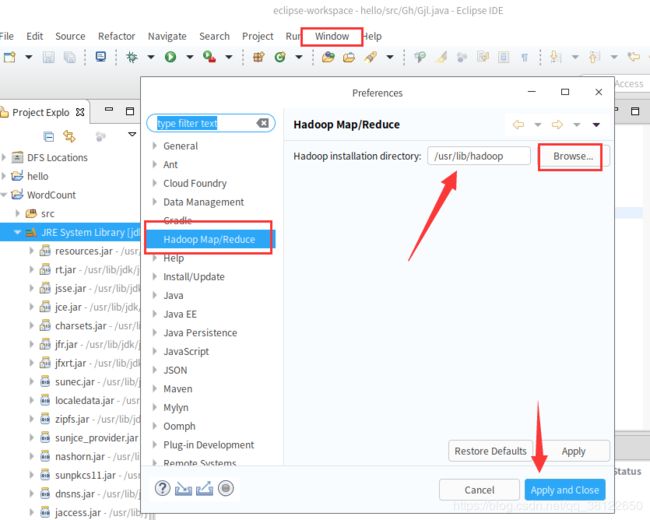

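Setting up Eclipse

Install Eclipse. The Deepin store has Eclipse, so you can install it directly from there, or follow a blog tutorial.

Install hadoop-eclipse-plugin; download link: http://pan.baidu.com/s/1i4ikIoP.

After downloading, copy hadoop-eclipse-kepler-plugin-2.6.0.jar from the release folder into /usr/lib/eclipse/plugins. That is the folder if Eclipse was installed from the Ubuntu Software Center; if it is not there, it may be under /usr/share, and if you installed Eclipse yourself, use the eclipse/plugins folder under your install location. Then run eclipse -clean to restart Eclipse. This command only needs to be run once after adding the plugin; afterwards, start Eclipse the normal way.

After restarting, open Preferences from Eclipse's Window menu. A Hadoop Map/Reduce entry should appear on the left; set the Hadoop directory there (/usr/lib/hadoop) and click Apply.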

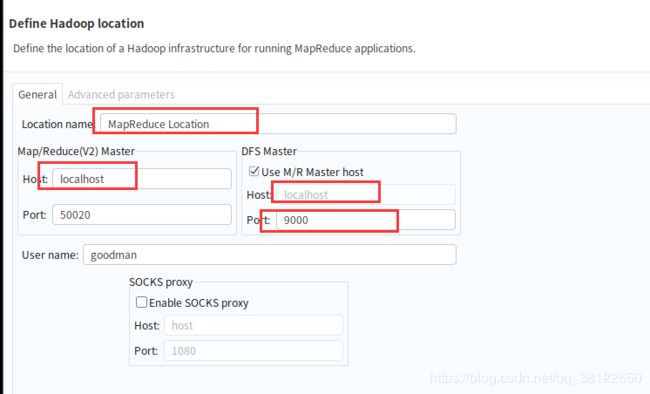

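From the Window menu choose Perspective -> Open Perspective -> Other; a Map/Reduce option is listed there. Click OK to switch.

Click the Map/Reduce Locations panel in the lower-right corner of Eclipse, right-click inside the panel, and choose New Hadoop Location.

In the General tab, enter settings that match your local Hadoop configuration (for example, the DFS Master port should match the 9000 in fs.defaultFS).

Create a MapReduce project in Eclipse: File -> New -> Project -> Map/Reduce Project.

Creating packages and classes works the same as in a Java project. (Sample code is attached below.)

Before running a MapReduce program, one more important step is required: copy the modified configuration files from /usr/lib/hadoop/etc/hadoop (for pseudo-distributed mode, core-site.xml and hdfs-site.xml), together with log4j.properties, into the src folder of the WordCount project, then refresh the project in Eclipse.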

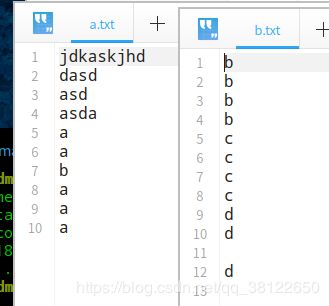

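Example program

A simple WordCount program serves as the demonstration; the code counts how many times each word (or letter) occurs in the input files.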
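For small inputs, the expected counts can be cross-checked locally with standard tools before involving Hadoop at all. This is just a sketch of the same idea (split on whitespace, then count identical tokens); count_words is a hypothetical helper and not part of Hadoop.

```shell
# Hypothetical local cross-check: split stdin on whitespace, then count each token.
count_words() {
    tr -s '[:space:]' '\n' |         # one token per line
        sed '/^$/d' |                # drop the empty line left by leading whitespace
        LC_ALL=C sort |              # group identical tokens together
        uniq -c |                    # prefix each token with its count
        awk '{ print $2 "\t" $1 }'   # reorder to "word<TAB>count"
}

printf 'hello hadoop\nhello deepin\n' | count_words
```

For the two sample lines this prints deepin 1, hadoop 1 and hello 2, one tab-separated pair per line, which is the same word-then-count layout the MapReduce job writes.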

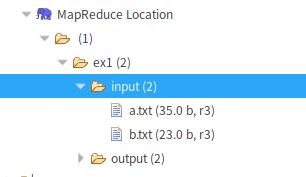

First create two simple txt files with some arbitrary content, then upload them to HDFS, Hadoop's file system. This can be done graphically from Eclipse.
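The same upload can be done from the terminal instead of Eclipse. A sketch under assumptions: make_samples and upload_samples are hypothetical helpers, the file names and contents are made up, and /ex1/input is chosen to match the input path hard-coded in the program.

```shell
# Hypothetical helper: write two small sample files into directory $1.
make_samples() {
    mkdir -p "$1" || return 1
    printf 'hello hadoop\nhello deepin\n' > "$1/file1.txt"
    printf 'hello world\n' > "$1/file2.txt"
}

# Hypothetical helper: copy every .txt file in $1 to the HDFS directory $2.
# Needs the hdfs command on PATH and a running NameNode and DataNode.
upload_samples() {
    hdfs dfs -mkdir -p "$2" && hdfs dfs -put -f "$1"/*.txt "$2"
}

# Usage (not run automatically here):
#   make_samples "$HOME/wordcount-input"
#   upload_samples "$HOME/wordcount-input" /ex1/input
```

Once the job has run, hdfs dfs -cat /ex1/output/part-r-00000 should print the counts, assuming the default single reducer.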

Right-click the program and choose Run As -> Run on Hadoop.

The result is shown above. The code is as follows:

package example;

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class Word {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class IntSumReducer

extends Reducer<Text, IntWritable, Text, IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

args = new String[] {"hdfs://localhost:9000/ex1/input","hdfs://localhost:9000/ex1/output"};

Configuration conf = new Configuration();

conf.set("fs.defaultFS", "hdfs://localhost:9000");

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 2) {

System.err.println("Usage: wordcount <in> <out>");

System.exit(2);

}

Path path =new Path(args[1]);

// Get the file system for the output path, using the loaded configuration

FileSystem fileSystem = path.getFileSystem(conf);

// Delete the output directory if it already exists

if (fileSystem.exists(new Path(args[1]))) {

fileSystem.delete(new Path(args[1]),true);

}

Job job = Job.getInstance(conf, "word count"); // the Job constructor is deprecated

job.setJarByClass(Word.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

Done

It's a bit long; bear with it.