Neural Networks and Deep Learning, Week 3: Planar data classification with one hidden layer

Planar data classification with one hidden layer

Welcome to your week 3 programming assignment. It’s time to build your first neural network, which will have a hidden layer. You will see a big difference between this model and the one you implemented using logistic regression.

You will learn how to:

- Implement a 2-class classification neural network with a single hidden layer

- Use units with a non-linear activation function, such as tanh

- Compute the cross entropy loss

- Implement forward and backward propagation

1 - Packages

Let’s first import all the packages that you will need during this assignment.

- numpy is the fundamental package for scientific computing with Python.

- sklearn provides simple and efficient tools for data mining and data analysis.

- matplotlib is a library for plotting graphs in Python.

- testCases provides some test examples to assess the correctness of your functions.

- planar_utils provides various useful functions used in this assignment.

# Package imports

import numpy as np

import matplotlib.pyplot as plt

from testCases import *

import sklearn

import sklearn.datasets

import sklearn.linear_model

from planar_utils import plot_decision_boundary, sigmoid, load_planar_dataset, load_extra_datasets

%matplotlib inline

np.random.seed(1) # set a seed so that the results are consistent

The testCases source code is as follows:

import numpy as np

def layer_sizes_test_case():
    np.random.seed(1)
    # seed() sets the integer that the random number generator starts from.
    # 1. With the same seed() value, the same random numbers are generated on every run.
    # 2. If no seed is set, the system picks one based on the current time, so the numbers differ between runs.
    # 3. Each seed() call resets the sequence once; call it again to reproduce the same numbers.
    X_assess = np.random.randn(5, 3)
    Y_assess = np.random.randn(2, 3)
    return X_assess, Y_assess

def initialize_parameters_test_case():
    n_x, n_h, n_y = 2, 4, 1
    return n_x, n_h, n_y

def forward_propagation_test_case():
    np.random.seed(1)
    X_assess = np.random.randn(2, 3)
    parameters = {'W1': np.array([[-0.00416758, -0.00056267],
                                  [-0.02136196, 0.01640271],
                                  [-0.01793436, -0.00841747],
                                  [ 0.00502881, -0.01245288]]),
                  'W2': np.array([[-0.01057952, -0.00909008, 0.00551454, 0.02292208]]),
                  'b1': np.array([[ 0.],
                                  [ 0.],
                                  [ 0.],
                                  [ 0.]]),
                  'b2': np.array([[ 0.]])}
    return X_assess, parameters

def compute_cost_test_case():
    np.random.seed(1)
    Y_assess = np.random.randn(1, 3)
    parameters = {'W1': np.array([[-0.00416758, -0.00056267],
                                  [-0.02136196, 0.01640271],
                                  [-0.01793436, -0.00841747],
                                  [ 0.00502881, -0.01245288]]),
                  'W2': np.array([[-0.01057952, -0.00909008, 0.00551454, 0.02292208]]),
                  'b1': np.array([[ 0.],
                                  [ 0.],
                                  [ 0.],
                                  [ 0.]]),
                  'b2': np.array([[ 0.]])}
    a2 = (np.array([[ 0.5002307 , 0.49985831, 0.50023963]]))
    return a2, Y_assess, parameters

def backward_propagation_test_case():
    np.random.seed(1)
    X_assess = np.random.randn(2, 3)
    Y_assess = np.random.randn(1, 3)
    parameters = {'W1': np.array([[-0.00416758, -0.00056267],
                                  [-0.02136196, 0.01640271],
                                  [-0.01793436, -0.00841747],
                                  [ 0.00502881, -0.01245288]]),
                  'W2': np.array([[-0.01057952, -0.00909008, 0.00551454, 0.02292208]]),
                  'b1': np.array([[ 0.],
                                  [ 0.],
                                  [ 0.],
                                  [ 0.]]),
                  'b2': np.array([[ 0.]])}
    cache = {'A1': np.array([[-0.00616578, 0.0020626 , 0.00349619],
                             [-0.05225116, 0.02725659, -0.02646251],
                             [-0.02009721, 0.0036869 , 0.02883756],
                             [ 0.02152675, -0.01385234, 0.02599885]]),
             'A2': np.array([[ 0.5002307 , 0.49985831, 0.50023963]]),
             'Z1': np.array([[-0.00616586, 0.0020626 , 0.0034962 ],
                             [-0.05229879, 0.02726335, -0.02646869],
                             [-0.02009991, 0.00368692, 0.02884556],
                             [ 0.02153007, -0.01385322, 0.02600471]]),
             'Z2': np.array([[ 0.00092281, -0.00056678, 0.00095853]])}
    return parameters, cache, X_assess, Y_assess

def update_parameters_test_case():
    parameters = {'W1': np.array([[-0.00615039, 0.0169021 ],
                                  [-0.02311792, 0.03137121],
                                  [-0.0169217 , -0.01752545],
                                  [ 0.00935436, -0.05018221]]),
                  'W2': np.array([[-0.0104319 , -0.04019007, 0.01607211, 0.04440255]]),
                  'b1': np.array([[ -8.97523455e-07],
                                  [ 8.15562092e-06],
                                  [ 6.04810633e-07],
                                  [ -2.54560700e-06]]),
                  'b2': np.array([[ 9.14954378e-05]])}
    grads = {'dW1': np.array([[ 0.00023322, -0.00205423],
                              [ 0.00082222, -0.00700776],
                              [-0.00031831, 0.0028636 ],
                              [-0.00092857, 0.00809933]]),
             'dW2': np.array([[ -1.75740039e-05, 3.70231337e-03, -1.25683095e-03,
                                -2.55715317e-03]]),
             'db1': np.array([[ 1.05570087e-07],
                              [ -3.81814487e-06],
                              [ -1.90155145e-07],
                              [ 5.46467802e-07]]),
             'db2': np.array([[ -1.08923140e-05]])}
    return parameters, grads

def nn_model_test_case():
    np.random.seed(1)
    X_assess = np.random.randn(2, 3)
    Y_assess = np.random.randn(1, 3)
    return X_assess, Y_assess

def predict_test_case():
    np.random.seed(1)
    X_assess = np.random.randn(2, 3)
    parameters = {'W1': np.array([[-0.00615039, 0.0169021 ],
                                  [-0.02311792, 0.03137121],
                                  [-0.0169217 , -0.01752545],
                                  [ 0.00935436, -0.05018221]]),
                  'W2': np.array([[-0.0104319 , -0.04019007, 0.01607211, 0.04440255]]),
                  'b1': np.array([[ -8.97523455e-07],
                                  [ 8.15562092e-06],
                                  [ 6.04810633e-07],
                                  [ -2.54560700e-06]]),
                  'b2': np.array([[ 9.14954378e-05]])}
    return parameters, X_assess

The planar_utils source code is as follows:

import matplotlib.pyplot as plt
import numpy as np
import sklearn
import sklearn.datasets
import sklearn.linear_model

def plot_decision_boundary(model, X, y):
    # Set min and max values and give it some padding
    x_min, x_max = X[0, :].min() - 1, X[0, :].max() + 1
    y_min, y_max = X[1, :].min() - 1, X[1, :].max() + 1
    h = 0.01
    # Generate a grid of points with distance h between them
    xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))
    # Predict the function value for the whole grid
    Z = model(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    # Plot the contour and training examples
    plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral)
    plt.ylabel('x2')
    plt.xlabel('x1')
    plt.scatter(X[0, :], X[1, :], c=y, cmap=plt.cm.Spectral)

def sigmoid(x):
    """
    Compute the sigmoid of x

    Arguments:
    x -- A scalar or numpy array of any size.

    Return:
    s -- sigmoid(x)
    """
    s = 1/(1+np.exp(-x))
    return s

def load_planar_dataset():
    np.random.seed(1)
    m = 400 # number of examples
    N = int(m/2) # number of points per class
    D = 2 # dimensionality
    X = np.zeros((m,D)) # data matrix where each row is a single example
    Y = np.zeros((m,1), dtype='uint8') # labels vector (0 for red, 1 for blue)
    a = 4 # maximum ray of the flower

    for j in range(2):
        ix = range(N*j,N*(j+1))
        t = np.linspace(j*3.12,(j+1)*3.12,N) + np.random.randn(N)*0.2 # theta
        r = a*np.sin(4*t) + np.random.randn(N)*0.2 # radius
        X[ix] = np.c_[r*np.sin(t), r*np.cos(t)]
        Y[ix] = j

    X = X.T
    Y = Y.T
    return X, Y

def load_extra_datasets():
    N = 200
    noisy_circles = sklearn.datasets.make_circles(n_samples=N, factor=.5, noise=.3)
    noisy_moons = sklearn.datasets.make_moons(n_samples=N, noise=.2)
    blobs = sklearn.datasets.make_blobs(n_samples=N, random_state=5, n_features=2, centers=6)
    gaussian_quantiles = sklearn.datasets.make_gaussian_quantiles(mean=None, cov=0.5, n_samples=N, n_features=2, n_classes=2, shuffle=True, random_state=None)
    no_structure = np.random.rand(N, 2), np.random.rand(N, 2)
    return noisy_circles, noisy_moons, blobs, gaussian_quantiles, no_structure

2 - Dataset

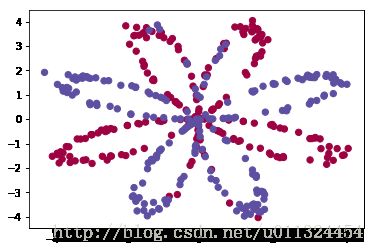

First, let’s get the dataset you will work on. The following code will load a “flower” 2-class dataset into variables X and Y.

X, Y = load_planar_dataset()

Visualize the dataset using matplotlib. The data looks like a “flower” with some red (label y=0) and some blue (y=1) points. Your goal is to build a model to fit this data.

# Visualize the data:

plt.scatter(X[0, :], X[1, :], c=np.squeeze(Y), s=40, cmap=plt.cm.Spectral);

The original call,

plt.scatter(X[0, :], X[1, :], c=Y, s=40, cmap=plt.cm.Spectral);

fails with

c of shape (1, 400) not acceptable as a color sequence for x with size 400, y with size 400

This is a shape mismatch: Y has shape (1, 400), while scatter expects one color value per point. np.squeeze removes the length-1 axis 0 of Y and leaves a flat vector of 400 labels. The same fix applies wherever this error appears later.
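The shape change can be checked without plotting anything. A minimal sketch, assuming only NumPy; the array `labels` is a hypothetical stand-in for Y, not the real dataset:

```python
import numpy as np

# Hypothetical stand-in for Y: a row vector of 400 labels with shape (1, 400).
labels = np.zeros((1, 400), dtype="uint8")

# np.squeeze drops every axis of length 1, leaving a flat vector of 400 labels.
flat_labels = np.squeeze(labels)

print(labels.shape)
print(flat_labels.shape)
```

The flat vector has one entry per point, which is the form `plt.scatter` accepts for its `c` argument.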

You have:

- a numpy-array (matrix) X that contains your features (x1, x2)

- a numpy-array (vector) Y that contains your labels (red:0, blue:1).

Let’s first get a better sense of what our data is like.

Exercise: How many training examples do you have? In addition, what is the shape of the variables X and Y?

Hint: How do you get the shape of a numpy array? (help)

### START CODE HERE ### (≈ 3 lines of code)

shape_X = X.shape

shape_Y = Y.shape

m = X.shape[1] # training set size

### END CODE HERE ###

print ('The shape of X is: ' + str(shape_X))

print ('The shape of Y is: ' + str(shape_Y))

print ('I have m = %d training examples!' % (m))

The shape of X is: (2, 400)

The shape of Y is: (1, 400)

I have m = 400 training examples!

Expected Output:

| **shape of X** | (2, 400) |

| **shape of Y** | (1, 400) |

| **m** | 400 |

3 - Simple Logistic Regression

Before building a full neural network, let’s first see how logistic regression performs on this problem. You can use sklearn’s built-in functions to do that. Run the code below to train a logistic regression classifier on the dataset.

# Train the logistic regression classifier

clf = sklearn.linear_model.LogisticRegressionCV();

clf.fit(X.T, Y.T);

/usr/local/lib/python3.5/dist-packages/sklearn/utils/validation.py:578: DataConversionWarning: A column-vector y was passed when a 1d array was expected. Please change the shape of y to (n_samples, ), for example using ravel().

y = column_or_1d(y, warn=True)

You can now plot the decision boundary of these models. Run the code below.

# Plot the decision boundary for logistic regression

plot_decision_boundary(lambda x: clf.predict(x), X, np.squeeze(Y))

plt.title("Logistic Regression")

# Print accuracy

LR_predictions = clf.predict(X.T)

print ('Accuracy of logistic regression: %d ' % float((np.dot(Y,LR_predictions) + np.dot(1-Y,1-LR_predictions))/float(Y.size)*100) +
       '% ' + "(percentage of correctly labelled datapoints)")

Accuracy of logistic regression: 47 % (percentage of correctly labelled datapoints)

Expected Output:

| **Accuracy** | 47% |
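The accuracy expression in the print statement counts agreements between labels and predictions: `np.dot(Y, LR_predictions)` counts the examples where both are 1, and `np.dot(1-Y, 1-LR_predictions)` counts the examples where both are 0. A small sketch of the same arithmetic in plain Python, with made-up labels and predictions (the names `y_true` and `y_pred` are illustrative, not from the notebook):

```python
# Made-up labels and predictions, for illustration only.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 1]

# Examples where both the label and the prediction are 1.
both_one = sum(t * p for t, p in zip(y_true, y_pred))

# Examples where both the label and the prediction are 0.
both_zero = sum((1 - t) * (1 - p) for t, p in zip(y_true, y_pred))

# Percentage of examples on which label and prediction agree.
accuracy = (both_one + both_zero) * 100 / len(y_true)
print("Accuracy: %d%%" % accuracy)
```

Here three of the five predictions agree with the labels, so the sketch reports 60%.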

Interpretation: The dataset is not linearly separable, so logistic regression doesn’t perform well. Hopefully a neural network will do better. Let’s try this now!

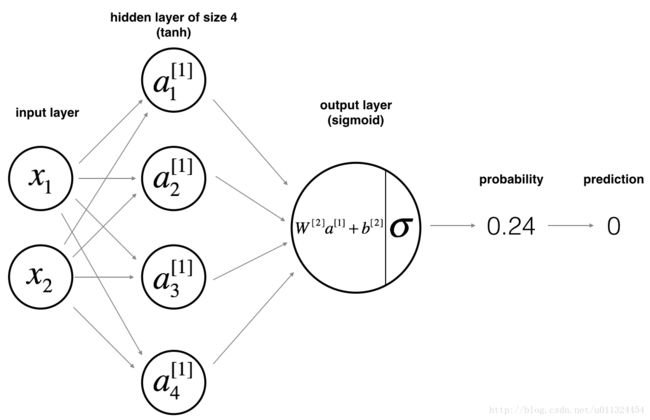

4 - Neural Network model

Logistic regression did not work well on the “flower dataset”. You are going to train a Neural Network with a single hidden layer.

Mathematically:

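For one example $x^{(i)}$:

$$z^{[1](i)} = W^{[1]} x^{(i)} + b^{[1]}$$

$$a^{[1](i)} = \tanh(z^{[1](i)})$$

$$z^{[2](i)} = W^{[2]} a^{[1](i)} + b^{[2]}$$

$$\hat{y}^{(i)} = a^{[2](i)} = \sigma(z^{[2](i)})$$

Given the predictions on all the examples, you can also compute the cost $J$ as follows:

$$J = -\frac{1}{m} \sum_{i=1}^{m} \left( y^{(i)} \log\left(a^{[2](i)}\right) + (1 - y^{(i)}) \log\left(1 - a^{[2](i)}\right) \right)$$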

Reminder: The general methodology to build a Neural Network is to:

1. Define the neural network structure (# of input units, # of hidden units, etc).

2. Initialize the model’s parameters

3. Loop:

- Implement forward propagation

- Compute loss

- Implement backward propagation to get the gradients

- Update parameters (gradient descent)

You often build helper functions to compute steps 1-3 and then merge them into one function we call nn_model(). Once you’ve built nn_model() and learnt the right parameters, you can make predictions on new data.

4.1 - Defining the neural network structure

Exercise: Define three variables:

- n_x: the size of the input layer

- n_h: the size of the hidden layer (set this to 4)

- n_y: the size of the output layer

Hint: Use shapes of X and Y to find n_x and n_y. Also, hard code the hidden layer size to be 4.

# GRADED FUNCTION: layer_sizes

def layer_sizes(X, Y):
    """
    Arguments:
    X -- input dataset of shape (input size, number of examples)
    Y -- labels of shape (output size, number of examples)

    Returns:
    n_x -- the size of the input layer
    n_h -- the size of the hidden layer
    n_y -- the size of the output layer
    """
    ### START CODE HERE ### (≈ 3 lines of code)
    n_x = X.shape[0] # size of input layer
    n_h = 4
    n_y = Y.shape[0] # size of output layer
    ### END CODE HERE ###
    return (n_x, n_h, n_y)

X_assess, Y_assess = layer_sizes_test_case()
(n_x, n_h, n_y) = layer_sizes(X_assess, Y_assess)
print("The size of the input layer is: n_x = " + str(n_x))
print("The size of the hidden layer is: n_h = " + str(n_h))
print("The size of the output layer is: n_y = " + str(n_y))

The size of the input layer is: n_x = 5

The size of the hidden layer is: n_h = 4

The size of the output layer is: n_y = 2

Expected Output (these are not the sizes you will use for your network, they are just used to assess the function you’ve just coded).

| **n_x** | 5 |

| **n_h** | 4 |

| **n_y** | 2 |

4.2 - Initialize the model’s parameters

Exercise: Implement the function initialize_parameters().

Instructions:

- Make sure your parameters’ sizes are right. Refer to the neural network figure above if needed.

- You will initialize the weights matrices with random values.

- Use: np.random.randn(a,b) * 0.01 to randomly initialize a matrix of shape (a,b).

- You will initialize the bias vectors as zeros.

- Use: np.zeros((a,b)) to initialize a matrix of shape (a,b) with zeros.
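One way to see why the weights are scaled by 0.01: small weights keep the hidden-layer pre-activations near zero, where tanh is almost linear and its gradient is close to 1. Large pre-activations push tanh into its flat region, where the gradient nearly vanishes. A rough sketch using only the standard library; the pre-activation value 3.0 is an illustrative assumption, not a number from the assignment:

```python
import math

def tanh_grad(z):
    """Derivative of tanh at z, i.e. 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2

# Illustrative pre-activation from unscaled weights, and the same value scaled by 0.01.
z_large = 3.0
z_small = 0.01 * z_large

print(tanh_grad(z_large))  # very small: tanh is saturated here
print(tanh_grad(z_small))  # close to 1: the gradient passes through
```

With the scaled value, gradient descent gets a usable signal from the first iteration on.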

# GRADED FUNCTION: initialize_parameters

def initialize_parameters(n_x, n_h, n_y):
    """
    Argument:
    n_x -- size of the input layer
    n_h -- size of the hidden layer
    n_y -- size of the output layer

    Returns:
    params -- python dictionary containing your parameters:
                    W1 -- weight matrix of shape (n_h, n_x)
                    b1 -- bias vector of shape (n_h, 1)
                    W2 -- weight matrix of shape (n_y, n_h)
                    b2 -- bias vector of shape (n_y, 1)
    """
    np.random.seed(2) # we set up a seed so that your output matches ours although the initialization is random.

    ### START CODE HERE ### (≈ 4 lines of code)
    # Both weight matrices use randn (standard normal) scaled by 0.01, as the instructions require.
    W1 = np.random.randn(n_h,n_x) * 0.01
    b1 = np.zeros((n_h,1))
    W2 = np.random.randn(n_y,n_h) * 0.01
    b2 = np.zeros((n_y,1))
    ### END CODE HERE ###

    assert (W1.shape == (n_h, n_x))
    assert (b1.shape == (n_h, 1))
    assert (W2.shape == (n_y, n_h))
    assert (b2.shape == (n_y, 1))

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}

    return parameters

n_x, n_h, n_y = initialize_parameters_test_case()
parameters = initialize_parameters(n_x, n_h, n_y)
print("W1 = " + str(parameters["W1"]))
print("b1 = " + str(parameters["b1"]))
print("W2 = " + str(parameters["W2"]))
print("b2 = " + str(parameters["b2"]))

W1 = [[-0.00416758 -0.00056267]
 [-0.02136196  0.01640271]
 [-0.01793436 -0.00841747]
 [ 0.00502881 -0.01245288]]
b1 = [[ 0.]
 [ 0.]
 [ 0.]
 [ 0.]]
W2 = [[-0.01057952 -0.00909008  0.00551454  0.02292208]]
b2 = [[ 0.]]

Expected Output:

| **W1** | [[-0.00416758 -0.00056267] [-0.02136196 0.01640271] [-0.01793436 -0.00841747] [ 0.00502881 -0.01245288]] |

| **b1** | [[ 0.] [ 0.] [ 0.] [ 0.]] |

| **W2** | [[-0.01057952 -0.00909008 0.00551454 0.02292208]] |

| **b2** | [[ 0.]] |

4.3 - The Loop

Question: Implement forward_propagation().

Instructions:

- Look above at the mathematical representation of your classifier.

- You can use the function sigmoid(). It is built-in (imported) in the notebook.

- You can use the function np.tanh(). It is part of the numpy library.

- The steps you have to implement are:

1. Retrieve each parameter from the dictionary “parameters” (which is the output of initialize_parameters()) by using parameters[".."].

2. Implement Forward Propagation. Compute $Z^{[1]}, A^{[1]}, Z^{[2]}$ and $A^{[2]}$ (the vector of all your predictions on all the examples in the training set).

- Values needed in the backpropagation are stored in “cache”. The cache will be given as an input to the backpropagation function.

# GRADED FUNCTION: forward_propagation

def forward_propagation(X, parameters):
    """
    Argument:
    X -- input data of size (n_x, m)
    parameters -- python dictionary containing your parameters (output of initialization function)

    Returns:
    A2 -- The sigmoid output of the second activation
    cache -- a dictionary containing "Z1", "A1", "Z2" and "A2"
    """
    # Retrieve each parameter from the dictionary "parameters"
    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = parameters["W1"]
    b1 = parameters["b1"]
    W2 = parameters["W2"]
    b2 = parameters["b2"]
    ### END CODE HERE ###

    # Implement Forward Propagation to calculate A2 (probabilities)
    ### START CODE HERE ### (≈ 4 lines of code)
    Z1 = np.dot(W1,X) + b1
    A1 = np.tanh(Z1)
    Z2 = np.dot(W2,A1) + b2
    A2 = sigmoid(Z2)
    ### END CODE HERE ###

    assert(A2.shape == (1, X.shape[1]))

    cache = {"Z1": Z1,
             "A1": A1,
             "Z2": Z2,
             "A2": A2}

    return A2, cache

X_assess, parameters = forward_propagation_test_case()
A2, cache = forward_propagation(X_assess, parameters)
# Note: we use the mean here just to make sure that your output matches ours.
print(np.mean(cache['Z1']) ,np.mean(cache['A1']),np.mean(cache['Z2']),np.mean(cache['A2']))

-0.000499755777742 -0.000496963353232 0.000438187450959 0.500109546852

Expected Output:

| -0.000499755777742 -0.000496963353232 0.000438187450959 0.500109546852 |

Now that you have computed $A^{[2]}$ (in the Python variable “A2”), which contains $a^{[2](i)}$ for every example, you can compute the cost function as follows:

$$J = -\frac{1}{m} \sum_{i=1}^{m} \left( y^{(i)} \log\left(a^{[2](i)}\right) + (1 - y^{(i)}) \log\left(1 - a^{[2](i)}\right) \right) \tag{13}$$

Exercise: Implement compute_cost() to compute the value of the cost $J$.

Instructions:

- There are many ways to implement the cross-entropy loss. To help you, here is how we would have implemented $-\sum_{i=1}^{m} y^{(i)} \log\left(a^{[2](i)}\right)$:

logprobs = np.multiply(np.log(A2),Y)

cost = - np.sum(logprobs) # no need to use a for loop!(you can use either np.multiply() and then np.sum() or directly np.dot()).

# GRADED FUNCTION: compute_cost

def compute_cost(A2, Y, parameters):
    """
    Computes the cross-entropy cost given in equation (13)

    Arguments:
    A2 -- The sigmoid output of the second activation, of shape (1, number of examples)
    Y -- "true" labels vector of shape (1, number of examples)
    parameters -- python dictionary containing your parameters W1, b1, W2 and b2

    Returns:
    cost -- cross-entropy cost given equation (13)
    """
    m = Y.shape[1] # number of examples

    # Compute the cross-entropy cost
    ### START CODE HERE ### (≈ 2 lines of code)
    logprobs = np.multiply(np.log(A2),Y)
    cost = -np.sum(logprobs + np.multiply(np.log(1 - A2),1 - Y))/m
    ### END CODE HERE ###

    cost = np.squeeze(cost) # makes sure cost is the dimension we expect.
                            # E.g., turns [[17]] into 17
    assert(isinstance(cost, float))

    return cost

A2, Y_assess, parameters = compute_cost_test_case()
print("cost = " + str(compute_cost(A2, Y_assess, parameters)))

cost = 0.692919893776

Expected Output:

| **cost** | 0.692919893776 |
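A quick sanity check on this value: when every prediction is close to 0.5, both log terms are close to log(0.5), so the cost comes out close to ln 2 ≈ 0.693 whatever the labels are. That is what the test case shows, since its activations are all near 0.5. A sketch in plain Python; the helper `cross_entropy_cost` and the labels are made up for illustration:

```python
import math

def cross_entropy_cost(a2, y):
    """Mean cross-entropy over paired lists of predictions a2 and labels y."""
    m = len(y)
    total = sum(yi * math.log(ai) + (1 - yi) * math.log(1 - ai)
                for ai, yi in zip(a2, y))
    return -total / m

a2 = [0.5, 0.5, 0.5]   # predictions of a network that has not learnt anything yet
y = [1, 0, 1]          # made-up labels

cost = cross_entropy_cost(a2, y)
print(cost)
print(math.log(2))
```

A cost that starts near 0.693 and then decreases during training is a useful sign that the loop is wired correctly.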

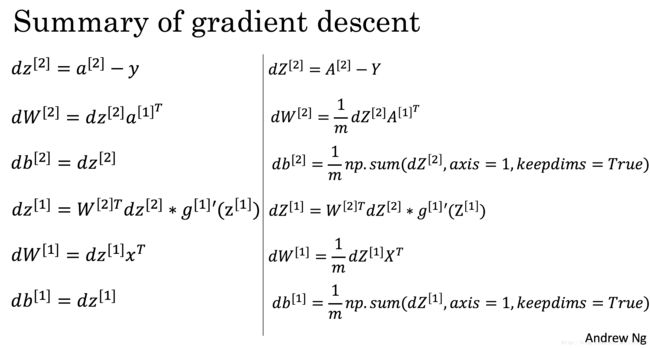

Using the cache computed during forward propagation, you can now implement backward propagation.

Question: Implement the function backward_propagation().

Instructions:

Backpropagation is usually the hardest (most mathematical) part in deep learning. To help you, here again is the slide from the lecture on backpropagation. You’ll want to use the six equations on the right of this slide, since you are building a vectorized implementation.

Tips:

- To compute dZ1 you’ll need to compute $g^{[1]\prime}(Z^{[1]})$. Since $g^{[1]}(\cdot)$ is the tanh activation function, if $a = g^{[1]}(z)$ then $g^{[1]\prime}(z) = 1 - a^2$. So you can compute $g^{[1]\prime}(Z^{[1]})$ using (1 - np.power(A1, 2)).
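The tanh derivative identity used in this tip can be checked numerically with a central finite difference. A minimal sketch using only the standard library; the helper names `tanh_prime` and `numerical_derivative` are illustrative, not part of the assignment:

```python
import math

def tanh_prime(z):
    """Analytic derivative of tanh: 1 - a^2, where a = tanh(z)."""
    a = math.tanh(z)
    return 1.0 - a ** 2

def numerical_derivative(f, z, eps=1e-6):
    """Central finite-difference approximation of f'(z)."""
    return (f(z + eps) - f(z - eps)) / (2 * eps)

# Compare the two at a few sample points.
for z in (-2.0, 0.0, 0.5, 1.5):
    analytic = tanh_prime(z)
    numeric = numerical_derivative(math.tanh, z)
    print(z, analytic, numeric)
```

The two columns should agree to many decimal places, which is why the cached A1 alone is enough to compute dZ1.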

# GRADED FUNCTION: backward_propagation

def backward_propagation(parameters, cache, X, Y):
    """
    Implement the backward propagation using the instructions above.

    Arguments:
    parameters -- python dictionary containing our parameters
    cache -- a dictionary containing "Z1", "A1", "Z2" and "A2".
    X -- input data of shape (2, number of examples)
    Y -- "true" labels vector of shape (1, number of examples)

    Returns:
    grads -- python dictionary containing your gradients with respect to different parameters
    """
    m = X.shape[1]

    # First, retrieve W1 and W2 from the dictionary "parameters".
    ### START CODE HERE ### (≈ 2 lines of code)
    W1 = parameters["W1"]
    W2 = parameters["W2"]
    ### END CODE HERE ###

    # Retrieve also A1 and A2 from dictionary "cache".
    ### START CODE HERE ### (≈ 2 lines of code)
    A1 = cache["A1"]
    A2 = cache["A2"]
    ### END CODE HERE ###

    # Backward propagation: calculate dW1, db1, dW2, db2.
    ### START CODE HERE ### (≈ 6 lines of code, corresponding to 6 equations on slide above)
    dZ2 = A2 - Y
    dW2 = np.dot(dZ2,A1.T)/m
    db2 = np.sum(dZ2,axis=1,keepdims = True)/m
    dZ1 = np.dot(W2.T,dZ2)*(1 - np.power(A1, 2))
    dW1 = np.dot(dZ1,X.T)/m
    db1 = np.sum(dZ1,axis=1,keepdims = True)/m
    ### END CODE HERE ###

    grads = {"dW1": dW1,
             "db1": db1,
             "dW2": dW2,
             "db2": db2}

    return grads

parameters, cache, X_assess, Y_assess = backward_propagation_test_case()
grads = backward_propagation(parameters, cache, X_assess, Y_assess)
print ("dW1 = "+ str(grads["dW1"]))
print ("db1 = "+ str(grads["db1"]))
print ("dW2 = "+ str(grads["dW2"]))
print ("db2 = "+ str(grads["db2"]))

dW1 = [[ 0.01018708 -0.00708701]

[ 0.00873447 -0.0060768 ]

[-0.00530847 0.00369379]

[-0.02206365 0.01535126]]

db1 = [[-0.00069728]

[-0.00060606]

[ 0.000364 ]

[ 0.00151207]]

dW2 = [[ 0.00363613 0.03153604 0.01162914 -0.01318316]]

db2 = [[ 0.06589489]]

Expected output:

| **dW1** | [[ 0.01018708 -0.00708701] [ 0.00873447 -0.0060768 ] [-0.00530847 0.00369379] [-0.02206365 0.01535126]] |

| **db1** | [[-0.00069728] [-0.00060606] [ 0.000364 ] [ 0.00151207]] |

| **dW2** | [[ 0.00363613 0.03153604 0.01162914 -0.01318316]] |

| **db2** | [[ 0.06589489]] |
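Beyond comparing against the expected output, a common way to gain confidence in a backpropagation implementation is a numerical gradient check: perturb one weight at a time and compare the change in cost with the analytic gradient. The sketch below is an illustration under stated assumptions, not part of the assignment. It assumes NumPy, uses made-up data and parameters, and defines its own helper `cost_of`; the backward equations are the same ones used in backward_propagation() above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost_of(W1, b1, W2, b2, X, Y):
    """Cross-entropy cost of the one-hidden-layer network (tanh, then sigmoid)."""
    A1 = np.tanh(np.dot(W1, X) + b1)
    A2 = sigmoid(np.dot(W2, A1) + b2)
    m = X.shape[1]
    return float(-np.sum(Y * np.log(A2) + (1 - Y) * np.log(1 - A2)) / m)

# Made-up data and parameters, for illustration only.
rng = np.random.RandomState(0)
X = rng.randn(2, 5)
Y = (rng.rand(1, 5) > 0.5).astype(float)
W1, b1 = rng.randn(4, 2) * 0.5, np.zeros((4, 1))
W2, b2 = rng.randn(1, 4) * 0.5, np.zeros((1, 1))

# Analytic gradient of the cost with respect to W1.
m = X.shape[1]
A1 = np.tanh(np.dot(W1, X) + b1)
A2 = sigmoid(np.dot(W2, A1) + b2)
dZ2 = A2 - Y
dZ1 = np.dot(W2.T, dZ2) * (1 - np.power(A1, 2))
dW1 = np.dot(dZ1, X.T) / m

# Numerical gradient: nudge one entry of W1 at a time (central difference).
eps = 1e-6
dW1_numeric = np.zeros_like(W1)
for i in range(W1.shape[0]):
    for j in range(W1.shape[1]):
        W1_plus, W1_minus = W1.copy(), W1.copy()
        W1_plus[i, j] += eps
        W1_minus[i, j] -= eps
        dW1_numeric[i, j] = (cost_of(W1_plus, b1, W2, b2, X, Y)
                             - cost_of(W1_minus, b1, W2, b2, X, Y)) / (2 * eps)

max_diff = np.max(np.abs(dW1 - dW1_numeric))
print(max_diff)
```

A very small maximum difference suggests the analytic equations and the cost agree; a large one usually points to a missing 1/m factor or a transposed matrix.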

Question: Implement the update rule. Use gradient descent. You have to use (dW1, db1, dW2, db2) in order to update (W1, b1, W2, b2).

General gradient descent rule: $\theta = \theta - \alpha \frac{\partial J}{\partial \theta}$ where $\alpha$ is the learning rate and $\theta$ represents a parameter.

Illustration: The gradient descent algorithm with a good learning rate (converging) and a bad learning rate (diverging). Images courtesy of Adam Harley.
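The converging and diverging behaviour can be reproduced on a toy problem. The sketch below applies the update rule to J(θ) = θ², whose gradient is 2θ; the function `gradient_descent` and the two learning rates are illustrative choices, not values from the assignment:

```python
def gradient_descent(theta, learning_rate, steps):
    """Minimize J(theta) = theta**2, whose gradient is 2 * theta."""
    for _ in range(steps):
        grad = 2.0 * theta
        theta = theta - learning_rate * grad
    return theta

# Each step multiplies theta by (1 - 2 * learning_rate).
good = gradient_descent(theta=1.0, learning_rate=0.1, steps=50)  # factor 0.8
bad = gradient_descent(theta=1.0, learning_rate=1.1, steps=50)   # factor -1.2

print(abs(good))  # shrinks toward the minimum at 0
print(abs(bad))   # grows with every step
```

With the smaller rate the parameter contracts toward the minimum; with the larger rate every step overshoots by more than it corrects, so the iterates move away from it.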

# GRADED FUNCTION: update_parameters

def update_parameters(parameters, grads, learning_rate = 1.2):
    """
    Updates parameters using the gradient descent update rule given above

    Arguments:
    parameters -- python dictionary containing your parameters
    grads -- python dictionary containing your gradients

    Returns:
    parameters -- python dictionary containing your updated parameters
    """
    # Retrieve each parameter from the dictionary "parameters"
    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = parameters["W1"]
    b1 = parameters["b1"]
    W2 = parameters["W2"]
    b2 = parameters["b2"]
    ### END CODE HERE ###

    # Retrieve each gradient from the dictionary "grads"
    ### START CODE HERE ### (≈ 4 lines of code)
    dW1 = grads["dW1"]
    db1 = grads["db1"]
    dW2 = grads["dW2"]
    db2 = grads["db2"]
    ### END CODE HERE ###

    # Update rule for each parameter
    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = W1 - learning_rate*dW1
    b1 = b1 - learning_rate*db1
    W2 = W2 - learning_rate*dW2
    b2 = b2 - learning_rate*db2
    ### END CODE HERE ###

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}

    return parameters

parameters, grads = update_parameters_test_case()
parameters = update_parameters(parameters, grads)
print("W1 = " + str(parameters["W1"]))
print("b1 = " + str(parameters["b1"]))
print("W2 = " + str(parameters["W2"]))
print("b2 = " + str(parameters["b2"]))

W1 = [[-0.00643025 0.01936718]

[-0.02410458 0.03978052]

[-0.01653973 -0.02096177]

[ 0.01046864 -0.05990141]]

b1 = [[ -1.02420756e-06]

[ 1.27373948e-05]

[ 8.32996807e-07]

[ -3.20136836e-06]]

W2 = [[-0.01041081 -0.04463285 0.01758031 0.04747113]]

b2 = [[ 0.00010457]]

Expected Output:

| **W1** | [[-0.00643025 0.01936718] [-0.02410458 0.03978052] [-0.01653973 -0.02096177] [ 0.01046864 -0.05990141]] |

| **b1** | [[ -1.02420756e-06] [ 1.27373948e-05] [ 8.32996807e-07] [ -3.20136836e-06]] |

| **W2** | [[-0.01041081 -0.04463285 0.01758031 0.04747113]] |

| **b2** | [[ 0.00010457]] |

4.4 - Integrate parts 4.1, 4.2 and 4.3 in nn_model()

Question: Build your neural network model in nn_model().

Instructions: The neural network model has to use the previous functions in the right order.

# GRADED FUNCTION: nn_model

def nn_model(X, Y, n_h, num_iterations = 10000, print_cost=False):
    """
    Arguments:
    X -- dataset of shape (2, number of examples)
    Y -- labels of shape (1, number of examples)
    n_h -- size of the hidden layer
    num_iterations -- Number of iterations in gradient descent loop
    print_cost -- if True, print the cost every 1000 iterations

    Returns:
    parameters -- parameters learnt by the model. They can then be used to predict.
    """
    np.random.seed(3)
    n_x = layer_sizes(X, Y)[0]
    n_y = layer_sizes(X, Y)[2]

    # Initialize parameters, then retrieve W1, b1, W2, b2. Inputs: "n_x, n_h, n_y". Outputs = "W1, b1, W2, b2, parameters".
    ### START CODE HERE ### (≈ 5 lines of code)
    parameters = initialize_parameters(n_x, n_h, n_y)
    W1 = parameters['W1']
    b1 = parameters['b1']
    W2 = parameters['W2']
    b2 = parameters['b2']
    ### END CODE HERE ###

    # Loop (gradient descent)
    for i in range(0, num_iterations):

        ### START CODE HERE ### (≈ 4 lines of code)
        # Forward propagation. Inputs: "X, parameters". Outputs: "A2, cache".
        A2, cache = forward_propagation(X, parameters)

        # Cost function. Inputs: "A2, Y, parameters". Outputs: "cost".
        cost = compute_cost(A2, Y, parameters)

        # Backpropagation. Inputs: "parameters, cache, X, Y". Outputs: "grads".
        grads = backward_propagation(parameters, cache, X, Y)

        # Gradient descent parameter update. Inputs: "parameters, grads". Outputs: "parameters".
        parameters = update_parameters(parameters, grads)
        ### END CODE HERE ###

        # Print the cost every 1000 iterations
        if print_cost and i % 1000 == 0:
            print ("Cost after iteration %i: %f" %(i, cost))

    return parameters

X_assess, Y_assess = nn_model_test_case()
parameters = nn_model(X_assess, Y_assess, 4, num_iterations=10000, print_cost=False)
print("W1 = " + str(parameters["W1"]))
print("b1 = " + str(parameters["b1"]))
print("W2 = " + str(parameters["W2"]))
print("b2 = " + str(parameters["b2"]))

/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py:21: RuntimeWarning: divide by zero encountered in log

/home/suika/master666/deeplearning.ai-master/1_Neural Networks and Deep Learning/week3/Planar data classification with one hidden layer/planar_utils.py:34: RuntimeWarning: overflow encountered in exp

s = 1/(1+np.exp(-x))

/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py:20: RuntimeWarning: divide by zero encountered in log

W1 = [[-7.52988766 1.24319063]

[-4.26279991 5.29212185]

[-7.53014762 1.24321351]

[ 4.14268745 -5.36367671]]

b1 = [[ 3.79497527]

[ 2.31213804]

[ 3.79504402]

[-2.32779718]]

W2 = [[-6009.17160368 -6035.84702574 -6010.98356457 6036.44394146]]

b2 = [[-54.06026603]]

Expected Output:

| **W1** | [[-4.18494056 5.33220609] [-7.52989382 1.24306181] [-4.1929459 5.32632331] [ 7.52983719 -1.24309422]] |

| **b1** | [[ 2.32926819] [ 3.79458998] [ 2.33002577] [-3.79468846]] |

| **W2** | [[-6033.83672146 -6008.12980822 -6033.10095287 6008.06637269]] |

| **b2** | [[-52.66607724]] |

4.5 Predictions

Question: Use your model to predict by building predict().

Use forward propagation to predict results.

Reminder: predictions = yprediction=