Why MyBatis Came About

1. Limitations of Accessing the Database with Raw JDBC

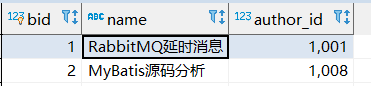

Suppose the database contains the following table:

```sql
CREATE TABLE `blog` (
  `bid` int(11) NOT NULL AUTO_INCREMENT,
  `name` varchar(255) DEFAULT NULL,
  `author_id` int(11) DEFAULT NULL,
  PRIMARY KEY (`bid`)
) ENGINE=InnoDB AUTO_INCREMENT=3 DEFAULT CHARSET=utf8;
```

Querying it with raw JDBC looks like this:

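```java
import java.io.IOException;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
// @Test is the JUnit annotation; Blog is the POJO for the blog table above

@Test
public void testJdbc() throws IOException {
    Connection conn = null;
    Statement stmt = null;
    Blog blog = new Blog();
    try {
        // Register the JDBC driver (JDBC 4.0+ drivers also register themselves automatically)
        Class.forName("com.mysql.jdbc.Driver");
        // Open a connection
        conn = DriverManager.getConnection("jdbc:mysql://localhost:3306/mybatistest", "root", "lchadmin");
        // Execute the query
        stmt = conn.createStatement();
        String sql = "SELECT bid, name, author_id FROM blog where bid = 1";
        ResultSet rs = stmt.executeQuery(sql);
        // Walk the result set and copy each column into the Blog object by hand
        while (rs.next()) {
            Integer bid = rs.getInt("bid");
            String name = rs.getString("name");
            Integer authorId = rs.getInt("author_id");
            blog.setAuthorId(authorId);
            blog.setBid(bid);
            blog.setName(name);
        }
        System.out.println(blog);
        rs.close();
        stmt.close();
        conn.close();
    } catch (SQLException se) {
        se.printStackTrace();
    } catch (Exception e) {
        e.printStackTrace();
    } finally {
        // Close again in case an exception skipped the calls above (closing twice is a no-op)
        try {
            if (stmt != null) stmt.close();
        } catch (SQLException se2) {
        }
        try {
            if (conn != null) conn.close();
        } catch (SQLException se) {
            se.printStackTrace();
        }
    }
}
```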

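Querying a single record takes all six of the following steps:

- Register the driver (`Class.forName`)

- Obtain a connection: `Connection`

- Create a `Statement` object

- Execute the query: `stmt.executeQuery`

- Iterate over the returned result set and populate the result object

- Close the resources: `ResultSet`, `Statement`, `Connection`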

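Code like this scattered throughout a real business application would be a nightmare, and it shows the drawbacks of working with JDBC directly:

First, there is a lot of repetitive boilerplate.

Second, connections and other database resources must be managed by hand; forgetting to close them can easily exhaust the database server's connections.

Third, converting a result set into a POJO means handling each column one by one according to its type.

Fourth, business logic is tightly coupled to data access: the SQL is hard-coded in the Java code, which makes it hard to maintain.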
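For the second drawback, Java 7 introduced try-with-resources, and `Connection`, `Statement` and `ResultSet` all implement `AutoCloseable`, so resources declared in the `try` header are closed automatically, in reverse order of declaration, even when an exception is thrown. The sketch below uses a hypothetical `TrackedResource` class as a stand-in for the JDBC objects so it runs without a database; `TryWithResourcesDemo` is likewise a made-up name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for Connection, Statement and ResultSet (all AutoCloseable in java.sql).
class TrackedResource implements AutoCloseable {
    private final String name;
    private final List<String> closeLog;

    TrackedResource(String name, List<String> closeLog) {
        this.name = name;
        this.closeLog = closeLog;
    }

    @Override
    public void close() {
        closeLog.add(name); // record when this resource is closed
    }
}

class TryWithResourcesDemo {
    // Opens three resources in JDBC order and optionally fails before the "query" finishes.
    static List<String> runQuery(boolean fail) {
        List<String> closeLog = new ArrayList<>();
        try (TrackedResource conn = new TrackedResource("connection", closeLog);
             TrackedResource stmt = new TrackedResource("statement", closeLog);
             TrackedResource rs = new TrackedResource("resultSet", closeLog)) {
            if (fail) {
                throw new IllegalStateException("simulated SQL failure");
            }
        } catch (IllegalStateException e) {
            // By the time this handler runs, all three resources have already been closed.
        }
        return closeLog;
    }
}
```

With a real driver the same shape becomes `try (Connection conn = ...; PreparedStatement ps = conn.prepareStatement(sql); ResultSet rs = ps.executeQuery()) { ... }`, which removes the nested `finally` blocks. It does not fix the other three drawbacks.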

2. A JDBC Utility Library: DbUtils

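To address these problems, Apache Commons DbUtils appeared as a utility library that simplifies database access. It provides the `QueryRunner` class, which wraps common CRUD operations. It is used as follows:

- Create a `QueryRunner`, passing a data source to its constructor: `queryRunner = new QueryRunner(dataSource);`

- To convert result sets into POJOs of different types, DbUtils provides a family of generic `ResultSetHandler` implementations. In the DAO layer, call one of `QueryRunner`'s query methods with the appropriate handler, and the result set is converted into a bean, a `List`, or a `Map`: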

```java
String sql = "select * from blog";
List<BlogDto> list = queryRunner.query(sql, new BeanListHandler<>(BlogDto.class));
```

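So how is the automatic conversion implemented? Opening the `BeanListHandler` class, we see that it implements the `ResultSetHandler` interface and overrides the `handle` method: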

```java
public List<T> handle(ResultSet rs) throws SQLException {
    return this.convert.toBeanList(rs, this.type);
}
```

It calls `toBeanList` on `RowProcessor`, which is an interface. Its default implementation, `org.apache.commons.dbutils.BasicRowProcessor#toBeanList`, in turn delegates to `org.apache.commons.dbutils.BeanProcessor#toBeanList`:

```java
public <T> List<T> toBeanList(ResultSet rs, Class<? extends T> type) throws SQLException {
    List<T> results = new ArrayList();
    if (!rs.next()) {
        return results;
    } else {
        PropertyDescriptor[] props = this.propertyDescriptors(type);
        ResultSetMetaData rsmd = rs.getMetaData();
        int[] columnToProperty = this.mapColumnsToProperties(rsmd, props);
        do {
            results.add(this.createBean(rs, type, props, columnToProperty));
        } while (rs.next());
        return results;
    }
}
```

Stepping into `createBean`, we see that the mapping from the result set to the POJO is ultimately performed by `populateBean`:

```java
private <T> T createBean(ResultSet rs, Class<T> type, PropertyDescriptor[] props, int[] columnToProperty) throws SQLException {
    T bean = this.newInstance(type);
    return this.populateBean(rs, bean, props, columnToProperty);
}

private <T> T populateBean(ResultSet rs, T bean, PropertyDescriptor[] props, int[] columnToProperty) throws SQLException {
    for (int i = 1; i < columnToProperty.length; ++i) {
        if (columnToProperty[i] != -1) {
            PropertyDescriptor prop = props[columnToProperty[i]];
            Class<?> propType = prop.getPropertyType();
            Object value = null;
            if (propType != null) {
                value = this.processColumn(rs, i, propType);
                if (value == null && propType.isPrimitive()) {
                    value = primitiveDefaults.get(propType);
                }
            }
            this.callSetter(bean, prop, value);
        }
    }
    return bean;
}
```

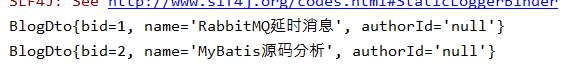

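The `for` loop copies each column value from `rs` into the matching bean property, skipping columns whose `columnToProperty` entry is `-1` (no matching property). This is where DbUtils falls short: the `author_id` column clearly has a value in the database, yet `authorId` comes back `null`. DbUtils does not handle column names that differ from POJO property names: by default its automatic mapping only works when a column name matches a property name exactly (ignoring case), so `author_id` is never mapped to `authorId`.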
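To see why `authorId` stays `null`, here is a simplified, stand-alone imitation of the column-to-property matching step. It is not DbUtils's code: `ColumnMatching` and `matchColumns` are hypothetical names, and unlike DbUtils (which sizes the array as column count + 1 so it can use 1-based JDBC column indexes, hence the loop from `i = 1` above) the sketch uses 0-based arrays. With `ignoreUnderscores` set to `false` it mimics the default case-insensitive comparison; set to `true`, it strips underscores from the column name first.

```java
import java.util.Arrays;

class ColumnMatching {
    static final int PROPERTY_NOT_FOUND = -1;

    // For each column, returns the index of the matching property, or PROPERTY_NOT_FOUND.
    // ignoreUnderscores == false: names must be equal ignoring case ("author_id" != "authorId").
    // ignoreUnderscores == true: '_' is removed from the column first ("authorid" matches "authorId").
    static int[] matchColumns(String[] columns, String[] properties, boolean ignoreUnderscores) {
        int[] columnToProperty = new int[columns.length];
        Arrays.fill(columnToProperty, PROPERTY_NOT_FOUND);
        for (int c = 0; c < columns.length; c++) {
            String column = ignoreUnderscores ? columns[c].replace("_", "") : columns[c];
            for (int p = 0; p < properties.length; p++) {
                if (column.equalsIgnoreCase(properties[p])) {
                    columnToProperty[c] = p;
                    break;
                }
            }
        }
        return columnToProperty;
    }
}
```

Within DbUtils itself, common workarounds are aliasing the column in SQL (`SELECT author_id AS authorId ...`) or supplying a column-to-property override map to `BeanProcessor`; newer DbUtils releases also ship a `GenerousBeanProcessor` that ignores underscores in this way.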

3. A JDBC Utility Library: JdbcTemplate

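`JdbcTemplate` is Spring's wrapper around raw JDBC. It encapsulates the core JDBC workflow, so the application only has to supply the SQL and extract the results. The data source can be set when the template is initialized, which solves the resource management problem.

For result-set processing, `JdbcTemplate` provides the `RowMapper` interface, which converts each row into a Java object; a `RowMapper` is passed to `JdbcTemplate` as an argument: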

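```java
import java.sql.ResultSet;
import java.sql.SQLException;

import org.springframework.jdbc.core.RowMapper;

public class EmployeeRowMapper implements RowMapper<Employee> {
    @Override
    public Employee mapRow(ResultSet resultSet, int rowNum) throws SQLException {
        // Copy each column into the matching Employee property by hand
        Employee employee = new Employee();
        employee.setEmpId(resultSet.getInt("emp_id"));
        employee.setEmpName(resultSet.getString("emp_name"));
        employee.setGender(resultSet.getString("gender"));
        employee.setEmail(resultSet.getString("email"));
        return employee;
    }
}
```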

To query, pass in the SQL and an `EmployeeRowMapper`, and you get back objects of the type you need:

```java
List<Employee> list = jdbcTemplate.query("select * from tbl_emp", new EmployeeRowMapper());
```

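However, if the project has many tables, each one needs its own `RowMapper` implementation to convert rows into POJOs, which leads to an explosion of classes. To map the columns of a row onto POJO properties automatically, two problems have to be solved:

- Name mapping: from the database's snake_case column names to the POJO's camelCase property names

- Type matching: each column's JDBC type must correspond to the Java type of the property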
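For the type-matching problem, a generic mapper needs a rule for turning whatever the driver returns into the field's declared type. For example, under the standard JDBC type mappings `ResultSet.getObject` returns a `Long` for a `BIGINT` column, which cannot be assigned directly to an `Integer` field, and SQL `NULL` cannot be stored in a primitive `int`. The sketch below is a hypothetical `TypeConversion.convert` helper covering a few common cases; it illustrates the idea and is not taken from any library (MyBatis, for instance, handles this with its `TypeHandler` classes).

```java
import java.math.BigDecimal;
import java.util.HashMap;
import java.util.Map;

class TypeConversion {
    // Values used for primitive fields when the column is SQL NULL (primitives cannot hold null).
    private static final Map<Class<?>, Object> PRIMITIVE_DEFAULTS = new HashMap<>();

    static {
        PRIMITIVE_DEFAULTS.put(int.class, 0);
        PRIMITIVE_DEFAULTS.put(long.class, 0L);
        PRIMITIVE_DEFAULTS.put(short.class, (short) 0);
        PRIMITIVE_DEFAULTS.put(byte.class, (byte) 0);
        PRIMITIVE_DEFAULTS.put(float.class, 0f);
        PRIMITIVE_DEFAULTS.put(double.class, 0d);
        PRIMITIVE_DEFAULTS.put(boolean.class, false);
        PRIMITIVE_DEFAULTS.put(char.class, (char) 0);
    }

    // Converts a raw column value (e.g. from ResultSet.getObject) to the declared field type.
    static Object convert(Object raw, Class<?> target) {
        if (raw == null) {
            return PRIMITIVE_DEFAULTS.get(target); // null for reference types
        }
        if (target.isInstance(raw)) {
            return raw; // already the right type
        }
        if (raw instanceof Number) {
            Number n = (Number) raw;
            if (target == int.class || target == Integer.class) return n.intValue();
            if (target == long.class || target == Long.class) return n.longValue();
            if (target == short.class || target == Short.class) return n.shortValue();
            if (target == float.class || target == Float.class) return n.floatValue();
            if (target == double.class || target == Double.class) return n.doubleValue();
            if (target == boolean.class || target == Boolean.class) return n.intValue() != 0;
            if (target == BigDecimal.class) return new BigDecimal(n.toString());
        }
        if (target == String.class) {
            return raw.toString();
        }
        throw new IllegalArgumentException(
                "No conversion from " + raw.getClass().getName() + " to " + target.getName());
    }
}
```

Unsupported combinations fail loudly with an exception rather than silently leaving the field at its default.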

We could write a generic `BaseRowMapper` and pass it, together with the SQL, to `jdbcTemplate.query`, but the SQL would still be coupled to the Java code:

```java
import java.lang.reflect.Field;
import java.math.BigDecimal;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.sql.Timestamp;
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

import org.springframework.jdbc.core.RowMapper;

public class BaseRowMapper<T> implements RowMapper<T> {

    private static final Pattern UNDERSCORE = Pattern.compile("_(\\w)");

    private final Class<T> targetClazz;

    // Property name -> Field, built once per target class
    private final Map<String, Field> fieldMap = new HashMap<>();

    public BaseRowMapper(Class<T> targetClazz) {
        this.targetClazz = targetClazz;
        for (Field field : targetClazz.getDeclaredFields()) {
            fieldMap.put(field.getName(), field);
        }
    }

    @Override
    public T mapRow(ResultSet rs, int rowNum) throws SQLException {
        try {
            T obj = targetClazz.getDeclaredConstructor().newInstance();
            ResultSetMetaData metaData = rs.getMetaData();
            int columnCount = metaData.getColumnCount();
            for (int i = 1; i <= columnCount; i++) {
                // getColumnLabel respects SQL aliases (AS ...), unlike getColumnName
                Field field = fieldMap.get(camel(metaData.getColumnLabel(i)));
                if (field == null) {
                    continue; // no matching property: skip the column instead of throwing an NPE
                }
                Class<?> fieldClazz = field.getType();
                field.setAccessible(true);
                // Choose the ResultSet getter that matches the field's Java type
                if (fieldClazz == int.class || fieldClazz == Integer.class) {
                    field.set(obj, rs.getInt(i));
                } else if (fieldClazz == boolean.class || fieldClazz == Boolean.class) {
                    field.set(obj, rs.getBoolean(i));
                } else if (fieldClazz == String.class) {
                    field.set(obj, rs.getString(i));
                } else if (fieldClazz == float.class || fieldClazz == Float.class) {
                    field.set(obj, rs.getFloat(i));
                } else if (fieldClazz == double.class || fieldClazz == Double.class) {
                    field.set(obj, rs.getDouble(i));
                } else if (fieldClazz == BigDecimal.class) {
                    field.set(obj, rs.getBigDecimal(i));
                } else if (fieldClazz == short.class || fieldClazz == Short.class) {
                    field.set(obj, rs.getShort(i));
                } else if (fieldClazz == java.sql.Date.class) {
                    field.set(obj, rs.getDate(i));
                } else if (fieldClazz == Timestamp.class || fieldClazz == java.util.Date.class) {
                    // Timestamp extends java.util.Date and keeps the time-of-day part
                    field.set(obj, rs.getTimestamp(i));
                } else if (fieldClazz == long.class || fieldClazz == Long.class) {
                    field.set(obj, rs.getLong(i));
                }
            }
            return obj;
        } catch (ReflectiveOperationException e) {
            throw new SQLException("Cannot map row to " + targetClazz.getName(), e);
        }
    }

    /**
     * Converts snake_case to camelCase, e.g. "author_id" -> "authorId".
     */
    public static String camel(String str) {
        Matcher matcher = UNDERSCORE.matcher(str);
        StringBuffer sb = new StringBuffer();
        while (matcher.find()) {
            matcher.appendReplacement(sb, matcher.group(1).toUpperCase());
        }
        matcher.appendTail(sb);
        return sb.toString();
    }
}
```

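4. Shortcomings of Both Utility Libraries

Although both libraries solve many of the problems of raw JDBC, they still have major shortcomings:

- SQL statements are hard-coded and tightly coupled to the Java code.

- Query parameters can only be supplied positionally, filling `?` placeholders in order; you cannot pass in an object or a `Map`.

- Result sets can be mapped to entity classes, but a JavaBean cannot be mapped to a database record (there is no automatic SQL generation). The mapping is one-way, from the database to Java objects; the reverse direction, from Java objects to the database, is not automated.

- There is no query cache, so performance suffers.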
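The positional-parameter limitation in the second point is what named placeholders such as MyBatis's `#{authorId}` address. Below is a minimal sketch of the idea, assuming the parameters arrive in a `Map`; `ParsedSql` and `NamedParameterParser` are hypothetical names, and this is not MyBatis's parser, which also supports nested properties and options such as `jdbcType`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical result holder: JDBC SQL with '?' placeholders plus the values to bind, in order.
class ParsedSql {
    final String sql;
    final List<Object> parameters;

    ParsedSql(String sql, List<Object> parameters) {
        this.sql = sql;
        this.parameters = parameters;
    }
}

class NamedParameterParser {
    // Matches placeholders such as #{authorId}; group 1 is the parameter name.
    private static final Pattern PLACEHOLDER = Pattern.compile("#\\{(\\w+)\\}");

    static ParsedSql parse(String namedSql, Map<String, ?> params) {
        Matcher matcher = PLACEHOLDER.matcher(namedSql);
        StringBuffer sql = new StringBuffer();
        List<Object> values = new ArrayList<>();
        while (matcher.find()) {
            String name = matcher.group(1);
            if (!params.containsKey(name)) {
                throw new IllegalArgumentException("No value supplied for #{" + name + "}");
            }
            values.add(params.get(name));        // keep values in placeholder order
            matcher.appendReplacement(sql, "?"); // swap #{name} for a JDBC placeholder
        }
        matcher.appendTail(sql);
        return new ParsedSql(sql.toString(), values);
    }
}
```

Each collected value would then be bound with `PreparedStatement.setObject(i + 1, value)`, since JDBC parameter indexes start at 1. Supporting a JavaBean instead of a `Map` would mean reading the values through getters or reflection.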

ORM frameworks emerged to solve these problems.

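5. ORM Frameworks

Every technology is created to solve problems in some particular scenario. So what is ORM? ORM stands for Object-Relational Mapping: the objects are the objects in the program, and the relations are the data in a relational database. An ORM framework solves the problem of mapping between objects and relational data in both directions.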
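To make the object-to-database direction concrete (the direction the utility libraries above lack), here is a minimal reflection-based sketch that derives an `INSERT` statement and its parameter values from a JavaBean. `BlogRecord` and `InsertSqlGenerator` are hypothetical names; real ORM frameworks drive this from mapping metadata and handle far more cases (generated keys, dialects, relationships).

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical POJO for the blog table shown earlier.
class BlogRecord {
    Integer bid;
    String name;
    Integer authorId;

    BlogRecord(Integer bid, String name, Integer authorId) {
        this.bid = bid;
        this.name = name;
        this.authorId = authorId;
    }
}

class InsertSqlGenerator {
    // camelCase -> snake_case, e.g. "authorId" -> "author_id"
    static String toSnakeCase(String camel) {
        StringBuilder sb = new StringBuilder();
        for (char ch : camel.toCharArray()) {
            if (Character.isUpperCase(ch)) {
                sb.append('_').append(Character.toLowerCase(ch));
            } else {
                sb.append(ch);
            }
        }
        return sb.toString();
    }

    // Instance fields sorted by name, because getDeclaredFields() guarantees no particular order.
    private static List<Field> persistentFields(Class<?> beanClass) {
        List<Field> fields = new ArrayList<>();
        for (Field field : beanClass.getDeclaredFields()) {
            if (!Modifier.isStatic(field.getModifiers()) && !field.isSynthetic()) {
                fields.add(field);
            }
        }
        fields.sort(Comparator.comparing(Field::getName));
        return fields;
    }

    // Builds "INSERT INTO table (col, ...) VALUES (?, ...)" from the bean's fields.
    static String insertSql(String table, Class<?> beanClass) {
        StringJoiner columns = new StringJoiner(", ");
        StringJoiner placeholders = new StringJoiner(", ");
        for (Field field : persistentFields(beanClass)) {
            columns.add(toSnakeCase(field.getName()));
            placeholders.add("?");
        }
        return "INSERT INTO " + table + " (" + columns + ") VALUES (" + placeholders + ")";
    }

    // Reads the field values in the same order as the columns produced by insertSql.
    static List<Object> insertValues(Object bean) {
        List<Object> values = new ArrayList<>();
        for (Field field : persistentFields(bean.getClass())) {
            try {
                field.setAccessible(true);
                values.add(field.get(bean));
            } catch (IllegalAccessException e) {
                throw new IllegalStateException("Cannot read field " + field.getName(), e);
            }
        }
        return values;
    }
}
```

Sorting the fields keeps the column list and the value list aligned; a fuller version would also skip an auto-increment key such as `bid`.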

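- Hibernate is a fully automatic ORM framework with the following advantages:

- It generates SQL automatically according to the database dialect, so it is highly portable.

- It manages data sources automatically.

- It maps objects to relational data completely: operating on an object means operating on the database record.

- It provides a caching mechanism.

But its drawbacks are just as obvious:

- By default, `get`, `update` and `save` operate on all of an object's fields; you cannot target only some of them, which is inflexible.

- Because SQL is generated automatically, it is hard to optimize by hand, which can lead to performance problems.

- It does not support dynamic SQL: when sharding across databases and tables changes the table names, conditions, or parameters, it cannot generate SQL based on those conditions.

- MyBatis

MyBatis, a semi-automatic ORM framework, solves several of Hibernate's problems. It encapsulates less than Hibernate and focuses on mapping between SQL and objects; in addition, it keeps the SQL separate from the code.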

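MyBatis offers the following main features:

- Connection objects managed through a connection pool

- SQL separated from the code and managed centrally

- Parameter mapping and dynamic SQL

- Automatic result-set mapping

- Cache management

- Extraction of reusable SQL fragments

- A plugin mechanism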
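Dynamic SQL, listed above and noted earlier as something Hibernate lacks, means building the statement from whichever conditions are actually present. In MyBatis this is normally written in mapper XML with elements such as `<if>` and `<where>`; the plain-Java sketch below only imitates that idea, and `DynamicWhere` is a hypothetical class, not part of MyBatis.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical builder imitating the <where> + <if> idea in plain Java.
class DynamicWhere {
    private final StringJoiner conditions = new StringJoiner(" AND ");
    private final List<Object> parameters = new ArrayList<>();

    // Adds "column = ?" only when value is non-null, like <if test="value != null">.
    // The column name is concatenated into the SQL, so it must be a trusted constant.
    DynamicWhere eqIfPresent(String column, Object value) {
        if (value != null) {
            conditions.add(column + " = ?");
            parameters.add(value);
        }
        return this;
    }

    // Appends WHERE only when at least one condition was added, like <where>.
    String toSql(String baseSql) {
        return conditions.length() == 0 ? baseSql : baseSql + " WHERE " + conditions;
    }

    List<Object> parameters() {
        return parameters;
    }
}
```

For example, `new DynamicWhere().eqIfPresent("name", null).eqIfPresent("author_id", 1001).toSql("SELECT * FROM blog")` yields `SELECT * FROM blog WHERE author_id = ?`, with `1001` as the only parameter. Values still go through `?` placeholders, so only the shape of the SQL is dynamic.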