Python Threads and Processes

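Contents

- Introduction

- 1 Multiprocessing

- 1.1 Process

- 1.2 Pool

- 1.3 Inter-process communication

- 2 Multithreading

- 2.1 threading

- 2.2 Thread synchronization

- 2.3 Global interpreter lock (GIL)

- 3 Coroutines

- 4 Distributed processes

- Acknowledgements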

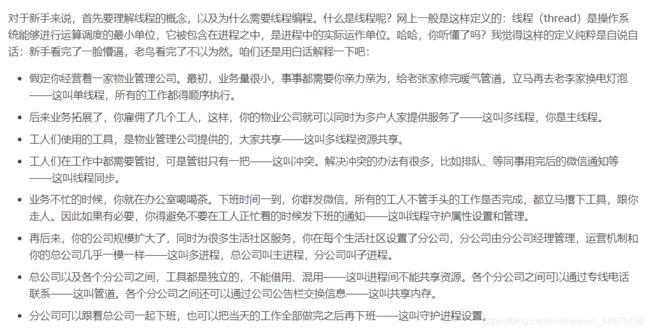

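Introduction

1 Multiprocessing

Python offers two main ways to create processes: the fork function in the os module and the multiprocessing module. The former works only on Unix/Linux, while the latter is cross-platform. I am on Windows, so I will add more on the fork approach once I am working on Unix/Linux.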
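For readers who are already on Unix/Linux, the fork approach can be sketched roughly as follows. This is a minimal illustration, not something tested as part of this article; the helper name fork_and_wait and its exit_code parameter are made up for the example, and the code does nothing on platforms without os.fork.

```python
import os
import sys


def fork_and_wait(exit_code=0):
    """Fork one child and return the exit code the parent observes (Unix only)."""
    sys.stdout.flush()              # avoid duplicating buffered output in the child
    pid = os.fork()                 # returns 0 in the child, the child's pid in the parent
    if pid == 0:
        print("Child process (%d), parent is (%d)" % (os.getpid(), os.getppid()), flush=True)
        os._exit(exit_code)         # leave the child immediately, skipping cleanup handlers
    _, status = os.waitpid(pid, 0)  # parent: block until the child has exited
    return os.WEXITSTATUS(status)


if __name__ == "__main__":
    if hasattr(os, "fork"):
        print("Child exited with code %d" % fork_and_wait())
    else:
        print("os.fork is not available on this platform")
```

The single call to os.fork returns twice, once in each process, which is why the code branches on the returned pid.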

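1.1 Process

multiprocessing provides a Process class that represents a process object. To create a child process, pass in the function to run and its arguments; call start() to launch the process and join() to wait for it to finish: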

import os
from multiprocessing import Process


def run_proc(name):
    print("Child process (%s) (%s) running..." % (name, os.getpid()))


if __name__ == '__main__':
    print("Current process (%s) start..." % (os.getpid()))
    processes = []
    for i in range(5):
        # args must be a tuple, hence the trailing comma
        p = Process(target=run_proc, args=(str(i),))
        print("Process will start.")
        p.start()
        processes.append(p)
    # Wait for every child, not only the last one started
    for p in processes:
        p.join()
    print("Process end.")

Output:

Current process (17016) start...

Process will start.

Process will start.

Process will start.

Process will start.

Process will start.

Child process (0) (16024) running...

Child process (1) (10276) running...

Child process (3) (1296) running...

Child process (2) (13944) running...

Child process (4) (3884) running...

Process end.

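1.2 Pool

The drawback of using Process directly is that every task needs its own child process, so it only suits a small number of tasks. Pool solves this problem.

In short, a Pool runs a fixed number of worker processes (by default, the number of CPU cores), and at most that many tasks execute at the same time: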

import os, time, random
from multiprocessing import Pool


def run_task(name):
    print("Task %s (pid = %s) is running..." % (name, os.getpid()))
    time.sleep(random.random() * 3)
    print("Task %s end." % name)


if __name__ == '__main__':
    print("Current process (%s) start..." % (os.getpid()))
    p = Pool(processes=3)
    for i in range(5):
        p.apply_async(run_task, args=(i, ))
    print("Waiting for all subprocess done...")
    p.close()
    p.join()
    print("All subprocess done.")

Output:

Current process (15076) start...

Waiting for all subprocess done...

Task 0 (pid = 4620) is running...

Task 1 (pid = 7520) is running...

Task 2 (pid = 15384) is running...

Task 2 end.

Task 3 (pid = 15384) is running...

Task 3 end.

Task 4 (pid = 15384) is running...

Task 0 end.

Task 1 end.

Task 4 end.

All subprocess done.

Note that join() on a Pool waits for all worker processes to finish, and close() must be called before join(); once close() has been called, no new tasks can be submitted to the pool.
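The tasks above only print. When the parent needs the return values, something like the following sketch may help; the square function is a placeholder invented for this example. It uses map() for ordered results and the AsyncResult objects returned by apply_async().

```python
from multiprocessing import Pool


def square(n):
    """Work done in a worker process; the return value is sent back to the parent."""
    return n * n


if __name__ == "__main__":
    # The with-block terminates the pool on exit, so results are collected inside it.
    with Pool(processes=3) as pool:
        # map() blocks until every result is ready and keeps the input order.
        print(pool.map(square, range(5)))

        # apply_async() returns an AsyncResult; get() waits for that one task.
        pending = [pool.apply_async(square, args=(i,)) for i in range(5)]
        print([r.get(timeout=10) for r in pending])
```

Both print calls should show the same list of squares; map() is the shorter form when every task calls the same function.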

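1.3 Inter-process communication

Python provides several ways for processes to communicate; this section covers Queue and Pipe:

1) Queue: used for communication among multiple processes. Its operations are:

- Queue.put(obj, block, timeout): block and timeout are optional

block defaults to True;

if block is True and timeout is a positive number, the call blocks for at most timeout seconds while the queue has no free slot, then raises queue.Full;

if block is False and the queue is full, queue.Full is raised immediately;

- Queue.get(block, timeout): block and timeout are optional

block defaults to True;

if block is True and timeout is a positive number, queue.Empty is raised when no item arrives within that time;

if block is False:

an item is returned immediately if one is available;

otherwise queue.Empty is raised (both exception classes live in the standard queue module).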
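The blocking rules above can be tried out in a single process. The sketch below wraps put and get in two small helpers (try_put and get_or_none are names invented for this example) that turn queue.Full and queue.Empty into return values.

```python
import queue                      # queue.Full and queue.Empty are defined here
from multiprocessing import Queue


def try_put(q, item):
    """Non-blocking put: True on success, False if the queue is full."""
    try:
        q.put(item, block=False)
        return True
    except queue.Full:
        return False


def get_or_none(q, timeout):
    """Blocking get with a timeout: the item, or None if nothing arrived in time."""
    try:
        return q.get(block=True, timeout=timeout)
    except queue.Empty:
        return None


if __name__ == "__main__":
    q = Queue(maxsize=1)
    print(try_put(q, "first"))     # the single slot is free
    print(try_put(q, "second"))    # the slot is taken, so queue.Full is caught
    print(get_or_none(q, 1.0))     # waits until the item is available
    print(get_or_none(q, 0.1))     # the queue is empty again, so this times out
```

A timeout is used for the first get because multiprocessing.Queue hands items to a background feeder thread, so an item may not be readable in the same instant it was put.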

In the following example, the parent process creates three child processes: two write data to a Queue and the third reads from it:

import os, time
from multiprocessing import Process, Queue


def proc_write(q, urls):
    """Write every item in urls to the queue."""
    print("Process (%d) is writing..." % os.getpid())
    for url in urls:
        q.put(url)
        print('Put %s to queue...' % url)
        time.sleep(0.1)


def proc_read(q):
    """Read items from the queue forever."""
    print("Process (%d) is reading..." % (os.getpid()))
    while True:
        url = q.get(True)
        print("Get %s from queue." % url)


if __name__ == '__main__':
    # Create the queue in the parent process and hand it to every child
    q = Queue()
    writer1 = Process(target=proc_write, args=(q, ['张飞', '黄忠', "孙尚香"]))
    writer2 = Process(target=proc_write, args=(q, ['马超', '关羽', "赵云"]))
    reader = Process(target=proc_read, args=(q, ))
    # Start the children
    writer1.start()
    writer2.start()
    reader.start()
    writer1.join()
    writer2.join()
    # The reader loops forever, so it has to be terminated explicitly
    reader.terminate()

Output:

Process (15908) is writing...

Put 张飞 to queue...

Process (16320) is writing...

Put 马超 to queue...

Process (14980) is reading...

Get 张飞 from queue.

Get 马超 from queue.

Get 黄忠 from queue.

Put 黄忠 to queue...

Put 关羽 to queue...

Get 关羽 from queue.

Put 孙尚香 to queue...

Get 孙尚香 from queue.

Put 赵云 to queue...

Get 赵云 from queue.
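Terminating the reader works here, but it can in principle cut the reader off before it has drained the queue. One common alternative, sketched below under the assumption that None never occurs as real data, is to push an end marker so that the reader stops by itself. SENTINEL, drain, reader and writer are names chosen for this illustration.

```python
from multiprocessing import Process, Queue

SENTINEL = None  # assumed end-of-stream marker; real data must never be None


def drain(q):
    """Read items until the sentinel shows up and return them as a list."""
    items = []
    while True:
        item = q.get()           # blocks until something is available
        if item is SENTINEL:
            break
        items.append(item)
    return items


def reader(q):
    print("Reader got: %s" % drain(q))


def writer(q, items):
    for item in items:
        q.put(item)


if __name__ == "__main__":
    q = Queue()
    r = Process(target=reader, args=(q,))
    w = Process(target=writer, args=(q, ["a", "b", "c"]))
    r.start()
    w.start()
    w.join()          # all data has been handed to the queue
    q.put(SENTINEL)   # now tell the reader to stop
    r.join()          # returns because the reader leaves its loop
```

With several readers, one sentinel per reader would be needed, since each reader consumes the marker it sees.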

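2) Pipe: typically used for communication between two processes, one at each end of the pipe:

Pipe() returns (conn1, conn2), the two ends of the pipe;

optional parameter duplex:

True (default): both conn1 and conn2 can send and receive;

False: conn1 can only receive and conn2 can only send;

.send(): sends a message

.recv(): receives a message

recv() blocks until a message arrives;

once the other end has been closed and nothing is left to read, recv() raises EOFError.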
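The duplex and EOFError rules can be checked without starting any child process. The following sketch (one_way_demo is a name invented here) opens a one-way pipe, passes one message through it, closes the sending end, and then observes what recv() does.

```python
from multiprocessing import Pipe


def one_way_demo():
    """Send one message through a one-way pipe, then observe EOFError after close."""
    recv_end, send_end = Pipe(duplex=False)   # first end only receives, second only sends
    send_end.send("hello")
    message = recv_end.recv()                  # the object arrives pickled and is rebuilt
    send_end.close()                           # no sender is left ...
    try:
        recv_end.recv()                        # ... so this raises instead of blocking
        saw_eof = False
    except EOFError:
        saw_eof = True
    finally:
        recv_end.close()
    return message, saw_eof


if __name__ == "__main__":
    print(one_way_demo())
```

In a real program the two ends usually live in different processes; EOFError is then only raised once every copy of the sending end, including the one held by the parent, has been closed.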

import os, time
from multiprocessing import Process, Pipe


def proc_send(p, urls):
    print("Process (%d) is sending..." % os.getpid())
    for url in urls:
        p.send(url)
        print('Send %s...' % url)
        time.sleep(0.1)
    # Tell the receiver that nothing more will arrive
    p.send(None)


def proc_recv(p):
    print("Process (%d) is receiving..." % (os.getpid()))
    while True:
        url = p.recv()
        if url is None:
            # End marker: leave the loop so that join() can return
            break
        print("Receive %s" % url)
        time.sleep(0.1)


if __name__ == '__main__':
    # Create the pipe in the parent process; p[0] and p[1] are its two ends
    p = Pipe()
    p1 = Process(target=proc_send, args=(p[0], ['张飞' + str(i) for i in range(3)]))
    p2 = Process(target=proc_recv, args=(p[1], ))
    p1.start()
    p2.start()
    p1.join()
    p2.join()

Output:

Process (14364) is sending...

Send 张飞0...

Process (14116) is receiving...

Receive 张飞0

Send 张飞1...

Receive 张飞1

Send 张飞2...

Receive 张飞2

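2 Multithreading

Multithreading is similar to running several different programs at once, and has the following advantages:

- Tasks can be handled in the background

- The user interface can be more engaging, for example with a progress bar

- The program may run faster

- For tasks that involve waiting, such as user input, file I/O, or sending and receiving network data, resources such as memory can be released while waiting.

The Python standard library provides two modules, _thread (named thread in Python 2) and threading. The latter wraps the former and is the one normally used.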

2.1 threading

Method 1: pass a function to a Thread instance, then call start() to run it:

import time, threading


def thread_run(urls):
    print("Current %s is running..." % threading.current_thread().name)
    for url in urls:
        print("%s --->>> %s" % (threading.current_thread().name, url))
        time.sleep(0.1)
    print("%s ended." % threading.current_thread().name)


if __name__ == '__main__':
    print("%s is running..." % threading.current_thread().name)
    t1 = threading.Thread(target=thread_run, name='t1', args=(['张飞', '关羽', '孙尚香'],))
    t2 = threading.Thread(target=thread_run, name='t2', args=(['赵云', '马超', '黄忠'],))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print("%s ended." % threading.current_thread().name)

Output:

MainThread is running...

Current t1 is running...

t1 --->>> 张飞

Current t2 is running...

t2 --->>> 赵云

t2 --->>> 马超t1 --->>> 关羽

t2 --->>> 黄忠t1 --->>> 孙尚香

t2 ended.

t1 ended.

MainThread ended.

Method 2: subclass threading.Thread and override the __init__ and run methods:

import time, threading


class myThread(threading.Thread):
    def __init__(self, name, urls):
        threading.Thread.__init__(self, name=name)
        self.urls = urls

    def run(self):
        print("Current %s is running..." % threading.current_thread().name)
        for url in self.urls:
            print("%s --->>> %s" % (threading.current_thread().name, url))
            time.sleep(0.1)
        print("%s ended." % threading.current_thread().name)


if __name__ == '__main__':
    print("%s is running..." % threading.current_thread().name)
    t1 = myThread(name='t1', urls=['张飞', '关羽', '孙尚香'])
    t2 = myThread(name='t2', urls=['赵云', '马超', '黄忠'])
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print("%s ended." % threading.current_thread().name)

Output:

MainThread is running...

Current t1 is running...

t1 --->>> 张飞Current t2 is running...

t2 --->>> 赵云

t1 --->>> 关羽

t2 --->>> 马超

t2 --->>> 黄忠

t1 --->>> 孙尚香

t2 ended.t1 ended.

MainThread ended.

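2.2 Thread synchronization

If several threads modify the same data, the result can be unpredictable. To keep the data correct, the threads need to be synchronized.

In detail:

1) The Lock and RLock objects of the threading module provide simple thread synchronization; both have acquire and release methods;

2) for data that only one thread may operate on at a time, place the operation between acquire and release;

3) with a Lock, a thread that calls acquire twice in a row deadlocks itself;

4) an RLock lets the same thread call acquire several times in a row, because it keeps an internal counter of acquisitions;

5) every acquire must be matched by a release;

6) other threads can obtain the RLock only after all of the matching release calls have completed.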

import threading

my_lock = threading.RLock()
num = 0


class myThread(threading.Thread):
    def __init__(self, name):
        threading.Thread.__init__(self, name=name)

    def run(self):
        global num
        while True:
            my_lock.acquire()
            print("%s locked, Number: %d" % (threading.current_thread().name, num))
            if num >= 4:
                my_lock.release()
                print("%s released, Number: %d" % (threading.current_thread().name, num))
                break
            num += 1
            print("%s released, Number: %d" % (threading.current_thread().name, num))
            my_lock.release()


if __name__ == '__main__':
    t1 = myThread('张飞先上')
    t2 = myThread("终于到赵云了")
    t1.start()
    t2.start()

Output:

张飞先上 locked, Number: 0

张飞先上 released, Number: 1

张飞先上 locked, Number: 1

张飞先上 released, Number: 2

张飞先上 locked, Number: 2

张飞先上 released, Number: 3

张飞先上 locked, Number: 3

张飞先上 released, Number: 4

张飞先上 locked, Number: 4

张飞先上 released, Number: 4

终于到赵云了 locked, Number: 4

终于到赵云了 released, Number: 4
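Two of the rules listed earlier in this section can be illustrated more compactly: pairing every acquire with a release (here via the with statement, which releases even when an exception occurs), and the difference between Lock and RLock on repeated acquisition. The function names below are invented for this sketch, and the re-acquisition test uses a non-blocking acquire so that the plain Lock case reports failure instead of deadlocking.

```python
import threading


def count_with_lock(n_threads, n_increments):
    """Increment a shared counter from several threads, guarded by a Lock."""
    lock = threading.Lock()
    total = 0

    def work():
        nonlocal total
        for _ in range(n_increments):
            with lock:            # acquire() on entry, release() on exit
                total += 1

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def can_reacquire(lock):
    """Return True if the thread that already holds `lock` can acquire it again."""
    lock.acquire()
    try:
        again = bool(lock.acquire(blocking=False))  # non-blocking: never hangs
        if again:
            lock.release()        # undo the second acquisition
        return again
    finally:
        lock.release()            # undo the first acquisition


if __name__ == "__main__":
    print(count_with_lock(4, 10000))
    print(can_reacquire(threading.Lock()))
    print(can_reacquire(threading.RLock()))
```

The counter always ends at n_threads * n_increments because only one thread at a time can be inside the with block.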

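2.3 Global interpreter lock (GIL)

CPython, the reference Python interpreter, has a GIL: while Python code is being interpreted, a mutex restricts threads' access to shared resources, so only one thread executes Python bytecode at a time. The running thread gives up the lock when it reaches an I/O operation or after it has run for a set amount (a fixed number of bytecode instructions in older versions, a time interval since Python 3.2).

Because of the GIL, multithreaded code cannot use multiple CPU cores, so multiprocessing is generally preferred for CPU-bound work.

For I/O-bound work, multithreading can still improve efficiency; web crawlers written in Python are a typical example.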
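The I/O-bound case can be imitated with time.sleep, which releases the GIL while it waits, much as a blocking network or file call would. The sketch below is only a stand-in for real I/O; fake_io, run_sequential and run_threaded are names made up for this example.

```python
import threading
import time


def fake_io(delay):
    """Stand-in for a blocking I/O call; the GIL is released during the sleep."""
    time.sleep(delay)


def run_sequential(n, delay):
    """Run n waits one after another and return the elapsed time in seconds."""
    start = time.perf_counter()
    for _ in range(n):
        fake_io(delay)
    return time.perf_counter() - start


def run_threaded(n, delay):
    """Run n waits in n threads and return the elapsed time in seconds."""
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_io, args=(delay,)) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print("sequential: %.2fs" % run_sequential(4, 0.2))
    print("threaded:   %.2fs" % run_threaded(4, 0.2))
```

The threaded run should take roughly one delay rather than four, because the waits overlap. Replacing the sleep with a pure-Python computation would remove most of that gain, which is the CPU-bound case described above.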

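3 Coroutines

A coroutine is also known as a micro-thread or fiber. A coroutine has its own register context and stack. When the scheduler switches away from it, the register context and stack are saved elsewhere; when it switches back, they are restored. A coroutine therefore keeps the state of its previous invocation: each time it is re-entered, it resumes from where it last left off.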
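Python generators give a small-scale view of this "resume where it left off" behaviour. The sketch below is an illustration chosen for this article rather than anything from the reference book: a generator-based coroutine that keeps a running average between calls to send().

```python
def running_average():
    """Coroutine that receives numbers via send() and yields their running average."""
    total = 0.0
    count = 0
    average = None
    while True:
        value = yield average     # pause here; resume when the caller sends a value
        total += value
        count += 1
        average = total / count


if __name__ == "__main__":
    avg = running_average()
    next(avg)                     # run up to the first yield ("priming" the coroutine)
    print(avg.send(10))           # 10.0
    print(avg.send(20))           # 15.0
    print(avg.send(30))           # 20.0
```

The local variables total and count survive between the send() calls, which is the preserved state the paragraph above describes.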

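Python offers basic, though incomplete, coroutine support through yield. The third-party library gevent provides a more complete set of features, chiefly:

- a fast event loop based on libev, which uses epoll on Linux;

- lightweight execution units based on greenlet;

- an API that reuses concepts from the Python standard library;

- cooperative sockets with SSL support;

- DNS queries through a thread pool or c-ares;

- monkey patching, which makes third-party modules cooperative.

A greenlet works as follows:

When an I/O operation such as a network request blocks, control switches to another greenlet that is not blocked; once the original operation is no longer blocked, control switches back to it automatically. This is also why coroutines are generally more efficient than threads for this kind of work: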

# For Python 2, replace urllib.request with urllib2
# Patch the standard library before importing modules that use sockets
from gevent import monkey; monkey.patch_all()
import gevent, urllib.request


def run_task(url):
    print("Visit ---> %s" % url)
    try:
        response = urllib.request.urlopen(url)
        data = response.read()
        print("%d bytes received from %s." % (len(data), url))
    except Exception as e:
        print(e)


if __name__ == '__main__':
    urls = ['https://www.zhihu.com/', 'https://www.python.org/', 'http://www.cnblogs.com/']
    # spawn: create a greenlet and schedule it to run
    # joinall: wait until all of the greenlets have finished
    greenlets = [gevent.spawn(run_task, url) for url in urls]
    gevent.joinall(greenlets)

Output:

Visit ---> https://www.zhihu.com/

Visit ---> https://www.python.org/

Visit ---> http://www.cnblogs.com/

48865 bytes received from https://www.zhihu.com/.

49254 bytes received from http://www.cnblogs.com/.

48959 bytes received from https://www.python.org/.

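gevent also supports pools. When a varying number of greenlets has to be managed with a cap on concurrency, a pool is the tool to use; this is essential when handling large numbers of network and I/O operations. The example below reworks the previous one with gevent's Pool object (sites such as GitHub are painfully slow from here at the moment):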

# Patch the standard library before importing modules that use sockets
from gevent import monkey; monkey.patch_all()
import urllib.request
from gevent.pool import Pool


def run_task(url):
    print("Visit ---> %s" % url)
    try:
        response = urllib.request.urlopen(url)
        data = response.read()
        print("%d bytes received from %s." % (len(data), url))
    except Exception as e:
        print(e)
    return "url: %s ---> finish" % url


if __name__ == '__main__':
    # At most two greenlets run at the same time
    pool = Pool(2)
    urls = ['https://www.zhihu.com/', 'https://blog.csdn.net/', 'http://www.cnblogs.com/']
    results = pool.map(run_task, urls)
    print(results)

Output:

Visit ---> https://www.zhihu.com/

Visit ---> https://blog.csdn.net/

49280 bytes received from https://www.zhihu.com/.

Visit ---> http://www.cnblogs.com/

301628 bytes received from https://blog.csdn.net/.

48766 bytes received from http://www.cnblogs.com/.

['url: https://www.zhihu.com/ ---> finish', 'url: https://blog.csdn.net/ ---> finish', 'url: http://www.cnblogs.com/ ---> finish']

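4 Distributed processes

Distributed processes spread Process instances across several machines, making full use of their combined capacity to complete complex tasks.

The managers submodule of multiprocessing supports distributing processes across machines. A server process can act as the scheduler, handing tasks out to the other processes and coordinating them over the network.

For example, when crawling all the images on a website with multiple processes, one process usually collects the image URLs and puts them on a Queue, while other processes read URLs from the Queue, then download and store the images. Making this distributed means that a process on one machine collects the links while processes on other machines download and store the images.

The main problem is how to expose the Queue over the network so that processes on other machines can reach it. Distributed processes wrap up exactly this step, which can be described as putting a local queue on the network. The whole flow is shown in the figure (taken from the book named in the acknowledgements).

Creating distributed processes takes two steps: creating the server process and creating the worker processes.

1) Creating the server process (taskManager.py):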

# coding:utf-8
from multiprocessing import Queue, freeze_support
from multiprocessing.managers import BaseManager

# Number of tasks
task_number = 10

# Queues for sending tasks and receiving results
task_queue = Queue(task_number)
result_queue = Queue(task_number)


def get_task():
    return task_queue


def get_result():
    return result_queue


class QueueManager(BaseManager):
    pass


def win_run():
    # On Windows a lambda cannot be registered; define functions first, then register them
    QueueManager.register('get_task_queue', callable=get_task)
    QueueManager.register('get_result_queue', callable=get_result)
    # Bind the port and set the auth key; Windows needs an explicit IP address,
    # while Linux defaults to the local machine
    manager = QueueManager(address=('127.0.0.1', 8001), authkey='qiye'.encode('utf-8'))
    # Start the manager
    manager.start()
    try:
        # Obtain the task queue and the result queue over the network
        task = manager.get_task_queue()
        result = manager.get_result_queue()
        # Add the tasks
        for url in ['张飞' + str(i) for i in range(10)]:
            print("Put task %s..." % url)
            task.put(url)
        print("Try get result...")
        for i in range(10):
            print("Result is %s" % result.get(timeout=10))
    except Exception as e:
        print("Manager error: %r" % e)
    finally:
        # Always shut down, otherwise a "pipe not closed" error is reported
        manager.shutdown()


if __name__ == '__main__':
    # Needed when the script is frozen into a Windows executable; harmless otherwise
    freeze_support()
    win_run()

2) Creating the worker process (taskWorker.py):

# coding:utf-8
import time
from multiprocessing.managers import BaseManager


class QueueManager(BaseManager):
    pass


def worker():
    # Step 1: register the names of the methods used to fetch the queues
    QueueManager.register('get_task_queue')
    QueueManager.register('get_result_queue')
    # Step 2: connect to the server
    server_addr = '127.0.0.1'
    print("Connect to server %s..." % server_addr)
    # The port and auth key must match the server process exactly
    m = QueueManager(address=(server_addr, 8001), authkey='qiye'.encode('utf-8'))
    # Connect over the network
    m.connect()
    # Step 3: obtain the Queue objects
    task = m.get_task_queue()
    result = m.get_result_queue()
    # Step 4: take tasks from the task queue and write results to the result queue
    while not task.empty():
        url = task.get(True, timeout=5)
        print("Run task download %s" % url)
        time.sleep(1)
        result.put('%s ---> success' % url)
    # Done
    print("Worker exit.")


if __name__ == '__main__':
    worker()

Run the server process first. Its output is:

Put task 张飞0...

Put task 张飞1...

Put task 张飞2...

Put task 张飞3...

Put task 张飞4...

Put task 张飞5...

Put task 张飞6...

Put task 张飞7...

Put task 张飞8...

Put task 张飞9...

Try get result...

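Then run the worker process. Its output is shown below (if you see ConnectionRefusedError: [WinError 10061] No connection could be made because the target machine actively refused it, just retry a few times):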

Connect to server 127.0.0.1...

Run task download 张飞0

Run task download 张飞1

Run task download 张飞2

Run task download 张飞3

Run task download 张飞4

Run task download 张飞5

Run task download 张飞6

Run task download 张飞7

Run task download 张飞8

Run task download 张飞9

Worker exit.

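While this runs, the server process also prints the results as successful, but they scrolled past too quickly for me to capture a screenshot w(゚Д゚)w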
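One caveat for the worker loop: with several workers, checking empty() and then calling get() can race, because another worker may take the last task in between. A possible variation, sketched here with plain in-process queues standing in for the manager proxies, is to call get() with a timeout and treat queue.Empty as the signal to stop. The name drain_tasks and its handle parameter are invented for this example, and the assumption that the manager proxies re-raise queue.Empty in the worker has not been verified against the setup above.

```python
import queue


def drain_tasks(task_queue, result_queue, handle, timeout=1.0):
    """Process tasks until none arrives within `timeout` seconds; return the count."""
    done = 0
    while True:
        try:
            item = task_queue.get(timeout=timeout)
        except queue.Empty:
            return done           # nothing left (or nothing arrived in time)
        result_queue.put(handle(item))
        done += 1


if __name__ == "__main__":
    # Plain queue.Queue objects are used here instead of the networked queues.
    tasks, results = queue.Queue(), queue.Queue()
    for i in range(3):
        tasks.put("task-%d" % i)
    print(drain_tasks(tasks, results, lambda t: "%s ---> success" % t, timeout=0.1))
    while not results.empty():
        print(results.get())
```

In taskWorker.py, the same loop would receive the task and result proxies obtained from the manager in place of the local queues.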

Acknowledgements

This article follows the book Python爬虫:开发与项目实战 by Fan Chuanhui (范传辉).