Kubernetes Advanced -- Helm, the Package Manager

Overview

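- Helm is the package manager for Kubernetes applications. It mainly manages Charts, much like yum on Linux, and runs as an application layer on top of Kubernetes.
- A Helm Chart is a set of YAML files that packages a Kubernetes-native application. You can customize some of the application's metadata when deploying it, which makes the application easy to distribute.
- For application publishers, Helm can package applications, manage their dependencies and versions, and publish them to a chart repository.
- For users, Helm removes the need to write complex deployment files: applications can be searched for, installed, upgraded, rolled back, and uninstalled on Kubernetes in a simple way.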

As we perform more and more operations, the number of YAML files keeps growing, and without good planning and management they quickly become hard to work with.

The biggest difference between Helm v3 and v2 is that Tiller has been removed:

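Helm now talks to the API server directly, using the kubeconfig file. Also, v3 ships with no repositories configured, so you have to add them yourself.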

Helm documentation: https://helm.sh/docs/intro/ (this walkthrough installs Helm v3.2.4).

Installation

[root@server2 helm]# ls

helm-push_0.8.1_linux_amd64.tar.gz helm-v3.2.4-linux-amd64.tar.gz linux-amd64

[root@server2 helm]# cd linux-amd64/

[root@server2 linux-amd64]# ls

helm LICENSE README.md

[root@server2 linux-amd64]# mv helm /usr/local/bin/

[root@server2 linux-amd64]# he

head helm help hexdump

[root@server2 ~]# echo "source <(helm completion bash)" >> ~/.bashrc

[root@server2 ~]# source ~/.bashrc

[root@server2 ~]# helm

completion dependency get install list plugin repo search status test upgrade version

create env history lint package pull rollback show template uninstall verify

Tab completion now works.
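The manual steps above can be wrapped in a small script so that repeating the setup on another node is safe. This is a minimal sketch. It assumes the tarball layout shown above, with a `linux-amd64/helm` binary inside the archive. The function names `install_helm_from_tarball` and `enable_helm_completion` are hypothetical helpers and are not part of Helm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a Helm release tarball and install the binary.
# Assumes the archive contains linux-amd64/helm, as helm-v3.2.4-linux-amd64.tar.gz does.
install_helm_from_tarball() {
  local tarball="$1"
  local bindir="${2:-/usr/local/bin}"   # target directory, /usr/local/bin by default
  local workdir
  workdir="$(mktemp -d)"
  tar -xzf "$tarball" -C "$workdir"
  install -m 0755 "$workdir/linux-amd64/helm" "$bindir/helm"
  rm -rf "$workdir"
}

# Hypothetical helper: add the completion hook to an rc file only once,
# so running the setup twice does not duplicate the line.
enable_helm_completion() {
  local rcfile="${1:-$HOME/.bashrc}"
  local hook='source <(helm completion bash)'
  touch "$rcfile"
  grep -qxF "$hook" "$rcfile" || echo "$hook" >> "$rcfile"
}
```

Usage: `install_helm_from_tarball helm-v3.2.4-linux-amd64.tar.gz && enable_helm_completion && source ~/.bashrc`.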

[root@server2 ~]# helm repo list

Error: no repositories to show /no repositories have been added yet

[root@server2 ~]# helm search hub redis /search the official Helm Hub

URL CHART VERSION APP VERSION DESCRIPTION

https://hub.helm.sh/charts/bitnami/redis 10.7.11 6.0.5 Open source, advanced key-value store. It is of...

https://hub.helm.sh/charts/bitnami/redis-cluster 3.1.5 6.0.5 Open source, advanced key-value store. It is of...

https://hub.helm.sh/charts/softonic/redis-sharded 0.1.2 5.0.8 A Helm chart for sharded redis

https://hub.helm.sh/charts/hmdmph/redis-pod-lab... 1.0.2 1.0.0 Labelling redis pods as master/slave periodical...

https://hub.helm.sh/charts/incubator/redis-cache 0.5.0 4.0.12-alpine A pure in-memory redis cache, using statefulset...

https://hub.helm.sh/charts/inspur/redis-cluster 0.0.2 5.0.6 Highly available Kubernetes implementation of R...

https://hub.helm.sh/charts/enapter/keydb 0.11.0 5.3.3 A Helm chart for KeyDB multimaster setup

https://hub.helm.sh/charts/stable/redis-ha 4.4.4 5.0.6 Highly available Kubernetes implementation of R...

https://hub.helm.sh/charts/stable/prometheus-re... 3.4.1 1.3.4 Prometheus exporter for Redis metrics

https://hub.helm.sh/charts/dandydeveloper/redis-ha 4.5.7 5.0.6 Highly available Kubernetes implementation of R...

https://hub.helm.sh/charts/hkube/redis-ha 3.6.1004 5.0.5 Highly available Kubernetes implementation of R...

https://hub.helm.sh/charts/hephy/redis v2.4.0 A Redis database for use inside a Kubernetes cl...

https://hub.helm.sh/charts/pozetron/keydb 0.5.1 v5.3.3 A Helm chart for multimaster KeyDB optionally w...

https://hub.helm.sh/charts/choerodon/redis 0.2.5 0.2.5 redis for Choerodon

We can also use a third-party repository, since the official one is rather slow.

Adding a third-party chart repository to Helm:

[root@server2 ~]# helm repo add stable http://mirror.azure.cn/kubernetes/charts/

"stable" has been added to your repositories

[root@server2 ~]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

[root@server2 ~]# helm search repo redis

NAME CHART VERSION APP VERSION DESCRIPTION

stable/prometheus-redis-exporter 3.4.1 1.3.4 Prometheus exporter for Redis metrics

stable/redis 10.5.7 5.0.7 DEPRECATED Open source, advanced key-value stor...

stable/redis-ha 4.4.4 5.0.6 Highly available Kubernetes implementation of R...

stable/sensu 0.2.3 0.28 Sensu monitoring framework backed by the Redis ...

Alibaba Cloud mirror: helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
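To make the repository setup repeatable, the mirrors above can be kept in one place and added in a loop. This is a minimal sketch in bash, which needs version 4 or later for associative arrays. `CHART_REPOS` and `add_chart_repos` are hypothetical names, and the URLs are the two mirrors mentioned above.

```shell
#!/usr/bin/env bash
# Hypothetical list of chart repositories used in this walkthrough (name -> URL).
declare -A CHART_REPOS=(
  [stable]="http://mirror.azure.cn/kubernetes/charts/"
  [aliyun]="https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts"
)

# Add every repository, then refresh the local index once at the end.
add_chart_repos() {
  local name
  for name in "${!CHART_REPOS[@]}"; do
    helm repo add "$name" "${CHART_REPOS[$name]}"
  done
  helm repo update
}
```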

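Deploying Redis with Helm:

Helm supports several ways of installing a chart (by default Helm reads ~/.kube/config to connect to the cluster):

helm install redis-ha stable/redis-ha /pull the chart directly from the network

This is not recommended on a poor network connection, because downloading the package is slow.

You can also install from a local chart path:

helm install redis-ha path/redis-ha /path to the chart directory

Or install from a chart archive hosted on the intranet:

helm install redis-ha https://example.com/charts/redis-ha-4.4.0.tgz

We can also pull the package to the local machine first and then install it: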

[root@server2 ~]# helm pull stable/redis-ha

[root@server2 ~]# ls

redis-ha-4.4.4.tgz

[root@server2 ~]# tar zxf redis-ha-4.4.4.tgz

[root@server2 ~]# cd redis-ha/

[root@server2 redis-ha]# ls

Chart.yaml ci OWNERS README.md templates values.yaml

These are essentially all YAML files. Every value a user is meant to set lives in values.yaml, and the files under templates reference those values directly.

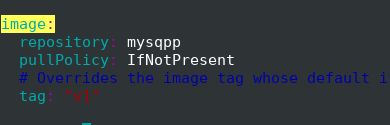

[root@server2 redis-ha]# vim values.yaml /the file has a lot of content

image:
  repository: redis /the image can be prepared in advance
  tag: 5.0.6-alpine
  pullPolicy: IfNotPresent
serviceAccount:
  create: true /whether to create a ServiceAccount; if true, the corresponding template is rendered
haproxy:
  enabled: false /disabled, so the rest of this section can be ignored
  # Enable if you want a dedicated port in haproxy for redis-slaves
  readOnly:
    enabled: false
    port: 6380
  replicas: 3
  image:
    repository: haproxy
  metrics:
    enabled: false /metrics collection, disabled
rbac: /authorization
  create: true
redis:
  port: 6379 /some of the Redis settings
  masterGroupName: "mymaster"

The file also contains resource limits (off), Sentinel (on), anti-affinity (on), the exporter (off), and persistent volume settings.

[root@server2 redis-ha]# helm install redis-ha .

NAME: redis-ha

LAST DEPLOYED: Sat Jul 11 13:25:38 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

[root@server2 ~]# helm status redis-ha /check the status

NAME: redis-ha

LAST DEPLOYED: Sat Jul 11 13:25:38 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

Redis can be accessed via port 6379 and Sentinel can be accessed via port 26379 on the following DNS name from within your cluster:

redis-ha.default.svc.cluster.local

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

kubectl exec -it redis-ha-server-0 sh -n default

2. Connect using the Redis CLI:

redis-cli -h redis-ha.default.svc.cluster.local

[root@server2 redis-ha]# kubectl get pod

NAME READY STATUS RESTARTS AGE

redis-ha-server-0 2/2 Running 0 70s

redis-ha-server-1 2/2 Running 0 61s

redis-ha-server-2 0/2 Pending 0 51s

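After installation, one pod stays Pending: anti-affinity forces each replica onto a different node, the master node carries a taint, and we have not set a toleration for it.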

[root@server2 ~]# helm uninstall redis-ha /uninstall

release "redis-ha" uninstalled

[root@server2 ~]# helm status redis-ha

Error: release: not found

With the release uninstalled, we disable anti-affinity in values.yaml and install again.

[root@server2 redis-ha]# vim values.yaml

[root@server2 redis-ha]# helm install redis-ha .

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

/these are hints from the chart's NOTES telling us what to do next

kubectl exec -it redis-ha-server-0 sh -n default /open a shell in the first Redis pod

2. Connect using the Redis CLI:

redis-cli -h redis-ha.default.svc.cluster.local /connect to the cluster with redis-cli


[root@server2 redis-ha]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

redis-ha-server-0 2/2 Running 0 13s

redis-ha-server-1 2/2 Running 0 7s

redis-ha-server-2 2/2 Running 0 7s /managed by a StatefulSet, so each pod has a unique identity

After reinstalling, all three pods are running.

[root@server2 redis-ha]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-redis-ha-server-0 Bound pvc-e412fb4e-7666-4ca4-83f1-7621e0d474f9 10Gi RWO managed-nfs-storage 11m

data-redis-ha-server-1 Bound pvc-78cb2920-5b88-4d50-b087-f14b4e486c97 10Gi RWO managed-nfs-storage 6m26s

data-redis-ha-server-2 Bound pvc-63c09e96-9161-4560-bc82-c83263237f72 10Gi RWO managed-nfs-storage 6m16s

[root@server2 redis-ha]# kubectl exec -it redis-ha-server-0 sh -n default

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

Defaulting container name to redis.

Use 'kubectl describe pod/redis-ha-server-0 -n default' to see all of the containers in this pod.

$ redis-cli -h redis-ha.default.svc.cluster.local /redis-ha is our Service; connections through its DNS name are load-balanced

redis-ha.default.svc.cluster.local:6379> info

# Replication

role:master /connected to the master node

connected_slaves:2

We can also connect by pod name:

$ redis-cli -h redis-ha-server-0

redis-ha-server-0:6379> info

# Replication

role:master

connected_slaves:2

min_slaves_good_slaves:2

$ redis-cli -h redis-ha-server-1.redis-ha

redis-ha-server-1.redis-ha:6379> info

# Replication

role:slave

master_host:10.99.95.146

master_port:6379

Data written on the master node is replicated to the other nodes:

$ redis-cli -h redis-ha-server-0.redis-ha

redis-ha-server-0.redis-ha:6379> set name linux /set a key

OK

redis-ha-server-0.redis-ha:6379> get name

"linux"

redis-ha-server-0.redis-ha:6379>

$ redis-cli -h redis-ha-server-1.redis-ha

redis-ha-server-1.redis-ha:6379> get name

"linux"

redis-ha-server-1.redis-ha:6379>

$ redis-cli -h redis-ha-server-2.redis-ha /both replicas return the value

redis-ha-server-2.redis-ha:6379> get name

"linux"

Thanks to the high-availability setup, Redis recovers from a shutdown:

$ redis-cli -h redis-ha-server-0

redis-ha-server-0:6379> SHUTDOWN /shut down the server

command terminated with exit code 137

[root@server2 redis-ha]# kubectl get pod

NAME READY STATUS RESTARTS AGE

redis-ha-server-0 2/2 Running 1 20m /restarted once

redis-ha-server-1 2/2 Running 0 20m

redis-ha-server-2 2/2 Running 0 20m

$ redis-cli -h redis-ha-server-0

redis-ha-server-0:6379> get name

"linux" /the value is kept because the data lives on a persistent volume

[root@server2 redis-ha]# helm uninstall redis-ha

release "redis-ha" uninstalled

These charts are written by others; we only need to adjust them.
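One way to adjust a downloaded chart without editing it is an override file passed with -f. For example, the anti-affinity change made earlier by editing values.yaml can live in its own small file, so the chart stays untouched and the change is easy to review. This is a sketch. It assumes the anti-affinity switch in this version of stable/redis-ha is the top-level key `hardAntiAffinity` (check the chart's values.yaml) and that the unpacked chart sits in ./redis-ha. `reinstall_redis_ha` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Override file containing only the keys we change. Assumes the chart names the
# anti-affinity switch "hardAntiAffinity" (verify against your values.yaml).
cat > redis-ha-values.yaml <<'EOF'
# Allow two replicas on one node, so a cluster with two schedulable workers
# and a tainted master can still place all 3 Redis pods.
hardAntiAffinity: false
EOF

# Hypothetical helper: remove any previous release, then install the unpacked
# chart with the override file layered over the chart's defaults.
reinstall_redis_ha() {
  helm uninstall redis-ha 2>/dev/null || true
  helm install redis-ha ./redis-ha -f redis-ha-values.yaml
}
```

For a single key, `helm install redis-ha ./redis-ha --set hardAntiAffinity=false` has the same effect. The file is easier to keep under version control.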

Building a Helm chart manually

If we want to write our own, we can create a Helm Chart:

[root@server2 ~]# helm create mychart

Creating mychart

[root@server2 ~]# ls

mychart

[root@server2 ~]# cd mychart/

[root@server2 mychart]# ls

charts Chart.yaml templates values.yaml

[root@server2 mychart]# tree .

.

|-- charts

|-- Chart.yaml

|-- templates

| |-- deployment.yaml

| |-- _helpers.tpl

| |-- hpa.yaml

| |-- ingress.yaml

| |-- NOTES.txt

| |-- serviceaccount.yaml

| |-- service.yaml

| `-- tests

| `-- test-connection.yaml

`-- values.yaml

Helm generates the directory skeleton for us automatically. Things to note:

Chart.yaml defines our versions:

[root@server2 mychart]# cat Chart.yaml

apiVersion: v2

name: mychart

description: A Helm chart for Kubernetes

type: application

version: 0.1.0 /chart version

appVersion: 1.16.0 /app version

[root@server2 mychart]# helm search repo redis /these are the CHART VERSION and APP VERSION columns shown here

NAME CHART VERSION APP VERSION DESCRIPTION

stable/prometheus-redis-exporter 3.4.1 1.3.4 Prometheus exporter for Redis metrics

As before, the values in values.yaml are referenced by the YAML files in the templates directory.

For example:

[root@server2 templates]# vim deployment.yaml

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

This is one line of that file.

vim values.yaml

It maps to image.repository and image.tag in values.yaml; if tag is empty, the chart's appVersion is used instead.
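To see how values flow into the templates without touching the cluster, the chart can be rendered locally with `helm template`. This is a sketch. The release name `demo` and the example tag are only placeholders, and `render_image_lines` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the chart locally and show only the image lines.
# An optional second argument overrides image.tag; if it is omitted, the
# template falls back to .Chart.AppVersion.
render_image_lines() {
  local chart_dir="${1:-./mychart}"
  local tag="${2:-}"
  local args=(template demo "$chart_dir")
  if [ -n "$tag" ]; then
    args+=(--set "image.tag=$tag")
  fi
  helm "${args[@]}" | grep 'image:'
}
```

Comparing `render_image_lines ./mychart` with `render_image_lines ./mychart v2` shows the tag switch from the appVersion fallback to the override.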

Next, check the chart for syntax and dependency problems:

[root@server2 ~]# helm lint mychart/

==> Linting mychart/

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

If there are no problems, we can package the chart.

[root@server2 ~]# helm package mychart/

Successfully packaged chart and saved it to: /root/mychart-0.1.0.tgz

[root@server2 ~]# ls

mychart-0.1.0.tgz

[root@server2 ~]# du -sh mychart-0.1.0.tgz

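4.0K mychart-0.1.0.tgz /a packaged chart is very small

Next, the chart has to go into a chart repository.

When installing Harbor, pass the --with-chartmuseum option to enable its chart repository. Harbor can also be deployed inside the Kubernetes cluster.

[root@server1 harbor]# ./install.sh --with-chartmuseum /enable the chart repository at install time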

[root@server2 ~]# cp /etc/docker/certs.d/reg.caoaoyuan.org/ca.crt /etc/pki/ca-trust/source/anchors/

[root@server2 ~]# update-ca-trust

This puts the Harbor CA certificate into the system-wide trust store and then updates the store.

[root@server2 ~]# helm repo add local https://reg.caoaoyuan.org/chartrepo/charts

"local" has been added to your repositories /the repository has been added

[root@server2 ~]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

local https://reg.caoaoyuan.org/chartrepo/charts

This version of Helm needs the helm-push plugin to upload charts; the feature is not built into Helm.

helm plugin install https://github.com/chartmuseum/helm-push /online installation

[root@server2 helm]# helm env

HELM_PLUGINS="/root/.local/share/helm/plugins" /the plugin directory

[root@server2 helm]# cd /root/.local/share/helm/plugins

-bash: cd: /root/.local/share/helm/plugins: No such file or directory

[root@server2 helm]# mkdir -p /root/.local/share/helm/plugins /create the directory if it does not exist

[root@server2 helm]# cd /root/.local/share/helm/plugins

[root@server2 plugins]# mkdir push

[root@server2 ~]# cd helm/

[root@server2 helm]# ls

helm-push_0.8.1_linux_amd64.tar.gz helm-v3.2.4-linux-amd64.tar.gz linux-amd64

Extract the plugin into the plugin directory:

[root@server2 helm]# tar zxf helm-push_0.8.1_linux_amd64.tar.gz -C /root/.local/share/helm/plugins/push/

[root@server2 helm]# cd /root/.local/share/helm/plugins/push/

[root@server2 push]# ls

bin LICENSE plugin.yaml
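The offline plugin installation above can be scripted. The script reads the plugin directory from the `HELM_PLUGINS="..."` line that `helm env` prints, instead of hard-coding /root/.local/share/helm/plugins. This is a sketch. `helm_plugins_dir` and `install_push_plugin` are hypothetical helpers, and the tarball is assumed to have the flat layout shown above (bin, LICENSE, plugin.yaml).

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the HELM_PLUGINS value from `helm env` output.
helm_plugins_dir() {
  helm env | sed -n 's/^HELM_PLUGINS="\(.*\)"$/\1/p'
}

# Hypothetical helper: install helm-push offline from a local tarball,
# mirroring the manual mkdir + tar steps above. The optional second argument
# overrides the plugin directory (helm is only queried when it is omitted).
install_push_plugin() {
  local tarball="$1"
  local plugins_dir="${2:-$(helm_plugins_dir)}"
  mkdir -p "$plugins_dir/push"
  tar -xzf "$tarball" -C "$plugins_dir/push"
}
```

Usage: `install_push_plugin helm-push_0.8.1_linux_amd64.tar.gz && helm plugin list`. The plugin list should then include push.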

[root@server2 ~]# mv mychart helm/

[root@server2 ~]# mv mychart-0.1.0.tgz helm/

[root@server2 helm]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

local https://reg.caoaoyuan.org/chartrepo/charts

[root@server2 helm]# helm push mychart-0.1.0.tgz local -uadmin -pcaoaoyuan

Pushing mychart-0.1.0.tgz to local...

Done.

The chart is now uploaded to the Harbor repository.
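The lint, package, and push steps can be chained so that a chart is only uploaded if it passes lint. This is a sketch. `chart_package_name` and `publish_chart` are hypothetical helpers. The credentials are read from `HARBOR_USER` and `HARBOR_PASS`, which are assumed variable names, so the password is not typed on the command line as in the transcript above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive "<name>-<version>.tgz" from Chart.yaml,
# which matches the file name `helm package` produces (mychart-0.1.0.tgz).
chart_package_name() {
  local chart_yaml="$1/Chart.yaml"
  local name version
  name="$(awk '/^name:/ {gsub(/"/, "", $2); print $2; exit}' "$chart_yaml")"
  version="$(awk '/^version:/ {gsub(/"/, "", $2); print $2; exit}' "$chart_yaml")"
  echo "${name}-${version}.tgz"
}

# Hypothetical helper: lint, package into the current directory, then push
# with the helm-push plugin to the given repository ("local" by default).
publish_chart() {
  local chart_dir="$1" repo="${2:-local}"
  helm lint "$chart_dir" || return 1
  helm package "$chart_dir" || return 1
  helm push "$(chart_package_name "$chart_dir")" "$repo" \
    -u "$HARBOR_USER" -p "$HARBOR_PASS"
}
```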

Updating

When the chart changes, for example a different image or image version, we can:

[root@server2 mychart]# vim values.yaml

tag: "v2"

[root@server2 mychart]# vim Chart.yaml

version: 0.2.0
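Both edits, the image tag in values.yaml and the chart version in Chart.yaml, can also be scripted. This is a sketch that uses GNU `sed -i`. `bump_chart` is a hypothetical helper. It assumes values.yaml has a single indented `tag:` line (image.tag), as in the chart generated by `helm create`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the chart version and the image tag in one step.
# Uses GNU sed -i (in-place edit).
bump_chart() {
  local chart_dir="$1" chart_version="$2" image_tag="$3"
  # Only the top-level "version:" line; "appVersion:" does not match the ^ anchor.
  sed -i "s/^version:.*/version: ${chart_version}/" "$chart_dir/Chart.yaml"
  # Assumes exactly one indented "tag:" key (image.tag); keeps its indentation.
  sed -i "s/^\([[:space:]]*\)tag:.*/\1tag: \"${image_tag}\"/" "$chart_dir/values.yaml"
}
```

Usage: `bump_chart ./mychart 0.2.0 v2`. As the `helm push --help` output shows, the version can also be overridden at push time with `--version`, which leaves Chart.yaml unchanged.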

[root@server2 mychart]# helm push --help

Helm plugin to push chart package to ChartMuseum

Examples:

$ helm push mychart-0.1.0.tgz chartmuseum # push .tgz from "helm package"

$ helm push . chartmuseum # package and push chart directory /package and upload in one step

$ helm push . --version="7c4d121" chartmuseum # override version in Chart.yaml

$ helm push . https://my.chart.repo.com # push directly to chart repo URL

[root@server2 mychart]# helm push . local -u admin -p caoaoyuan

Pushing mychart-0.2.0.tgz to local...

Done.

[root@server2 mychart]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

[root@server2 mychart]# helm search repo mychart

NAME CHART VERSION APP VERSION DESCRIPTION

local/mychart 0.2.0 1.16.0 A Helm chart for Kubernetes

After the update the chart can be found, and search shows the latest version by default.

[root@server2 mychart]# helm search repo mychart -l /list all versions

NAME CHART VERSION APP VERSION DESCRIPTION

local/mychart 0.2.0 1.16.0 A Helm chart for Kubernetes

local/mychart 0.1.0 1.16.0 A Helm chart for Kubernetes

Now we can deploy the application:

[root@server2 mychart]# helm install demo local/mychart

NAME: demo

LAST DEPLOYED: Sat Jul 11 17:53:48 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=mychart,app.kubernetes.io/instance=demo" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:80

[root@server2 mychart]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

demo-mychart-56cd5798c6-hpll7 1/1 Running 0 15s

[root@server2 mychart]# curl 10.244.141.228

Hello MyApp | Version: v2 | Pod Name

It is the latest version (v2).

Installing a specific version

[root@server2 mychart]# helm uninstall demo

release "demo" uninstalled

[root@server2 mychart]# helm install demo local/mychart --version 0.1.0

NAME: demo

[root@server2 mychart]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo-mychart-7574477f9c-vhqbz 0/1 Running 0 3s 10.244.141.231 server3

[root@server2 mychart]# curl 10.244.141.231

Hello MyApp | Version: v1 | Pod Name

Rolling update

[root@server2 mychart]# helm upgrade demo local/mychart

Release "demo" has been upgraded. Happy Helming!

NAME: demo

LAST DEPLOYED: Sat Jul 11 18:06:07 2020

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=mychart,app.kubernetes.io/instance=demo" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:80

[root@server2 mychart]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo-mychart-56cd5798c6-9pfvv 1/1 Running 0 10s 10.244.22.33 server4

demo-mychart-7574477f9c-vhqbz 0/1 Terminating 0 90s server3

[root@server2 mychart]# curl 10.244.22.33

Hello MyApp | Version: v2 | Pod Name

The release has been upgraded from v1 to v2.

Rollback

[root@server2 mychart]# helm history demo /view the release history

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Sat Jul 11 18:04:47 2020 superseded mychart-0.1.0 1.16.0 Install complete

2 Sat Jul 11 18:06:07 2020 deployed mychart-0.2.0 1.16.0 Upgrade complete

[root@server2 mychart]# helm rollback demo 1 /roll back to revision 1

Rollback was a success! Happy Helming!

[root@server2 mychart]# helm history demo

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Sat Jul 11 18:04:47 2020 superseded mychart-0.1.0 1.16.0 Install complete

2 Sat Jul 11 18:06:07 2020 superseded mychart-0.2.0 1.16.0 Upgrade complete

3 Sat Jul 11 18:09:03 2020 deployed mychart-0.1.0 1.16.0 Rollback to 1

[root@server2 mychart]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo-mychart-56cd5798c6-9pfvv 1/1 Terminating 0 3m9s 10.244.22.33 server4

demo-mychart-7574477f9c-jkn8g 1/1 Running 0 12s 10.244.141.232 server3

[root@server2 mychart]# curl 10.244.141.232

Hello MyApp | Version: v1 | Pod Name
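When you just want to undo the last change, the target revision can be taken from `helm history` instead of being looked up by hand. This is a sketch. `previous_revision` and `rollback_one` are hypothetical helpers. The parsing assumes the tabular history output shown above, where the revision is the first column and the first line is the header.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `helm history` table output on stdin and print
# the revision just before the newest one (the header line is skipped).
previous_revision() {
  awk 'NR > 1 { rev[n++] = $1 } END { if (n >= 2) print rev[n - 2] }'
}

# Hypothetical helper: roll a release back by one revision.
rollback_one() {
  local release="$1" rev
  rev="$(helm history "$release" --max 2 | previous_revision)"
  if [ -z "$rev" ]; then
    echo "no earlier revision for $release" >&2
    return 1
  fi
  helm rollback "$release" "$rev"
}
```

Fed the two-revision history shown above, `previous_revision` prints 1, so `rollback_one demo` runs the same `helm rollback demo 1` as the transcript.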

Uninstalling

[root@server2 mychart]# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

demo default 3 2020-07-11 18:09:03.765806922 +0800 CST deployed mychart-0.1.0 1.16.0

[root@server2 mychart]# helm uninstall demo

release "demo" uninstalled

[root@server2 mychart]# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

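Helm keeps track of every resource that belongs to the release, so a single uninstall removes them all.

In the same way, Helm can be used to deploy any application.

Deploying metrics-server with Helm

First, delete the metrics-server that is already running: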

[root@server2 mychart]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

metrics-server-7cdfcc6666-r2gkg 1/1 Running 2 22h

[root@server2 ~]# kubectl delete -f components.yaml

[root@server2 helm]# helm search repo metrics-server

NAME CHART VERSION APP VERSION DESCRIPTION

stable/metrics-server 2.11.1 0.3.6 Metrics Server is a cluster-wide aggregator of ...

[root@server2 helm]# helm pull stable/metrics-server

[root@server2 helm]# tar zxf metrics-server-2.11.1.tgz

[root@server2 helm]# cd metrics-server/

[root@server2 metrics-server]# vim values.yaml

We only need to change where the image is pulled from:

image:
  repository: metrics-server-amd64
  tag: v0.3.6

[root@server2 metrics-server]# kubectl create namespace metrics-server

namespace/metrics-server created

[root@server2 metrics-server]# helm install metrics . -n metrics-server /install into the given namespace

[root@server2 metrics-server]# kubectl get all -n metrics-server

NAME READY STATUS RESTARTS AGE

pod/metrics-metrics-server-fc6469c6f-dcwr2 1/1 Running 0 74s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/metrics-metrics-server ClusterIP 10.99.233.224 443/TCP 74s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/metrics-metrics-server 1/1 1 1 74s

NAME DESIRED CURRENT READY AGE

replicaset.apps/metrics-metrics-server-fc6469c6f 1 1 1 74s

[root@server2 metrics-server]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

server2 197m 9% 1262Mi 44%

server3 53m 5% 491Mi 25%

server4 66m 6% 701Mi 36%

[root@server2 metrics-server]# kubectl top pod -n metrics-server

NAME CPU(cores) MEMORY(bytes)

metrics-metrics-server-fc6469c6f-dcwr2 3m 11Mi

It runs normally and monitoring works. Quite simple.
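Since this is Helm v3.2.4, the separate `kubectl create namespace` step can be folded into the install with `--create-namespace`, which is available from Helm 3.2. The image change can be passed with `--set` instead of editing values.yaml. This is a sketch. `METRICS_ARGS` and `install_metrics_server` are hypothetical names, and the chart is assumed to be unpacked in ./metrics-server.

```shell
#!/usr/bin/env bash
# Flags for installing the unpacked stable/metrics-server chart:
# target namespace (created if missing, Helm >= 3.2) and the image override.
METRICS_ARGS=(
  --namespace metrics-server
  --create-namespace
  --set image.repository=metrics-server-amd64
  --set image.tag=v0.3.6
)

# Hypothetical helper: install the release named "metrics" as above.
install_metrics_server() {
  helm install metrics ./metrics-server "${METRICS_ARGS[@]}"
}
```

Usage: `install_metrics_server && kubectl top nodes`.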

Deploying nfs-client-provisioner with Helm

We deployed NFS provisioning earlier when covering storage. Now delete everything from that deployment and keep only the namespace:

[root@server2 vol]# ls

mysql nfs-client nginx pv1.yml pv2.yml pv3.yml vol1.yml

[root@server2 vol]# cd nfs-client/

[root@server2 nfs-client]# ls

class.yml deployment.yml pod.yml pvc.yml rbac.yml

[root@server2 nfs-client]# kubectl delete -f .

[root@server2 nfs-client]# kubectl get namespaces nfs-client-provisioner

NAME STATUS AGE

nfs-client-provisioner Active 5d6h

[root@server2 helm]# helm search repo nfs-client

NAME CHART VERSION APP VERSION DESCRIPTION

stable/nfs-client-provisioner 1.2.8 3.1.0 nfs-client is an automatic provisioner that use...

[root@server2 helm]# helm pull stable/nfs-client-provisioner

[root@server2 helm]# cd nfs-client-provisioner/

[root@server2 nfs-client-provisioner]# vim values.yaml

image:
  repository: nfs-client-provisioner
  tag: latest /change the image as before so it matches what is in our registry
  pullPolicy: IfNotPresent
nfs:
  server: 172.25.254.1 /details of the external NFS server
  path: /nfsdata
  mountOptions:

[root@server1 nfsdata]# showmount -e /NFS is already set up on server1

Export list for server1:

/nfsdata *

storageClass: /StorageClass
  create: true
  defaultClass: true /make it the default StorageClass
rbac: /authorization
  # Specifies whether RBAC resources should be created
  create: true
# If true, create & use Pod Security Policy resources
# https://kubernetes.io/docs/concepts/policy/pod-security-policy/
podSecurityPolicy:
  enabled: false

[root@server2 nfs-client-provisioner]# helm install nfs-client-provisioner . -n nfs-client-provisioner

NAME: nfs-client-provisioner

LAST DEPLOYED: Sat Jul 11 18:56:52 2020

NAMESPACE: nfs-client-provisioner

STATUS: deployed

REVISION: 1

TEST SUITE: None

[root@server2 nfs-client-provisioner]# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

[root@server2 nfs-client-provisioner]# helm list --all-namespaces

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

metrics metrics-server 1 2020-07-11 18:39:32.002511524 +0800 CST deployed metrics-server-2.11.1 0.3.6

nfs-client-provisioner nfs-client-provisioner 1 2020-07-11 18:56:52.798811488 +0800 CST deployed nfs-client-provisioner-1.2.8 3.1.0

The deployment is complete.
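The values changed above can also be kept in a separate override file, so the chart can be re-pulled or upgraded without repeating the edits. This is a sketch. It contains only the keys edited above (NFS server 172.25.254.1 exporting /nfsdata, and a default StorageClass). `install_nfs_provisioner` is a hypothetical helper, and the chart is assumed to be unpacked in ./nfs-client-provisioner.

```shell
#!/usr/bin/env bash
# Override file with only the values edited above.
cat > nfs-values.yaml <<'EOF'
image:
  repository: nfs-client-provisioner
  tag: latest
nfs:
  server: 172.25.254.1
  path: /nfsdata
storageClass:
  create: true
  defaultClass: true
EOF

# Hypothetical helper: install into the existing nfs-client-provisioner namespace.
install_nfs_provisioner() {
  helm install nfs-client-provisioner ./nfs-client-provisioner \
    -n nfs-client-provisioner -f nfs-values.yaml
}
```

Afterwards, `kubectl get storageclass` should list nfs-client marked as (default).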

[root@server2 nfs-client-provisioner]# cd ../..

[root@server2 ~]# cd vol/

[root@server2 vol]# ls

mysql nfs-client nginx pv1.yml pv2.yml pv3.yml vol1.yml

[root@server2 vol]# cd nfs-client/

[root@server2 nfs-client]# ls

class.yml deployment.yml pod.yml pvc.yml rbac.yml

[root@server2 nfs-client]# vim pvc.yml

[root@server2 nfs-client]# kubectl apply -f pvc.yml

persistentvolumeclaim/pvc1 created

[root@server2 nfs-client]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Bound pvc-c204d6a1-972c-41b9-befe-f6342fe9a3fe 1G RWX nfs-client 7s

/the PVC is Bound

It works.
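The contents of pvc.yml are not shown above. Below is a sketch of a claim consistent with the `kubectl get pvc` output (name pvc1, ReadWriteMany, 1G, class nfs-client). It is reconstructed from that output, so the author's actual file may differ.

```shell
#!/usr/bin/env bash
# Reconstructed from the `kubectl get pvc` output above; the original file may differ.
cat > pvc.yml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  storageClassName: nfs-client   # optional once nfs-client is the default class
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1G
EOF
```

Usage: `kubectl apply -f pvc.yml && kubectl get pvc pvc1`.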

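Deploying Kubeapps

Kubeapps provides a web UI for managing Helm applications, which is especially useful when many applications are deployed.

First add a third-party repository: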

[root@server2 nfs-client]# helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

[root@server2 nfs-client]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

local https://reg.caoaoyuan.org/chartrepo/charts

bitnami https://charts.bitnami.com/bitnami

[root@server2 nfs-client]# helm search repo kubeapps

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami/kubeapps 3.7.2 v1.10.2 Kubeapps is a dashboard for your Kubernetes clu...

[root@server2 nfs-client]# helm pull bitnami/kubeapps

Deploying this application is more involved because it has dependencies:

[root@server2 kubeapps]# ls

charts Chart.yaml crds README.md requirements.lock requirements.yaml templates values.schema.json values.yaml

[root@server2 kubeapps]# cat requirements.yaml

dependencies:
- name: mongodb
  version: "> 7.10.2"
  repository: https://charts.bitnami.com/bitnami
  condition: mongodb.enabled
- name: postgresql
  version: ">= 0"
  repository: https://charts.bitnami.com/bitnami

Even so, we start from values.yaml:

[root@server2 kubeapps]# vim values.yaml

global:

imageRegistry: reg.caoaoyuan.org /global image registry

useHelm3: true /we are using Helm 3

allowNamespaceDiscovery: true

ingress:

enabled: true /expose the UI through Ingress

certManager: false

hostname: kubeapps.caoaoyuan.org /set the hostname

Since the ingress controller was deployed on server4 earlier, add a hosts entry on the physical host:

172.25.254.4 server4 kubeapps.caoaoyuan.org

image:

registry: docker.io /overridden by the global setting above

repository: bitnami/nginx /create a bitnami project in Harbor and push all required images there

tag: 1.18.0-debian-10-r38

mongodb:

## Whether to deploy a mongodb server to satisfy the applications database requirements.

enabled: false /disable the MongoDB database

postgresql:

## Whether to deploy a postgresql server to satisfy the applications database requirements.

enabled: true /a database is required

[root@server2 kubeapps]# kubectl create namespace kubeapps

namespace/kubeapps created

Next, configure the PostgreSQL dependency:

[root@server2 kubeapps]# cd charts/ /it lives in the charts directory

[root@server2 charts]# ls

mongodb postgresql

[root@server2 charts]# cd postgresql/

[root@server2 postgresql]# ls

Chart.yaml ci files README.md templates values-production.yaml values.schema.json values.yaml

[root@server2 postgresql]# vim values.yaml

global:
  postgresql: {}
  imageRegistry: reg.caoaoyuan.org /where images are pulled from
  # imagePullSecrets:
  #   - myRegistryKeySecretName
  # storageClass: myStorageClass
## Bitnami PostgreSQL image version
## ref: https://hub.docker.com/r/bitnami/postgresql/tags/
##
image:
  registry: docker.io
  repository: bitnami/postgresql /this image also needs to be pushed to Harbor
  tag: 11.8.0-debian-10-r19

Now we can install:

[root@server2 kubeapps]# helm install kubeapps . -n kubeapps

[root@server2 kubeapps]# kubectl get pod -n kubeapps

NAME READY STATUS RESTARTS AGE

kubeapps-5f74b67cb6-955tr 0/1 ContainerCreating 0 69s

kubeapps-5f74b67cb6-rjc7j 0/1 ContainerCreating 0 69s

kubeapps-internal-apprepository-controller-86f5f4f977-zhm2t 0/1 ContainerCreating 0 69s

kubeapps-internal-assetsvc-6d549656f9-8897d 0/1 ContainerCreating 0 69s

kubeapps-internal-assetsvc-6d549656f9-zfwrp 0/1 ContainerCreating 0 69s

kubeapps-internal-dashboard-56897bb684-5w5lx 0/1 ContainerCreating 0 69s

kubeapps-internal-dashboard-56897bb684-htlbx 1/1 Running 0 69s

kubeapps-internal-kubeops-6d88f8bd7f-dpc9d 1/1 Running 0 69s

kubeapps-internal-kubeops-6d88f8bd7f-dwbq7 1/1 Running 0 69s

kubeapps-postgresql-master-0 1/1 Running 0 69s

kubeapps-postgresql-slave-0 0/1 Running 0 69s

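Now the web UI can be accessed.

Click the "click here" link in the middle of the page; it tells us to create a user and grant it permissions.

Create a service account and bind it to the cluster-admin role: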

[root@server2 kubeapps]# kubectl create serviceaccount kubeapps-operator -n kubeapps

serviceaccount/kubeapps-operator created

[root@server2 kubeapps]# kubectl create clusterrolebinding kubeapps-operator --clusterrole=cluster-admin --serviceaccount=kubeapps:kubeapps-operator

clusterrolebinding.rbac.authorization.k8s.io/kubeapps-operator created

[root@server2 kubeapps]# kubectl get clusterrolebindings.rbac.authorization.k8s.io | grep kubeapps

kubeapps-operator ClusterRole/cluster-admin 31s

Get the token:

[root@server2 kubeapps]# kubectl -n kubeapps get secrets

NAME TYPE DATA AGE

default-token-jfrhs kubernetes.io/service-account-token 3 22m

kubeapps-db Opaque 2 12m

kubeapps-internal-apprepository-controller-token-t7f7z kubernetes.io/service-account-token 3 12m

kubeapps-internal-apprepository-job-postupgrade-token-g2hz6 kubernetes.io/service-account-token 3 12m

kubeapps-internal-kubeops-token-2zjdp kubernetes.io/service-account-token 3 12m

kubeapps-operator-token-xgb9r kubernetes.io/service-account-token 3 5m58s

sh.helm.release.v1.kubeapps.v1 helm.sh/release.v1 1 12m

[root@server2 kubeapps]# kubectl describe secrets -n kubeapps kubeapps-operator-token-xgb9r

Name: kubeapps-operator-token-xgb9r

Namespace: kubeapps

Labels:

Annotations: kubernetes.io/service-account.name: kubeapps-operator

kubernetes.io/service-account.uid: bdfcafe4-1527-416e-9e3b-b20138c40fcd

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 8 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlBFMlhOZUItQ1d4c2NKNVNUdi1xY1o5WDA2ekJocFN3OU1FNW5URHVweXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlYXBwcyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlYXBwcy1vcGVyYXRvci10b2tlbi14Z2I5ciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlYXBwcy1vcGVyYXRvciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImJkZmNhZmU0LTE1MjctNDE2ZS05ZTNiLWIyMDEzOGM0MGZjZCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlYXBwczprdWJlYXBwcy1vcGVyYXRvciJ9.kThxEZtbv39bU26f9GZofOjGemQHEmFy2CVltszx0KhLEyZAKTh_eL8sV40PFrmguMN1Vgmp8JDpUchlLhSnIhM3Oxb6sv1wEqkAqJPHDB8fTpVMMDIXaevVW3EPYq7_L4Bc30mNP9ov9YMsGfK3sdZrwLjMUBKpvpFFWkQzxpLZEcCGzRiKEvwzPM61QVLUTNS2wvZB0gHvYOEmT7xvl-ZkwSVfU3d_pYGp1DEevi0W8M7BVztyEGeO0ip4EzIIEnI_L709g7zZiV9YZ_lNoLS4wyjc8ZtdEkxGtAH_4GftRQTiA5G2utfkEetQVDGrGzSUR2DE1qlwHLeyVayoBg
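Instead of listing the secrets and copying the generated name, the token can be fetched in one step. This is a sketch. `get_sa_token` is a hypothetical helper. It assumes the cluster still auto-creates a token secret for each ServiceAccount, which is the case on the pre-1.24 Kubernetes version used here and is why `kubeapps-operator-token-xgb9r` exists above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded bearer token of a ServiceAccount.
# Assumes the ServiceAccount lists its auto-created token secret (Kubernetes < 1.24).
get_sa_token() {
  local ns="${1:-kubeapps}" sa="${2:-kubeapps-operator}" secret
  secret="$(kubectl -n "$ns" get serviceaccount "$sa" \
    -o jsonpath='{.secrets[0].name}')" || return 1
  kubectl -n "$ns" get secret "$secret" \
    -o jsonpath='{.data.token}' | base64 --decode
  echo
}
```

Usage: `get_sa_token kubeapps kubeapps-operator`, then paste the output into the Kubeapps login page.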

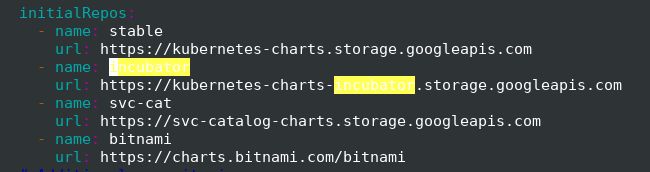

[root@server2 kubeapps]# vim values.yaml

These are the initial repositories Kubeapps configures at installation time. We delete them all and add our own third-party repositories:

[root@server2 kubeapps]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

local https://reg.caoaoyuan.org/chartrepo/charts

bitnami https://charts.bitnami.com/bitnami

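Our local repository requires authentication, and its CA certificate has to be supplied as well. On submitting, however, Kubeapps reports that reg.caoaoyuan.org cannot be resolved, so we need to: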

[root@server2 kubeapps]# kubectl -n kube-system edit cm coredns

apiVersion: v1

data:

Corefile: ".:53 {\n errors\n health {\n lameduck 5s\n }\n ready\n

\ hosts {\n 172.25.254.1 reg.westos.org 172.25.254.2 server2\n 172.25.254.3 server3\n 172.25.254.4

server4\n fallthrough \n

This adds the name resolution to the cluster's DNS.

After that, the repository can be submitted successfully.
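The escaped Corefile string above is hard to read. The sketch below writes out, in plain form, the hosts block it is meant to contain. The name listed must be the one used in the repository URL (reg.caoaoyuan.org), whereas the transcript above shows reg.westos.org, so use your registry's actual name. Mapping it to 172.25.254.1 assumes Harbor runs on server1, where it was installed. `COREDNS_HOSTS_BLOCK` and `check_registry_dns` are hypothetical names. busybox:1.28 is an assumed image choice, commonly used for in-cluster nslookup checks.

```shell
#!/usr/bin/env bash
# Plain-form hosts block for the CoreDNS Corefile.
# Assumption: Harbor (reg.caoaoyuan.org) runs on server1, 172.25.254.1.
COREDNS_HOSTS_BLOCK="$(cat <<'EOF'
hosts {
    172.25.254.1 reg.caoaoyuan.org
    172.25.254.2 server2
    172.25.254.3 server3
    172.25.254.4 server4
    fallthrough
}
EOF
)"

# Hypothetical helper: resolve a name from inside a throwaway pod.
check_registry_dns() {
  kubectl run dns-test --rm -it --restart=Never --image=busybox:1.28 \
    -- nslookup "${1:-reg.caoaoyuan.org}"
}
```

The default kubeadm Corefile includes the reload plugin, so CoreDNS usually picks up the edited ConfigMap by itself after a short delay. If it does not, `kubectl -n kube-system rollout restart deployment coredns` restarts it.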

Every operation in Kubeapps is actually carried out by pods in Kubernetes:

[root@server2 kubeapps]# kubectl get pod -n kubeapps

NAME READY STATUS RESTARTS AGE

apprepo-kubeapps-cleanup-bitnami-sgm5b-db4k7 0/1 Completed 0 14m

apprepo-kubeapps-cleanup-incubator-k84z9-tkfcv 0/1 Completed 0 14m

apprepo-kubeapps-cleanup-stable-7b25w-gwgvz 0/1 Completed 0 14m

apprepo-kubeapps-cleanup-svc-cat-qbvbp-f5lst 0/1 Completed 0 14m /cleanup jobs for the repositories we just deleted

apprepo-kubeapps-sync-local-jj9nf-dbgpw 0/1 CrashLoopBackOff 4 2m54s /failed because the name could not be resolved at that point

apprepo-kubeapps-sync-stable-1594471800-lnj6q 0/1 Completed 0 2m52s

apprepo-kubeapps-sync-stable-rnpbm-fcfjb 0/1 Completed 0 11m

[root@server2 kubeapps]# kubectl -n kubeapps get pod

NAME READY STATUS RESTARTS AGE

apprepo-kubeapps-cleanup-bitnami-sgm5b-db4k7 0/1 Completed 0 30m

apprepo-kubeapps-cleanup-incubator-k84z9-tkfcv 0/1 Completed 0 30m

apprepo-kubeapps-cleanup-local-rq754-vgchz 0/1 Completed 0 6m58s

apprepo-kubeapps-cleanup-stable-7b25w-gwgvz 0/1 Completed 0 30m

apprepo-kubeapps-cleanup-svc-cat-qbvbp-f5lst 0/1 Completed 0 29m

apprepo-kubeapps-sync-local-g7mww-jqc6m 0/1 Completed 3 68s /the local repository has synced

apprepo-kubeapps-sync-local-s9mx2-j27r9 0/1 Completed 3 65s

apprepo-kubeapps-sync-stable-1594471800-lnj6q 0/1 Completed 0 18m /the added Azure mirror (stable) has synced too

apprepo-kubeapps-sync-stable-1594472400-bpngf 0/1 Completed 0 8m41s

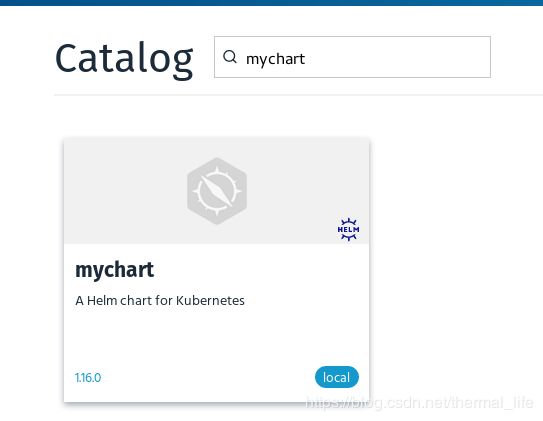

Our local chart still cannot be found.

Refresh the local repository.

Now it shows up.

[root@server2 kubeapps]# kubectl -n kubeapps get pod

NAME READY STATUS RESTARTS AGE

apprepo-kubeapps-sync-local-1594473000-zsd5x 0/1 Completed 0 2m28s

apprepo-kubeapps-sync-local-g7mww-jqc6m 0/1 Completed 3 4m57s

apprepo-kubeapps-sync-local-j45dk-ps629 0/1 Completed 0 7s

apprepo-kubeapps-sync-local-s9mx2-j27r9 0/1 Completed 3 4m54s

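Behind the scenes, Kubeapps started another pod to perform the sync.

Deploy version 0.2.0 into the default namespace by clicking Deploy.

The values YAML is generated automatically, and we can edit it there.

We can also choose to create a new namespace to run the application in.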

[root@server2 kubeapps]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

aom-mychart-766d5887b6-ftvll 1/1 Running 0 78s 10.244.22.59 server4

[root@server2 kubeapps]# curl 10.244.22.59

Hello MyApp | Version: v2 | Pod Name

[root@server2 kubeapps]# kubectl get pod

NAME READY STATUS RESTARTS AGE

aom-mychart-674f7f5d79-lrh2c 1/1 Running 0 9s

aom-mychart-766d5887b6-ftvll 1/1 Terminating 0 2m52s

[root@server2 kubeapps]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

aom-mychart-674f7f5d79-lrh2c 1/1 Running 0 12s 10.244.141.193 server3

[root@server2 kubeapps]# curl 10.244.141.193

Hello MyApp | Version: v1 | Pod Name

aom-mychart-766d5887b6-nj4ll 1/1 Running 0 22s 10.244.22.60 server4

[root@server2 kubeapps]# curl 10.244.22.60

Hello MyApp | Version: v2 | Pod Name

[root@server2 kubeapps]#

It can also be used together with Ingress:

affinity: {}
autoscaling:
  enabled: false
  maxReplicas: 100
  minReplicas: 1
  targetCPUUtilizationPercentage: 80
fullnameOverride: ""
image:
  pullPolicy: IfNotPresent
  repository: myapp
  tag: v1
imagePullSecrets: []
ingress:
  annotations: {}
  enabled: true /enable Ingress
  hosts:
  - host: www1.westos.org /the hostname to route
    paths: [/]
  tls: false /disable TLS

[root@server2 kubeapps]# kubectl get pod

NAME READY STATUS RESTARTS AGE

ultra-support-mychart-c9f445f49-s7mkz 0/1 ContainerCreating 0 2s

[root@server2 kubeapps]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ultra-support-mychart www1.westos.org 80

[root@rhel7host k8s]# curl www1.westos.org

Hello MyApp | Version: v1 | Pod Name

The hostname resolves and the application responds.

After upgrading:

[root@rhel7host k8s]# curl www1.westos.org

Hello MyApp | Version: v2 | Pod Name

The same hostname now serves the upgraded version. Very convenient.
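The Ingress settings entered in the Kubeapps editor can also be applied from the command line. This is a sketch under several assumptions. The release is assumed to be named ultra-support, inferred from the pod and Ingress names above, which Kubeapps generated. The chart is assumed to be local/mychart. `INGRESS_ARGS` and `enable_ingress` are hypothetical names. As with Kubeapps, the client still needs www1.westos.org to resolve to the node running the ingress controller.

```shell
#!/usr/bin/env bash
# --set flags mirroring the ingress values edited in Kubeapps.
# Brackets are quoted so the shell does not treat them as glob patterns.
INGRESS_ARGS=(
  --set ingress.enabled=true
  --set 'ingress.hosts[0].host=www1.westos.org'
  --set 'ingress.hosts[0].paths[0]=/'
)

# Hypothetical helper: upgrade a release of local/mychart with the flags above,
# keeping the values already set on the release (--reuse-values).
enable_ingress() {
  helm upgrade "${1:-ultra-support}" local/mychart --reuse-values "${INGRESS_ARGS[@]}"
}
```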