Kubernetes: Deploying and Using Helm (Deploying an NFS StorageClass, Deploying nginx-ingress, Upgrading and Rolling Back)


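- 1. Introduction to Helm

- 2. Installing Helm

- 3. Deploying applications with Helm

- 3.1 Deploying Redis

- 3.2 Creating your own chart

- 3.3 Upgrading and rolling back

- 3.4 Deploying an NFS StorageClass

- 3.5 Deploying nginx-ingress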

1. Introduction to Helm

Helm is a package manager for Kubernetes developed by Deis (https://deis.com/). Each package is called a Chart, and a Chart is a directory. Helm plays the same role that yum plays on Linux.

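A Helm chart is a collection of YAML files that packages a native Kubernetes application. Parts of the application's metadata can be customized at deployment time, which makes the application easier to distribute.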

For application publishers, Helm can package applications, manage their dependencies and versions, and publish them to chart repositories.

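For users, Helm removes the need to write complex deployment manifests: applications can be found, installed, upgraded, rolled back, and uninstalled on Kubernetes with simple commands.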

At the time of writing, the latest Helm release is v3.2.0. Official documentation: https://helm.sh/docs/intro/

2. Installing Helm

This walkthrough uses the Helm v3.2.0 release archive helm-v3.2.0-linux-amd64.tar.gz:

[root@server2 ~]# tar zxf helm-v3.2.0-linux-amd64.tar.gz

[root@server2 ~]# cd linux-amd64/

[root@server2 linux-amd64]# ls

helm LICENSE README.md

[root@server2 linux-amd64]# cp helm /usr/local/bin/
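The manual download, unpack, and copy steps above can be wrapped in a small script. This is a minimal sketch, assuming the official download host get.helm.sh and the archive layout seen above (a linux-amd64/ directory containing the helm binary); helm_archive_url and install_helm are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers mirroring the manual install above.

# helm_archive_url VERSION OS ARCH: URL of a Helm release archive
# (assumes the official get.helm.sh download host).
helm_archive_url() {
  printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$1" "$2" "$3"
}

# install_helm VERSION [DEST]: download, unpack, and copy the helm binary to DEST
# (default /usr/local/bin, which needs root, as in the transcript).
install_helm() {
  local version="$1" dest="${2:-/usr/local/bin}" tmp rc
  tmp="$(mktemp -d)" || return 1
  curl -fsSL "$(helm_archive_url "$version" linux amd64)" -o "$tmp/helm.tar.gz" &&
    tar zxf "$tmp/helm.tar.gz" -C "$tmp" &&
    cp "$tmp/linux-amd64/helm" "$dest/"
  rc=$?
  rm -rf "$tmp"    # always clean up the temporary directory
  return "$rc"
}
```

Running `install_helm v3.2.0` as root and then `helm version` should report the installed release.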

Enable bash command completion:

[kubeadm@server2 ~]$ echo "source <(helm completion bash)" >> ~/.bashrc

Log in again for the change to take effect:

[root@server2 ~]# su - kubeadm

Last login: Mon May 11 09:16:14 CST 2020 on pts/1

Last failed login: Mon May 11 11:38:46 CST 2020 on pts/1

There was 1 failed login attempt since the last successful login.

[kubeadm@server2 ~]$ helm

completion dependency get install list plugin repo search status test upgrade version

create env history lint package pull rollback show template uninstall verify

Add a third-party chart repository to Helm:

[kubeadm@server2 ~]$ helm repo add stable http://mirror.azure.cn/kubernetes/charts/

"stable" has been added to your repositories

[kubeadm@server2 ~]$ helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

[kubeadm@server2 ~]$ helm search repo redis

NAME CHART VERSION APP VERSION DESCRIPTION

stable/prometheus-redis-exporter 3.4.0 1.3.4 Prometheus exporter for Redis metrics

stable/redis 10.5.7 5.0.7 DEPRECATED Open source, advanced key-value stor...

stable/redis-ha 4.4.4 5.0.6 Highly available Kubernetes implementation of R...

stable/sensu 0.2.3 0.28 Sensu monitoring framework backed by the Redis ...

3. Deploying applications with Helm

[kubeadm@server2 ~]$ helm search repo redis-ha -l # -l lists every available version of redis-ha

NAME CHART VERSION APP VERSION DESCRIPTION

stable/redis-ha 4.4.4 5.0.6 Highly available Kubernetes implementation of R...

stable/redis-ha 4.4.3 5.0.6 Highly available Kubernetes implementation of R...

stable/redis-ha 4.4.2 5.0.6 Highly available Kubernetes implementation of R...

stable/redis-ha 4.4.1 5.0.6 Highly available Kubernetes implementation of R...

stable/redis-ha 4.4.0 5.0.6 Highly available Kubernetes implementation of R...

stable/redis-ha 4.3.4 5.0.6 Highly available Kubernetes implementation of R...

[kubeadm@server2 ~]$ helm pull stable/redis-ha # download the chart locally; the latest version is pulled by default

[kubeadm@server2 ~]$ ls # the redis-ha chart archive has been downloaded

components.yaml dashrbac.yaml kube-flannel.yml manifest recommended.yaml redis-ha-4.4.4.tgz

[kubeadm@server2 ~]$ mkdir helm

[kubeadm@server2 ~]$ tar zxf redis-ha-4.4.4.tgz -C helm/ # extract into the helm directory

[kubeadm@server2 ~]$ cd helm/redis-ha/

[kubeadm@server2 redis-ha]$ sudo yum install tree -y

[kubeadm@server2 redis-ha]$ tree . # show the chart's directory structure

.

├── Chart.yaml

├── ci

│ └── haproxy-enabled-values.yaml

├── OWNERS

├── README.md

├── templates

│ ├── _configs.tpl

│ ├── _helpers.tpl

│ ├── NOTES.txt

│ ├── redis-auth-secret.yaml

│ ├── redis-ha-announce-service.yaml

│ ├── redis-ha-configmap.yaml

│ ├── redis-ha-exporter-script-configmap.yaml

│ ├── redis-ha-pdb.yaml

│ ├── redis-haproxy-deployment.yaml

│ ├── redis-haproxy-serviceaccount.yaml

│ ├── redis-haproxy-servicemonitor.yaml

│ ├── redis-haproxy-service.yaml

│ ├── redis-ha-rolebinding.yaml

│ ├── redis-ha-role.yaml

│ ├── redis-ha-serviceaccount.yaml

│ ├── redis-ha-servicemonitor.yaml

│ ├── redis-ha-service.yaml

│ ├── redis-ha-statefulset.yaml

│ └── tests

│ ├── test-redis-ha-configmap.yaml

│ └── test-redis-ha-pod.yaml

└── values.yaml

3 directories, 25 files

3.1 Deploying Redis

[kubeadm@server2 redis-ha]$ vim values.yaml

image:
  repository: redis
  tag: 5.0.6-alpine
  pullPolicy: IfNotPresent
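Instead of editing the chart's values.yaml in place, the overrides can be kept in a separate file and passed to helm install with -f (--values). Helm merges that file over the chart defaults, so the unpacked chart stays untouched. A minimal sketch; the file name redis-ha-values.yaml is an assumption.

```shell
#!/usr/bin/env bash
# Keep local overrides in their own file (hypothetical name) instead of editing
# the chart's values.yaml; only the keys listed here replace the chart defaults.
cat > redis-ha-values.yaml <<'EOF'
image:
  repository: redis
  tag: 5.0.6-alpine
  pullPolicy: IfNotPresent
EOF

# Then install from the unpacked chart directory with the override file:
#   helm install redis-ha . -n redis -f redis-ha-values.yaml
```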

The chart needs the redis:5.0.6-alpine image, so pull it and push it to the private registry:

[root@server1 harbor]# docker pull redis:5.0.6-alpine

[root@server1 harbor]# docker tag redis:5.0.6-alpine reg.westos.org/library/redis:5.0.6-alpine

[root@server1 harbor]# docker push reg.westos.org/library/redis

[kubeadm@server2 redis-ha]$ kubectl create namespace redis # create a namespace named redis

namespace/redis created

[kubeadm@server2 redis-ha]$ helm install redis-ha . -n redis # release name redis-ha, chart from the current directory (.), -n redis selects the redis namespace

NAME: redis-ha

LAST DEPLOYED: Mon May 11 13:04:20 2020

NAMESPACE: redis

STATUS: deployed

REVISION: 1

NOTES:

Redis can be accessed via port 6379 and Sentinel can be accessed via port 26379 on the following DNS name from within your cluster:

redis-ha.redis.svc.cluster.local

To connect to your Redis server:

1. Run a Redis pod that you can use as a client:

kubectl exec -it redis-ha-server-0 sh -n redis

2. Connect using the Redis CLI:

redis-cli -h redis-ha.redis.svc.cluster.local

[kubeadm@server2 redis-ha]$ kubectl -n redis get all # redis-ha is deployed

NAME READY STATUS RESTARTS AGE

pod/redis-ha-server-0 2/2 Running 0 2m16s

pod/redis-ha-server-1 2/2 Running 0 107s

pod/redis-ha-server-2 0/2 Pending 0 77s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/redis-ha ClusterIP None <none> 6379/TCP,26379/TCP 2m19s

service/redis-ha-announce-0 ClusterIP 10.100.4.107 <none> 6379/TCP,26379/TCP 2m18s

service/redis-ha-announce-1 ClusterIP 10.109.211.70 <none> 6379/TCP,26379/TCP 2m18s

service/redis-ha-announce-2 ClusterIP 10.105.156.107 <none> 6379/TCP,26379/TCP 2m18s

NAME READY AGE

statefulset.apps/redis-ha-server 2/3 2m17s

[kubeadm@server2 redis-ha]$ kubectl -n redis describe pod redis-ha-server-0 # each pod runs two containers, redis and sentinel

Containers:

redis:

sentinel: # handles master/slave failover

[kubeadm@server2 redis-ha]$ kubectl -n redis exec -it redis-ha-server-0 -c redis -- sh # open a shell in redis-ha-server-0; -c selects the redis container

/data $ redis-cli

127.0.0.1:6379> info # show this instance's information (it is the master)

/data $ redis-cli -h 10.109.211.70 # connect to a slave through the redis-ha-announce-1 service IP

10.109.211.70:6379> info

/data $ nslookup redis-ha.redis.svc.cluster.local

nslookup: can't resolve '(null)': Name does not resolve

Name: redis-ha.redis.svc.cluster.local

Address 1: 10.244.1.147 redis-ha-server-0.redis-ha.redis.svc.cluster.local

Address 2: 10.244.2.131 redis-ha-server-1.redis-ha.redis.svc.cluster.local

/data $ redis-cli -h redis-ha-server-1.redis-ha.redis.svc.cluster.local

redis-ha-server-1.redis-ha.redis.svc.cluster.local:6379> info # server-1 is a slave

# Replication

role:slave

/data $ redis-cli -h redis-ha-server-0.redis-ha.redis.svc.cluster.local

redis-ha-server-0.redis-ha.redis.svc.cluster.local:6379> info # server-0 is the master

# Replication

role:master
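The manual role check above can be scripted by reading the role: field of `info replication` from each server pod. This is a minimal sketch, assuming the redis-ha-server-N pod names and the redis container name shown above; redis_role and show_roles are hypothetical helpers. Redis INFO output may contain CRLF line endings, so carriage returns are stripped before matching.

```shell
#!/usr/bin/env bash
# redis_role: read `redis-cli info replication` output on stdin, print the role.
redis_role() {
  tr -d '\r' | sed -n 's/^role:\(.*\)$/\1/p'
}

# show_roles [NAMESPACE]: print "pod: role" for every redis-ha-server pod
# (assumes the pod naming and the "redis" container used in this walkthrough).
show_roles() {
  local ns="${1:-redis}" pod
  for pod in $(kubectl -n "$ns" get pods -o name | grep redis-ha-server); do
    pod="${pod#pod/}"    # strip the "pod/" prefix printed by -o name
    printf '%s: ' "$pod"
    kubectl -n "$ns" exec "$pod" -c redis -- redis-cli info replication | redis_role
  done
}
```

With the deployment above, `show_roles redis` would be expected to list server-0 as master and server-1 as slave; a Pending pod such as server-2 simply makes kubectl exec fail for that entry.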

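helm -n redis upgrade redis-ha . # upgrade the release from the chart in the current directory

helm -n redis status redis-ha # show the release status

helm -n redis history redis-ha # show the release history

helm -n redis uninstall redis-ha # uninstall the release

kubectl delete namespaces redis # delete the namespace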

3.2 Creating your own chart

[kubeadm@server2 helm]$ helm create mychart

Creating mychart

[kubeadm@server2 helm]$ ls

mychart redis-ha

[kubeadm@server2 helm]$ cd mychart/

[kubeadm@server2 mychart]$ tree .

.

├── charts

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── hpa.yaml

│ ├── ingress.yaml

│ ├── NOTES.txt

│ ├── serviceaccount.yaml

│ ├── service.yaml

│ └── tests

│ └── test-connection.yaml

└── values.yaml

3 directories, 10 files

[kubeadm@server2 mychart]$ ls

charts Chart.yaml templates values.yaml

values.yaml holds the default values that the templates read as variables.

Chart.yaml describes the chart, including its version.
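helm package names the archive after the name and version fields of Chart.yaml, which is why the package below is called mychart-0.1.0.tgz. The sketch below reads those fields with awk; chart_field and package_name are hypothetical helpers that only handle plain top-level `key: value` lines.

```shell
#!/usr/bin/env bash
# chart_field FILE KEY: print the value of a top-level KEY in a Chart.yaml
# (simple line match; nested keys and multi-line values are not handled).
chart_field() {
  awk -v key="$2" '
    index($0, key ":") == 1 {
      sub(/^[^:]*:[ \t]*/, "")   # drop "key:" and the following spaces
      gsub(/"/, "")              # drop double quotes around the value
      print
      exit
    }
  ' "$1"
}

# package_name CHART_YAML: archive name that helm package is expected to produce
package_name() {
  printf '%s-%s.tgz\n' "$(chart_field "$1" name)" "$(chart_field "$1" version)"
}
```

For example, `package_name mychart/Chart.yaml` should print mychart-0.1.0.tgz for the chart created above.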

[kubeadm@server2 mychart]$ helm lint . # check that the chart's dependencies and templates are well-formed

==> Linting .

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

[kubeadm@server2 helm]$ helm package mychart/ # package the chart

Successfully packaged chart and saved it to: /home/kubeadm/helm/mychart-0.1.0.tgz

[kubeadm@server2 helm]$ ls

mychart mychart-0.1.0.tgz redis-ha

Set up a local chart repository:

Option 1:

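Helm v3 has no built-in repository server, so it needs external repository software, for example Harbor deployed with its own chart (https://github.com/goharbor/harbor-helm):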

$ helm repo add harbor https://helm.goharbor.io

$ helm pull harbor/harbor

Option 2: use my own self-hosted Harbor registry (used below).

Create a new project named charts in the private Harbor registry.

[kubeadm@server2 helm]$ helm repo add mychart https://reg.westos.org/chartrepo/charts

Error: looks like "https://reg.westos.org/chartrepo/charts" is not a valid chart repository or cannot be reached: Get https://reg.westos.org/chartrepo/charts/index.yaml: x509: certificate signed by unknown authority

Problem: Helm does not trust the registry's certificate (x509: certificate signed by unknown authority).

[root@server2 reg.westos.org]# pwd

/etc/docker/certs.d/reg.westos.org

[root@server2 reg.westos.org]# ls # this CA certificate is currently trusted only by Docker

ca.crt

[root@server2 reg.westos.org]# cp ca.crt /etc/pki/ca-trust/source/anchors/ # add the certificate to the system trust anchors

[root@server2 reg.westos.org]# update-ca-trust # rebuild the system CA trust store

[kubeadm@server2 helm]$ helm repo add mychart https://reg.westos.org/chartrepo/charts # add the private repository

"mychart" has been added to your repositories

[kubeadm@server2 helm]$ helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts/

mychart https://reg.westos.org/chartrepo/charts # my private repository. In this URL, https://reg.westos.org/ is the private registry address, chartrepo is a fixed path segment, and charts is the project created in the registry
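The URL layout described above (registry host, the fixed chartrepo segment, then the Harbor project) can be captured in a helper so other projects are added the same way. A minimal sketch; chart_repo_url and add_harbor_repo are hypothetical names, and the host's CA must already be trusted as shown above.

```shell
#!/usr/bin/env bash
# chart_repo_url HOST PROJECT: Harbor chart repository URL for a project
chart_repo_url() {
  printf 'https://%s/chartrepo/%s\n' "$1" "$2"
}

# add_harbor_repo NAME HOST PROJECT: register the Harbor project as a Helm repo
add_harbor_repo() {
  helm repo add "$1" "$(chart_repo_url "$2" "$3")"
}
```

For example, `add_harbor_repo mychart reg.westos.org charts` runs the same helm repo add command used above.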

Install the helm-push plugin:

This needs the plugin archive helm-push_0.8.1_linux_amd64.tar.gz. First check where Helm looks for plugins:

[kubeadm@server2 helm]$ helm env

HELM_BIN="helm"

HELM_DEBUG="false"

HELM_KUBEAPISERVER=""

HELM_KUBECONTEXT=""

HELM_KUBETOKEN=""

HELM_NAMESPACE="default"

HELM_PLUGINS="/home/kubeadm/.local/share/helm/plugins" # plugin directory

HELM_REGISTRY_CONFIG="/home/kubeadm/.config/helm/registry.json"

HELM_REPOSITORY_CACHE="/home/kubeadm/.cache/helm/repository" # repository cache

HELM_REPOSITORY_CONFIG="/home/kubeadm/.config/helm/repositories.yaml"

[kubeadm@server2 ~]$ mkdir -p /home/kubeadm/.local/share/helm/plugins

[kubeadm@server2 ~]$ cd /home/kubeadm/.local/share/helm/plugins

[kubeadm@server2 plugins]$ mkdir helm-push

[kubeadm@server2 ~]$ tar zxf helm-push_0.8.1_linux_amd64.tar.gz -C /home/kubeadm/.local/share/helm/plugins/helm-push/

[kubeadm@server2 ~]$ cd /home/kubeadm/.local/share/helm/plugins/helm-push/

[kubeadm@server2 helm-push]$ ls

bin LICENSE plugin.yaml
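The manual unpacking above can be wrapped in a function that also checks for plugin.yaml, the file Helm looks for in each plugin directory. A minimal sketch; install_plugin_tarball is a hypothetical helper, and PLUGINS_DIR should be the HELM_PLUGINS path printed by helm env. On a host with internet access, `helm plugin install https://github.com/chartmuseum/helm-push` should achieve the same result without a local archive.

```shell
#!/usr/bin/env bash
# install_plugin_tarball TARBALL PLUGINS_DIR NAME
# Unpack a Helm plugin archive into PLUGINS_DIR/NAME, as done by hand above.
install_plugin_tarball() {
  local tarball="$1" dir="$2/$3"
  mkdir -p "$dir" || return 1
  tar zxf "$tarball" -C "$dir" || return 1
  # Helm only treats the directory as a plugin if it contains plugin.yaml.
  if [ ! -f "$dir/plugin.yaml" ]; then
    echo "no plugin.yaml in $tarball" >&2
    return 1
  fi
}
```

Usage: `install_plugin_tarball helm-push_0.8.1_linux_amd64.tar.gz /home/kubeadm/.local/share/helm/plugins helm-push`, then `helm plugin list` should show the push plugin.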

[kubeadm@server2 helm]$ helm push mychart-0.1.0.tgz mychart -u admin -p redhat # push the mychart package to our mychart repository

Pushing mychart-0.1.0.tgz to mychart...

Done.

[kubeadm@server2 helm]$ helm repo update # refresh the repository indexes

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "mychart" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

[kubeadm@server2 helm]$ helm search repo mychart # the pushed chart now shows up in the mychart repository

NAME CHART VERSION APP VERSION DESCRIPTION

mychart/mychart 0.1.0 1.16.0 A Helm chart for Kubernetes

[kubeadm@server2 helm]$ helm show values mychart/mychart # show the chart's configurable values, i.e. its values.yaml

affinity: {}
autoscaling:
  enabled: false
  maxReplicas: 100
  minReplicas: 1
  targetCPUUtilizationPercentage: 80
fullnameOverride: ""

[kubeadm@server2 helm]$ helm install test mychart/mychart --debug # deploy mychart to the cluster

[kubeadm@server2 helm]$ helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

test default 1 2020-05-12 23:01:29.93227763 +0800 CST deployed mychart-0.1.0 1.16.0

[kubeadm@server2 helm]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-55d87b5996-8clxq 1/1 Running 4 8d 10.244.2.133 server4 <none> <none>

test-mychart-7d7557d49b-rbsdf 1/1 Running 0 3m10s 10.244.1.150 server3 <none> <none>

[kubeadm@server2 helm]$ kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nfs-client-provisioner 1/1 1 1 8d

test-mychart 1/1 1 1 4m57s

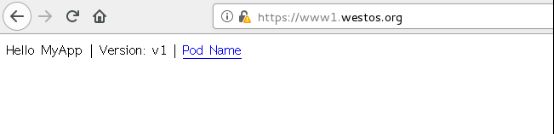

[kubeadm@server2 helm]$ curl 10.244.1.150 # test-mychart responds, so the deployment succeeded

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

3.3 Upgrading and rolling back

[kubeadm@server2 mychart]$ vim Chart.yaml # bump the chart version

version: 0.2.0 # the new chart version
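The edit, package, push, and update cycle in this section can be scripted. A minimal sketch, assuming GNU sed (for -i), the helm-push plugin installed above, a chart directory named after the chart, and credentials in the hypothetical HARBOR_USER and HARBOR_PASS variables; bump_chart_version and release_chart are hypothetical helpers.

```shell
#!/usr/bin/env bash
# bump_chart_version CHART_YAML NEW_VERSION: rewrite the top-level version field
# (appVersion is left alone because the pattern is anchored at "version:").
bump_chart_version() {
  sed -i "s/^version:.*/version: $2/" "$1"
}

# release_chart CHART_DIR VERSION REPO: bump, package, push, and refresh the index.
release_chart() {
  local dir="$1" version="$2" repo="$3" name
  bump_chart_version "$dir/Chart.yaml" "$version" || return 1
  name="$(basename "$dir")"    # assumes the directory name equals the chart name
  helm package "$dir" &&
    helm push "$name-$version.tgz" "$repo" -u "$HARBOR_USER" -p "$HARBOR_PASS" &&
    helm repo update
}
```

Once both versions are in the repository, a specific one can be deployed explicitly, for example `helm upgrade test mychart/mychart --version 0.2.0`.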

[kubeadm@server2 helm]$ helm package mychart # package the chart

Successfully packaged chart and saved it to: /home/kubeadm/helm/mychart-0.2.0.tgz

[kubeadm@server2 helm]$ ls

mychart mychart-0.1.0.tgz mychart-0.2.0.tgz redis-ha

[kubeadm@server2 helm]$ helm push mychart-0.2.0.tgz mychart -u admin -p redhat # push the new version

Pushing mychart-0.2.0.tgz to mychart...

Done.

[kubeadm@server2 helm]$ helm repo update # refresh the repository indexes

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "mychart" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

[kubeadm@server2 helm]$ helm search repo mychart -l # both mychart versions are listed

NAME CHART VERSION APP VERSION DESCRIPTION

mychart/mychart 0.2.0 1.16.0 A Helm chart for Kubernetes

mychart/mychart 0.1.0 1.16.0 A Helm chart for Kubernetes

[kubeadm@server2 helm]$ helm upgrade test mychart/mychart # upgrade the release to the latest chart version

[kubeadm@server2 helm]$ helm list # the release now runs chart version 0.2.0

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

test default 2 2020-05-12 23:14:52.474461236 +0800 CST deployed mychart-0.2.0 1.16.0

[kubeadm@server2 helm]$ helm history test

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Tue May 12 23:01:29 2020 superseded mychart-0.1.0 1.16.0 Install complete

2 Tue May 12 23:14:52 2020 deployed mychart-0.2.0 1.16.0 Upgrade complete

[kubeadm@server2 helm]$ helm rollback test 1 # roll back to revision 1

Rollback was a success! Happy Helming!

[kubeadm@server2 helm]$ helm list # back on mychart-0.1.0, so the rollback succeeded

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

test default 3 2020-05-12 23:18:28.493052781 +0800 CST deployed mychart-0.1.0 1.16.0

[kubeadm@server2 helm]$ helm history test

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Tue May 12 23:01:29 2020 superseded mychart-0.1.0 1.16.0 Install complete

2 Tue May 12 23:14:52 2020 superseded mychart-0.2.0 1.16.0 Upgrade complete

3 Tue May 12 23:18:28 2020 deployed mychart-0.1.0 1.16.0 Rollback to 1
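As the history shows, a rollback does not rewrite old revisions; it creates a new revision (3) that reuses the chart and values of the target. To roll back to whatever was running just before the current revision without reading the table by hand, the most recent superseded revision can be picked out of helm history. A minimal sketch; last_superseded_revision and rollback_to_previous are hypothetical helpers, and depending on the Helm version, `helm rollback test` without a revision may already do this.

```shell
#!/usr/bin/env bash
# last_superseded_revision: read `helm history RELEASE` output on stdin and
# print the number of the most recent revision whose STATUS is "superseded".
last_superseded_revision() {
  awk 'NR > 1 && $0 ~ /[ \t]superseded[ \t]/ { rev = $1 } END { print rev }'
}

# rollback_to_previous RELEASE: roll RELEASE back to that revision.
rollback_to_previous() {
  local rev
  rev="$(helm history "$1" | last_superseded_revision)"
  if [ -z "$rev" ]; then
    echo "no superseded revision found for $1" >&2
    return 1
  fi
  helm rollback "$1" "$rev"
}
```

With the history above, the function would pick revision 2 (mychart-0.2.0).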

[kubeadm@server2 helm]$ helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

test default 3 2020-05-12 23:18:28.493052781 +0800 CST deployed mychart-0.1.0 1.16.0

[kubeadm@server2 helm]$ helm uninstall test # uninstall the test release

release "test" uninstalled

3.4 Deploying an NFS StorageClass

[kubeadm@server2 nfsclass]$ ls

class.yaml deployment.yaml pod.yaml pvc.yaml rbac.yaml

[kubeadm@server2 nfsclass]$ kubectl delete -f . # remove the earlier manual deployment

storageclass.storage.k8s.io "managed-nfs-storage" deleted

deployment.apps "nfs-client-provisioner" deleted

serviceaccount "nfs-client-provisioner" deleted

clusterrole.rbac.authorization.k8s.io "nfs-client-provisioner-runner" deleted

clusterrolebinding.rbac.authorization.k8s.io "run-nfs-client-provisioner" deleted

role.rbac.authorization.k8s.io "leader-locking-nfs-client-provisioner" deleted

rolebinding.rbac.authorization.k8s.io "leader-locking-nfs-client-provisioner" deleted

Error from server (NotFound): error when deleting "pod.yaml": pods "test-pod" not found

Error from server (NotFound): error when deleting "pvc.yaml": persistentvolumeclaims "test-claim" not found

Note: like kubectl, Helm commands such as helm uninstall talk to the Kubernetes API server and are authorized through the kubeconfig file, here ~/.kube/config:

[kubeadm@server2 .kube]$ ls

cache config http-cache

[kubeadm@server2 .kube]$ pwd

/home/kubeadm/.kube

[kubeadm@server2 helm]$ helm search repo nfs

NAME CHART VERSION APP VERSION DESCRIPTION

stable/nfs-client-provisioner 1.2.8 3.1.0 nfs-client is an automatic provisioner that use...

stable/nfs-server-provisioner 1.0.0 2.3.0 nfs-server-provisioner is an out-of-tree dynami...

[kubeadm@server2 ~]$ helm pull stable/nfs-client-provisioner # pull the nfs-client-provisioner chart

[kubeadm@server2 ~]$ tar zxf nfs-client-provisioner-1.2.8.tgz

[kubeadm@server2 ~]$ cd nfs-client-provisioner/

[kubeadm@server2 nfs-client-provisioner]$ ls

Chart.yaml ci OWNERS README.md templates values.yaml

[kubeadm@server2 nfs-client-provisioner]$ vim values.yaml

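image:
  repository: nfs-client-provisioner # the nfs-client-provisioner image must exist in your private registry
  tag: latest
nfs:
  server: 172.25.60.1 # NFS server address
  path: /nfs # directory exported by the NFS server
storageClass:
  defaultClass: true # make this the default StorageClass, which dynamically provisions storage for PVCs that do not request a specific storage class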
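The same settings can also be passed on the command line with --set, using the dotted key paths from values.yaml, instead of editing the file. A minimal sketch, assuming the stable repository added earlier and an existing namespace; run and install_nfs_provisioner are hypothetical helpers, and setting DRY_RUN=1 only prints the command. Image overrides could be added the same way (for example --set image.repository=...).

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# install_nfs_provisioner SERVER PATH [NAMESPACE]: install the chart with --set
# overrides equivalent to the values.yaml edits above.
install_nfs_provisioner() {
  local ns="${3:-nfs-client-provisioner}"
  run helm install nfs-client-provisioner stable/nfs-client-provisioner \
    --namespace "$ns" \
    --set nfs.server="$1" \
    --set nfs.path="$2" \
    --set storageClass.defaultClass=true
}
```

`DRY_RUN=1 install_nfs_provisioner 172.25.60.1 /nfs` prints the full helm install command so it can be reviewed before running it for real.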

[kubeadm@server2 nfs-client-provisioner]$ kubectl create namespace nfs-client-provisioner # create a dedicated namespace

namespace/nfs-client-provisioner created

[kubeadm@server2 nfs-client-provisioner]$ helm install nfs-client-provisioner . -n nfs-client-provisioner

NAME: nfs-client-provisioner

LAST DEPLOYED: Wed May 13 11:38:41 2020

NAMESPACE: nfs-client-provisioner

STATUS: deployed

REVISION: 1

TEST SUITE: None

[kubeadm@server2 nfs-client-provisioner]$ helm -n nfs-client-provisioner list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nfs-client-provisioner nfs-client-provisioner 1 2020-05-13 11:38:41.860926655 +0800 CST deployed nfs-client-provisioner-1.2.8 3.1.0

[kubeadm@server2 nfs-client-provisioner]$ kubectl -n nfs-client-provisioner get all # deployment complete

NAME READY STATUS RESTARTS AGE

pod/nfs-client-provisioner-76474c9c94-5dcjq 1/1 Running 0 75s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nfs-client-provisioner 1/1 1 1 76s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nfs-client-provisioner-76474c9c94 1 1 1 76s

Test:

[kubeadm@server2 pv]$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-client-provisioner Delete Immediate true 6m5s

[kubeadm@server2 pv]$ cat pvc1.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  # storageClassName: nfs   # not needed: nfs-client is the default StorageClass
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

[kubeadm@server2 pv]$ kubectl apply -f pvc1.yaml

persistentvolumeclaim/pvc1 created

[kubeadm@server2 pv]$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-d7372908-97a8-4c46-ab24-e269570f8005 1Gi RWO Delete Bound default/pvc1 nfs-client 7s

[kubeadm@server2 pv]$ kubectl delete -f pvc1.yaml

persistentvolumeclaim "pvc1" deleted

3.5 Deploying nginx-ingress

[kubeadm@server2 pv]$ kubectl delete namespaces ingress-nginx # remove the earlier deployment

namespace "ingress-nginx" deleted

[kubeadm@server2 ~]$ helm search repo nginx-ingress

NAME CHART VERSION APP VERSION DESCRIPTION

stable/nginx-ingress 1.36.3 0.30.0 An nginx Ingress controller that uses ConfigMap...

stable/nginx-lego 0.3.1 Chart for nginx-ingress-controller and kube-lego

[kubeadm@server2 ~]$ cd helm

[kubeadm@server2 helm]$ helm pull stable/nginx-ingress

[kubeadm@server2 helm]$ tar zxf nginx-ingress-1.36.3.tgz

[kubeadm@server2 helm]$ cd nginx-ingress/

[kubeadm@server2 nginx-ingress]$ ls

Chart.yaml ci OWNERS README.md templates values.yaml

[kubeadm@server2 nginx-ingress]$ vim values.yaml

controller:
  name: controller
  image:
    repository: nginx-ingress-controller # image in the private registry
    tag: "0.30.0" # pulled on server1 from the Aliyun mirror: docker pull registry.aliyuncs.com/google_containers/nginx-ingress-controller:0.30.0
  hostNetwork: true # use the node's network
  daemonset:
    useHostPort: true # bind ports on the host
  nodeSelector: # schedule only onto nodes labeled ingress=nginx
    ingress: nginx
  service: # do not create a Service for the controller
    enabled: false
defaultBackend:
  image: # requires the defaultbackend-amd64:1.5 image
    repository: defaultbackend-amd64
    tag: "1.5"

[kubeadm@server2 pv]$ kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

server4 Ready <none> 25d v1.18.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=sata,ingress=nginx,kubernetes.io/arch=amd64,kubernetes.io/hostname=server4,kubernetes.io/os=linux # server4 carries the ingress=nginx label
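The output shows server4 already carrying the ingress=nginx label that the controller's nodeSelector requires, but not how it was added. A minimal sketch, assuming the label is applied with kubectl label; run, label_ingress_node, and list_ingress_nodes are hypothetical helpers, and DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# label_ingress_node NODE: add the label matched by the controller's nodeSelector
# (--overwrite makes re-running the command harmless).
label_ingress_node() {
  run kubectl label node "$1" ingress=nginx --overwrite
}

# list_ingress_nodes: show which nodes currently carry the label.
list_ingress_nodes() {
  run kubectl get nodes -l ingress=nginx
}
```

`label_ingress_node server4` followed by `list_ingress_nodes` should list only server4, which is where the controller pod is expected to land.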

[kubeadm@server2 nginx-ingress]$ helm -n nginx-ingress install nginx-ingress . # deploy nginx-ingress from the current directory into the nginx-ingress namespace

[kubeadm@server2 nginx-ingress]$ helm -n nginx-ingress list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx-ingress nginx-ingress 1 2020-05-13 16:41:58.048882663 +0800 CST deployed nginx-ingress-1.36.3 0.30.0

Test:

[root@server4 ~]# netstat -antlpe # ports 80 and 443 are open on server4, the node labeled ingress=nginx

tcp6 0 0 :::80 :::* LISTEN 101 582974 5696/nginx: master

tcp6 0 0 :::8181 :::* LISTEN 101 582978 5696/nginx: master

tcp6 0 0 :::22 :::* LISTEN 0 79424 3668/sshd

tcp6 0 0 ::1:25 :::* LISTEN 0 80093 3949/master

tcp6 0 0 :::443 :::* LISTEN 101 582976 5696/nginx: master

[kubeadm@server2 manifest]$ cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1
        ports:
        - containerPort: 80

[kubeadm@server2 manifest]$ kubectl apply -f deployment.yaml

deployment.apps/nginx-deployment created

[kubeadm@server2 manifest]$ kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-deployment-5c58fb7c46-57z8g 1/1 Running 0 30s app=nginx,pod-template-hash=5c58fb7c46

nginx-deployment-5c58fb7c46-qmmms 1/1 Running 0 30s app=nginx,pod-template-hash=5c58fb7c46

[kubeadm@server2 manifest]$ cat service.yaml

kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: nginx
  type: ClusterIP

[kubeadm@server2 manifest]$ kubectl apply -f service.yaml # create the service

service/myservice created

[kubeadm@server2 manifest]$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25d

myservice ClusterIP 10.104.254.65 <none> 80/TCP 33s

[kubeadm@server2 manifest]$ kubectl describe svc myservice

Name: myservice

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP: 10.104.254.65

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.155:80,10.244.2.138:80

Session Affinity: None

Events: <none>

[kubeadm@server2 helm]$ kubectl apply -f ingress1.yaml

ingress.extensions/example created

[kubeadm@server2 helm]$ cat ingress1.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  # namespace: foo
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - backend:
          serviceName: myservice
          servicePort: 80
        path: /

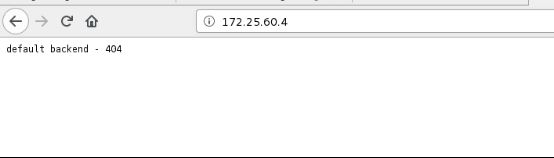

[kubeadm@server2 helm]$ kubectl describe ingress example

Name: example

Namespace: default

Address: 172.25.60.4

Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)

Rules:

Host Path Backends

---- ---- --------

www1.westos.org

/ myservice:80 (10.244.1.155:80,10.244.2.138:80)

Annotations: kubernetes.io/ingress.class: nginx

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal CREATE 80s nginx-ingress-controller Ingress default/example

Normal UPDATE 51s nginx-ingress-controller Ingress default/example

[root@foundation60 kiosk]# cat /etc/hosts # the client must resolve www1.westos.org to server4 (172.25.60.4)

172.25.60.4 www1.westos.org www2.westos.org www3.westos.org

[root@foundation60 kiosk]# curl www1.westos.org/hostname.html # requests are load-balanced across both pods

nginx-deployment-5c58fb7c46-qmmms

[root@foundation60 kiosk]# curl www1.westos.org/hostname.html

nginx-deployment-5c58fb7c46-57z8g

[kubeadm@server2 helm]$ cat ingress1.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  # namespace: foo
spec:
  rules:
  - host: www1.westos.org
    http:
      paths:
      - backend:
          serviceName: myservice
          servicePort: 80
        path: /
  tls: # enable TLS
  - hosts:
    - www1.westos.org
    secretName: tls-secret

[kubeadm@server2 helm]$ kubectl apply -f ingress1.yaml

ingress.extensions/example configured
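The tls section refers to a secret named tls-secret whose creation is not shown. A minimal sketch, assuming a self-signed certificate is acceptable for testing and the secret lives in the same namespace as the Ingress (default here); run and make_tls_secret are hypothetical helpers, and DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# make_tls_secret HOST SECRET: create a self-signed certificate for HOST
# (tls.key / tls.crt in the current directory) and store it as a TLS secret.
make_tls_secret() {
  local host="$1" secret="$2"
  run openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 \
    -keyout tls.key -out tls.crt -subj "/CN=$host" &&
    run kubectl create secret tls "$secret" --key tls.key --cert tls.crt
}
```

After `make_tls_secret www1.westos.org tls-secret`, `curl -k https://www1.westos.org/hostname.html` should reach the pods over HTTPS; -k is needed because the certificate is self-signed.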