Notes on setting up Spark 2.4.3 standalone on macOS

Based on:

JDK 1.8

macOS

1. Extract the archive

nancylulululu:local nancy$ tar -zxvf /Users/nancy/Downloads/spark-2.4.3-bin-hadoop2.7.tar -C /usr/local/opt/

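(The paths below use /usr/local/opt/spark-2.4.3, so the extracted spark-2.4.3-bin-hadoop2.7 directory is assumed to have been renamed to spark-2.4.3.)

2. Copy the templates to their real names and edit the configuration files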

nancylulululu:conf nancy$ cp slaves.template slaves

nancylulululu:conf nancy$ cp spark-defaults.conf.template spark-defaults.conf

nancylulululu:conf nancy$ cp spark-env.sh.template spark-env.sh

nancylulululu:conf nancy$ vi slaves

localhost

nancylulululu:conf nancy$ vi spark-env.sh

SPARK_LOCAL_IP=127.0.0.1

SPARK_MASTER_HOST=127.0.0.1

SPARK_MASTER_PORT=7077

SPARK_WORKER_CORES=2

SPARK_WORKER_MEMORY=1G
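The same edits can be scripted instead of typed into vi. The following is only a minimal sketch: CONF_DIR is a hypothetical variable (not something Spark defines) that should point at the real conf directory, and it defaults to a throwaway temp directory so the snippet is safe to run as-is. The values are the ones used above.

```shell
#!/bin/sh
# Sketch: write the two files from this step without opening an editor.
# CONF_DIR is a hypothetical variable; point it at your real conf directory,
# e.g. /usr/local/opt/spark-2.4.3/conf. It defaults to a temp directory here.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# One worker host per line; a single-machine setup only needs localhost.
printf 'localhost\n' > "$CONF_DIR/slaves"

# Append the settings used in this note to spark-env.sh.
cat >> "$CONF_DIR/spark-env.sh" <<'EOF'
SPARK_LOCAL_IP=127.0.0.1
SPARK_MASTER_HOST=127.0.0.1
SPARK_MASTER_PORT=7077
SPARK_WORKER_CORES=2
SPARK_WORKER_MEMORY=1G
EOF

echo "wrote $CONF_DIR/slaves and $CONF_DIR/spark-env.sh"
```

Appending with >> leaves the commented template text in spark-env.sh untouched when the file was copied from spark-env.sh.template first.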

3. Start Spark

nancylulululu:spark-2.4.3 nancy$ sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/local/opt/spark-2.4.3/logs/spark-nancy-org.apache.spark.deploy.master.Master-1-nancylulululu.out

localhost: starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/opt/spark-2.4.3/logs/spark-nancy-org.apache.spark.deploy.worker.Worker-1-nancylulululu.out

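Run jps to check that one Master and one Worker are running. (The SparkSubmit and CoarseGrainedExecutorBackend entries below are the driver and executor of an application that was running at the time, for example a spark-shell session.)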

nancylulululu:conf nancy$ jps

3908 CoarseGrainedExecutorBackend

3478 Master

4166 Jps

3896 SparkSubmit

3514 Worker
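Checking the jps listing by eye can also be automated. Below is a rough sketch, not an official Spark tool: check_daemons is a hypothetical helper name, and it assumes the "pid name" layout shown in the listing above. The sample listing is fed in directly so the snippet runs without Spark installed.

```shell
#!/bin/sh
# Sketch: report whether the jps listing on stdin contains the standalone daemons.
# check_daemons is a hypothetical helper name, not part of Spark.
check_daemons() {
    listing=$(cat)
    status=0
    for name in Master Worker; do
        # jps prints "<pid> <main class>"; match the second field exactly.
        if printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "$name: running"
        else
            echo "$name: NOT running"
            status=1
        fi
    done
    return $status
}

# Usage against a live system:  jps | check_daemons
# Here the listing shown above is fed in so the snippet runs without Spark.
check_daemons <<'EOF'
3908 CoarseGrainedExecutorBackend
3478 Master
4166 Jps
3896 SparkSubmit
3514 Worker
EOF
```

The function returns a non-zero status when either daemon is missing, so it can gate a later step in a script.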

4. Launching Applications with spark-submit

Once a user application is bundled, it can be launched using the bin/spark-submit script. This script takes care of setting up the classpath with Spark and its dependencies, and can support different cluster managers and deploy modes that Spark supports:

./bin/spark-submit \
  --class <main-class> \
  --master <master-url> \
  --deploy-mode <deploy-mode> \
  --conf <key>=<value> \
  ... # other options
  <application-jar> \
  [application-arguments]

Some of the commonly used options are:

- --class: The entry point for your application (e.g. org.apache.spark.examples.SparkPi)

- --master: The master URL for the cluster (e.g. spark://23.195.26.187:7077)

- --deploy-mode: Whether to deploy your driver on the worker nodes (cluster) or locally as an external client (client) (default: client)

- --conf: Arbitrary Spark configuration property in key=value format. For values that contain spaces, wrap "key=value" in quotes.

- application-jar: Path to a bundled jar including your application and all dependencies. The URL must be globally visible inside of your cluster, for instance, an hdfs:// path or a file:// path that is present on all nodes.

- application-arguments: Arguments passed to the main method of your main class, if any
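The quoting rule for --conf is easiest to see by counting the arguments the shell actually passes along. This is a small sketch under stated assumptions: count_args is a hypothetical stand-in for spark-submit that only reports its argument count, and spark.executor.extraJavaOptions with two JVM flags is used purely as an example of a value containing a space.

```shell
#!/bin/sh
# Sketch: show why a --conf value containing spaces must be quoted.
# count_args is a hypothetical stand-in for spark-submit: it only reports
# how many separate arguments the shell hands to the program.
count_args() {
    echo "$#"
}

# Quoted: the whole key=value pair arrives as one argument after --conf.
count_args --conf "spark.executor.extraJavaOptions=-XX:+PrintGCDetails -XX:+PrintGCTimeStamps"

# Unquoted: the shell splits at the space, so the second JVM flag
# becomes a stray third argument.
count_args --conf spark.executor.extraJavaOptions=-XX:+PrintGCDetails -XX:+PrintGCTimeStamps
```

The first call prints 2 and the second prints 3, which is why the unquoted form would not reach the program as a single key=value pair.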

Command:

./bin/spark-submit \
  --class org.apache.spark.examples.SparkPi \
  --master spark://localhost:7077 \
  /usr/local/opt/spark-2.4.3/examples/jars/spark-examples_2.11-2.4.3.jar \
  100

Result:

Pi is roughly 3.1413047141304715

19/05/14 14:23:06 INFO SparkUI: Stopped Spark web UI at http://localhost:4040

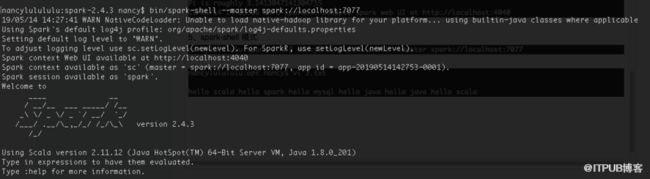
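The estimate is easy to lose among the INFO lines. A minimal sketch for pulling it out follows; extract_pi is a hypothetical helper name, and the usage comment assumes the default logging setup, where Spark's log lines go to stderr and the "Pi is roughly" line goes to stdout. The two result lines above are fed in so the snippet runs on its own.

```shell
#!/bin/sh
# Sketch: pull the numeric estimate out of SparkPi's output.
# extract_pi is a hypothetical helper that reads the job output on stdin.
extract_pi() {
    sed -n 's/^Pi is roughly \([0-9.]*\).*$/\1/p'
}

# Usage against a real run (assumed, not executed here):
#   ./bin/spark-submit ... 100 2>/dev/null | extract_pi
# Below, the two lines shown above are fed in so the snippet runs standalone.
extract_pi <<'EOF'
Pi is roughly 3.1413047141304715
19/05/14 14:23:06 INFO SparkUI: Stopped Spark web UI at http://localhost:4040
EOF
```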

5. spark-shell mode

nancylulululu:spark-2.4.3 nancy$ bin/spark-shell --master spark://localhost:7077

6. Testing word count

Test file:

nancylulululu:opt nancy$ vi 3.txt

hello scala hello spark hello mysql hello java hello java hello scala

Test result:

scala> sc.textFile("/usr/local/opt/3.txt").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).collect

res1: Array[(String, Int)] = Array((scala,2), (mysql,1), (hello,6), (java,2), (spark,1))
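As a sanity check on the counts, the same tally can be reproduced with standard Unix tools outside Spark. This is only a sketch for cross-checking: word_count is a hypothetical helper name, and the input is the test line from 3.txt above.

```shell
#!/bin/sh
# Sketch: count words with standard Unix tools to cross-check the Spark result.
# word_count is a hypothetical helper; it prints "word count" pairs sorted by word.
word_count() {
    tr -s ' ' '\n' |            # one word per line (the flatMap/split step)
        sort |                  # group identical words together
        uniq -c |               # count each group (the reduceByKey step)
        awk '{ print $2, $1 }'  # reorder to "word count"
}

echo "hello scala hello spark hello mysql hello java hello java hello scala" | word_count
```

The pairs come out sorted by word, whereas the order of the array returned by collect in spark-shell is not sorted, so compare the counts rather than the ordering.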

scala>

Source: ITPUB blog, http://blog.itpub.net/69908925/viewspace-2644303/. Please credit the source when reposting; otherwise legal action may be pursued.
