Android native face detection

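When the camera is open on Android, it autofocuses and can detect faces automatically. The same kind of detection can be done in your own app with Google's built-in FaceDetector class (android.media.FaceDetector). Below is a brief introduction to how FaceDetector is used.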

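1. Open the camera. Before detecting faces in the preview, the camera has to be configured.

a. Bind the Camera to a SurfaceView. This is covered in countless tutorials online; the code is as follows: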

```java
@Override
protected void onPostCreate(@Nullable Bundle savedInstanceState) {
    Log.e(TAG, "onPostCreate");
    super.onPostCreate(savedInstanceState);
    // Check for the camera permission before accessing the camera. If the
    // permission is not granted yet, request permission.
    SurfaceHolder holder = surfaceView.getHolder();
    holder.addCallback(this);  // this Activity implements SurfaceHolder.Callback
    holder.setFormat(ImageFormat.NV21);
}
```

Open the camera in the surfaceCreated() method of the SurfaceHolder callback:

```java
@Override
public void surfaceCreated(SurfaceHolder surfaceHolder) {
    Log.e(TAG, "surfaceCreated");
    // Find the total number of cameras available and look for the front-facing one
    numberOfCameras = Camera.getNumberOfCameras();
    Camera.CameraInfo cameraInfo = new Camera.CameraInfo();
    for (int i = 0; i < numberOfCameras; i++) {
        Camera.getCameraInfo(i, cameraInfo);
        if (cameraInfo.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {
            if (cameraId == 1)
                cameraId = i;
        }
    }
    mCamera = Camera.open(cameraId);
    Camera.getCameraInfo(cameraId, cameraInfo);
    if (cameraInfo.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {
        mFaceView.setFront(true);  // FaceView mirrors its drawing for the front camera
    }
    try {
        mCamera.setPreviewDisplay(surfaceView.getHolder());
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```

As soon as surfaceCreated() returns, the SurfaceView calls surfaceChanged(). The rest of the camera initialization and configuration therefore goes there:

```java
@Override
public void surfaceChanged(SurfaceHolder surfaceHolder, int format, int width, int height) {
    Log.e(TAG, "surfaceChanged");
    // We have no surface, return immediately:
    if (surfaceHolder.getSurface() == null) {
        return;
    }
    // Try to stop the current preview:
    try {
        mCamera.stopPreview();
    } catch (Exception e) {
        // Ignore...
    }
    configureCamera(width, height);
    setDisplayOrientation();
    setErrorCallback();
    // Create media.FaceDetector, keeping the preview's aspect ratio
    float aspect = (float) previewHeight / (float) previewWidth;
    faceDetector = new FaceDetector(prevSettingWidth, (int) (prevSettingWidth * aspect), MAX_FACE);
    // Everything is configured! Finally start the camera preview again:
    startPreview();
}
```
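surfaceChanged() sizes the FaceDetector as prevSettingWidth × (prevSettingWidth × previewHeight / previewWidth), so the downscaled frame keeps the preview's aspect ratio. The height can come out odd. For a 1280 × 720 preview, the tiers in setOptimalPreviewSize() pick 240, and 240 × 0.5625 = 135. That matters once the bitmap is rotated by 90° or 270° and that side becomes the width: android.media.FaceDetector is commonly reported to need an even-width RGB_565 bitmap.

The pure-Java sketch below shows the sizing with both sides rounded down to even numbers. It is an assumption-based illustration, not the author's code, and `DetectorSize` is a hypothetical name.

```java
/** Hypothetical helper: size of the downscaled bitmap handed to FaceDetector. */
final class DetectorSize {
    final int width;
    final int height;

    private DetectorSize(int width, int height) {
        this.width = width;
        this.height = height;
    }

    /**
     * Keeps the preview's aspect ratio and rounds both sides down to even numbers.
     * Assumption: FaceDetector wants an even width, and either side may become the
     * width after a 90/270 degree rotation.
     */
    static DetectorSize forPreview(int previewWidth, int previewHeight, int targetWidth) {
        float aspect = (float) previewHeight / (float) previewWidth;
        int w = targetWidth & ~1;                    // clear the lowest bit -> even
        int h = (int) (targetWidth * aspect) & ~1;   // cast first, then make even
        return new DetectorSize(w, h);
    }
}
```

For example, `DetectorSize.forPreview(1280, 720, 240)` gives 240 × 134 instead of 240 × 135.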

```java
public void configureCamera(int width, int height) {
    Camera.Parameters parameters = mCamera.getParameters();
    // Set the PreviewSize and AutoFocus:
    setOptimalPreviewSize(parameters, width, height);
    setAutoFocus(parameters);
    // And set the parameters:
    mCamera.setParameters(parameters);
}
```
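configureCamera() delegates to setOptimalPreviewSize(), which calls a `Utils.getOptimalPreviewSize()` helper that the post does not show. The sketch below is one plausible approach, not the author's implementation. Among the supported sizes, it picks the one whose aspect ratio is closest to the surface's, and breaks ties in favour of the larger frame.

Sizes are plain `{width, height}` int pairs so the logic runs without the Android SDK, and `PreviewSizeChooser` is a hypothetical name.

```java
import java.util.List;

/** Hypothetical stand-in for Utils.getOptimalPreviewSize(); sizes are {width, height}. */
final class PreviewSizeChooser {
    private PreviewSizeChooser() {}

    /** Size whose aspect ratio is closest to targetRatio (larger area wins ties), or null if none. */
    static int[] choose(List<int[]> supported, float targetRatio) {
        // Camera preview sizes are typically listed in landscape (width > height),
        // so compare against the landscape form of a portrait surface's ratio.
        float target = targetRatio < 1f ? 1f / targetRatio : targetRatio;
        int[] best = null;
        float bestDiff = Float.MAX_VALUE;
        for (int[] size : supported) {
            float diff = Math.abs((float) size[0] / size[1] - target);
            boolean larger = best != null && size[0] * size[1] > best[0] * best[1];
            if (best == null || diff < bestDiff || (diff == bestDiff && larger)) {
                best = size;
                bestDiff = diff;
            }
        }
        return best;
    }
}
```

With a portrait 1080 × 1920 surface, both 1920 × 1080 and 1280 × 720 match the 16:9 ratio exactly, and the sketch returns the larger 1920 × 1080.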

```java
private void setDisplayOrientation() {
    mDisplayRotation = Utils.getDisplayRotation(FaceRGBAct.this);
    mDisplayOrientation = Utils.getDisplayOrientation(mDisplayRotation, cameraId);
    mCamera.setDisplayOrientation(mDisplayOrientation);
    if (mFaceView != null) {
        mFaceView.setDisplayOrientation(mDisplayOrientation);
    }
}
```
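setDisplayOrientation() relies on `Utils.getDisplayRotation()` and `Utils.getDisplayOrientation()`, which the post does not show. The Android reference documentation for `Camera.setDisplayOrientation()` gives a standard calculation, restated below in pure Java. Here `sensorOrientation` stands in for `Camera.CameraInfo.orientation` and `frontFacing` for `facing == CAMERA_FACING_FRONT`. `CameraOrientation` is a hypothetical name, and whether the author's Utils does exactly this is an assumption.

```java
/** Pure-Java restatement of the calculation documented for Camera.setDisplayOrientation(). */
final class CameraOrientation {
    private CameraOrientation() {}

    /**
     * @param displayRotationDegrees screen rotation in degrees (0, 90, 180 or 270)
     * @param sensorOrientation      stand-in for Camera.CameraInfo.orientation
     * @param frontFacing            stand-in for facing == CAMERA_FACING_FRONT
     * @return clockwise rotation to pass to Camera.setDisplayOrientation()
     */
    static int displayOrientation(int displayRotationDegrees, int sensorOrientation, boolean frontFacing) {
        if (frontFacing) {
            int result = (sensorOrientation + displayRotationDegrees) % 360;
            return (360 - result) % 360;  // compensate for the front camera's mirroring
        }
        return (sensorOrientation - displayRotationDegrees + 360) % 360;
    }
}
```

A typical phone has a back sensor at 90° and a front sensor at 270°. Held upright (display rotation 0), both cameras therefore need a 90° display orientation.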

```java
private void setErrorCallback() {
    mCamera.setErrorCallback(mErrorCallback);
}

private void setOptimalPreviewSize(Camera.Parameters cameraParameters, int width, int height) {
    List<Camera.Size> previewSizes = cameraParameters.getSupportedPreviewSizes();
    float targetRatio = (float) width / height;
    Camera.Size previewSize = Utils.getOptimalPreviewSize(this, previewSizes, targetRatio);
    previewWidth = previewSize.width;
    previewHeight = previewSize.height;
    Log.e(TAG, "previewWidth: " + previewWidth);
    Log.e(TAG, "previewHeight: " + previewHeight);
    /*
     * Calculate the size to scale the full frame down to a smaller bitmap.
     * Detecting faces in the scaled bitmap is faster than in the full bitmap.
     * The smaller the image, the faster the detection, but the shorter the
     * distance at which a face can still be detected, so choose the size
     * that fits your purpose.
     */
    if (previewWidth / 4 > 360) {
        prevSettingWidth = 360;
        prevSettingHeight = 270;
    } else if (previewWidth / 4 > 320) {
        prevSettingWidth = 320;
        prevSettingHeight = 240;
    } else if (previewWidth / 4 > 240) {
        prevSettingWidth = 240;
        prevSettingHeight = 160;
    } else {
        prevSettingWidth = 160;
        prevSettingHeight = 120;
    }
    Log.e(TAG, "prevSettingWidth: " + prevSettingWidth);
    Log.e(TAG, "prevSettingHeight: " + prevSettingHeight);
    cameraParameters.setPreviewSize(previewSize.width, previewSize.height);
    mFaceView.setPreviewWidth(previewWidth);
    mFaceView.setPreviewHeight(previewHeight);
}
```
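The tiers in setOptimalPreviewSize() choose the largest detection width (360, 320 or 240 px) that is smaller than a quarter of the preview width, falling back to 160 px. The detection thread later multiplies each detected coordinate by previewWidth / detectionWidth to map it back onto the preview.

Both steps are restated below in pure Java. The thresholds are copied from the code above; `DetectionScale` is a hypothetical name.

```java
/** Hypothetical helper mirroring the detection-width tiers and the scale-back step. */
final class DetectionScale {
    private static final int[] TIERS = {360, 320, 240};  // checked from largest to smallest

    private DetectionScale() {}

    /** Largest tier smaller than a quarter of the preview width, or 160 as the floor. */
    static int detectionWidth(int previewWidth) {
        for (int tier : TIERS) {
            if (previewWidth / 4 > tier) {  // integer division, as in the original
                return tier;
            }
        }
        return 160;
    }

    /** Maps a coordinate measured in the small bitmap back to preview pixels. */
    static float toPreview(float coordinate, int previewSize, int detectionSize) {
        return coordinate * ((float) previewSize / detectionSize);
    }
}
```

A 1920-px-wide preview is detected at 360 px and a 1280-px one at 240 px (1280 / 4 = 320 is not greater than 320). A face found at x = 100 in a 320-px bitmap lands at x = 400 on a 1280-px preview.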

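Key point

The onPreviewFrame() callback: once the camera is configured and previewing, this callback fires continuously and delivers the data of every preview frame. To get the image, simply take it from here. Watch the performance, though. Because the callback runs for every frame, it must not do any time-consuming work; hand the frame to a worker thread instead, as follows: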

@Override

public void onPreviewFrame(byte[] bytes, Camera camera) {

if(!isThreadWorking){

if (counter == 0)

start = System.currentTimeMillis();

isThreadWorking=true;

waitForFdetThreadComplete();

detectThread=new FaceDetectThread(handler,this);

detectThread.setData(bytes);

detectThread.start();

}

} private class FaceDetectThread extends Thread{

private Handler handler;

private byte[] data=null;

private Context context;

private Bitmap mBitmap;

static final int REFRESH_COMPLETE=0x1112;

public FaceDetectThread(Handler handler, Context context) {

this.context = context;

this.handler = handler;

}

public void setData(byte[] data) {

this.data = data;

}

@Override

public void run() {

float aspect = (float)previewHeight / (float)previewWidth;

int w = prevSettingWidth;

int h = (int)(prevSettingWidth * aspect);

Bitmap bitmap = Bitmap.createBitmap(previewWidth, previewHeight, Bitmap.Config.RGB_565);

// face detection: first convert the image from NV21 to RGB_565

YuvImage yuv = new YuvImage(data, ImageFormat.NV21,

bitmap.getWidth(),

bitmap.getHeight(),

null);

// TODO: make rect a member and use it for width and height values above

Rect rectImage = new Rect(0, 0, bitmap.getWidth(), bitmap.getHeight());

// TODO: use a threaded option or a circular buffer for converting streams?

//see http://ostermiller.org/convert_java_outputstream_inputstream.html

ByteArrayOutputStream baout = new ByteArrayOutputStream();

if (!yuv.compressToJpeg(rectImage, 100, baout)) {

Log.e("CreateBitmap", "compressToJpeg failed");

}

BitmapFactory.Options bfo = new BitmapFactory.Options();

bfo.inPreferredConfig = Bitmap.Config.RGB_565;

bitmap = BitmapFactory.decodeStream(

new ByteArrayInputStream(baout.toByteArray()), null, bfo);

/* Log.i(TAG, "bitmapWidth****" + bitmap.getWidth());

Log.i(TAG, "bitmapHeight****" + bitmap.getHeight());

*/

Bitmap bmp = Bitmap.createScaledBitmap(bitmap, 320, 240, false);

/* Log.i(TAG, "**********************************");

Log.i(TAG, "bitmapWidth****" + bmp.getWidth());

Log.i(TAG, "bitmapHeight****" + bmp.getHeight());*/

float xScale = (float) previewWidth / (float) prevSettingWidth;

float yScale = (float) previewHeight / (float) h;

Camera.CameraInfo info = new Camera.CameraInfo();

Camera.getCameraInfo(cameraId, info);

int rotate = mDisplayOrientation;

//Log.e("***ortate***",rotate+"");

if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT && mDisplayRotation % 180 == 0) {

if (rotate + 180 > 360) {

//Log.e("***ortate-180***",rotate+"");

rotate = rotate - 180;

} else

//Log.e("***ortate+180***",rotate+"");

rotate = rotate + 180;

}

switch (rotate) {

case 90:

bmp = ImageUtils.rotate(bmp, 90);

xScale = (float) previewHeight / bmp.getWidth();

yScale = (float) previewWidth / bmp.getHeight();

break;

case 180:

bmp = ImageUtils.rotate(bmp, 180);

break;

case 270:

bmp = ImageUtils.rotate(bmp, 270);

xScale = (float) previewHeight / (float) h;

yScale = (float) previewWidth / (float) prevSettingWidth;

break;

}

/* Log.i(TAG, "%%%%%%%%%%%%%%%%%%%%%%");

Log.i(TAG, "bitmapWidth****" + bmp.getWidth());

Log.i(TAG, "bitmapHeight****" + bmp.getHeight());*/

FaceApp.getInstance().getNetInfo().runOnThreadServer(Constant.DETECTED,bmp,mHandler);

faceDetector = new FaceDetector(bmp.getWidth(), bmp.getHeight(), MAX_FACE);

FaceDetector.Face[] fullResults = new FaceDetector.Face[MAX_FACE];

faceDetector.findFaces(bmp, fullResults);

for (int i = 0; i < MAX_FACE; i++) {

if (fullResults[i] == null) {

faces[i].clear();

} else {

PointF mid = new PointF();

fullResults[i].getMidPoint(mid);

mid.x *= xScale;

mid.y *= yScale-0.5;

float eyesDis = fullResults[i].eyesDistance() * xScale;

float confidence = fullResults[i].confidence();

float pose = fullResults[i].pose(android.media.FaceDetector.Face.EULER_Y);

int idFace = Id;

/*Rect rect = new Rect(

(int) (mid.x - eyesDis * 1.20f),

(int) (mid.y - eyesDis * 0.10f),

(int) (mid.x + eyesDis * 1.20f),

(int) (mid.y + eyesDis * 1.85f));*/

Rect rect = new Rect(

(int) (mid.x - eyesDis * 1.20f),

(int) (mid.y - eyesDis * 0.30f),

(int) (mid.x + eyesDis * 1.20f),

(int) (mid.y + eyesDis * 1.85f));

/**

* Only detect face size > 100x100

*/

if (rect.height() * rect.width() > 100 * 100) {

for (int j = 0; j < MAX_FACE; j++) {

float eyesDisPre = faces_previous[j].eyesDistance();

PointF midPre = new PointF();

faces_previous[j].getMidPoint(midPre);

RectF rectCheck = new RectF(

(midPre.x - eyesDisPre * 1.5f),

(midPre.y - eyesDisPre * 1.15f),

(midPre.x + eyesDisPre * 1.5f),

(midPre.y + eyesDisPre * 1.85f));

if (rectCheck.contains(mid.x, mid.y) &&

(System.currentTimeMillis() - faces_previous[j].getTime()) < 1000) {

idFace = faces_previous[j].getId();

break;

}

}

if (idFace == Id)

Id++;

faces[i].setFace(idFace, mid, eyesDis, confidence, pose, System.currentTimeMillis());

faces_previous[i].set(faces[i].getId(), faces[i].getMidEye(),

faces[i].eyesDistance(), faces[i].getConfidence(),

faces[i].getPose(), faces[i].getTime());

//

// if focus in a face 5 frame -> take picture face display in RecyclerView

// because of some first frame have low quality

//

if (facesCount.get(idFace) == null) {

facesCount.put(idFace, 0);

} else {

int count = facesCount.get(idFace) + 1;

if (count <= 5)

facesCount.put(idFace, count);

//

// Crop Face to display in RecylerView

//

/* if (count == 5) {

mBitmap = ImageUtils.cropFace(faces[i], bitmap, rotate);

if (mBitmap != null) {

handler.post(new Runnable() {

public void run() {

//FaceObServernotice.getInstance().notifyObserver(0,mbitmap);

mHandler.sendEmptyMessageDelayed(REFRESH_COMPLETE, 0);

// imagePreviewAdapter.add(mbitmap);

}

});

}

}*/

}

}

}

}

handler.post(new Runnable() {

public void run() {

//send face to FaceView to draw rect

mFaceView.setFaces(faces);

//calculate FPS

end = System.currentTimeMillis();

counter++;

double time = (double) (end - start) / 1000;

if (time != 0)

fps = counter / time;

mFaceView.setFPS(fps);

if (counter == (Integer.MAX_VALUE - 1000))

counter = 0;

isThreadWorking = false;

}

});

}

/*private Handler mHandler = new Handler() {

public void handleMessage(android.os.Message msg) {

switch (msg.what) {

case REFRESH_COMPLETE:

if(ScanApp.scanInfo==null){

*//* Intent intent = new Intent(FaceDetectRGBActivity.this, MainAct.class);

startActivity( intent);*//*

setResult(RESULT_OK);

}else{

Intent intent = new Intent(FaceDetectRGBActivity.this, FaceVerifyAct.class);

ByteArrayOutputStream output = new ByteArrayOutputStream();//初始化一个流对象

//把bitmap100%高质量压缩 到 output对象里

mbitmap.compress(Bitmap.CompressFormat.PNG, 100, output);

//result = output.toByteArray();//转换成功了 result就是一个bit的资源数组

intent.putExtra("bitmap",output.toByteArray());

startActivity(intent);

}

FaceDetectRGBActivity.this.finish();

break;

}

}

};*/

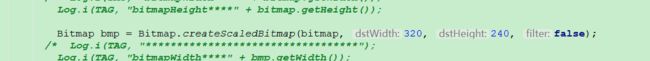

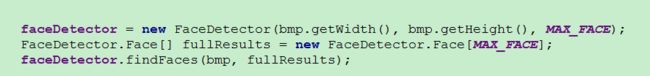

}注意再此处将图片压缩为320* 240 具体可根据自己的摄像头来调试,
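For each detected face, FaceDetectThread applies a few rules:

- It builds a box from the eye midpoint and eye distance: 1.2 × eyesDistance to each side, 0.3 × above and 1.85 × below.
- It ignores boxes not larger than 100 × 100 px.
- It reuses a previous face's ID when the new midpoint falls inside that face's enlarged box (1.5 × to each side, 1.15 × above, 1.85 × below) and the earlier sighting is less than one second old.

The sketch below restates those rules in pure Java so they can be checked off-device. `FaceTracker`, `Box` and `Sighting` are hypothetical names. PointF/RectF are replaced by plain floats, and keeping sightings in a list rather than the fixed `faces_previous` array is a simplification.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

/** Hypothetical off-device restatement of the box and ID-matching rules in FaceDetectThread. */
final class FaceTracker {
    /** Axis-aligned box; like RectF.contains(), left/top are inclusive and right/bottom exclusive. */
    static final class Box {
        final float left, top, right, bottom;

        Box(float left, float top, float right, float bottom) {
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }

        float area() {
            return (right - left) * (bottom - top);
        }

        boolean contains(float x, float y) {
            return x >= left && x < right && y >= top && y < bottom;
        }
    }

    /** Last sighting of a face, in preview pixels. */
    private static final class Sighting {
        final int id;
        final float midX, midY, eyesDistance;
        final long timeMillis;

        Sighting(int id, float midX, float midY, float eyesDistance, long timeMillis) {
            this.id = id;
            this.midX = midX;
            this.midY = midY;
            this.eyesDistance = eyesDistance;
            this.timeMillis = timeMillis;
        }
    }

    private final List<Sighting> recent = new ArrayList<>();
    private int nextId = 0;

    /** Face box built from the eye midpoint and eye distance. */
    static Box faceBox(float midX, float midY, float eyes) {
        return new Box(midX - eyes * 1.20f, midY - eyes * 0.30f, midX + eyes * 1.20f, midY + eyes * 1.85f);
    }

    /** Returns a stable face ID, or -1 when the face box is not larger than 100 x 100 px. */
    int assignId(float midX, float midY, float eyes, long nowMillis) {
        if (faceBox(midX, midY, eyes).area() <= 100 * 100) {
            return -1;
        }
        // Faces not seen during the last second can no longer be matched.
        recent.removeIf(s -> nowMillis - s.timeMillis >= 1000);
        int id = -1;
        for (Iterator<Sighting> it = recent.iterator(); it.hasNext(); ) {
            Sighting s = it.next();
            Box check = new Box(s.midX - s.eyesDistance * 1.5f, s.midY - s.eyesDistance * 1.15f,
                    s.midX + s.eyesDistance * 1.5f, s.midY + s.eyesDistance * 1.85f);
            if (check.contains(midX, midY)) {
                id = s.id;
                it.remove();  // replaced by the newer sighting below
                break;
            }
        }
        if (id == -1) {
            id = nextId++;  // no recent match: treat it as a new face
        }
        recent.add(new Sighting(id, midX, midY, eyes, nowMillis));
        return id;
    }
}
```

A face that moves 10 px within half a second keeps its ID. The same position seen again 1.5 s later gets a fresh one.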

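For the complete code, see the repository below. It also includes Huawei Cloud face recognition and liveness detection; remove those parts and what remains is the native face detection: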

https://github.com/lvzongning/android-FaceDetected/tree/5dcb9ed49387b0bbc59fd6eaad61c471c247dea8
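The onPreviewFrame() callback above uses the isThreadWorking flag to skip frames while detection runs, but it still creates a new Thread for every processed frame. A common alternative is to reuse a single background thread and drop frames while it is busy.

The sketch below shows that pattern with plain java.util.concurrent. It is not the author's code: `FrameDropper` is a hypothetical name, and the `Consumer<byte[]>` stands in for the work FaceDetectThread.run() does.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Consumer;

/** Hypothetical frame dropper: one reusable worker; frames arriving while it is busy are skipped. */
final class FrameDropper {
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private final AtomicBoolean busy = new AtomicBoolean(false);
    private final Consumer<byte[]> detector;

    FrameDropper(Consumer<byte[]> detector) {
        this.detector = detector;
    }

    /** Call from onPreviewFrame(); returns false when the frame is dropped. */
    boolean offer(byte[] frame) {
        if (!busy.compareAndSet(false, true)) {
            return false;  // previous frame still being processed
        }
        byte[] copy = frame.clone();  // the camera may reuse its preview buffers
        worker.execute(() -> {
            try {
                detector.accept(copy);
            } finally {
                busy.set(false);  // ready for the next frame
            }
        });
        return true;
    }

    /** Stops the worker and waits up to timeoutMillis for the current frame to finish. */
    boolean shutdown(long timeoutMillis) {
        worker.shutdown();
        try {
            return worker.awaitTermination(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

compareAndSet() makes the "is the worker free?" check and the "mark it busy" step a single atomic operation. The finally block guarantees the flag is released even if detection throws, so a failed frame cannot stall the pipeline.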