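Prerequisite: a completed MGR deployment

For the MGR deployment, see: MGR single-primary deployment

In addition, the MGR secondary nodes must be set to read_only=1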
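A minimal sketch of that setting, to be run on each secondary node (172.17.100.103 and 172.17.100.104) as a sufficiently privileged user such as root. Note that `SET GLOBAL` does not survive a restart, so putting `read_only=1` in my.cnf as well is one way to keep it.

```sql
-- Run on each MGR secondary node.
SET GLOBAL read_only = 1;

-- Verify: the monitoring view used later reads both of these variables.
SELECT @@global.read_only, @@global.super_read_only;
```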

Architecture

ProxySQL: 172.17.100.101

MGR primary: 172.17.100.101

MGR secondaries (two): 172.17.100.103, 172.17.100.104

Deploying ProxySQL

#Download and install the latest ProxySQL 1.4.x release

(This part is excerpted from the 骏马金龙 blog: http://www.cnblogs.com/f-ck-need-u/p/9278818.html)

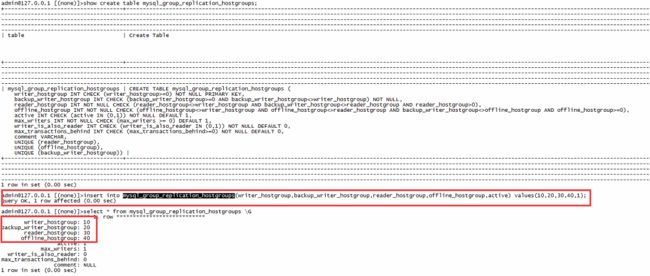

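# Target file assumed: the redirect was lost from the original text;
# /etc/yum.repos.d/proxysql.repo is the conventional location for a yum repo file.
cat <<EOF | tee /etc/yum.repos.d/proxysql.repo
[proxysql_repo]
name= ProxySQL
baseurl=http://repo.proxysql.com/ProxySQL/proxysql-1.4.x/centos/\$releasever
gpgcheck=1
gpgkey=http://repo.proxysql.com/ProxySQL/repo_pub_key
EOF
yum install -y proxysql

ProxySQL operations are divided into three layers:

The top layer is the runtime layer; once data is loaded into this layer it takes effect.
The middle layer is the memory layer, where the contents of the various tables are written.
The bottom layer is the disk layer, where the data is written to disk for persistence.

Following Mr. Wu's (吴总) recommendation, it is best to first write the data into the tables in the memory layer, confirm that it is correct, save it to the disk layer, and only then load it into the runtime layer so that it takes effect.

Many documents online load to runtime first and save to disk afterwards. Personally I find Mr. Wu's approach more rigorous (although, since this was a test environment, I actually ran load first in my own experiment →_→).

#The relevant accounts already exist in the production MySQL database

monitor is the front-end monitoring account
run is the back-end application account

The privileges are not strict: to keep the experiment running smoothly, both accounts were given ALL PRIVILEGES. In real work, the monitoring account only needs the SELECT privilege.

#ProxySQL had been deployed here before, so the old ProxySQL has to be removed and deployed again

Delete the ProxySQL configuration and paths

#Install ProxySQL with yum

#Configure /etc/proxysql.cnf

#Start ProxySQL and log in as admin (port 6032)

#Configure the monitoring account

set mysql-monitor_username='monitor';
set mysql-monitor_password='beacon';

The monitoring account and password configured here match the ones configured in proxysql.cnf.

#Configure the default hostgroup information

insert into mysql_group_replication_hostgroups(writer_hostgroup,backup_writer_hostgroup,reader_hostgroup,offline_hostgroup,active) values(10,20,30,40,1);

The hostgroup IDs have the following meaning:
Writer group: 10
Backup writer group: 20
Reader group: 30
Offline group (unavailable): 40

#Configure the user (mainly adding the application-side user, run, and assigning it to writer group 10)

insert into mysql_users(username,password,default_hostgroup) values('run','beacon',10);

#Configure the backend nodes

The primary node is placed in writer group 10, and the secondary nodes in read-only group 30.

insert into mysql_servers(hostgroup_id,hostname,port,comment) values(10,'172.17.100.101',3306,'write');
insert into mysql_servers(hostgroup_id,hostname,port,comment) values(30,'172.17.100.103',3306,'read');
insert into mysql_servers(hostgroup_id,hostname,port,comment) values(30,'172.17.100.104',3306,'read');

#Configure the read/write splitting rules

insert into mysql_query_rules(rule_id,active,match_digest,destination_hostgroup,apply) values(1,1,'^SELECT.*FOR UPDATE$',10,1);
insert into mysql_query_rules(rule_id,active,match_digest,destination_hostgroup,apply) values(2,1,'^SELECT',30,1);
select rule_id,active,match_digest,destination_hostgroup,apply from mysql_query_rules;

#Save to disk and load to runtime

In total, we have touched 5 tables:
mysql_users
mysql_servers
mysql_query_rules
global_variables
mysql_group_replication_hostgroups

The first 4 all need save and load operations (mysql_group_replication_hostgroups is saved and loaded together with mysql servers).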
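Before the save and load step, the in-memory tables can be reviewed from the admin interface (port 6032), which is the "confirm the data is correct" part of the workflow recommended above. The queries below are a minimal sketch that assumes the table and column names of ProxySQL 1.4.x; they only read the memory layer and change nothing.

```sql
-- Monitoring credentials held in the memory layer.
SELECT variable_name, variable_value
FROM global_variables
WHERE variable_name IN ('mysql-monitor_username', 'mysql-monitor_password');

-- Hostgroup layout for Group Replication (expected: 10, 20, 30, 40, active = 1).
SELECT writer_hostgroup, backup_writer_hostgroup, reader_hostgroup, offline_hostgroup, active
FROM mysql_group_replication_hostgroups;

-- Application user and its default hostgroup.
SELECT username, default_hostgroup, active FROM mysql_users;

-- Backend nodes and the hostgroup each one is assigned to.
SELECT hostgroup_id, hostname, port, status, comment
FROM mysql_servers
ORDER BY hostgroup_id, hostname;

-- Routing rules, in the order they are evaluated.
SELECT rule_id, active, match_digest, destination_hostgroup, apply
FROM mysql_query_rules
ORDER BY rule_id;
```

Rule order matters here: rule 1 (`^SELECT.*FOR UPDATE$`) has to come before rule 2 (`^SELECT`), otherwise locking reads would be sent to the reader group.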
save persists the in-memory data to disk; load loads the in-memory data into runtime so that it takes effect.

save mysql users to disk;
save mysql servers to disk;
save mysql query rules to disk;
save mysql variables to disk;
save admin variables to disk;
load mysql users to runtime;
load mysql servers to runtime;
load mysql query rules to runtime;
load mysql variables to runtime;
load admin variables to runtime;

#Log in with the application account on port 6033; show databases returns a result, which proves the connection path works

Terminology used in the test sections:
Monitoring side: log in to port 6032 as the admin user
Application side: log in to port 6033 as the run user
Node side: log in locally as the root user

#Add a monitoring script to the MySQL instance: addition_to_sys.sql

The script content is as follows:

USE sys;

DELIMITER $$

-- Return b when a is 0, otherwise a.
CREATE FUNCTION IFZERO(a INT, b INT)
RETURNS INT
DETERMINISTIC
RETURN IF(a = 0, b, a)$$

-- Like LOCATE, but return LENGTH(haystack) + 1 when needle is not found.
CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)
RETURNS INT
DETERMINISTIC
RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))
RETURNS TEXT(10000)
DETERMINISTIC
RETURN GTID_SUBTRACT(g, '')$$

-- Count the transactions contained in a GTID set.
CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))
RETURNS INT
DETERMINISTIC
BEGIN
  DECLARE result BIGINT DEFAULT 0;
  DECLARE colon_pos INT;
  DECLARE next_dash_pos INT;
  DECLARE next_colon_pos INT;
  DECLARE next_comma_pos INT;
  SET gtid_set = GTID_NORMALIZE(gtid_set);
  SET colon_pos = LOCATE2(':', gtid_set, 1);
  WHILE colon_pos != LENGTH(gtid_set) + 1 DO
    SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);
    SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);
    SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);
    IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN
      SET result = result +
        SUBSTR(gtid_set, next_dash_pos + 1,
               LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -
        SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;
    ELSE
      SET result = result + 1;
    END IF;
    SET colon_pos = next_colon_pos;
  END WHILE;
  RETURN result;
END$$

-- Number of transactions received by the applier channel but not yet executed.
CREATE FUNCTION gr_applier_queue_length()
RETURNS INT
DETERMINISTIC
BEGIN
  RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT
    Received_transaction_set FROM performance_schema.replication_connection_status
    WHERE Channel_name = 'group_replication_applier' ), (SELECT
    @@global.GTID_EXECUTED) )));
END$$

-- 'YES' when this member is ONLINE and fewer than half of the group members are not ONLINE.
CREATE FUNCTION gr_member_in_primary_partition()
RETURNS VARCHAR(3)
DETERMINISTIC
BEGIN
  RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM
    performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=
    ((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),
    'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN
    performance_schema.replication_group_member_stats USING(member_id));
END$$

-- read_only is 'YES' unless both read_only and super_read_only are OFF.
CREATE VIEW gr_member_routing_candidate_status AS SELECT
  sys.gr_member_in_primary_partition() as viable_candidate,
  IF( (SELECT (SELECT GROUP_CONCAT(variable_value) FROM
    performance_schema.global_variables WHERE variable_name IN ('read_only',
    'super_read_only')) != 'OFF,OFF'), 'YES', 'NO') as read_only,
  sys.gr_applier_queue_length() as transactions_behind,
  Count_Transactions_in_queue as 'transactions_to_cert'
  from performance_schema.replication_group_member_stats;$$

DELIMITER ;

#On the node side, monitor through the system view sys.gr_member_routing_candidate_status

select * from sys.gr_member_routing_candidate_status;

Primary node

Secondary node

#Monitoring on the monitoring side looks like this

select hostname,port,viable_candidate,read_only,transactions_behind,error from mysql_server_group_replication_log order by time_start_us desc limit 6;

#Run operations on the application side

#Observe the routing status on the monitoring side

select hostgroup,digest_text from stats_mysql_query_digest;

The read/write splitting test succeeded!

#Shut down the primary node directly

#Run the query on the monitoring side

The primary node 101 has been removed, and the secondary node 104 has become writable, which means 104 was automatically promoted to primary.
The hostgroup of the former primary 101 has changed to 40 and its status has changed to shunned, confirming that the node is unavailable.
The hostgroup of the secondary node 104 has changed from 30 to 10.
DML operations run from the application side are not affected.

Restart node 101

group_replication has to be started manually on 101.
101 is recognized by ProxySQL, rejoins monitoring, and becomes a secondary node.

The failover test succeeded!

Logic of ProxySQL's multi-layer configuration structure

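The actual process of setting up and configuring ProxySQL + MGR + read/write splitting

Verifying ProxySQL functionality

Monitoring setup

Read/write splitting test

Failover test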
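The monitoring-side and node-side checks in the failover test can be sketched as follows. The queries used in the original test were not preserved in the text, so these statements are an assumption about what could be run; they rely only on tables that exist in ProxySQL 1.4.x and in MySQL Group Replication.

```sql
-- Monitoring side (admin user, port 6032): after the primary is shut down,
-- check which hostgroup and status each backend has at runtime.
SELECT hostgroup_id, hostname, port, status
FROM runtime_mysql_servers
ORDER BY hostgroup_id, hostname;

-- Node side, on 172.17.100.101 after mysqld has been restarted:
-- rejoin the group manually.
START GROUP_REPLICATION;

-- Node side, on any member: confirm that all three members are ONLINE again.
SELECT member_host, member_port, member_state
FROM performance_schema.replication_group_members;
```

Running the first query again after 101 has rejoined should show it back in a reader hostgroup, matching the behavior described in the test.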