Hadoop Self-Study Diary--2. Setting Up a Hadoop Cluster

hadoop自学日记–2.hadoop集群环境搭建

Environment

I used an ordinary Windows 7 laptop and installed Hadoop on CentOS virtual machines created with VirtualBox.

- VirtualBox: 6.0.8 r130520 (Qt5.6.2)
- CentOS: CentOS Linux release 7.6.1810 (Core)
- JDK: 1.8.0_202
- Hadoop: 2.6.5

Cluster plan

- One master node, acting as the NameNode in HDFS and the ResourceManager in YARN.
- Three data nodes, data1, data2 and data3, each acting as a DataNode in HDFS and a NodeManager in YARN.

| Name | IP | HDFS | YARN |
|---|---|---|---|
| master | 192.168.37.200 | NameNode | ResourceManager |
| data1 | 192.168.37.201 | DataNode | NodeManager |
| data2 | 192.168.37.202 | DataNode | NodeManager |
| data3 | 192.168.37.203 | DataNode | NodeManager |
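The plan table is the single source for several files written later, namely /etc/hosts on every machine and the slaves file on master. Below is a small Python sketch that derives both from the table so they cannot drift apart. The CLUSTER dict and the helper names are my own illustration, not part of Hadoop.

```python
# Cluster plan from the table: hostname -> (IP, HDFS role, YARN role)
CLUSTER = {
    "master": ("192.168.37.200", "NameNode", "ResourceManager"),
    "data1": ("192.168.37.201", "DataNode", "NodeManager"),
    "data2": ("192.168.37.202", "DataNode", "NodeManager"),
    "data3": ("192.168.37.203", "DataNode", "NodeManager"),
}


def hosts_lines(cluster):
    """Lines for /etc/hosts: '<ip> <hostname>' for every node."""
    return [f"{ip} {name}" for name, (ip, _hdfs, _yarn) in cluster.items()]


def slaves_lines(cluster):
    """Lines for etc/hadoop/slaves: every host that runs a DataNode."""
    return [name for name, (_ip, hdfs, _yarn) in cluster.items() if hdfs == "DataNode"]


if __name__ == "__main__":
    print("\n".join(hosts_lines(CLUSTER)))
    print("---")
    print("\n".join(slaves_lines(CLUSTER)))
```

Adding a fourth data node then means adding one line to CLUSTER and regenerating both files.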

Creating the data nodes

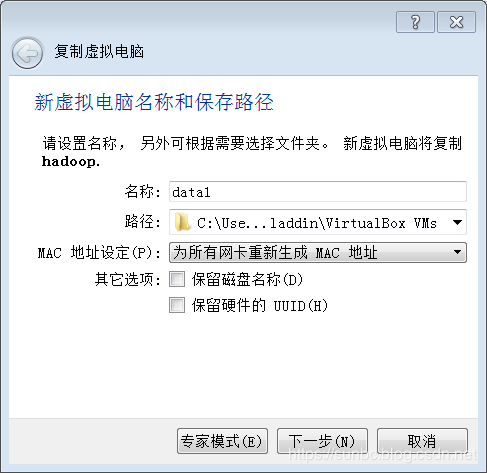

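Use the VM created in the previous post as a template and clone it to create data1. Be sure to regenerate the MAC addresses of all network adapters.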

See: Hadoop Self-Study Diary--1. Setting Up a Single-Node Hadoop Environment

When the clone is done, start the data1 VM and modify the following configuration files.

1.core-site.xml

Edit the global configuration file core-site.xml so that the HDFS address points at master instead of the local machine:

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master:9000</value>
  </property>
</configuration>
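Every *-site.xml file uses the same configuration / property / name / value shape, and hand-editing is where unclosed tags creep in. Below is a minimal Python sketch that generates that shape from a dict. render_config is a hypothetical helper of my own, not a Hadoop tool.

```python
from xml.sax.saxutils import escape


def render_config(props):
    """Render a Hadoop *-site.xml body from a dict of property name -> value."""
    lines = ["<configuration>"]
    for name, value in props.items():
        lines += [
            "  <property>",
            f"    <name>{escape(name)}</name>",
            f"    <value>{escape(str(value))}</value>",  # escape <, >, & in values
            "  </property>",
        ]
    lines.append("</configuration>")
    return "\n".join(lines)


if __name__ == "__main__":
    # core-site.xml for every node: HDFS is served by master on port 9000
    print(render_config({"fs.default.name": "hdfs://master:9000"}))
```

The same helper can emit yarn-site.xml, mapred-site.xml and hdfs-site.xml if you pass their properties. fs.default.name is the deprecated Hadoop 1 name of fs.defaultFS, but Hadoop 2.6.5 still honors it.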

2.yarn-site.xml

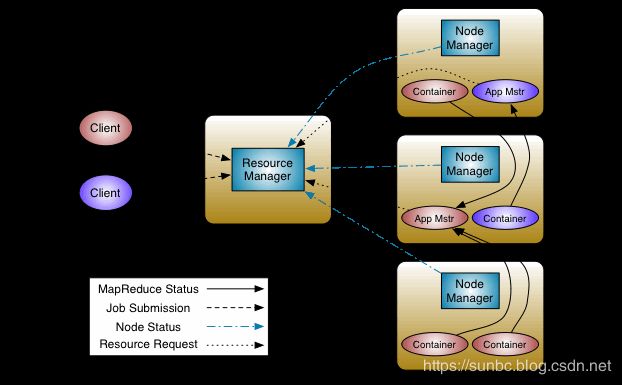

修改yarn配置yarn-site.xml,分别添加NodeManager,ApplicationMaster,客户端的连接信息。

详细架构如下:

参考:Apache Hadoop YARN

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master:8025</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master:8050</value>
  </property>
</configuration>
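Hadoop does not complain about property names it does not recognize, so a misspelled key simply has no effect. YARN reads yarn.nodemanager.aux-services, with a hyphen. Below is a Python sketch that parses a yarn-site.xml string and flags the two mistakes most likely here: the wrong aux-services key, or a ResourceManager address that does not point at master. load_config and check_yarn_site are my own hypothetical helpers.

```python
import xml.etree.ElementTree as ET


def load_config(xml_text):
    """Parse a Hadoop *-site.xml string into a dict of property name -> value."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def check_yarn_site(conf, rm_host="master"):
    """Return a list of problems in a yarn-site.xml dict (empty list = looks fine)."""
    problems = []
    if conf.get("yarn.nodemanager.aux-services") != "mapreduce_shuffle":
        problems.append("yarn.nodemanager.aux-services should be mapreduce_shuffle")
    for key in ("yarn.resourcemanager.resource-tracker.address",
                "yarn.resourcemanager.scheduler.address",
                "yarn.resourcemanager.address"):
        # every ResourceManager address must be host:port on the master node
        if not (conf.get(key) or "").startswith(rm_host + ":"):
            problems.append(f"{key} should point at {rm_host}")
    return problems
```

On a node, call check_yarn_site(load_config(open("/software/hadoop-2.6.5/etc/hadoop/yarn-site.xml").read())). An empty list means none of these checks failed.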

3.mapred-site.xml

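Edit the MapReduce configuration mapred-site.xml and point the job tracker address at master. mapreduce.framework.name only accepts a framework name such as yarn, not a host:port address. So it stays yarn, and the master address goes into mapred.job.tracker:

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>master:54311</value>
  </property>
</configuration>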
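mapreduce.framework.name selects where MapReduce jobs run. As far as I know, the values Hadoop 2.x recognizes are local, classic and yarn. Any other value, such as an address like master:54311, is not a framework name. Below is a tiny Python sketch of that check. check_framework_name is a hypothetical helper, and the value set is my reading of Hadoop 2.x, not an official list.

```python
# Framework names Hadoop 2.x recognizes for mapreduce.framework.name (assumed list)
VALID_FRAMEWORKS = {"local", "classic", "yarn"}


def check_framework_name(value):
    """Explain whether a mapreduce.framework.name value is usable on this cluster."""
    if value in VALID_FRAMEWORKS:
        return f"ok: jobs run on '{value}'"
    return (f"invalid: '{value}' is not one of {sorted(VALID_FRAMEWORKS)}; "
            "use 'yarn' to submit jobs to the ResourceManager")
```

For this cluster the only sensible value is yarn. With local, jobs would run inside the client JVM and never reach the NodeManagers.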

4.hdfs-site.xml

Edit the HDFS configuration hdfs-site.xml and remove the NameNode settings, since a data node only needs its DataNode directory:

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/software/hadoop-2.6.5/hadoop_data/hdfs/datanode</value>
  </property>
</configuration>
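With dfs.replication set to 3 and exactly three DataNodes, every block ends up on every data node. HDFS does not place two replicas of the same block on one DataNode, so each node holds a full copy and usable capacity is roughly one third of the raw disk. Below is a worked sketch of that arithmetic in Python. It assumes the Hadoop 2.x default dfs.blocksize of 128 MB, and replica_stats is my own hypothetical helper.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # assumed dfs.blocksize: the Hadoop 2.x default, 128 MB


def replica_stats(file_bytes, replication=3, datanodes=3):
    """Blocks, stored replicas and raw disk usage for one file under HDFS replication."""
    if replication > datanodes:
        # each DataNode holds at most one replica of a block,
        # so blocks could never reach the requested replication
        raise ValueError("dfs.replication exceeds the number of DataNodes")
    blocks = math.ceil(file_bytes / BLOCK_SIZE)
    return {
        "blocks": blocks,
        "replicas": blocks * replication,
        # the last block only occupies its real size, so raw usage is size x replication
        "raw_bytes": file_bytes * replication,
    }
```

For example, replica_stats(1024**3) for a 1 GB file gives 8 blocks, 24 stored replicas and 3 GB of raw disk across the three data nodes.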

Then clone the modified data1 VM three more times to create data2, data3 and master.

Configuring the network

Following the plan, give each VM's host-only adapter a static IP. Using data1 as an example:

Check the current network state:

[root@localhost ~]# ifconfig

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 10.11.91.160 netmask 255.255.255.0 broadcast 10.11.91.255
        inet6 fe80::cfb8:10a7:ca04:61ea prefixlen 64 scopeid 0x20<link>
        ether 08:00:27:41:1e:cf txqueuelen 1000 (Ethernet)
        RX packets 23859 bytes 2131126 (2.0 MiB)
        RX errors 0 dropped 3 overruns 0 frame 0
        TX packets 438 bytes 58284 (56.9 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

enp0s8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.37.104 netmask 255.255.255.0 broadcast 192.168.37.255
        inet6 fe80::7327:497d:15cd:6be0 prefixlen 64 scopeid 0x20<link>
        ether 08:00:27:14:8a:70 txqueuelen 1000 (Ethernet)
        RX packets 667 bytes 73822 (72.0 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 39 bytes 6314 (6.1 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
        device interrupt 16 base 0xd240

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

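Here enp0s3 is the bridged adapter used for Internet access and can be ignored. enp0s8 is the host-only adapter, and it is the one to configure.

Make a copy of the configuration file: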

[root@localhost ~]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]# ls

ifcfg-enp0s3 ifdown-eth ifdown-post ifdown-Team ifup-aliases ifup-ipv6 ifup-post ifup-Team init.ipv6-global
ifcfg-lo ifdown-ippp ifdown-ppp ifdown-TeamPort ifup-bnep ifup-isdn ifup-ppp ifup-TeamPort network-functions
ifdown ifdown-ipv6 ifdown-routes ifdown-tunnel ifup-eth ifup-plip ifup-routes ifup-tunnel network-functions-ipv6
ifdown-bnep ifdown-isdn ifdown-sit ifup ifup-ippp ifup-plusb ifup-sit ifup-wireless

[root@localhost network-scripts]# cp ifcfg-enp0s3 ifcfg-enp0s8

Edit it as follows:

[root@localhost network-scripts]# vim ifcfg-enp0s8

TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
PEERDNS="yes"
PEERROUTES="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_PEERDNS="yes"
IPV6_PEERROUTES="yes"
IPV6_FAILURE_FATAL="no"
NAME="enp0s8"
UUID="06cae6b3-98ea-4177-8de1-acee3e51f9a2"
DEVICE="enp0s8"
ONBOOT="yes"
IPADDR=192.168.37.201
NETMASK=255.255.255.0
GATEWAY=192.168.37.1
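Across the four machines, only IPADDR really changes in this file. Below is a Python sketch that prints the static-IP lines for each node from the cluster plan. It deliberately leaves out the other keys copied from ifcfg-enp0s3, such as UUID, which stay as they are on each machine. ifcfg_enp0s8 and PLAN are hypothetical names.

```python
def ifcfg_enp0s8(ip, netmask="255.255.255.0", gateway="192.168.37.1"):
    """Static-IP lines of ifcfg-enp0s8 for one node (rest of the file stays as copied)."""
    return "\n".join([
        'BOOTPROTO="static"',
        'NAME="enp0s8"',
        'DEVICE="enp0s8"',
        'ONBOOT="yes"',
        f"IPADDR={ip}",
        f"NETMASK={netmask}",
        f"GATEWAY={gateway}",
    ])


# One static host-only address per node, following the cluster plan
PLAN = {"master": "192.168.37.200", "data1": "192.168.37.201",
        "data2": "192.168.37.202", "data3": "192.168.37.203"}

if __name__ == "__main__":
    for host, ip in PLAN.items():
        print(f"# {host}: /etc/sysconfig/network-scripts/ifcfg-enp0s8")
        print(ifcfg_enp0s8(ip))
```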

Configuring hosts

[root@localhost network-scripts]# vim /etc/hosts

192.168.37.200 master
192.168.37.201 data1
192.168.37.202 data2
192.168.37.203 data3

Reboot for the new settings to take effect.

Configuring the master node

The master node only manages the cluster and does not store data, so its configuration needs a few changes.

hdfs-site.xml

master is only the NameNode, not a DataNode, so change its HDFS configuration:

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/software/hadoop-2.6.5/hadoop_data/hdfs/namenode</value>
  </property>
</configuration>

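masters and slaves

The masters file contains master. Strictly speaking, this file is a Hadoop 1 convention, where it listed the SecondaryNameNode hosts. The Hadoop 2.6.5 start scripts do not read it; the NameNode address comes from fs.default.name in core-site.xml.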

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/masters

master

The slaves file lists the hosts on which the start scripts launch a DataNode, and, through start-yarn.sh, a NodeManager:

[root@ ~]# vim /software/hadoop-2.6.5/etc/hadoop/slaves

data1
data2
data3

Reboot the master node.

Creating the HDFS directories

Using data1 as an example:

1. Connect to data1

[root@ ~]# ssh data1

2. Delete the old hdfs directory

[root@ ~]# rm -rf /software/hadoop-2.6.5/hadoop_data/hdfs/

3. Create the DataNode directory

[root@ ~]# mkdir -p /software/hadoop-2.6.5/hadoop_data/hdfs/datanode

Repeat the same steps on data2 and data3.
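Repeating the same two commands on each data node is easy to script from master. Below is a Python sketch that assumes passwordless root SSH from master to every data node; the ssh data1 step above and start-all.sh already rely on it. reset_commands and run are hypothetical helpers. By default run only prints the commands. Executing them wipes any existing HDFS data on those nodes.

```python
import shlex
import subprocess

DATA_NODES = ["data1", "data2", "data3"]
DATANODE_DIR = "/software/hadoop-2.6.5/hadoop_data/hdfs/datanode"


def reset_commands(hosts, data_dir=DATANODE_DIR):
    """ssh commands that wipe the old hdfs directory and recreate the DataNode directory."""
    hdfs_root = data_dir.rsplit("/", 1)[0]  # .../hadoop_data/hdfs
    return [["ssh", host, f"rm -rf {hdfs_root} && mkdir -p {data_dir}"] for host in hosts]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first host that fails
```

Call run(reset_commands(DATA_NODES)) to review what would happen, then run(reset_commands(DATA_NODES), dry_run=False) to do it.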

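Formatting the NameNode directory

On master, recreate the NameNode directory at the path set in dfs.namenode.name.dir:

[root@ ~]# rm -rf /software/hadoop-2.6.5/hadoop_data/hdfs

[root@ ~]# mkdir -p /software/hadoop-2.6.5/hadoop_data/hdfs/namenode

Format it: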

[root@ ~]# hadoop namenode -format

Configuring the hostname

Using data1 as an example:

[root@localhost network-scripts]# vim /etc/hostname

data1

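Reboot the machine for the change to take effect. Do the same on master, data2 and data3, each with its own name.

Starting the cluster

Use start-all.sh to start HDFS and YARN in one go. As its first output line says, the script is deprecated in favor of running start-dfs.sh and start-yarn.sh: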

[root@master ~]# start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
Starting namenodes on [master]
The authenticity of host 'master (192.168.37.200)' can't be established.
ECDSA key fingerprint is SHA256:sVo82fntVBJ6mhn1+oSp+1lLVknmE7s4JcMg4MVoLO0.
ECDSA key fingerprint is MD5:89:6d:c0:42:b1:21:79:07:c4:41:19:a2:0a:45:19:43.
Are you sure you want to continue connecting (yes/no)? yes
master: Warning: Permanently added 'master,192.168.37.200' (ECDSA) to the list of known hosts.
master: starting namenode, logging to /software/hadoop-2.6.5/logs/hadoop-root-namenode-..out
data2: starting datanode, logging to /software/hadoop-2.6.5/logs/hadoop-root-datanode-..out
data1: starting datanode, logging to /software/hadoop-2.6.5/logs/hadoop-root-datanode-..out
data3: starting datanode, logging to /software/hadoop-2.6.5/logs/hadoop-root-datanode-..out
Starting secondary namenodes [0.0.0.0]
0.0.0.0: starting secondarynamenode, logging to /software/hadoop-2.6.5/logs/hadoop-root-secondarynamenode-..out
starting yarn daemons
starting resourcemanager, logging to /software/hadoop-2.6.5/logs/yarn-root-resourcemanager-..out
data3: starting nodemanager, logging to /software/hadoop-2.6.5/logs/yarn-root-nodemanager-..out
data1: starting nodemanager, logging to /software/hadoop-2.6.5/logs/yarn-root-nodemanager-..out
data2: starting nodemanager, logging to /software/hadoop-2.6.5/logs/yarn-root-nodemanager-..out

Check the processes on the master node:

[root@master ~]# jps

3766 ResourceManager
3626 SecondaryNameNode
3452 NameNode
4061 Jps

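Check the processes on a data node. jps is called by its full path, presumably because a non-interactive ssh command does not load the login profile that adds the JDK to PATH: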

[root@master ~]# ssh data1 "/software/jdk1.8.0_202/bin/jps"

3462 NodeManager
3606 Jps
3369 DataNode
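Checking jps by eye on four machines gets tedious. Below is a Python sketch that parses jps output and reports which expected daemons are missing for each role. The expected sets mirror the cluster plan; SecondaryNameNode is included for master because start-dfs.sh started it there, as the log above shows. EXPECTED, running_daemons and missing_daemons are hypothetical names.

```python
# Daemons each role should show in `jps` once start-all.sh has finished
EXPECTED = {
    "master": {"NameNode", "SecondaryNameNode", "ResourceManager"},
    "data": {"DataNode", "NodeManager"},
}


def running_daemons(jps_output):
    """Parse `jps` output ('<pid> <MainClass>' per line) into a set of class names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1] != "Jps":  # skip jps itself and odd lines
            names.add(parts[1])
    return names


def missing_daemons(role, jps_output):
    """Expected daemons that are not running, sorted for stable output."""
    return sorted(EXPECTED[role] - running_daemons(jps_output))
```

To feed it a data node's output from master, use subprocess.run(["ssh", "data1", "/software/jdk1.8.0_202/bin/jps"], capture_output=True, text=True).stdout.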

Checking the web UIs

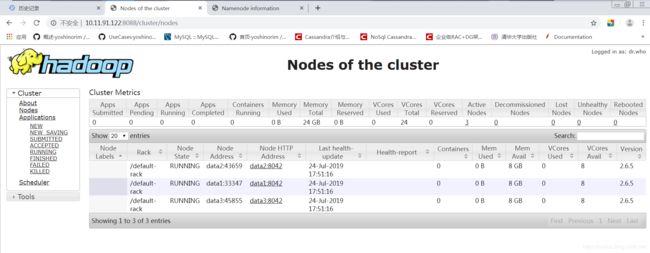

1. ResourceManager UI

It shows 3 nodes in total: data1, data2 and data3. The ResourceManager web UI listens on port 8088 by default.

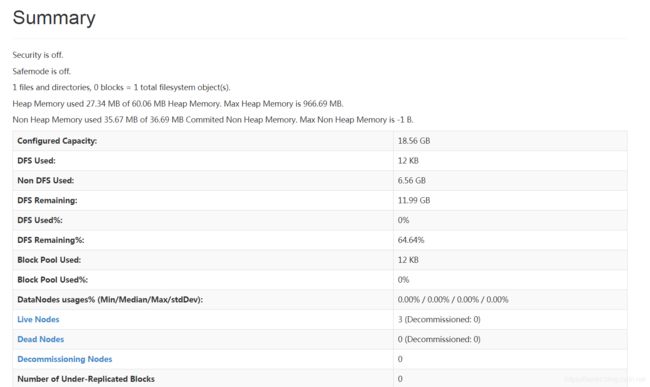

2. HDFS UI

Address: http://10.11.91.122:50070/

Live Nodes shows 3.

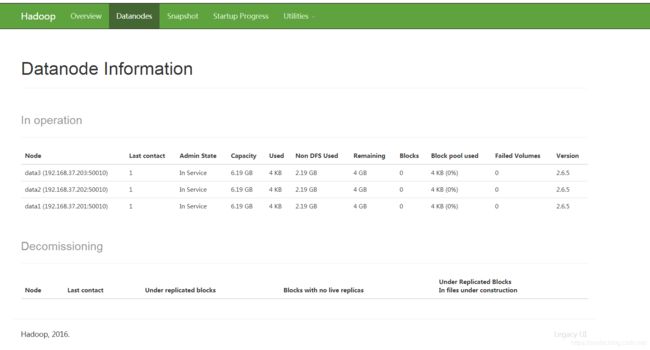

Then switch to the Datanodes page:

It shows the full details of all 3 nodes.
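The same two numbers can be read without a browser. The NameNode exposes its state through the /jmx servlet, and the ResourceManager through its REST API. Below is a Python sketch that assumes the default Hadoop 2.x web ports (50070 and 8088). It also assumes the script runs somewhere master resolves; elsewhere, substitute an IP as in the address above. The helper names are my own.

```python
import json
from urllib.request import urlopen

# Assumed endpoints on the default Hadoop 2.x web ports
NN_JMX = "http://master:50070/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState"
RM_METRICS = "http://master:8088/ws/v1/cluster/metrics"


def live_datanodes(jmx):
    """Number of live DataNodes from the NameNode's FSNamesystemState JMX bean."""
    return jmx["beans"][0]["NumLiveDataNodes"]


def active_nodemanagers(metrics):
    """Number of active NodeManagers from the ResourceManager cluster metrics."""
    return metrics["clusterMetrics"]["activeNodes"]


def fetch_json(url, timeout=5):
    """GET a URL and decode its JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

On a healthy cluster built from this plan, live_datanodes(fetch_json(NN_JMX)) and active_nodemanagers(fetch_json(RM_METRICS)) should both return 3.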

Problems I ran into while building the cluster

1. The DataNodes would not start

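At first a configuration mistake kept the NameNode from starting properly. After I reformatted the NameNode, none of the DataNodes would start anymore. The logs showed that the NameNode's clusterID did not match the DataNodes' clusterID.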

Manually changing them to the same value solved the problem.
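Each namenode -format generates a new clusterID. A DataNode keeps the old one in the current/VERSION file of its data directory, so after a reformat the two no longer match. On this layout the files would be /software/hadoop-2.6.5/hadoop_data/hdfs/namenode/current/VERSION on master and .../hdfs/datanode/current/VERSION on each data node. Below is a Python sketch that compares the two files' contents. read_version and cluster_ids_match are hypothetical helpers.

```python
def read_version(text):
    """Parse a VERSION file (Java properties: '#' comment lines, key=value lines)."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key] = value
    return props


def cluster_ids_match(namenode_text, datanode_text):
    """True when the DataNode belongs to the same cluster as the NameNode."""
    nn = read_version(namenode_text).get("clusterID")
    dn = read_version(datanode_text).get("clusterID")
    return nn is not None and nn == dn
```

Read the NameNode file on master and fetch each DataNode's file with ssh data1 cat followed by its path. On a mismatch, either copy the NameNode's clusterID into the DataNode file, as I did, or wipe and recreate the DataNode directory as in the "Creating the HDFS directories" step.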

2. The Datanodes page showed only one machine

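I had configured 3 machines, but no matter how I started the cluster, only one DataNode showed up on the page, and it was a different one each time.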

Strangely, the Overview page did show 3 machines.

The cause turned out to be that I had not set the hostnames, so all 3 machines kept the same default hostname ".".

After changing the hostnames of the 3 machines, the problem was solved.
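Because all nodes reported the same hostname, a quick check before starting the cluster would have caught this. Below is a Python sketch that takes the hostname each IP reports and compares it with the plan, flagging both duplicates and mismatches. hostname_problems and PLAN are hypothetical names.

```python
from collections import Counter

# Hostname each IP should report, from the cluster plan
PLAN = {"192.168.37.200": "master", "192.168.37.201": "data1",
        "192.168.37.202": "data2", "192.168.37.203": "data3"}


def hostname_problems(reported):
    """Compare {ip: hostname reported by that machine} against PLAN; return problem strings."""
    problems = []
    for name, n in Counter(reported.values()).items():
        if n > 1:
            problems.append(f"{n} machines share the hostname {name!r}")
    for ip, expected in PLAN.items():
        actual = reported.get(ip)
        if actual != expected:
            problems.append(f"{ip} reports {actual!r}, expected {expected!r}")
    return problems
```

The reported names can be collected from master with subprocess.run(["ssh", ip, "hostname"], capture_output=True, text=True).stdout.strip() for each IP in PLAN.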