Installing a Hadoop Cluster and Spark with Ambari

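Overview: set up a Hadoop environment with Ambari on two servers (one running Ubuntu 14.04, the other Red Hat 6) and enable Spark.

The plan is to use the Ubuntu machine as the server and the Red Hat 6 machine as the client.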

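1. Setting up mutual passwordless SSH login between the two servers

The Ubuntu machine's IP is 10.20.31.202, its hostname is server204, and its fully qualified domain name (FQDN) is server204.

The Red Hat 6 machine's IP is 10.20.31.201, its hostname is server201, and its FQDN is server201.

Note: the hostname command prints the hostname.

The hostname -f command prints the FQDN.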

1.1 Changing the hostname and FQDN on Ubuntu

To change the hostname:

Run sudo nano /etc/hostname and enter the hostname in that file.

To change the FQDN:

Run sudo nano /etc/hosts and add the line 127.0.1.1 server204

1.2 Changing the hostname and FQDN on Red Hat 6

To change the hostname:

Run sudo nano /etc/sysconfig/network and set the value of HOSTNAME to server201.

To change the FQDN:

Run sudo nano /etc/hosts and add the line 127.0.1.1 server201
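
For later commands such as ssh server201 to work, each server also has to resolve the other's name. If DNS does not handle that, one option is to map the peer in /etc/hosts as well. The following is a minimal sketch under that assumption, using the IPs and hostnames listed above. The helper name ensure_hosts_entry is made up for illustration, and the hosts file is passed as a parameter so the change can be tried on a copy first.

```shell
# Hypothetical helper: add "IP NAME" to a hosts file unless NAME is already mapped.
ensure_hosts_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # Look for NAME as a whole field (after the address) on any non-comment line.
  if awk -v n="$name" '
       !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$hosts_file"; then
    echo "$name already present in $hosts_file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Example (assumed usage on server204): edit a copy, review it, then install it.
#   cp /etc/hosts /tmp/hosts.new
#   ensure_hosts_entry /tmp/hosts.new 10.20.31.201 server201
#   sudo cp /tmp/hosts.new /etc/hosts
```

Running the helper twice leaves a single entry, so it is safe to repeat on both machines.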

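1.3 Generating SSH key pairs

Prerequisite: the SSH service is installed and working on both servers.

- Ubuntu server

Generate the key pair:

ssh-keygen -t rsa -P ""

When prompted, specify where to store the key pair and what to name it:

Enter: /home/ubuntu/.ssh/id_rsa

The key pair id_rsa and id_rsa.pub now appears in /home/ubuntu/.ssh; the former is the private key and the latter is the public key.

Next, append the public key to authorized_keys:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys (Note: ~ stands for the current user's home directory, which is /home/ubuntu on this server.)

ssh localhost or ssh server204

The machine can now log in to itself without a password.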

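- Red Hat 6 server

Repeat the steps above.

Note: on Red Hat 6 you also need to change the permissions of authorized_keys (this step matters; without it, SSH will still ask for a password).

Use the command chmod 600 ~/.ssh/authorized_keys

Then copy the id_rsa.pub public key from Ubuntu (server204) to the /home/ubuntu directory on Red Hat 6 (server201).

Run cat ~/id_rsa.pub >> ~/.ssh/authorized_keys to add this public key to the authorized keys file.

After this, server204 can log in to server201 without a password.

Likewise, for server201 to log in to server204 without a password, add server201's public key to authorized_keys on server204.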
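
The key steps in this section can be collected into two small shell helpers. This is only a sketch: the function names make_key and authorize_key are made up for illustration, and the directory defaults to ~/.ssh as in the steps above. Passing -f to ssh-keygen names the output file, so the location prompt does not appear.

```shell
# Hypothetical helper: create an RSA key pair with an empty passphrase in DIR.
make_key() {
  dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir" && chmod 700 "$dir"
  # Skip generation if a key already exists, so an existing key is never overwritten.
  [ -f "$dir/id_rsa" ] || ssh-keygen -q -t rsa -P "" -f "$dir/id_rsa"
}

# Hypothetical helper: append PUBKEY_FILE to DIR/authorized_keys once, with mode 600.
authorize_key() {
  pubkey_file="$1"; dir="${2:-$HOME/.ssh}"
  mkdir -p "$dir" && chmod 700 "$dir"
  touch "$dir/authorized_keys"
  # -F fixed strings, -x whole-line match, -f patterns from file:
  # append only when the key is not already listed.
  grep -qxFf "$pubkey_file" "$dir/authorized_keys" \
    || cat "$pubkey_file" >> "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}

# Example (assumed usage):
#   make_key && authorize_key ~/.ssh/id_rsa.pub   # on each server, for self-login
#   authorize_key ~/id_rsa.pub                    # after copying the peer's key to ~
```

Where ssh-copy-id is installed, running ssh-copy-id ubuntu@server201 on server204 does the copy-and-append step in one command.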

2. Installing Ambari

https://cwiki.apache.org/confluence/display/AMBARI/Install+Ambari+2.2.0+from+Public+Repositories

The link above is the official guide. It is thorough, so the steps are not repeated here.

I installed Ambari on the Ubuntu system.

3. Ambari web configuration

(1) Open http://10.20.31.202:8080/ in a browser. The username and password are both admin.

(2) Select the Hadoop version.

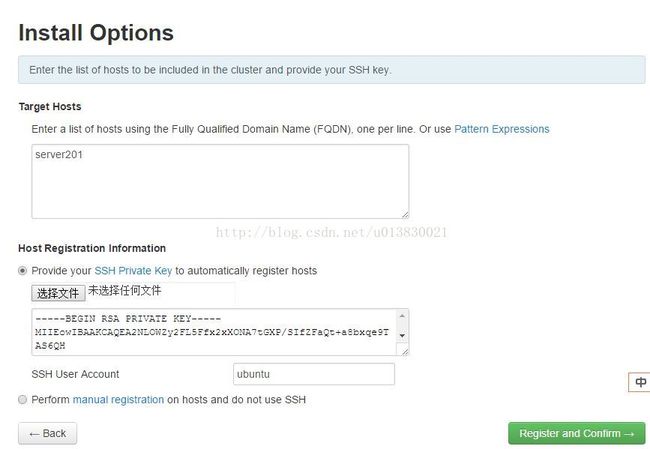

(3) On the cluster deployment page, I entered the following:

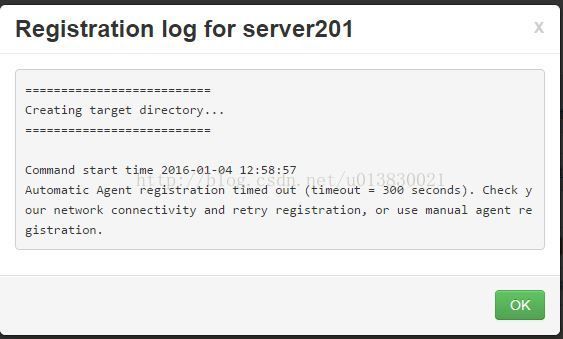

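This failed with an error.

Fix:

Cause: the SSH account I had been using was ubuntu, not root.

After changing the SSH account in step 3 of the web wizard to root, server201 also has to be prepared for root: log in as root, generate a key pair (the earlier pair was generated as ubuntu), and add server204's public key to root's own .ssh/authorized_keys. Remember to set the permissions of authorized_keys to 600.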
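
Before retrying the wizard, it may help to confirm that key-based login really works for the account entered there. The sketch below assumes it is run on server204 as the user whose key was installed; can_login is a made-up helper name. BatchMode=yes makes ssh fail instead of prompting for a password, so a zero exit status means the key was accepted.

```shell
# Hypothetical helper: succeed only if USER@HOST accepts key-based login.
can_login() {
  user="$1"; host="$2"
  # ConnectTimeout keeps the check from hanging on an unreachable host.
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$user@$host" true
}

# Example (assumed usage, run on server204):
#   can_login root server201 && echo "passwordless login OK"
```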

Even after this, the wizard still reported an error. The output was:

==========================

Creating target directory...

==========================

Command start time 2016-01-05 09:40:21

chmod: cannot access `/var/lib/ambari-agent/data': No such file or directory

Connection to server201 closed.

SSH command execution finished

host=server201, exitcode=0

Command end time 2016-01-05 09:40:22

==========================

Copying common functions script...

==========================

Command start time 2016-01-05 09:40:22

scp /usr/lib/python2.6/site-packages/ambari_commons

host=server201, exitcode=0

Command end time 2016-01-05 09:40:22

==========================

Copying OS type check script...

==========================

Command start time 2016-01-05 09:40:22

scp /usr/lib/python2.6/site-packages/ambari_server/os_check_type.py

host=server201, exitcode=0

Command end time 2016-01-05 09:40:22

==========================

Running OS type check...

==========================

Command start time 2016-01-05 09:40:22

Cluster primary/cluster OS family is ubuntu14 and local/current OS family is redhat6

Traceback (most recent call last):

File "/var/lib/ambari-agent/tmp/os_check_type1452004822.py", line 44, in <module>

main()

File "/var/lib/ambari-agent/tmp/os_check_type1452004822.py", line 40, in main

raise Exception("Local OS is not compatible with cluster primary OS family. Please perform manual bootstrap on this host.")

Exception: Local OS is not compatible with cluster primary OS family. Please perform manual bootstrap on this host.

Connection to server201 closed.

SSH command execution finished

host=server201, exitcode=1

Command end time 2016-01-05 09:40:22

ERROR: Bootstrap of host server201 fails because previous action finished with non-zero exit code (1)

ERROR MESSAGE: Connection to server201 closed.

STDOUT: Cluster primary/cluster OS family is ubuntu14 and local/current OS family is redhat6

Traceback (most recent call last):

File "/var/lib/ambari-agent/tmp/os_check_type1452004822.py", line 44, in <module>

main()

File "/var/lib/ambari-agent/tmp/os_check_type1452004822.py", line 40, in main

raise Exception("Local OS is not compatible with cluster primary OS family. Please perform manual bootstrap on this host.")

Exception: Local OS is not compatible with cluster primary OS family. Please perform manual bootstrap on this host.

Connection to server201 closed.

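The log shows the cause: the two servers run different operating systems (ubuntu14 versus redhat6), so the automatic installation cannot proceed and the agent has to be bootstrapped manually on that host.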
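
A mismatch like this can be spotted before starting the wizard by checking the OS family on each host. The sketch below is not Ambari's own check (that lives in os_check_type.py and is not reproduced here). It is a rough stand-in that relies on two common release files, and the helper name os_family and its optional root-directory argument are made up for illustration.

```shell
# Hypothetical helper: print a coarse OS family for the system rooted at ROOT.
os_family() {
  root="${1:-}"
  if [ -f "$root/etc/redhat-release" ]; then
    # Present on Red Hat style systems.
    echo redhat
  elif grep -qs '^DISTRIB_ID=Ubuntu' "$root/etc/lsb-release"; then
    # Ubuntu identifies itself in /etc/lsb-release.
    echo ubuntu
  else
    echo unknown
  fi
}

# Example (assumed usage): run os_family on each host and compare the results.
```

If the two hosts report different families, the automatic bootstrap can be expected to fail in the way shown above.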

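Working around the operating system mismatch:

Reference: http://pivotalhd.docs.pivotal.io/docs/install-ambari.html

- Install ambari-agent manually

The procedure is similar to installing ambari-server, except that the install command is sudo yum install ambari-agent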

然后修改/etc/ambari-agent/conf/ambari-agent.ini文件,指定服务器为server204,如下图:

载启动ambari-agent,执行命令sudo ambari-agent start

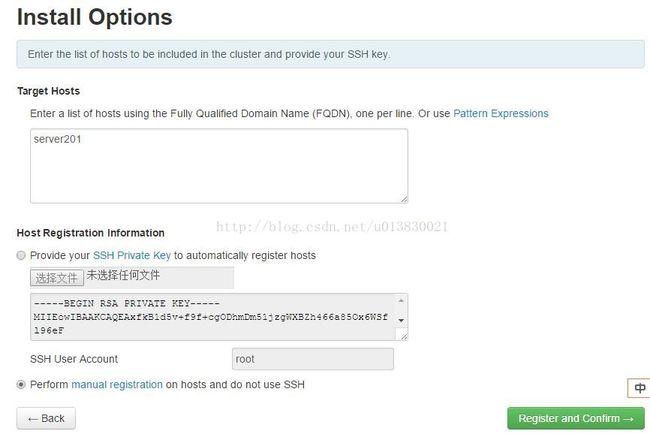
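
The edit to ambari-agent.ini can also be scripted. This is a sketch only: it assumes the file has a [server] section containing a hostname= line, and set_agent_server is a made-up helper name. The sed address range limits the substitution to the [server] section, so a hostname= key in any other section is left alone. It uses sed -i, which is available in GNU sed.

```shell
# Hypothetical helper: set hostname= inside the [server] section of an ini file.
set_agent_server() {
  ini="$1"; server="$2"
  # Range: from the "[server]" header up to the next line that starts a section.
  sed -i "/^\[server\]/,/^\[/ s/^hostname=.*/hostname=${server}/" "$ini"
}

# Example (assumed usage, run as root on server201):
#   set_agent_server /etc/ambari-agent/conf/ambari-agent.ini server204
#   ambari-agent start
```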

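- Web configuration

Choose manual registration.

After that there are a few remaining issues to resolve, such as turning off the firewall.

http://pivotalhd.docs.pivotal.io/docs/install-ambari.html

That page covers them in detail.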
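
For the firewall step, the usual commands differ between the two systems. The sketch below only prints them so they can be reviewed before being run by hand. The helper name firewall_off_cmds is made up, and the family labels redhat6 and ubuntu14 are borrowed from the bootstrap log above. Whether switching the firewall off entirely is acceptable depends on the environment.

```shell
# Hypothetical helper: print (not run) the commands that turn off the host firewall.
firewall_off_cmds() {
  case "$1" in
    redhat6)
      echo "sudo service iptables stop"     # stop the running firewall
      echo "sudo chkconfig iptables off"    # keep it off after a reboot
      ;;
    ubuntu14)
      echo "sudo ufw disable"               # stop ufw and disable it at boot
      ;;
    *)
      echo "unknown OS family: $1" >&2
      return 1
      ;;
  esac
}

# Example (assumed usage):
#   firewall_off_cmds redhat6
```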
