Otto商品分类(二)----Logistic回归预测&超参数调优

目录

***训练部分***

1.读取数据

2.准备数据

3.默认参数的Logistic Regression

4.Logistic Regression+GridSearchCV

超参数调优

***测试部分***

1.读取数据

2.准备数据

3.生成预测的结果

本demo以kaggle 2015年举办的Otto Group Product Classification Challenge竞赛数据为例,分别调用缺省参数LogisticRegression、LogisticRegression+GridSearchCV(可用LogisticRegressionCV代替)进行参数调优

Otto数据集是著名电商Otto提供的一个多类商品分类问题,类别数=9,每个样本有93维数值型特征(整数,表示某种事件发生的次数,已经进行过脱敏处理)

***训练部分***

1.读取数据

#读取数据

#可以自己在log(x=1)特征和tf_idf特征上进行尝试,冰比较不同特征的结果

dpath='./data/'

train=pd.read_csv(path+'Otto_FE_train_org.csv')

print(train.head())2.准备数据

y_train=train['target']

X_train=train.drop(['id','target'],axis=1)

#保存特征名字以备后用(可视化)

feat_names=X_train.columns

#sklearn的学习器大多支持稀疏矩阵数据输入,模型训练会快很多

#查看一个学习器是否支持稀疏数据,可以看fit函数是否支持:X:{array-like,

# sparse matrix}

#可自行用timeit比较稠密数据和稀疏数据的训练时间

from scipy.sparse import csr_matrix

X_train=csr_matrix(X_train)

3.默认参数的Logistic Regression

from sklearn.linear_model import LogisticRegression

lr=LogisticRegression()

#交叉验证用于评估模型性能和进行参数调优(模型选择)

#分类任务中交叉验证缺省是采用StratifiedKFold

#数据集比较大,采用3折交叉验证

from sklearn.model_selection import cross_val_score

loss=cross_val_score(lr,X_train,y_train,cv=3,scoring='neg_log_loss')

#%timeit loss_sparse=cross_val_score(lr,X_train_sparse,y_train,cv=3,

# scoring='neg_log_loss')

print('cv accuracy score is:',-loss)

print('cv logloss is:',-loss.mean())

cv accuracy score is: [0.79764036 0.79738583 0.79737361]

cv logloss is: 0.7974666008423363

4.Logistic Regression+GridSearchCV

logistic回归需要调整超参数有:C(正则系数,一般在log域(取log后的值)均匀设置候选参数)和正则参数penalty(L2/L1)

目标函数为:J=C*sum(logloss(f(xi),yi) +penalty

在sklearn框架下,不同学习器的参数调整步骤相同:

- 设置参数搜索范围

- 生成学习器示例(参数设置)

- 生成GridSearchCV的实例(参数设置)

- 调用GridSearchCV的fit方法

超参数调优

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import LogisticRegression

#需要调优的参数

#请尝试将L1正则和L2正则分开,并配合合适的优化求解算法(solver)

#tuned_parameters={'penalth':['l1','l2'],'C':[0.001,0.01,0.1,1,10,100,

# 1000]}

#参数的搜索范围

penaltys=['l1','l2']

Cs=[0.1,1,10,100,1000]

#调优的参数集合,搜索网格为2x5,在网格上的交叉点进行搜索

tuned_parameters=dict(penalty=penaltys,C=Cs)

lr_penalty=LogisticRegression(solver='liblinear')

grid=GridSearchCV(lr_penalty,tuned_parameters,cv=3,scoring='neg_log_loss'

n_jobs=4)

grid.fit(X_train,y_train)对于tuned_parameter也类似可以这样写:

parameters = {'kernel':['linear'], 'C':[0.001,0.01,0.1,1,10,100], 'gamma':[0.0001,0.001,0.01,0.1,1,10,100]}

grid=GridSearchCV(SVC(),param_grid=parameters,cv=5)

grid.fit(X_trian_part,y_trian_part)

y_predict=grid.predict(X_val)

accuracy=accuracy_score(y_val,y_predict)

print("accuracy={}".format(accuracy))

print("params={} scores={}".format(grid.best_params_,grid.best_score_))

得到调优的参数:

best_score这里输出的是负log似然损失

#examine the best model

print(-grid.best_score_)

print(grid.best_params_)输出结果:

best_score: 0.6728475285576403

best_params: {'C': 100, 'penalty': 'l1'}

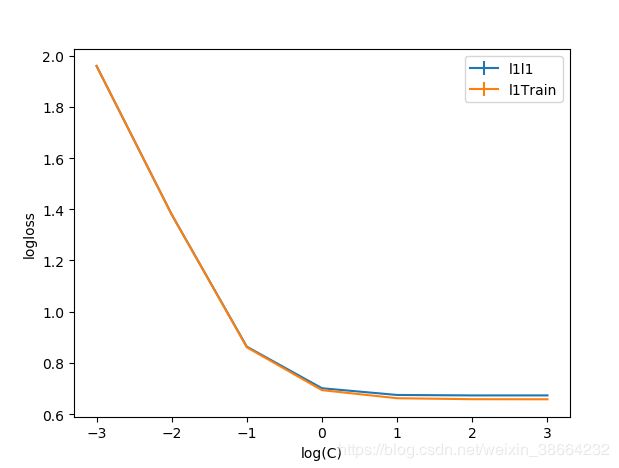

图表的形式显示训练和测试的过程:

#plot CV误差曲线

test_means=grid.cv_results_['mean_test_score']

test_stds=grid.cv_results_['std_test_score']

train_means=grid.cv_results_['mean_train_score']

#plot results

n_Cs=len(Cs)

number_penaltys=len(penaltys)

test_scores=np.array(test_means).reshape(n_Cs,number_penaltys)

train_scores=np.array(train_means).reshape(n_Cs,number_penaltys)

test_stds=np.array(test_stds).reshape(n_Cs,number_penaltys)

train_stds=np.array(train_stds).reshape(n_Cs,number_penaltys)

X_axis=np.log10(Cs)

for i,value in enumerate(penaltys):

#pyplot.plot(log(Cs),test_scores[i],label='penalty:'+str(value)

plt.errorbar(X_axis,-test_score[:,i],yerr=test_stds[:,i],

label=penaltys[i]+'Test')

plt.errorbar(X_axis,-train_scores[:,i],yerr=train_stds[:,i],

label=penalty[i]+'Train')

plt.legend()

plt.xlabel('log(C)')

plt.ylabel('logloss')

plt.savefig('LogisticGridSearchCV_c.png')

plt.show()

从图中可以看出L1正则和L2正则下、不同正则参数C对应的模型在训练集上测试集上的logloss(似然损失)

可以看出在训练集上C越大(正则越少)的模型性能越好;

但在测试集上当C=100时性能最好(L1正则)

5.保存模型,用于后续测试

cPickle.dump(grid.best_estimator_,open('Otto_L1_org.pkl','wb'))***测试部分***

1.读取数据

#读取数据

#自行在log(x+1)特征和tf-idf特征上尝试,并比较不同特征的结果

#我们可以采用stacking的方式组合这几种不同特征编码的得到的模型

dpath='./data/'

test1=pd.read_csv(dpath+'Otto_FE_test_org.csv')

test2=pd.read_csv(dpath+'Otto_FE_test_tfidf.csv')

#去掉多余的id

test2=test2.drop(['id'],axis=1)

test=pd.concat([test1,test2],axis=1,ignore_index=False)

print(test.head())

2.准备数据

test_id=test['id']

X_test=test.drop(['id'],axis=1)

#保存特征名字以备后用(可视化)

feat_names=X_test.columns

#sklearn的学习器大多数支持稀疏数据输入,模型训练会很快

from scipy.sparse import csr_matrix

X_test=csr_matrix(X_test)

#load训练好的模型

import cPickle

lr_best=cPickle.load(open('Otto_Lr_org_tfidf.pkl','rb'))

#输出每类的概率

y_test_pred=lr_best.predict_proba(X_test)

print(y_test_pred.shape)3.生成预测的结果

#生成预测的结果

out_df=pd.DataFrame(y_test_pred)

columns=np.empty(9,dtype=object)

for i in range(9):

columns[i] ='Class_'+str(i+1)

out_df.columns=columns

out_df=pd.concat([test_id,out_df],axis=1)

out_df.to_csv('LR_org_tfidf.csv',index=False)原始特征编码:在Kaggle的Private Leaderboard分数为*****

************************