Installing and Configuring Sqoop on CentOS (Consolidated Notes)

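I needed Sqoop to import existing MySQL data into HBase. The installation and configuration steps, along with the problems I ran into, are recorded below:

1. The project uses Hadoop 1.1.2, so the matching Sqoop build is sqoop-1.4.4.bin__hadoop-1.0.0, and the MySQL JDBC driver is mysql-connector-java-5.1.6-bin.jar.

Extract the Sqoop archive: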

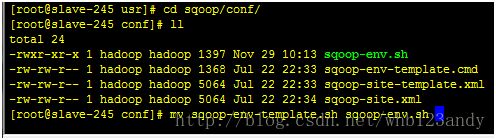
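
For readers without the screenshot, the extraction can be sketched roughly as follows. This is only an illustration: `install_sqoop` is a hypothetical helper, the `.tar.gz` suffix on the archive name is an assumption, and /usr/sqoop is the install path used later in this post.

```shell
# Sketch: unpack a Sqoop tarball directly into the install directory.
# install_sqoop is a hypothetical helper, not something Sqoop ships.
install_sqoop() {
    local archive="$1"   # e.g. sqoop-1.4.4.bin__hadoop-1.0.0.tar.gz (assumed name)
    local target="$2"    # e.g. /usr/sqoop

    [ -f "$archive" ] || { echo "archive not found: $archive" >&2; return 1; }
    mkdir -p "$target" || return 1

    # --strip-components=1 drops the versioned top-level directory,
    # so conf/, lib/ and bin/ end up directly under $target.
    tar -xzf "$archive" -C "$target" --strip-components=1
}
```

Calling `install_sqoop sqoop-1.4.4.bin__hadoop-1.0.0.tar.gz /usr/sqoop` would need write access to /usr, so it would normally be run as root.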

2. Rename the configuration file

In ${SQOOP_HOME}/conf, run:

mv sqoop-env-template.sh sqoop-env.sh

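The conf directory contains two files, sqoop-site.xml and sqoop-site-template.xml, with identical contents. This can be ignored; only sqoop-site.xml matters.

3. Edit the configuration file sqoop-env.sh

The contents are as follows: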

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/usr/hadoop/

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/usr/hadoop

#Set the path to where bin/hbase is available

export HBASE_HOME=/usr/hbase

#Set the path to where bin/hive is available

export HIVE_HOME=/usr/hive

#Set the path for where zookeeper config dir is

export ZOOCFGDIR=/usr/zookeeper

That is all sqoop-env.sh needs; once the remaining steps are done, Sqoop is ready to run.
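
Before moving on, it can help to confirm that every directory named in sqoop-env.sh actually exists, since a wrong path may only show up later as a warning or a class-loading error. A minimal sketch, assuming the file uses plain `export NAME=/path` lines like the ones above; `check_env_dirs` is a hypothetical helper, not part of Sqoop.

```shell
# Sketch: report which directories exported in an env file are missing.
# check_env_dirs is a hypothetical helper, not something Sqoop ships.
check_env_dirs() {
    local env_file="$1" status=0 name dir
    # Read every line of the form: export NAME=/some/path
    while IFS='=' read -r name dir; do
        [ -n "$name" ] || continue      # nothing matched
        name="${name#export }"          # drop the "export " prefix
        if [ -d "$dir" ]; then
            echo "ok       $name=$dir"
        else
            echo "missing  $name=$dir"
            status=1
        fi
    done <<EOF
$(grep -E '^export [A-Za-z_]+=' "$env_file")
EOF
    return "$status"
}
```

For example, `check_env_dirs /usr/sqoop/conf/sqoop-env.sh` prints one line per variable and returns non-zero if any directory is missing.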

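4. Configure environment variables:

Add the following to /etc/profile:

export SQOOP_HOME=/usr/sqoop

export PATH=$SQOOP_HOME/bin:$PATH

After saving the file, log out and back in (or reboot) so the change takes effect. Running source /etc/profile in the current shell also works.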
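
In shell syntax, `export` takes the variable name without a leading `$`, and no spaces are allowed around `=`. The sketch below appends the two lines only if they are not already present, so it is safe to run twice. `add_sqoop_env` is a hypothetical helper; /usr/sqoop is the install path used in this post.

```shell
# Sketch: append the Sqoop variables to a profile file, at most once.
# add_sqoop_env is a hypothetical helper name.
add_sqoop_env() {
    local profile="$1" sqoop_home="$2"
    # Skip if a previous run already added SQOOP_HOME.
    if grep -q '^export SQOOP_HOME=' "$profile" 2>/dev/null; then
        return 0
    fi
    {
        echo "export SQOOP_HOME=$sqoop_home"
        # Single quotes keep $SQOOP_HOME and $PATH unexpanded in the file.
        echo 'export PATH=$SQOOP_HOME/bin:$PATH'
    } >> "$profile"
}
```

As root, `add_sqoop_env /etc/profile /usr/sqoop` followed by `. /etc/profile` would apply the change to the current shell without logging out.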

5. Extract the MySQL connector archive and copy mysql-connector-java-5.1.6-bin.jar into $SQOOP_HOME/lib. Configuration is now complete.

6. Import data from MySQL into HDFS

(1) Create the test database sqooptest in MySQL

[hadoop@node01 ~]$ mysql -u root -p

mysql> create database sqooptest;

Query OK, 1 row affected (0.01 sec)

(2) Create a dedicated user for Sqoop

mysql> create user 'sqoop' identified by 'sqoop';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all privileges on *.* to 'sqoop' with grant option;

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

(3) Generate test data

mysql> use sqooptest;

Database changed

mysql> create table tb1 as select table_schema,table_name,table_type from information_schema.TABLES;

Query OK, 154 rows affected (0.28 sec)

Records: 154 Duplicates: 0 Warnings: 0

(4) Test the connection between Sqoop and MySQL

[hadoop@node01 ~]$ sqoop list-databases --connect jdbc:mysql://node01:3306/ --username sqoop --password sqoop

13/05/09 06:15:01 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

13/05/09 06:15:01 INFO manager.MySQLManager: Executing SQL statement: SHOW DATABASES

information_schema

hive

mysql

performance_schema

sqooptest

test
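
The warning in the output above recommends `-P`, which makes Sqoop prompt for the password instead of reading it from the command line. A minimal variant of the same connectivity check is sketched below; `list_mysql_databases` is a hypothetical wrapper name, and port 3306 is taken from the command above.

```shell
# Sketch: the connectivity check with a password prompt (-P) instead of
# --password. list_mysql_databases is a hypothetical wrapper name.
list_mysql_databases() {
    local host="$1" user="$2"
    sqoop list-databases \
        --connect "jdbc:mysql://${host}:3306/" \
        --username "$user" \
        -P
}
```

With the host and user from this post, the call would be `list_mysql_databases node01 sqoop`.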

(5) Import data from MySQL into HDFS

[hadoop@node01 ~]$ sqoop import --connect jdbc:mysql://node01:3306/sqooptest --username sqoop --password sqoop --table tb1 -m 1

13/05/09 06:16:39 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

13/05/09 06:16:39 INFO tool.CodeGenTool: Beginning code generation

13/05/09 06:16:39 INFO manager.MySQLManager: Executing SQL statement: SELECT t.* FROM `tb1` AS t LIMIT 1

13/05/09 06:16:39 INFO manager.MySQLManager: Executing SQL statement: SELECT t.* FROM `tb1` AS t LIMIT 1

13/05/09 06:16:39 INFO orm.CompilationManager: HADOOP_HOME is /home/hadoop/hadoop-0.20.2/bin/..

13/05/09 06:16:39 INFO orm.CompilationManager: Found hadoop core jar at: /home/hadoop/hadoop-0.20.2/bin/../hadoop-0.20.2-core.jar

13/05/09 06:16:42 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/4175ce59fd53eb3de75875cfd3bd450b/tb1.jar

13/05/09 06:16:42 WARN manager.MySQLManager: It looks like you are importing from mysql.

13/05/09 06:16:42 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

13/05/09 06:16:42 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

13/05/09 06:16:42 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

13/05/09 06:16:42 INFO mapreduce.ImportJobBase: Beginning import of tb1

13/05/09 06:16:43 INFO manager.MySQLManager: Executing SQL statement: SELECT t.* FROM `tb1` AS t LIMIT 1

13/05/09 06:16:45 INFO mapred.JobClient: Running job: job_201305090600_0001

13/05/09 06:16:46 INFO mapred.JobClient: map 0% reduce 0%

13/05/09 06:17:01 INFO mapred.JobClient: map 100% reduce 0%

13/05/09 06:17:03 INFO mapred.JobClient: Job complete: job_201305090600_0001

13/05/09 06:17:03 INFO mapred.JobClient: Counters: 5

13/05/09 06:17:03 INFO mapred.JobClient: Job Counters

13/05/09 06:17:03 INFO mapred.JobClient: Launched map tasks=1

13/05/09 06:17:03 INFO mapred.JobClient: FileSystemCounters

13/05/09 06:17:03 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=7072

13/05/09 06:17:03 INFO mapred.JobClient: Map-Reduce Framework

13/05/09 06:17:03 INFO mapred.JobClient: Map input records=154

13/05/09 06:17:03 INFO mapred.JobClient: Spilled Records=0

13/05/09 06:17:03 INFO mapred.JobClient: Map output records=154

13/05/09 06:17:03 INFO mapreduce.ImportJobBase: Transferred 6.9062 KB in 19.9871 seconds (353.8277 bytes/sec)

13/05/09 06:17:03 INFO mapreduce.ImportJobBase: Retrieved 154 records.

(6) View the imported data on HDFS

[hadoop@node01 ~]$ hadoop dfs -ls tb1

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2013-05-09 06:16 /user/hadoop/tb1/_logs

-rw-r--r-- 2 hadoop supergroup 7072 2013-05-09 06:16 /user/hadoop/tb1/part-m-00000
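
The walkthrough above stops at HDFS, while the original goal was HBase. A hedged sketch of what the HBase variant of the same import might look like follows; it was not run as part of these notes. The HBase table name tb1, the column family cf, and the choice of table_name as row key are illustrative assumptions, and `import_tb1_to_hbase` is a hypothetical wrapper. Because tb1 was created without a primary key, a row key column has to be named explicitly, and since table_name is not unique across schemas, rows sharing a key would overwrite one another in HBase.

```shell
# Sketch: import tb1 into HBase instead of HDFS (untested in this post).
# Assumed names: HBase table "tb1", column family "cf", row key "table_name".
import_tb1_to_hbase() {
    local host="$1" user="$2"
    sqoop import \
        --connect "jdbc:mysql://${host}:3306/sqooptest" \
        --username "$user" -P \
        --table tb1 \
        --hbase-table tb1 \
        --column-family cf \
        --hbase-row-key table_name \
        --hbase-create-table \
        -m 1
}
```

This relies on HBASE_HOME in sqoop-env.sh pointing at a working HBase installation. For real data, a column with unique values would be a safer row key.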

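However, I ran into the following problems:

1. Running sqoop on the command line reports:

Error: Could not find or load main class org.apache.sqoop.Sqoop

Copying sqoop-1.4.3.jar from the root of the extracted Sqoop directory into hadoop-1.0.3/lib fixes this.

2. Running sqoop list-tables --connect jdbc:mysql://172.30.1.245:3306/database --username 'root' -P fails with a MySQL driver error: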

13/07/02 10:09:53 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

13/07/02 10:09:53 ERROR sqoop.Sqoop: Got exception running Sqoop: java.lang.RuntimeException: Could not load db driver class: com.mysql.jdbc.Driver

java.lang.RuntimeException: Could not load db driver class: com.mysql.jdbc.Driver

at org.apache.sqoop.manager.SqlManager.makeConnection(SqlManager.java:716)

at org.apache.sqoop.manager.GenericJdbcManager.getConnection(GenericJdbcManager.java:52)

at org.apache.sqoop.manager.CatalogQueryManager.listTables(CatalogQueryManager.java:101)

at org.apache.sqoop.tool.ListTablesTool.run(ListTablesTool.java:49)

at org.apache.sqoop.Sqoop.run(Sqoop.java:145)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:181)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:220)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:229)

at org.apache.sqoop.Sqoop.main(Sqoop.java:238)

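Every solution I found online said the cause was that the MySQL jar had not been placed in $SQOOP_HOME/lib, but I had definitely put it there. I then saw a comment saying that Hadoop itself could not find the MySQL driver, so I copied the MySQL jar into /usr/hadoop/lib, and the command ran successfully.

I did not find either of these fixes described online, and I am not sure whether something in my own Hadoop setup is misconfigured so that the usual configuration does not work. After adding both the Sqoop jar and the MySQL jar to /usr/hadoop/lib, I deleted the MySQL jar I had earlier placed in /usr/sqoop/lib, and everything still ran normally.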
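
One possible explanation, offered as an assumption and not verified on this cluster: Sqoop's launcher hands its own jars to Hadoop through the HADOOP_CLASSPATH environment variable. A hadoop-env.sh that assigns HADOOP_CLASSPATH without appending the existing value would discard them, which could produce both errors above. The sketch below flags such lines. `find_classpath_overrides` is a hypothetical helper, and /usr/hadoop/conf/hadoop-env.sh is an assumed location based on the install path used in this post.

```shell
# Sketch: list HADOOP_CLASSPATH assignments that drop the inherited value.
# find_classpath_overrides is a hypothetical helper name.
# Returns 0 and prints "line:text" if a suspicious assignment is found,
# non-zero if there is none.
find_classpath_overrides() {
    local env_file="$1"
    # First grep: active (uncommented) assignments only.
    # Second grep: keep those that do not reference $HADOOP_CLASSPATH
    # or ${HADOOP_CLASSPATH} on the right-hand side.
    grep -nE '^[[:space:]]*(export[[:space:]]+)?HADOOP_CLASSPATH=' "$env_file" \
        | grep -vE '\$\{?HADOOP_CLASSPATH'
}
```

If `find_classpath_overrides /usr/hadoop/conf/hadoop-env.sh` prints a line, changing it to the form `export HADOOP_CLASSPATH=/some/path:$HADOOP_CLASSPATH` might make copying jars into /usr/hadoop/lib unnecessary.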