Flink Troubleshooting Notes

1. Cluster suddenly goes down

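- Find the log on the Master node and open it with vi. Press Shift + g to jump to the last line of the file, search for the job name with /name, and use Shift + n to step backwards through the matches until you find the corresponding job ID.

- In HDFS, run hadoop fs -ls /flink-checkpoints | grep JOB_ID (the job ID found above) to find the checkpoint directory for that ID. List that directory and note the checkpoint path, for example /flink-checkpoints/a1cb4cadb79c74ac8d3c7a11b6029ec2/chk-4863.

- Restart the job from that checkpoint. If the restore fails, tick Allow Non Restored State.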
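When a job directory holds several chk-N folders, picking the newest one by hand is error-prone. The sketch below is one way to do it in plain Java, assuming the input is just the path column copied from the hadoop fs -ls output. The class name LatestCheckpoint and the sample listing are hypothetical, and the code does not talk to HDFS itself.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class LatestCheckpoint {
    // Matches a path that ends in chk-<number>, e.g. .../chk-4863
    private static final Pattern CHK = Pattern.compile(".*/chk-(\\d+)/?$");

    /** Returns the path with the highest chk-<n> suffix, if any path matches. */
    static Optional<String> latest(List<String> paths) {
        String best = null;
        long bestId = -1;
        for (String raw : paths) {
            String path = raw.trim();
            Matcher m = CHK.matcher(path);
            if (m.matches()) {
                long id = Long.parseLong(m.group(1));
                if (id > bestId) {
                    bestId = id;
                    best = path;
                }
            }
        }
        return Optional.ofNullable(best);
    }

    public static void main(String[] args) {
        // Hypothetical listing of one job's checkpoint directory
        List<String> listing = Arrays.asList(
            "/flink-checkpoints/a1cb4cadb79c74ac8d3c7a11b6029ec2/chk-4862",
            "/flink-checkpoints/a1cb4cadb79c74ac8d3c7a11b6029ec2/chk-4863",
            "/flink-checkpoints/a1cb4cadb79c74ac8d3c7a11b6029ec2/shared",
            "/flink-checkpoints/a1cb4cadb79c74ac8d3c7a11b6029ec2/taskowned");
        System.out.println(latest(listing).orElse("no checkpoint found"));
    }
}
```

The ids are compared as numbers rather than strings, so chk-10 correctly sorts after chk-9.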

2. org.apache.flink.table.api.StreamQueryConfig; local class incompatible: stream classdesc serialVersionUID = XX, local class serialVersionUID = -XXX

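Check whether jar versions conflict. In my case there were two copies of the same Flink Table jar with different versions, which caused the serialization exception.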
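A quick way to spot this kind of conflict is to group jar file names by artifact and flag any artifact that shows up with more than one version. The sketch below assumes file names follow the usual artifact-version.jar pattern; the class name JarConflictCheck and the sample file names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class JarConflictCheck {
    // Splits "<artifact>-<version>.jar", where the version starts with a digit.
    private static final Pattern JAR = Pattern.compile("(.+?)-(\\d[\\w.\\-]*)\\.jar");

    /** Maps each artifact that appears with more than one version to its versions. */
    static Map<String, Set<String>> conflicts(List<String> jarNames) {
        Map<String, Set<String>> byArtifact = new TreeMap<>();
        for (String name : jarNames) {
            Matcher m = JAR.matcher(name);
            if (m.matches()) {
                byArtifact.computeIfAbsent(m.group(1), k -> new TreeSet<>()).add(m.group(2));
            }
        }
        // Keep only artifacts that occur with at least two distinct versions.
        byArtifact.values().removeIf(versions -> versions.size() < 2);
        return byArtifact;
    }

    public static void main(String[] args) {
        // Hypothetical contents of a lib directory plus the jars bundled with the job
        List<String> jars = Arrays.asList(
            "flink-table_2.11-1.7.2.jar",
            "flink-table_2.11-1.8.0.jar",
            "flink-dist_2.11-1.8.0.jar",
            "log4j-1.2.17.jar");
        System.out.println(conflicts(jars));
    }
}
```

This only catches conflicts visible in file names. A fat jar that shades an older Flink Table version would need a dependency tree check instead.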

3. Flink Address already in use

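The cluster was started in Flink standalone mode. It had already been started on master2, so starting it again on master1 raised this error. One option is to kill the master process on master2.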

4. Job suddenly throws Caused by: java.lang.NullPointerException at runtime

    org.apache.flink.types.NullFieldException: Field 3 is null, but expected to hold a value.
        at org.apache.flink.api.java.typeutils.runtime.TupleSerializer.serialize(TupleSerializer.java:127)
        at org.apache.flink.api.java.typeutils.runtime.TupleSerializer.serialize(TupleSerializer.java:30)
        at org.apache.flink.contrib.streaming.state.RocksDBKeySerializationUtils.writeKey(RocksDBKeySerializationUtils.java:108)
        at org.apache.flink.contrib.streaming.state.AbstractRocksDBState.writeKeyWithGroupAndNamespace(AbstractRocksDBState.java:217)
        at org.apache.flink.contrib.streaming.state.AbstractRocksDBState.writeKeyWithGroupAndNamespace(AbstractRocksDBState.java:192)
        at org.apache.flink.contrib.streaming.state.AbstractRocksDBState.writeCurrentKeyWithGroupAndNamespace(AbstractRocksDBState.java:179)
        at org.apache.flink.contrib.streaming.state.AbstractRocksDBState.getKeyBytes(AbstractRocksDBState.java:161)
        at org.apache.flink.contrib.streaming.state.RocksDBReducingState.add(RocksDBReducingState.java:96)
        at org.apache.flink.runtime.state.ttl.TtlReducingState.add(TtlReducingState.java:52)
        at com.yjp.stream.stat.business.crm.function.ReturnOrderFlatMapFunction.flatMap(ReturnOrderFlatMapFunction.java:101)
        at com.yjp.stream.stat.business.crm.function.ReturnOrderFlatMapFunction.flatMap(ReturnOrderFlatMapFunction.java:24)
        at org.apache.flink.streaming.api.operators.StreamFlatMap.processElement(StreamFlatMap.java:50)
        at org.apache.flink.streaming.runtime.io.StreamInputProcessor.processInput(StreamInputProcessor.java:202)
        at org.apache.flink.streaming.runtime.tasks.OneInputStreamTask.run(OneInputStreamTask.java:105)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:300)
        at org.apache.flink.runtime.taskmanager.Task.run(Task.java:711)
        at java.lang.Thread.run(Thread.java:748)
    Caused by: java.lang.NullPointerException

Solution:

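This exception is thrown when keyBy groups by several key fields and one of those fields is null. When processing the data, null-check every field used for grouping and assign a default value where needed. Field 3 is null means the fourth grouping key is null (indices start at 0).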
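The null check with a default value can be sketched in plain Java as below. It is written without Flink types so that it runs on its own; in a real job the same logic would sit in a map step before keyBy, so that no tuple field reaches the serializer as null. The class name NullSafeKeys, the placeholder UNKNOWN, and the sample field values are all hypothetical.

```java
import java.util.Arrays;

class NullSafeKeys {
    /** Returns a copy of the key fields with every null replaced by defaultValue. */
    static String[] withDefaults(String[] keyFields, String defaultValue) {
        String[] out = new String[keyFields.length];
        for (int i = 0; i < keyFields.length; i++) {
            out[i] = keyFields[i] != null ? keyFields[i] : defaultValue;
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical record with four grouping fields; index 3 is null,
        // which corresponds to "Field 3 is null" in the stack trace.
        String[] raw = {"city-01", "dealer-42", "2019-10-21", null};
        String[] safe = withDefaults(raw, "UNKNOWN");
        System.out.println(Arrays.toString(safe));
    }
}
```

Pick a default that cannot collide with a real key value, otherwise records with missing keys are silently merged into a legitimate group.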

4. Starting from a savepoint fails after changing the number of keyBy fields (from three keys to four)

    java.lang.Exception: Exception while creating StreamOperatorStateContext.
        at org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.streamOperatorStateContext(StreamTaskStateInitializerImpl.java:192)
        at org.apache.flink.streaming.api.operators.AbstractStreamOperator.initializeState(AbstractStreamOperator.java:227)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.initializeState(StreamTask.java:738)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:289)
        at org.apache.flink.runtime.taskmanager.Task.run(Task.java:711)
        at java.lang.Thread.run(Thread.java:748)
    Caused by: org.apache.flink.util.FlinkException: Could not restore keyed state backend for StreamFlatMap_16b166ecf5d9fd813aab48502efdb6f5_(1/1) from any of the 1 provided restore options.
        at org.apache.flink.streaming.api.operators.BackendRestorerProcedure.createAndRestore(BackendRestorerProcedure.java:137)
        at org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.keyedStatedBackend(StreamTaskStateInitializerImpl.java:279)
        at org.apache.flink.streaming.api.operators.StreamTaskStateInitializerImpl.streamOperatorStateContext(StreamTaskStateInitializerImpl.java:133)
        ... 5 more
    Caused by: org.apache.flink.util.StateMigrationException: The new key serializer is not compatible to read previous keys. Aborting now since state migration is currently not available
        at org.apache.flink.contrib.streaming.state.RocksDBKeyedStateBackend$RocksDBFullRestoreOperation.restoreKVStateMetaData(RocksDBKeyedStateBackend.java:689)
        at org.apache.flink.contrib.streaming.state.RocksDBKeyedStateBackend$RocksDBFullRestoreOperation.restoreKeyGroupsInStateHandle(RocksDBKeyedStateBackend.java:652)
        at org.apache.flink.contrib.streaming.state.RocksDBKeyedStateBackend$RocksDBFullRestoreOperation.doRestore(RocksDBKeyedStateBackend.java:638)
        at org.apache.flink.contrib.streaming.state.RocksDBKeyedStateBackend.restore(RocksDBKeyedStateBackend.java:525)
        at org.apache.flink.contrib.streaming.state.RocksDBKeyedStateBackend.restore(RocksDBKeyedStateBackend.java:166)
        at org.apache.flink.streaming.api.operators.BackendRestorerProcedure.attemptCreateAndRestore(BackendRestorerProcedure.java:151)
        at org.apache.flink.streaming.api.operators.BackendRestorerProcedure.createAndRestore(BackendRestorerProcedure.java:123)
        ... 7 more

Solution:

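Change the uid of the operator that holds the keyed state for this key. Restoring from a savepoint depends strictly on the uid, so once the uid changes the old state no longer maps to the operator and can be discarded (the restore then has to be started with Allow Non Restored State so the unmatched state is skipped).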

5. Exception when submitting a job from the command line:

./flink run -yid application_X_X -c MAIN_CLASS 'JAR_PATH' PROGRAM_ARGS

org.apache.flink.yarn.cli.FlinkYarnSessionCli - No path for the flink jar passed. Using the location of class org.apache.flink.yarn.YarnClusterDescriptor to locate the jar

This message does not reveal the actual problem, so look at the JobManager log directly:

java.nio.file.NoSuchFileException: /tmp/flink-web-0188545c-dc3d-46bb-923b-6b3f2f7fc61b/flink-web-upload/599d2006-a636-4339-8229-7d256270dd2f

Solution: create the missing directory and grant permissions on it.

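6. Batch job that joins a dimension table in SQL fails with Too many duplicate keys

Solution: apply the dimension table's filter directly as a WHERE condition instead of using a join.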

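7. A jar was submitted with flink run and the job is still running, but the jar was accidentally deleted with rm. Where the cached copy of the jar is kept:

1. With HA configured, it is in the directory set by high-availability.storageDir.

2. Without HA, it is under io.tmp.dirs, in a folder whose name starts with blobStore. The folders inside carry the job ID, so a quick look is enough to find it.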
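For the non-HA case, the lookup can be scripted. The sketch below assumes only what is described above: a folder starting with blobStore directly under one of the io.tmp.dirs directories, with the job ID appearing somewhere in the paths below it. The class name BlobStoreFinder is hypothetical, and the exact layout inside the blob store may differ between Flink versions.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class BlobStoreFinder {
    /** Lists paths under tmpDir/blobStore* whose path string contains jobId. */
    static List<Path> find(Path tmpDir, String jobId) throws IOException {
        List<Path> roots;
        try (Stream<Path> children = Files.list(tmpDir)) {
            roots = children
                .filter(Files::isDirectory)
                .filter(p -> p.getFileName().toString().startsWith("blobStore"))
                .collect(Collectors.toList());
        }
        List<Path> hits = new ArrayList<>();
        for (Path root : roots) {
            // Walk each blob store folder and keep everything that mentions the job ID.
            try (Stream<Path> tree = Files.walk(root)) {
                tree.filter(p -> p.toString().contains(jobId)).forEach(hits::add);
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.out.println("usage: BlobStoreFinder <io.tmp.dirs directory> <job id>");
            return;
        }
        for (Path hit : find(Paths.get(args[0]), args[1])) {
            System.out.println(hit);
        }
    }
}
```

Run it on the machine that hosts the JobManager, since that is where the blob store for a submitted jar lives.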

8. Configuring the character set for Flink

Add the following line to flink-conf.yaml:

env.java.opts: "-Dfile.encoding=UTF-8"

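9. There are N servers in one Hadoop cluster. The first x servers are assigned to one kind of Flink job and the other y servers to another kind. Scheduling is handled by YARN. How can this be configured so that different jobs are scheduled onto different servers?

Solution: use the Hadoop YARN feature label based scheduling (see the reference article).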

10. Flink 1.8.0: errors when using StreamSql from 袋鼠云 (DTStack):

Caused by: java.lang.ClassNotFoundException: org.apache.flink.table.sinks.TableSink

Put flink-table-common-1.8.0.jar into the Flink lib directory.

Caused by: java.lang.ClassNotFoundException: org.apache.flink.table.sinks.RetractStreamTableSink

Put flink-table-planner_2.11-1.8.0.jar into the Flink lib directory.

Caused by: java.lang.ClassNotFoundException: org.apache.flink.table.api.StreamQueryConfig

Put flink-table-api-java-1.8.0.jar into the Flink lib directory.

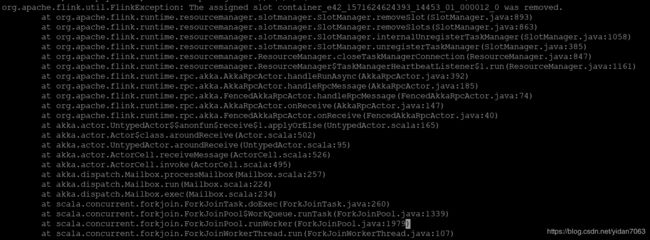

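11. org.apache.flink.util.FlinkException: The assigned slot container_e42_1571624624393_14453_01_000012_0 was removed.

The JobManager log shows: Closing TaskExecutor connection container_e42_1571624624393_14453_01_000012 because: The heartbeat of TaskManager with id container_e42_1571624624393_14453_01_000012 timed out. The underlying cause is a heartbeat timeout. Causes and solutions:

This error means the container's heartbeat timed out. There are usually two possible causes:

1. A physical machine in the cluster lost its network connection. In this case the job normally recovers after failover, and it can be ignored if it does not happen often.

2. The TaskManager on the node that failed over has too little memory, and heavy GC makes the heartbeat time out. Increase the memory setting for that node.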

12. Caused by: org.apache.flink.util.StateMigrationException: The new state serializer cannot be incompatible.

Restarting the job from a savepoint fails. Cause: the data type of the existing state was changed, from Mapstat

13. Flink on YARN: Could not start rest endpoint on any port in port range 8081

Reference answer:

This error means the port is already in use. Looking at the source code:

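    final Iterator<Integer> portsIterator;
    try {
        // Expands the configured value (single port, list, or range) into candidate ports.
        portsIterator = NetUtils.getPortRangeFromString(restBindPortRange);
    } catch (IllegalConfigurationException e) {
        throw e;
    } catch (Exception e) {
        throw new IllegalArgumentException("Invalid port range definition: " + restBindPortRange);
    }

The corresponding setting is rest.bind-port in flink-conf.yaml.

If rest.bind-port is not set, the REST server binds to the rest.port port (8081) by default.

rest.bind-port accepts a list such as 50100,50101 or a range such as 50100-50200. The range format is recommended because it avoids port conflicts.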
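To see why a range helps, here is a rough sketch of the idea: expand the configured value into candidate ports and take the first one that can be bound. This is not Flink's NetUtils implementation, only an illustration of the list and range formats described above. The class name PortRangeProbe is hypothetical.

```java
import java.io.IOException;
import java.net.ServerSocket;
import java.util.ArrayList;
import java.util.List;

class PortRangeProbe {
    /** Expands "50100,50101" or "50100-50200" (or a mix of both) into a list of ports. */
    static List<Integer> parse(String spec) {
        List<Integer> ports = new ArrayList<>();
        for (String part : spec.split(",")) {
            String p = part.trim();
            int dash = p.indexOf('-');
            if (dash < 0) {
                ports.add(Integer.parseInt(p));
            } else {
                int start = Integer.parseInt(p.substring(0, dash).trim());
                int end = Integer.parseInt(p.substring(dash + 1).trim());
                if (start > end) {
                    throw new IllegalArgumentException("Invalid port range definition: " + spec);
                }
                for (int port = start; port <= end; port++) {
                    ports.add(port);
                }
            }
        }
        return ports;
    }

    /** Returns the first port that can be bound locally, or -1 if none can. */
    static int firstFree(List<Integer> ports) {
        for (int port : ports) {
            try (ServerSocket socket = new ServerSocket(port)) {
                return port;
            } catch (IOException e) {
                // Port is in use or not bindable; try the next candidate.
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        System.out.println(parse("50100,50101"));
        System.out.println(firstFree(parse("50100-50200")));
    }
}
```

With a single port such as 8081 there is exactly one candidate, so a second session on the same host fails immediately. A range gives each new session another port to fall back on.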

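14. Field values lost between threads

Problem: some extra fields are assigned in the constructor when the object is created with new, but when they are used in asyncInvoke() both asyncClient and table are null. The assignment did not take effect.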

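    public AsyncKuduLoginRealUser(FlinkKuduConfig flinkKuduConfig, List queryFields, LRUCacheConfig lruCacheConfig) {
        super(flinkKuduConfig, queryFields, lruCacheConfig);
        this.asyncClient = AsyncQueryHelper.getAsyncKuduClientBuilder(flinkKuduConfig);
        this.table = AsyncQueryHelper.getKuduTable(flinkKuduConfig);
        // Prints the name of the thread that runs the constructor (main).
        System.out.println(Thread.currentThread().getName());
    }

Cause:

The object is created with new on the main thread, but asyncInvoke() runs on the task thread Source: Custom Source -> Flat Map -> Flat Map -> Process -> insert_async_bizuserid (1/1). Initialization and execution do not happen on the same thread, so the values are lost. A likely underlying reason is that Flink serializes the function object when the job is submitted and deserializes it inside the task, so fields that are not serialized (for example transient ones) do not keep what the constructor assigned. The open() method, however, is called on the Source... task thread, so moving the extra assignments into open() fixes the problem.

15. Flink job producing to Kafka restarts frequently because transactions expire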

See the reference article.

Add this configuration:

    // The transaction timeout must be larger than the checkpoint interval, but smaller than the broker's transaction.max.timeout.ms.
    properties.setProperty(ProducerConfig.TRANSACTION_TIMEOUT_CONFIG, 1000 * 60 * 3 + ""); // 3 minutes, in milliseconds
    properties.setProperty(ProducerConfig.MAX_IN_FLIGHT_REQUESTS_PER_CONNECTION, "1");
    properties.setProperty(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG, "true");
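The constraint in the comment can be checked before the job is submitted. The sketch below uses the literal property names behind the ProducerConfig constants so that it runs without the Kafka client on the classpath. The class name TransactionTimeoutCheck and the one-minute checkpoint interval are assumptions for illustration; 15 minutes is Kafka's default for the broker setting transaction.max.timeout.ms.

```java
import java.util.Properties;

class TransactionTimeoutCheck {
    /** True when checkpointIntervalMs < transaction.timeout.ms <= brokerMaxTimeoutMs. */
    static boolean isValid(Properties producerProps, long checkpointIntervalMs, long brokerMaxTimeoutMs) {
        long txnTimeoutMs = Long.parseLong(producerProps.getProperty("transaction.timeout.ms"));
        return txnTimeoutMs > checkpointIntervalMs && txnTimeoutMs <= brokerMaxTimeoutMs;
    }

    public static void main(String[] args) {
        Properties properties = new Properties();
        // Literal keys for TRANSACTION_TIMEOUT_CONFIG, MAX_IN_FLIGHT_REQUESTS_PER_CONNECTION
        // and ENABLE_IDEMPOTENCE_CONFIG.
        properties.setProperty("transaction.timeout.ms", String.valueOf(1000 * 60 * 3));
        properties.setProperty("max.in.flight.requests.per.connection", "1");
        properties.setProperty("enable.idempotence", "true");

        long checkpointIntervalMs = 60_000L;        // assumed: checkpoints every minute
        long brokerMaxTimeoutMs = 15 * 60 * 1000L;  // Kafka default for transaction.max.timeout.ms

        System.out.println(isValid(properties, checkpointIntervalMs, brokerMaxTimeoutMs));
    }
}
```

If the check fails, either shorten the checkpoint interval, raise the producer timeout, or raise transaction.max.timeout.ms on the brokers.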