Pyramidal LK optical flow: my own C++ implementation, written from the paper

My implementation vs. OpenCV's API function

- Comparison on VINS-Mono

- Comparison on two images

- PyrLKTracking() source code

- Summary

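Recently I have been studying the feature extraction and tracking part of VINS-Mono, both how it works and how it is coded. VINS-Mono does its optical-flow matching with OpenCV's own API function calcOpticalFlowPyrLK. I won't go over its parameters here; interested readers can look them up online. Calling the function does not show what happens inside it, and I had become very curious about how optical flow is actually implemented in code. So I read the paper "Pyramidal Implementation of the Lucas Kanade Feature Tracker: Description of the algorithm" (Jean-Yves Bouguet), consulted material online, and wrote my own version as I went. Don't laugh: it took me four or five days, but I finally have code that works inside VINS-Mono.

Its accuracy is slightly below that of OpenCV's function and, worse, it is much slower. I share the code below in the hope that more experienced readers can point out how to optimize it. For the underlying theory, see my blog post "Principle analysis of image-pyramid LK optical flow" (《图像金字塔LK光流法原理分析》).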
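For reference, here is a summary of the per-level iteration from Bouguet's paper that PyrLKTracking() follows, written in the paper's notation.

- A and B are the first and second images at pyramid level L, and I_x and I_y are the derivatives of A.
- (p_x, p_y) is the tracked point at that level.
- ω_x and ω_y are the window half-sizes: 10 for the 21×21 window in the code, except where the window is clipped at the image border.
- K is the number of iterations performed.

In the code, g^L corresponds to ggl and ν to vv. Also in the code, x is the row index and y the column index. The iteration stops once ‖η^k‖ < 0.05 or after 20 iterations. The paper numbers levels with L = 0 as the original image and L_m as the coarsest. The code's index j runs the other way, so j = 3 is the original image.

$$
G=\sum_{x=p_x-\omega_x}^{p_x+\omega_x}\;\sum_{y=p_y-\omega_y}^{p_y+\omega_y}
\begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}
$$

$$
\delta I_k(x,y)=A(x,y)-B\left(x+g_x^L+\nu_x^{k-1},\;y+g_y^L+\nu_y^{k-1}\right),
\qquad
b_k=\sum_{x}\sum_{y}\begin{bmatrix}\delta I_k\, I_x\\ \delta I_k\, I_y\end{bmatrix}
$$

$$
\eta^k=G^{-1}b_k,\qquad \nu^k=\nu^{k-1}+\eta^k,\qquad \nu^0=\begin{bmatrix}0&0\end{bmatrix}^T
$$

$$
d^L=\nu^K,\qquad g^{L-1}=2\left(g^L+d^L\right),\qquad g^{L_m}=\begin{bmatrix}0&0\end{bmatrix}^T,\qquad d=g^0+d^0
$$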

Comparison on VINS-Mono

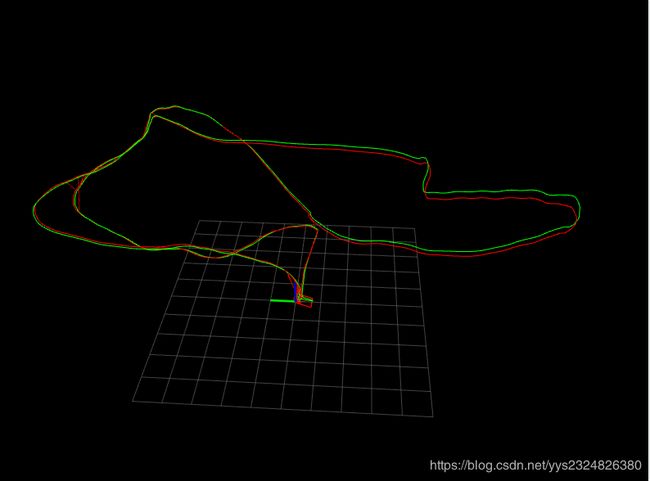

Accuracy obtained with OpenCV's API function calcOpticalFlowPyrLK():

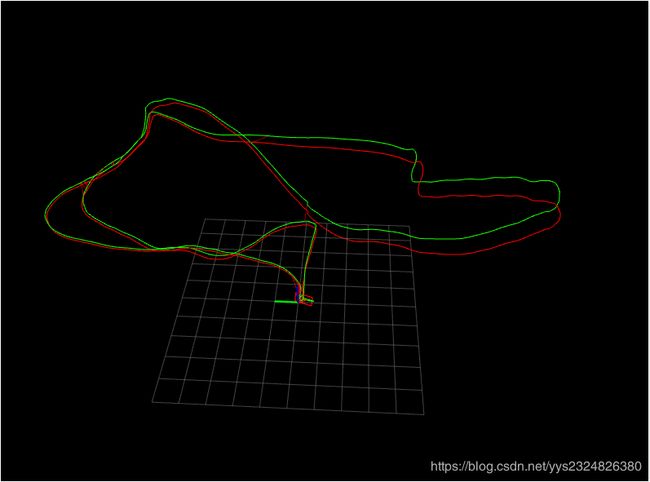

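Accuracy obtained with my own function PyrLKTracking():

The red line is the ground-truth trajectory. In the first figure, the green line is the VIO trajectory obtained with OpenCV's function, and its error is small. In the second figure, the green line is the VIO trajectory obtained with my function, and its error is larger. So my code is somewhat less accurate.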

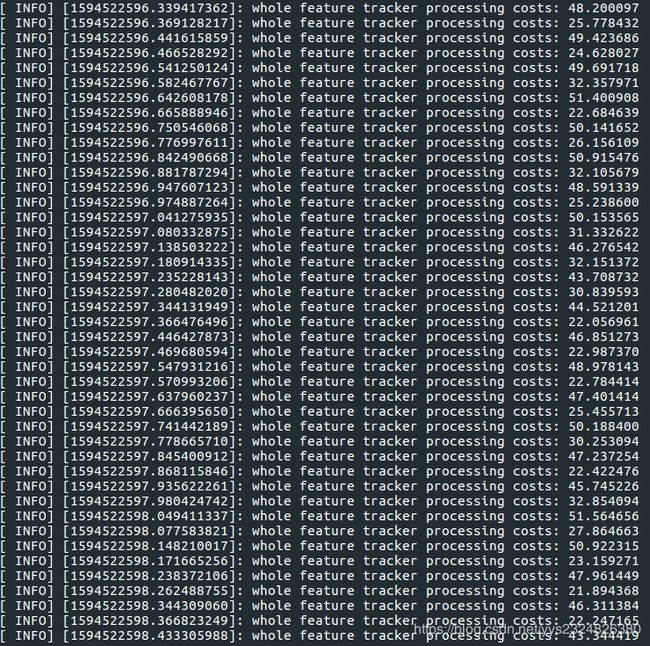

Runtime with OpenCV's API function calcOpticalFlowPyrLK():

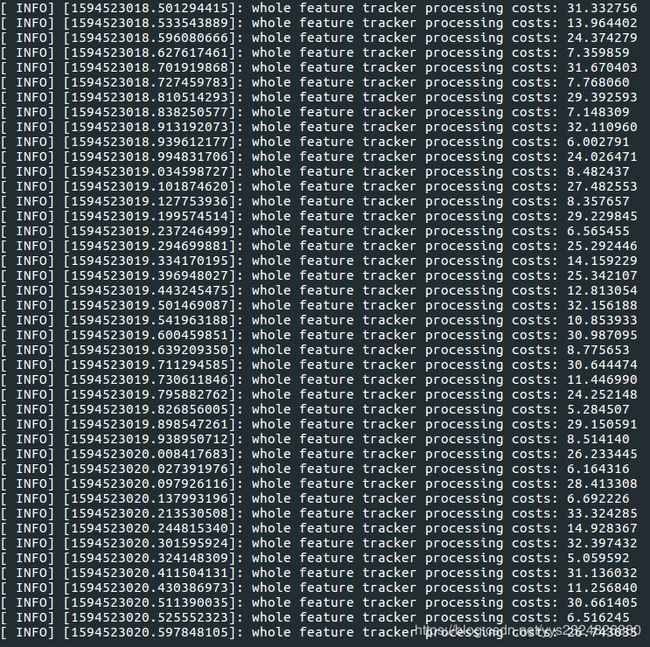

Runtime with my own function PyrLKTracking():

From these two plots, OpenCV's function takes roughly 5 ms to 33 ms (tracking 300 corners), which is fast. Mine takes roughly 21 ms to 51 ms, which is slow. In runtime, my code falls well behind.
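The ranges above are read off the plots. To measure a tracker call directly, it can be timed with std::chrono, as in the sketch below. elapsedMs is a hypothetical helper and is not part of VINS-Mono or OpenCV. Timing many frames and comparing the mean and maximum says more than a single call. Before comparing against a prebuilt OpenCV library, it is also worth checking that your own code is compiled with optimization (for example, a Release build).

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Hypothetical helper (illustration only): runs a callable once and returns the
// elapsed wall-clock time in milliseconds. steady_clock is used because, unlike
// system_clock, it never jumps backwards.
template <typename F>
double elapsedMs(F&& f)
{
    auto t0 = std::chrono::steady_clock::now();
    std::forward<F>(f)();
    auto t1 = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(t1 - t0).count();
}
```

For example, `double ms = elapsedMs([&] { PyrLKTracking(prev_img, cur_img, prev_pts, cur_pts, status); });`, where the image and point variables are placeholders.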

In addition, my function successfully tracks fewer corners than OpenCV's.

Comparison on two images

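Corners tracked by OpenCV's API function calcOpticalFlowPyrLK():

Corners tracked by my own function PyrLKTracking():

The density of the lines connecting matched corners, and the matches near the image border, make the difference clear. OpenCV's function matches more corners than mine, and my function's shortfall is most visible near the edges.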
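The listing suggests one plausible reason for the extra losses near the border. This is an inference from the code, not a measurement. A point is dropped as soon as any pixel of its window, shifted by the current flow estimate, would land outside the 1-pixel-padded image. So a point a few pixels from the edge can be lost even though the point itself stays inside.

The hypothetical helper windowStaysInside below reproduces that test along one axis at a single pyramid level. Positions are tested with floor() because the bilinear lookup reads pixels floor(pos) and floor(pos) + 1.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper (illustration only), one axis, one pyramid level.
// The window [p - halfwin, p + halfwin] is clipped to rows 0..height-1, moved into
// padded-image coordinates (+1) and shifted by the flow. The padded image has rows
// 0..height+1, and the bilinear lookup needs floor(pos) in [0, height].
bool windowStaysInside(int p, int halfwin, int height, double flow)
{
    int first = std::max(p - halfwin, 0) + 1;          // padded coordinate of first window row
    int last = std::min(p + halfwin, height - 1) + 1;  // padded coordinate of last window row
    // floor() is monotonic, so checking the two extreme rows covers the whole window.
    return std::floor(first + flow) >= 0 && std::floor(last + flow) <= height;
}
```

With a 100-row image, a point on row 95 survives a downward shift of 0.5 pixels but is dropped at a shift of 1 pixel, because the bottom of its window would then leave the padded image.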

PyrLKTracking() source code

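PyrLK_OpticalFlow.h header file

```cpp
#ifndef PyrLK_H
#define PyrLK_H

#include <algorithm>
#include <cmath>
#include <vector>

#include <Eigen/Dense>
#include <opencv2/opencv.hpp>

// The .cpp file uses unqualified OpenCV and standard-library names.
using namespace cv;
using namespace std;

// Element access on CV_64FC1 matrices: m.ATD(r, c) is m.at<double>(r, c).
#define ATD at<double>

void lowpassFilter(InputArray src, OutputArray dst);
Mat get_Ix(Mat src1, int p_x, int p_y, int windows_x, int windows_y);
Mat get_Iy(Mat src1, int p_x, int p_y, int windows_x, int windows_y);
double getSubPixel(Mat mat, double row, double col);
void PyrLKTracking(Mat src1, Mat src2, vector<cv::Point2f> points1, vector<cv::Point2f>& points2,
                   vector<uchar>& states);

#endif // PyrLK_H
```

PyrLK_OpticalFlow.cpp file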

```cpp
#include "PyrLK_OpticalFlow.h"

// Halve a CV_8UC1 image with the 3x3 low-pass kernel from Bouguet's paper:
// centre 1/4, edge neighbours 1/8, corner neighbours 1/16.
// (PyrLKTracking() builds its pyramids with pyrDown instead and does not call this.)
void lowpassFilter(InputArray src, OutputArray dst) {
    Mat srcMat = src.getMat();
    CV_Assert(srcMat.type() == CV_8UC1);
    int dstWidth = srcMat.cols / 2;
    int dstHeight = srcMat.rows / 2;
    // Pad by one reflected pixel, so original pixel (r, c) sits at (r + 1, c + 1).
    // Done before dst.create() so an in-place call (src == dst) still reads the input.
    Mat padded;
    copyMakeBorder(srcMat, padded, 1, 1, 1, 1, BORDER_REFLECT_101 + BORDER_ISOLATED);
    dst.create(Size(dstWidth, dstHeight), CV_8UC1);
    Mat dstMat = dst.getMat();
    for (int x = 0; x < dstHeight; x++) {        // x: row
        for (int y = 0; y < dstWidth; y++) {     // y: column
            int r = 2 * x + 1, c = 2 * y + 1;    // source pixel (2x, 2y) in padded coordinates
            double val = 0.25 * padded.at<uchar>(r, c)
                + 0.125 * (padded.at<uchar>(r - 1, c) + padded.at<uchar>(r + 1, c)
                         + padded.at<uchar>(r, c - 1) + padded.at<uchar>(r, c + 1))
                + 0.0625 * (padded.at<uchar>(r - 1, c - 1) + padded.at<uchar>(r + 1, c - 1)
                          + padded.at<uchar>(r - 1, c + 1) + padded.at<uchar>(r + 1, c + 1));
            dstMat.at<uchar>(x, y) = saturate_cast<uchar>(val);   // rounds instead of truncating
        }
    }
}
```
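The 1/4, 1/8 and 1/16 weights in lowpassFilter() are the outer product of [1/4, 1/2, 1/4] with itself. This has two consequences:

- The filter is separable, so it can run as two 3-tap 1-D passes instead of one 9-tap pass.
- Its weights sum to 1, so flat regions keep their grey level.

The stand-alone sketch below builds the kernel that way and checks both properties. It works on a plain row-major std::vector image, and the helper names are hypothetical. Note that PyrLKTracking() builds its pyramids with cv::pyrDown, which smooths with a 5×5 Gaussian kernel rather than this 3×3 one.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: 3x3 kernel as the outer product of [1/4, 1/2, 1/4] with itself.
std::vector<double> bouguetKernel()
{
    const double w[3] = {0.25, 0.5, 0.25};
    std::vector<double> k(9);
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            k[3 * i + j] = w[i] * w[j];   // centre 1/4, edges 1/8, corners 1/16
    return k;
}

// Hypothetical helper: weighted 3x3 average centred at (r, c) of a row-major image
// with `cols` columns; (r, c) must be at least one pixel away from every edge.
double smoothAt(const std::vector<double>& img, int cols, int r, int c)
{
    static const std::vector<double> k = bouguetKernel();
    double sum = 0;
    for (int i = -1; i <= 1; ++i)
        for (int j = -1; j <= 1; ++j)
            sum += k[3 * (i + 1) + (j + 1)] * img[(r + i) * cols + (c + j)];
    return sum;
}
```

Because the weights sum to 1, a uniform image comes out unchanged. An isolated bright pixel is spread over its 3×3 neighbourhood with one quarter of its value kept at the centre.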

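```cpp
// Derivative along the row direction ("x" in this code) over an integration window.
// src1 is a pyramid level padded by one pixel, and (p_x, p_y) is the window's top-left
// pixel in unpadded coordinates. The ROI taken at (p_x, p_y) in src1 therefore has a
// 1-pixel margin on every side. The result is (windows_x + 2) x (windows_y + 2);
// the caller uses only the interior [1, windows_x] x [1, windows_y].
Mat get_Ix(Mat src1, int p_x, int p_y, int windows_x, int windows_y)
{
    Mat roi;
    // Scale by 1/6: the Prewitt sum of three two-pixel differences then becomes an
    // averaged central difference in grey levels per pixel.
    src1(Rect(p_y, p_x, windows_y + 2, windows_x + 2)).convertTo(roi, CV_64FC1, 1.0 / 6.0, 0);
    // filter2D correlates (it does not flip the kernel): bottom row minus top row.
    Mat kernel = (Mat_<double>(3, 3) << -1, -1, -1,
                                         0,  0,  0,
                                         1,  1,  1);
    Mat Ix;
    filter2D(roi, Ix, -1, kernel);
    return Ix;
}
```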

```cpp
// Derivative along the column direction ("y" in this code); same window layout as get_Ix().
Mat get_Iy(Mat src1, int p_x, int p_y, int windows_x, int windows_y)
{
    Mat roi;
    src1(Rect(p_y, p_x, windows_y + 2, windows_x + 2)).convertTo(roi, CV_64FC1, 1.0 / 6.0, 0);
    // filter2D correlates (it does not flip the kernel): right column minus left column.
    Mat kernel = (Mat_<double>(3, 3) << -1, 0, 1,
                                        -1, 0, 1,
                                        -1, 0, 1);
    Mat Iy;
    filter2D(roi, Iy, -1, kernel);
    return Iy;
}
```
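get_Ix() and get_Iy() apply a Prewitt kernel and scale the result by about 1/6. Each of the three differences spans two pixels, so the scaled sum is an averaged central difference in grey levels per pixel. That unit matters: if the derivatives were scaled by a factor s, G would scale by s² and b by s, so the update η = G⁻¹b would be off by a factor 1/s.

The sketch below checks the scaling on a linear ramp, where the exact derivatives are known. It uses a plain row-major std::vector image, a hypothetical helper named prewittAt, and the listing's row = x convention.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: Prewitt derivatives scaled by 1/6 at (r, c) of a row-major image
// with `cols` columns. Ix is along rows ("x" in the listing), Iy along columns ("y").
void prewittAt(const std::vector<double>& img, int cols, int r, int c, double& Ix, double& Iy)
{
    auto at = [&](int rr, int cc) { return img[rr * cols + cc]; };
    Ix = 0;
    Iy = 0;
    for (int k = -1; k <= 1; ++k) {
        Ix += at(r + 1, c + k) - at(r - 1, c + k);   // bottom row minus top row
        Iy += at(r + k, c + 1) - at(r + k, c - 1);   // right column minus left column
    }
    Ix /= 6.0;   // three differences, each over two pixels
    Iy /= 6.0;
}
```

On the ramp I(r, c) = 3r + 5c, it returns exactly Ix = 3 and Iy = 5.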

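```cpp
// Bilinear interpolation in a CV_8UC1 image padded by one pixel. (row, col) are
// coordinates in the unpadded image; the +1 converts them to padded coordinates.
// The caller must keep row >= -1, col >= -1, floor(row) + 2 < mat.rows and
// floor(col) + 2 < mat.cols. (PyrLKTracking() inlines the same computation.)
double getSubPixel(Mat mat, double row, double col)
{
    row = row + 1;
    col = col + 1;
    int floorRow = (int)floor(row);
    int floorCol = (int)floor(col);
    double fracRow = row - floorRow;
    double fracCol = col - floorCol;
    int ceilRow = floorRow + 1;
    int ceilCol = floorCol + 1;
    // Each neighbour is weighted by the area of the opposite sub-rectangle.
    return (1.0 - fracRow) * (1.0 - fracCol) * (double)mat.ptr<uchar>(floorRow)[floorCol] +
           fracRow * (1.0 - fracCol) * (double)mat.ptr<uchar>(ceilRow)[floorCol] +
           (1.0 - fracRow) * fracCol * (double)mat.ptr<uchar>(floorRow)[ceilCol] +
           fracRow * fracCol * (double)mat.ptr<uchar>(ceilRow)[ceilCol];
}
```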
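getSubPixel() is standard bilinear interpolation: each of the four surrounding pixels is weighted by the area of the opposite sub-rectangle. A quick sanity check is that bilinear interpolation reproduces any plane a·row + b·col exactly.

The sketch below runs that check on a plain row-major std::vector image, without the +1 border offset. bilinear is a hypothetical helper name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: bilinear interpolation in a row-major image with `cols` columns.
// It reads pixels floor(row) + 1 and floor(col) + 1, so the caller must keep
// 0 <= row < rows - 1 and 0 <= col < cols - 1.
double bilinear(const std::vector<double>& img, int cols, double row, double col)
{
    int r0 = (int)std::floor(row), c0 = (int)std::floor(col);
    double fr = row - r0, fc = col - c0;   // fractional offsets inside the cell
    auto at = [&](int r, int c) { return img[r * cols + c]; };
    return (1 - fr) * (1 - fc) * at(r0, c0) + fr * (1 - fc) * at(r0 + 1, c0)
         + (1 - fr) * fc * at(r0, c0 + 1) + fr * fc * at(r0 + 1, c0 + 1);
}
```

Because the lookup needs one valid row and column beyond the sample point, it explains part of why the listing pads every pyramid level by one pixel before tracking.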

```cpp
// Pyramidal Lucas-Kanade tracking after Bouguet's paper.
// Convention: "x" is the ROW index and "y" the COLUMN index, the reverse of cv::Point2f.
// src1 and src2 must be CV_8UC1 grayscale images of the same size.
void PyrLKTracking(Mat src1, Mat src2, vector<cv::Point2f> points1, vector<cv::Point2f>& points2,
                   vector<uchar>& states)
{
    const int levels = 4;             // pyramid levels; index 0 is the coarsest (1/8 size)
    const int windows = 21;           // integration window size
    const int halfwin = (windows - 1) / 2;
    const int maxIter = 20;           // maximum iterations per level
    const double eps = 0.05;          // stop once the update norm drops below eps pixels

    points2 = vector<cv::Point2f>(points1.size(), cv::Point2f(0, 0));
    states = vector<uchar>(points1.size(), 0);

    // Build both pyramids; index levels - 1 is the input image.
    Mat image_pyr1[levels], image_pyr2[levels];
    image_pyr1[levels - 1] = src1;
    image_pyr2[levels - 1] = src2;
    for (int i = levels - 1; i > 0; i--)
    {
        pyrDown(image_pyr1[i], image_pyr1[i - 1], Size(image_pyr1[i].cols / 2, image_pyr1[i].rows / 2));
        pyrDown(image_pyr2[i], image_pyr2[i - 1], Size(image_pyr2[i].cols / 2, image_pyr2[i].rows / 2));
    }

    // Pad every level by one reflected pixel so that derivatives and bilinear lookups at
    // the edge stay in bounds. Pixel (r, c) of image_pyr* is pixel (r + 1, c + 1) of image_exp*.
    Mat image_exp1[levels], image_exp2[levels];
    for (int i = 0; i < levels; i++)
    {
        copyMakeBorder(image_pyr1[i], image_exp1[i], 1, 1, 1, 1, BORDER_REFLECT_101 + BORDER_ISOLATED);
        copyMakeBorder(image_pyr2[i], image_exp2[i], 1, 1, 1, 1, BORDER_REFLECT_101 + BORDER_ISOLATED);
    }

    for (int i = 0; i < (int)points1.size(); i++)
    {
        bool lost = false;                            // window left the image, or G was singular
        int pixel_x = (int)points1[i].y;              // row
        int pixel_y = (int)points1[i].x;              // column
        Eigen::Vector2d ggl = Eigen::Vector2d::Zero();  // pyramidal guess g^L
        Eigen::Vector2d vv = Eigen::Vector2d::Zero();   // iterative flow at the current level
        double thth = 0;                              // norm of the last update

        for (int j = 0; j < levels && !lost; j++)
        {
            int heightl = image_pyr1[j].rows;
            int widthl = image_pyr1[j].cols;
            double scale = std::pow(2.0, levels - 1 - j);
            int pixel_xl = (int)std::round(pixel_x / scale);
            int pixel_yl = (int)std::round(pixel_y / scale);

            // Integration window clipped to the image (unpadded coordinates).
            int start_x = std::max(pixel_xl - halfwin, 0);
            int start_y = std::max(pixel_yl - halfwin, 0);
            int windows_x = std::min(pixel_xl + halfwin, heightl - 1) - start_x + 1;
            int windows_y = std::min(pixel_yl + halfwin, widthl - 1) - start_y + 1;
            if (windows_x <= 0 || windows_y <= 0) { lost = true; break; }

            // Derivatives of the first image; indices 1..windows_* are the window itself.
            Mat Ix = get_Ix(image_exp1[j], start_x, start_y, windows_x, windows_y);
            Mat Iy = get_Iy(image_exp1[j], start_x, start_y, windows_x, windows_y);

            // Spatial gradient matrix G (constant over the iterations at this level).
            double Ix2Sum = 0, Iy2Sum = 0, IxIySum = 0;
            for (int jj = 1; jj < windows_x + 1; jj++)
                for (int kk = 1; kk < windows_y + 1; kk++)
                {
                    double ix = Ix.ATD(jj, kk), iy = Iy.ATD(jj, kk);
                    Ix2Sum += ix * ix;
                    Iy2Sum += iy * iy;
                    IxIySum += ix * iy;
                }
            Eigen::Matrix2d GG;
            GG << Ix2Sum, IxIySum,
                  IxIySum, Iy2Sum;
            // A textureless window gives a singular G and no defined flow. The 1e-6 bound only
            // guards the inverse; it is not a tuned quality threshold.
            if (std::abs(GG.determinant()) < 1e-6) { lost = true; break; }
            Eigen::Matrix2d GGinv = GG.inverse();

            const Mat& I1 = image_exp1[j];
            const Mat& I2 = image_exp2[j];
            vv.setZero();
            for (int mm = 0; mm < maxIter; mm++)
            {
                double delt_IIx = 0, delt_IIy = 0;    // mismatch vector b
                for (int jj = 1; jj < windows_x + 1 && !lost; jj++)
                {
                    for (int kk = 1; kk < windows_y + 1; kk++)
                    {
                        // Window pixel in padded coordinates, and its match in image 2.
                        int Global_x1 = jj + start_x;
                        int Global_y1 = kk + start_y;
                        double Global_Sbux2 = Global_x1 + ggl.x() + vv.x();
                        double Global_Sbuy2 = Global_y1 + ggl.y() + vv.y();
                        // floor(), not an (int) cast: the cast maps -0.5 to 0, and the
                        // bilinear lookup would then read row or column -1.
                        int Global_x2 = (int)std::floor(Global_Sbux2);
                        int Global_y2 = (int)std::floor(Global_Sbuy2);
                        // Padded rows are 0..heightl+1 and the lookup reads x2 and x2 + 1.
                        if (Global_x2 < 0 || Global_y2 < 0 || Global_x2 > heightl || Global_y2 > widthl)
                        {
                            lost = true;
                            break;
                        }
                        // delta I = I1(x) - I2(x + g + v), with I2 sampled bilinearly.
                        double fr = Global_Sbux2 - Global_x2;
                        double fc = Global_Sbuy2 - Global_y2;
                        double I2val = (1.0 - fr) * (1.0 - fc) * I2.at<uchar>(Global_x2, Global_y2) +
                                       fr * (1.0 - fc) * I2.at<uchar>(Global_x2 + 1, Global_y2) +
                                       (1.0 - fr) * fc * I2.at<uchar>(Global_x2, Global_y2 + 1) +
                                       fr * fc * I2.at<uchar>(Global_x2 + 1, Global_y2 + 1);
                        double delt_I = I1.at<uchar>(Global_x1, Global_y1) - I2val;
                        delt_IIx += delt_I * Ix.ATD(jj, kk);
                        delt_IIy += delt_I * Iy.ATD(jj, kk);
                    }
                }
                if (lost)
                    break;
                // Update eta = G^-1 b, then v <- v + eta.
                Eigen::Vector2d eta = GGinv * Eigen::Vector2d(delt_IIx, delt_IIy);
                thth = eta.norm();
                vv += eta;
                if (thth < eps)
                    break;
            }
            // Guess for the next finer level: g^(L-1) = 2 (g^L + d^L).
            if (!lost && j < levels - 1)
                ggl = 2.0 * (ggl + vv);
        }

        // Lost, or not converged at the finest level: leave states[i] = 0.
        if (lost || thth >= eps)
            continue;
        states[i] = 1;
        // Final flow d = g + d at the finest level. Keep it sub-pixel and add it to the
        // input point rather than to its truncated integer copy; rounding here would
        // throw away the sub-pixel accuracy of the iteration.
        Eigen::Vector2d dd = ggl + vv;
        points2[i].x = points1[i].x + (float)dd.y();   // column
        points2[i].y = points1[i].y + (float)dd.x();   // row
    }
}
```
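The sketch below checks the inner iteration in isolation. It runs the single-level loop of PyrLKTracking() with the same 21×21 window, 1/6-scaled Prewitt derivatives, bilinear lookup, and 0.05-pixel / 20-iteration stopping rule. The input is a synthetic Gaussian blob whose second copy is shifted by a known sub-pixel amount. The blob, image size, shift, and the names Flow and lkSingleLevel are made up for the test. No pyramid is built, so it only covers shifts of about a pixel or less. The 2×2 inverse is written out in closed form to avoid an Eigen dependency.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical result type: flow in rows (dr) and columns (dc), plus a success flag.
struct Flow { double dr, dc; bool ok; };

// Hypothetical helper: single-level iterative LK at pixel (pr, pc) of row-major
// images A and B (rows x cols), mirroring the inner loop of PyrLKTracking().
Flow lkSingleLevel(const std::vector<double>& A, const std::vector<double>& B,
                   int rows, int cols, int pr, int pc, int halfwin = 10)
{
    auto at = [&](const std::vector<double>& I, int r, int c) { return I[r * cols + c]; };
    auto bilinear = [&](const std::vector<double>& I, double r, double c) {
        int r0 = (int)std::floor(r), c0 = (int)std::floor(c);
        double fr = r - r0, fc = c - c0;
        return (1 - fr) * (1 - fc) * at(I, r0, c0) + fr * (1 - fc) * at(I, r0 + 1, c0)
             + (1 - fr) * fc * at(I, r0, c0 + 1) + fr * fc * at(I, r0 + 1, c0 + 1);
    };
    // The window plus a 1-pixel derivative margin must lie inside A.
    if (pr - halfwin < 1 || pc - halfwin < 1 || pr + halfwin > rows - 2 || pc + halfwin > cols - 2)
        return {0, 0, false};

    const int n = 2 * halfwin + 1;
    std::vector<double> Ix(n * n), Iy(n * n);
    double gxx = 0, gxy = 0, gyy = 0;                    // entries of G
    for (int i = 0; i < n; ++i)
        for (int j = 0; j < n; ++j) {
            int r = pr - halfwin + i, c = pc - halfwin + j;
            double ix = 0, iy = 0;
            for (int k = -1; k <= 1; ++k) {               // Prewitt, then scale by 1/6
                ix += at(A, r + 1, c + k) - at(A, r - 1, c + k);
                iy += at(A, r + k, c + 1) - at(A, r + k, c - 1);
            }
            ix /= 6.0;
            iy /= 6.0;
            Ix[i * n + j] = ix;
            Iy[i * n + j] = iy;
            gxx += ix * ix;
            gxy += ix * iy;
            gyy += iy * iy;
        }
    const double det = gxx * gyy - gxy * gxy;
    if (std::fabs(det) < 1e-12)
        return {0, 0, false};                            // textureless window: G is singular

    double vr = 0, vc = 0;                               // iterative flow nu
    for (int it = 0; it < 20; ++it) {
        double br = 0, bc = 0;                           // mismatch vector b
        for (int i = 0; i < n; ++i)
            for (int j = 0; j < n; ++j) {
                int r = pr - halfwin + i, c = pc - halfwin + j;
                double r2 = r + vr, c2 = c + vc;
                if (r2 < 0 || c2 < 0 || r2 >= rows - 1 || c2 >= cols - 1)
                    return {vr, vc, false};              // match left the image
                double dI = at(A, r, c) - bilinear(B, r2, c2);
                br += dI * Ix[i * n + j];
                bc += dI * Iy[i * n + j];
            }
        // eta = G^-1 b via the closed-form inverse of the symmetric 2x2 matrix G.
        double er = (gyy * br - gxy * bc) / det;
        double ec = (gxx * bc - gxy * br) / det;
        vr += er;
        vc += ec;
        if (std::sqrt(er * er + ec * ec) < 0.05)
            return {vr, vc, true};
    }
    return {vr, vc, false};                              // no convergence in 20 iterations
}
```

With B(r, c) = A(r − 0.6, c + 0.4), the call lkSingleLevel(A, B, 61, 61, 30, 30) recovers a flow close to (0.6, −0.4). A uniform image is rejected because its G is singular. Extending this to a pyramid means running the same loop on every level, adding g^L to the lookup position, and propagating g^{L−1} = 2(g^L + d^L).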

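Function parameters

```cpp
void PyrLKTracking(Mat src1, Mat src2, vector<cv::Point2f> points1, vector<cv::Point2f>& points2,
                   vector<uchar>& states)
```

- src1: the first (reference) image, grayscale (CV_8UC1)
- src2: the image to track into, grayscale (CV_8UC1)
- points1: the corners in src1 to be tracked, as cv::Point2f with x = column and y = row
- points2: output; the tracked positions of those corners in src2 ((0, 0) for corners that failed)
- states: output; 1 for a corner that was tracked successfully, 0 for one that failed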

Summary

This code still needs further optimization, and I would be grateful for advice from more experienced readers.