Python OpenCV 实现 Yolo v3

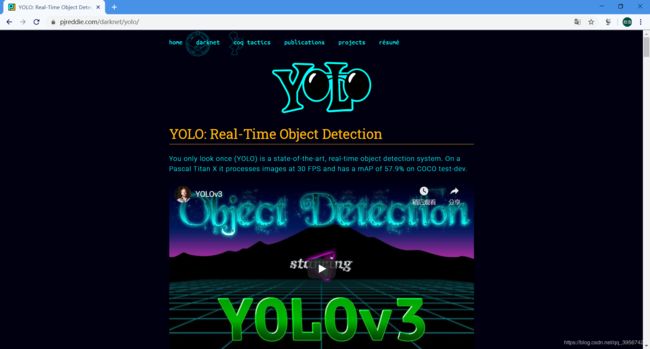

OpenCV 早在 3.x版本就涵盖 dnn 模块,使用 OpenCV 能更简别的直接运行已训练的深度学习模型,本次采用在目标检测中最强劲的 Yolo v3进行

文件准备

yolov3.cfg ,coco.names 与 yolov3.weights,yolov3.weights 可从 Yolo 官网进行下载:下载地址

yolov3.cfg 与 coco.names 在 GitHub 上直接搜寻即可,当然土豪也可以直接从本页面下载文件进行下载 yolov3.cfg,coco.names

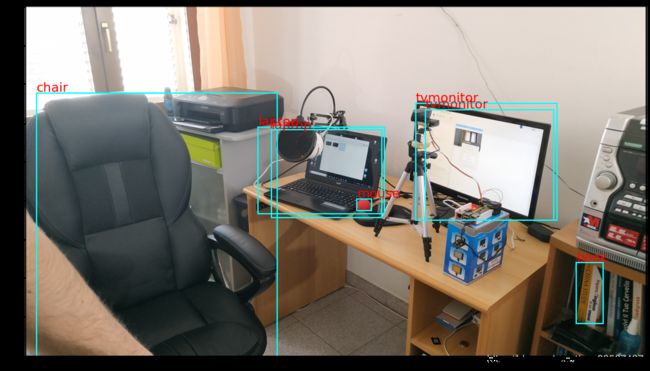

当然还需准备测试的图片,任意拍一张照片即可,下面推荐我使用的两张测试照片

demo1.jpg

demo2.jpg

程序编写

导入必要的模块

import cv2

import numpy as np

使用 OpenCV dnn 模块加载 Yolo 模型

net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")

从 coco.names 导入类别并存储为列表

classes = []

with open("coco.names", "r") as f:

classes = [line.strip() for line in f.readlines()]

print(classes)

['person', 'bicycle', 'car', 'motorbike', 'aeroplane', 'bus', 'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'sofa', 'pottedplant', 'bed', 'diningtable', 'toilet', 'tvmonitor', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush']

获得输出层

函数解释

getLayerNames() 是获取网络各层名称

getUnconnectedOutLayers() 是返回具有未连接输出的图层索引

layer_names = net.getLayerNames()

print(layer_names)

output_layers = [layer_names[i[0] - 1] for i in net.getUnconnectedOutLayers()]

print(output_layers)

['conv_0', 'bn_0', 'relu_1', 'conv_1', 'bn_1', 'relu_2', 'conv_2', 'bn_2', 'relu_3', 'conv_3', 'bn_3', 'relu_4', 'shortcut_4', 'conv_5', 'bn_5', 'relu_6', 'conv_6', 'bn_6', 'relu_7', 'conv_7', 'bn_7', 'relu_8', 'shortcut_8', 'conv_9', 'bn_9', 'relu_10', 'conv_10', 'bn_10', 'relu_11', 'shortcut_11', 'conv_12', 'bn_12', 'relu_13', 'conv_13', 'bn_13', 'relu_14', 'conv_14', 'bn_14', 'relu_15', 'shortcut_15', 'conv_16', 'bn_16', 'relu_17', 'conv_17', 'bn_17', 'relu_18', 'shortcut_18', 'conv_19', 'bn_19', 'relu_20', 'conv_20', 'bn_20', 'relu_21', 'shortcut_21', 'conv_22', 'bn_22', 'relu_23', 'conv_23', 'bn_23', 'relu_24', 'shortcut_24', 'conv_25', 'bn_25', 'relu_26', 'conv_26', 'bn_26', 'relu_27', 'shortcut_27', 'conv_28', 'bn_28', 'relu_29', 'conv_29', 'bn_29', 'relu_30', 'shortcut_30', 'conv_31', 'bn_31', 'relu_32', 'conv_32', 'bn_32', 'relu_33', 'shortcut_33', 'conv_34', 'bn_34', 'relu_35', 'conv_35', 'bn_35', 'relu_36', 'shortcut_36', 'conv_37', 'bn_37', 'relu_38', 'conv_38', 'bn_38', 'relu_39', 'conv_39', 'bn_39', 'relu_40', 'shortcut_40', 'conv_41', 'bn_41', 'relu_42', 'conv_42', 'bn_42', 'relu_43', 'shortcut_43', 'conv_44', 'bn_44', 'relu_45', 'conv_45', 'bn_45', 'relu_46', 'shortcut_46', 'conv_47', 'bn_47', 'relu_48', 'conv_48', 'bn_48', 'relu_49', 'shortcut_49', 'conv_50', 'bn_50', 'relu_51', 'conv_51', 'bn_51', 'relu_52', 'shortcut_52', 'conv_53', 'bn_53', 'relu_54', 'conv_54', 'bn_54', 'relu_55', 'shortcut_55', 'conv_56', 'bn_56', 'relu_57', 'conv_57', 'bn_57', 'relu_58', 'shortcut_58', 'conv_59', 'bn_59', 'relu_60', 'conv_60', 'bn_60', 'relu_61', 'shortcut_61', 'conv_62', 'bn_62', 'relu_63', 'conv_63', 'bn_63', 'relu_64', 'conv_64', 'bn_64', 'relu_65', 'shortcut_65', 'conv_66', 'bn_66', 'relu_67', 'conv_67', 'bn_67', 'relu_68', 'shortcut_68', 'conv_69', 'bn_69', 'relu_70', 'conv_70', 'bn_70', 'relu_71', 'shortcut_71', 'conv_72', 'bn_72', 'relu_73', 'conv_73', 'bn_73', 'relu_74', 'shortcut_74', 'conv_75', 'bn_75', 'relu_76', 'conv_76', 'bn_76', 'relu_77', 'conv_77', 'bn_77', 'relu_78', 'conv_78', 'bn_78', 'relu_79', 'conv_79', 'bn_79', 'relu_80', 'conv_80', 'bn_80', 'relu_81', 'conv_81', 'permute_82', 'yolo_82', 'identity_83', 'conv_84', 'bn_84', 'relu_85', 'upsample_85', 'concat_86', 'conv_87', 'bn_87', 'relu_88', 'conv_88', 'bn_88', 'relu_89', 'conv_89', 'bn_89', 'relu_90', 'conv_90', 'bn_90', 'relu_91', 'conv_91', 'bn_91', 'relu_92', 'conv_92', 'bn_92', 'relu_93', 'conv_93', 'permute_94', 'yolo_94', 'identity_95', 'conv_96', 'bn_96', 'relu_97', 'upsample_97', 'concat_98', 'conv_99', 'bn_99', 'relu_100', 'conv_100', 'bn_100', 'relu_101', 'conv_101', 'bn_101', 'relu_102', 'conv_102', 'bn_102', 'relu_103', 'conv_103', 'bn_103', 'relu_104', 'conv_104', 'bn_104', 'relu_105', 'conv_105', 'permute_106', 'yolo_106']

['yolo_82', 'yolo_94', 'yolo_106']

处理图像并获取 blob

img = cv2.imread("demo1.jpg")

# 获取图像尺寸与通道值

height, width, channels = img.shape

print('The image height is:',height)

print('The image width is:',width)

print('The image channels is:',channels)

blob = cv2.dnn.blobFromImage(img, 1.0 / 255.0, (416, 416), (0, 0, 0), True, crop=False)

The image height is: 2250

The image width is: 4000

The image channels is: 3

添加 matplotlib 可视化 blob 下的图像

from matplotlib import pyplot as plt

%matplotlib inline

OpenCV 采用 BGR,matplotlib 采用的 RGB,需要使用 cv2.COLOR_BGR2RGB 将 BGR 转换为 RGB

fig = plt.gcf()

fig.set_size_inches(20, 10)

num = 0

for b in blob:

for img_blob in b:

img_blob=cv2.cvtColor(img_blob, cv2.COLOR_BGR2RGB)

num += 1

ax = plt.subplot(3/3, 3, num)

ax.imshow(img_blob)

title = 'blob_image:{}'.format(num)

ax.set_title(title, fontsize=20)

将 blob 输入网络

setInput 函数将 blob输入到网络,forward 函数输入网络输出层的名字来计算网络输出,本次计算中 output_layers 包含三个输出层的列表,所以 outs 的值也是一个包含三个矩阵(array)的列表(list)

net.setInput(blob)

outs = net.forward(output_layers)

for i in range(len(outs)):

print('The {} layer out shape is:'.format(i), outs[i].shape)

The 0 layer out shape is: (507, 85)

The 1 layer out shape is: (2028, 85)

The 2 layer out shape is: (8112, 85)

进行识别与标签处理

创建记录数据列表

class_ids 记录 类别名

confidences 记录算法检测物体概率

boxes 记录框的坐标

class_ids = []

confidences = []

boxes = []

YOLO3对于一个 416 * 416 的输入图像,在每个尺度的特征图的每个网格设置3个先验框,总共有 13 * 13 * 3 + 26 * 26 * 3 + 52 * 52 * 3 = 10647 个预测。每一个预测是一个(4+1+80)=85维向量,这个85维向量包含边框坐标(4个数值),边框置信度(1个数值),对象类别的概率(对于COCO数据集,有80种对象),所以对于 detection 我们通过 detection[5:] 获取后 80 个数据(类似独热码),获取其最大值索引对应 coco.names 类别

我们打印 2 条具有非零元素的 80 个数据列表

i = 0

for out in outs:

for detection in out:

a = sum(detection[5:])

if a > 0:

print(detection[5:])

i += 1

if i == 2:

break

[0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0.93348056 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. ]

[0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0.99174595 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. ]

在 80 个数据列表中,存在一个最大的值,这个值为类别概率,我们需要为这个值设定阈值,对于低概率进行舍弃,使用 confidence 获取这个概率值,设置阈值为 0.5(对于 80 分类物体,平均概率为 0.0125 [1/80],0.5这个值设定已经足够,当然也可以设置更高的概率阈值)

从上面介绍可知 detection 85维向量包含边框坐标(4个数值),边框置信度(1个数值),对象类别的概率(对于COCO数据集,有80种对象),边框坐标是一个对输入图像尺寸大小的比例,需要乘以原输入图像才可获得像素坐标

打印一组边框坐标值与置信度

i = 0

for out in outs:

for detection in out:

print('中心像素坐标 X 对原图宽比值:',detection[0])

print('中心像素坐标 Y 对原图高比值:',detection[1])

print('边界框的宽度 W 对原图宽比值:',detection[2])

print('边界框的高度 H 对原图高比值:',detection[3])

print('此边界框置信度:',detection[4])

break

break

中心像素坐标 X 对原图宽比值: 0.035791095

中心像素坐标 Y 对原图高比值: 0.051952414

边界框的宽度 W 对原图宽比值: 0.41718394

边界框的高度 H 对原图高比值: 0.123795405

此边界框置信度: 2.766259e-08

我们需要通过 detection[0] ~ detection[3]来计算 cv2.rectangle() 函数需要的(x, y, w, h)的值,绘制边框,为了适应 jupyter-notebook 的环境,我是用 matplotlib 进行绘制,注意 matplotlib 使用 RGB 与 OpenCV 使用 BGR,plt.Rectangle edgecolor 设置先统一颜色(注意 edgecolor 采用 RGBA 模式,值为 0~1),plt.text() 也采用 RGBA 模式

plt_img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

fig = plt.gcf()

fig.set_size_inches(20, 10)

plt.imshow(plt_img)

# jupyter 对每次运行结果会保留,再次运行列表创建

class_ids = []

confidences = []

boxes = []

i = 0

for out in outs:

for detection in out:

scores = detection[5:]

class_id = np.argmax(scores)

confidence = scores[class_id]

if confidence > 0.5:

center_x = int(detection[0] * width)

center_y = int(detection[1] * height)

w = int(detection[2] * width)

h = int(detection[3] * height)

x = int(center_x - w / 2)

y = int(center_y - h / 2)

boxes.append([x, y, w, h])

confidences.append(float(confidence))

class_ids.append(class_id)

label = classes[class_id]

plt.gca().add_patch(

plt.Rectangle((x, y), w,

h, fill=False,

edgecolor=(0, 1, 1), linewidth=2)

)

plt.text(x, y - 10, label, color = (1, 0, 0), fontsize=20)

print('object {} :'.format(i), label)

i += 1

plt.show()

object 0 : tvmonitor

object 1 : laptop

object 2 : chair

object 3 : tvmonitor

object 4 : laptop

object 5 : book

object 6 : mouse

在检测中发现出现双框的效果(或者出现多框),使用 NMS 算法解决多框问题,OpenCV dnn 模块自带了 NMSBoxes() 函数,NMS 目的是在邻域内保留同一检测目标置信度最大的框,在下方输出中可以发现对于邻域相同目标检测,只保留了 confidence 值最大的 box 索引,例如 object 0 : tvmonitor 与 object 3 : tvmonitor 概率分别为 0.9334805607795715 与 0.9716598987579346,显然保留了 object 3 : tvmonitor,在索引 indexes 中没有 [0] 元素,其余推断类似

plt_img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

fig = plt.gcf()

fig.set_size_inches(30, 20)

ax_img = plt.subplot(1, 2, 1)

ax_img.imshow(plt_img)

# jupyter 对每次运行结果会保留,再次运行一次

class_ids = []

confidences = []

boxes = []

i = 0

for out in outs:

for detection in out:

scores = detection[5:]

class_id = np.argmax(scores)

confidence = scores[class_id]

if confidence > 0.5:

center_x = int(detection[0] * width)

center_y = int(detection[1] * height)

w = int(detection[2] * width)

h = int(detection[3] * height)

x = int(center_x - w / 2)

y = int(center_y - h / 2)

boxes.append([x, y, w, h])

confidences.append(float(confidence))

class_ids.append(class_id)

label = classes[class_id]

plt.gca().add_patch(

plt.Rectangle((x, y), w,

h, fill=False,

edgecolor=(0, 1, 1), linewidth=2)

)

plt.text(x, y - 10, label, color = (1, 0, 0), fontsize=20)

print('object {} :'.format(i), label + ' '*(10 - len(label)), 'confidence :{}'.format(confidence))

i += 1

print(confidences)

indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.4)

print(indexes, end='')

ax_img = plt.subplot(1, 2, 2)

ax_img.imshow(plt_img)

for j in range(len(boxes)):

if j in indexes:

x, y, w, h = boxes[j]

label = classes[class_ids[j]]

plt.gca().add_patch(

plt.Rectangle((x, y), w,

h, fill=False,

edgecolor=(0, 1, 1), linewidth=2)

)

plt.text(x, y - 10, label, color = (1, 0, 0), fontsize=20)

plt.show()

object 0 : tvmonitor confidence :0.9334805607795715

object 1 : laptop confidence :0.9917459487915039

object 2 : chair confidence :0.9899855256080627

object 3 : tvmonitor confidence :0.9716598987579346

object 4 : laptop confidence :0.9745261669158936

object 5 : book confidence :0.6494743824005127

object 6 : mouse confidence :0.5076925158500671

[0.9334805607795715, 0.9917459487915039, 0.9899855256080627, 0.9716598987579346, 0.9745261669158936, 0.6494743824005127, 0.5076925158500671]

[[1]

[2]

[3]

[5]

[6]]

让同一类别颜色相同,不同类别颜色不同

jupyter notebook 下最终源码

import cv2

import numpy as np

# Load Yolo

net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")

classes = []

with open("coco.names", "r") as f:

classes = [line.strip() for line in f.readlines()]

layer_names = net.getLayerNames()

output_layers = [layer_names[i[0] - 1] for i in net.getUnconnectedOutLayers()]

colors = np.random.uniform(0, 255, size=(len(classes), 3)) / 255

# Loading image

img = cv2.imread("demo1.jpg")

# img = cv2.resize(img, None, fx=0.4, fy=0.4)

height, width, channels = img.shape

# Detecting objects

blob = cv2.dnn.blobFromImage(img, 1.0 / 255.0, (416, 416), (0, 0, 0), True, crop=False)

net.setInput(blob)

outs = net.forward(output_layers)

# Showing informations on the screen

class_ids = []

confidences = []

boxes = []

fig = plt.gcf()

fig.set_size_inches(20, 10)

plt_img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

plt.imshow(plt_img)

for out in outs:

for detection in out:

scores = detection[5:]

class_id = np.argmax(scores)

confidence = scores[class_id]

if confidence > 0.5:

# Object detected

center_x = int(detection[0] * width)

center_y = int(detection[1] * height)

w = int(detection[2] * width)

h = int(detection[3] * height)

# Rectangle coordinates

x = int(center_x - w / 2)

y = int(center_y - h / 2)

boxes.append([x, y, w, h])

confidences.append(float(confidence))

class_ids.append(class_id)

indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.4)

for i in range(len(boxes)):

if i in indexes:

x, y, w, h = boxes[i]

label = str(classes[class_ids[i]])

color = colors[i]

plt.gca().add_patch(

plt.Rectangle((x, y), w,

h, fill=False,

edgecolor=color, linewidth=2)

)

plt.text(x, y - 10, label, color = color, fontsize=20)

到此使用 OpenCV 实现 Yolo v3结束

代码扩展

一、Python 代码图片识别

yolo_object_detection_image.py

import cv2

import numpy as np

# Load Yolo

net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")

classes = []

with open("coco.names", "r") as f:

classes = [line.strip() for line in f.readlines()]

layer_names = net.getLayerNames()

output_layers = [layer_names[i[0] - 1] for i in net.getUnconnectedOutLayers()]

colors = np.random.uniform(0, 255, size=(len(classes), 3))

# Loading image

img = cv2.imread("demo1.jpg")

height, width, channels = img.shape

# Detecting objects

blob = cv2.dnn.blobFromImage(img, 0.00392, (416, 416), (0, 0, 0), True, crop=False)

net.setInput(blob)

outs = net.forward(output_layers)

# Showing informations on the screen

class_ids = []

confidences = []

boxes = []

for out in outs:

for detection in out:

scores = detection[5:]

class_id = np.argmax(scores)

confidence = scores[class_id]

if confidence > 0.5:

# Object detected

center_x = int(detection[0] * width)

center_y = int(detection[1] * height)

w = int(detection[2] * width)

h = int(detection[3] * height)

# Rectangle coordinates

x = int(center_x - w / 2)

y = int(center_y - h / 2)

boxes.append([x, y, w, h])

confidences.append(float(confidence))

class_ids.append(class_id)

indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.4)

font = cv2.FONT_HERSHEY_SIMPLEX

for i in range(len(boxes)):

if i in indexes:

x, y, w, h = boxes[i]

label = str(classes[class_ids[i]])

color = colors[i]

cv2.rectangle(img, (x, y), (x + w, y + h), color, 3)

cv2.putText(img, label, (x, y - 20), font, 2, color, 3)

cv2.namedWindow("Image",0)

cv2.resizeWindow("Image", 1600, 900)

cv2.imshow("Image", img)

cv2.waitKey(0)

cv2.destroyAllWindows()

yolo_object_detection_webcam.py

二、Python 代码摄像头识别

import cv2

import numpy as np

# Load Yolo

net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")

classes = []

with open("coco.names", "r") as f:

classes = [line.strip() for line in f.readlines()]

layer_names = net.getLayerNames()

output_layers = [layer_names[i[0] - 1] for i in net.getUnconnectedOutLayers()]

colors = np.random.uniform(0, 255, size=(len(classes), 3))

# Initialize frame rate calculation

frame_rate_calc = 1

freq = cv2.getTickFrequency()

cap = cv2.VideoCapture(0)

while True:

# Start timer (for calculating frame rate)

t1 = cv2.getTickCount()

ret, frame = cap.read()

height, width, channels = frame.shape

# Detecting objects

blob = cv2.dnn.blobFromImage(frame, 0.00392, (416, 416), (0, 0, 0), True, crop=False)

net.setInput(blob)

outs = net.forward(output_layers)

# Showing informations on the screen

class_ids = []

confidences = []

boxes = []

for out in outs:

for detection in out:

scores = detection[5:]

class_id = np.argmax(scores)

confidence = scores[class_id]

if confidence > 0.5:

# Object detected

center_x = int(detection[0] * width)

center_y = int(detection[1] * height)

w = int(detection[2] * width)

h = int(detection[3] * height)

# Rectangle coordinates

x = int(center_x - w / 2)

y = int(center_y - h / 2)

boxes.append([x, y, w, h])

confidences.append(float(confidence))

class_ids.append(class_id)

indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.4)

font = cv2.FONT_HERSHEY_SIMPLEX

for i in range(len(boxes)):

if i in indexes:

x, y, w, h = boxes[i]

label = str(classes[class_ids[i]])

color = colors[i]

cv2.rectangle(frame, (x, y), (x + w, y + h), color, 1)

cv2.putText(frame, label, (x, y - 20), font, 0.7, color, 1)

cv2.putText(frame,'FPS: {0:.2f}'.format(frame_rate_calc), (30,50),font, 0.7, (255,255,0), 1)

cv2.namedWindow("Image", cv2.WINDOW_NORMAL)

cv2.resizeWindow("Image", 960, 540)

cv2.imshow("Image", frame)

# Calculate framerate

t2 = cv2.getTickCount()

time1 = (t2-t1)/freq

frame_rate_calc= 1/time1

# Press 'q' to quit

if cv2.waitKey(1) == ord('q'):

break

cap.release()

cv2.destroyAllWindows()