Building a Kafka + Spark Real-Time Processing System on Linux

Server requirements: jdk-8u121-linux-x64.tar.gz, kafka_2.12-0.10.2.1.tgz, spark-1.3.1-bin-hadoop2-without-hive.tgz

1. Producer: SpringMVC + Kafka

1.1 Preparation

Required resources: kafka_2.10-0.8.2.2.jar and kafka-clients-0.10.0.0.jar. Import both jars into the project.

1.2 Configuration

The Kafka producer is configured as follows:

bootstrap.servers=172.17.0.2:9092

acks=all

retries=3

batch.size=16384

linger.ms=1

buffer.memory=33554432

key.serializer=org.apache.kafka.common.serialization.StringSerializer

value.serializer=org.apache.kafka.common.serialization.StringSerializer

1.3 Writing the code
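The settings in section 1.2 are ordinary Java properties, so they can be loaded and sanity-checked with the JDK alone before a producer is created. The sketch below assumes a hypothetical helper class, ProducerConfigCheck, which is not part of the original project. It parses the properties text and fails fast if one of the keys treated here as required (bootstrap.servers and the two serializers) is missing.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Hypothetical helper, not part of the original project.
public class ProducerConfigCheck {

    // Keys this sketch treats as required for the producer configuration.
    public static final List<String> REQUIRED = Arrays.asList(
            "bootstrap.servers", "key.serializer", "value.serializer");

    // Parse properties text and reject it if a required key is missing.
    public static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        for (String key : REQUIRED) {
            if (props.getProperty(key) == null) {
                throw new IllegalArgumentException("missing producer setting: " + key);
            }
        }
        return props;
    }

    public static void main(String[] args) {
        // Same values as the configuration listed in section 1.2.
        String text = String.join("\n",
                "bootstrap.servers=172.17.0.2:9092",
                "acks=all",
                "retries=3",
                "batch.size=16384",
                "linger.ms=1",
                "buffer.memory=33554432",
                "key.serializer=org.apache.kafka.common.serialization.StringSerializer",
                "value.serializer=org.apache.kafka.common.serialization.StringSerializer");
        Properties props = load(text);
        System.out.println(props.getProperty("acks")); // prints "all"
    }
}
```

The returned Properties object could then be passed to the KafkaProducer constructor, which is what the KafkaProducerT class does with its own Properties instance.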

1.3.1 Thread pool factory

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.ThreadFactory;

public class ExecutorServiceFactory {

private ExecutorService executors;

private ExecutorServiceFactory() {

}

public static final ExecutorServiceFactory getInstance() {

return KafkaProducerPoolHolder.instance;

}

private static class KafkaProducerPoolHolder {

private static final ExecutorServiceFactory instance = new ExecutorServiceFactory();

}

public ExecutorService createScheduledThreadPool() {

// Number of available CPU cores

int availableProcessors = Runtime.getRuntime().availableProcessors();

// Create a scheduled pool with 10 threads per core

executors = Executors.newScheduledThreadPool(availableProcessors * 10, new KafkaProducterThreadFactory());

return executors;

}

public ExecutorService createSingleThreadExecutor() {

// Create a single-thread executor

executors = Executors.newSingleThreadExecutor(new KafkaProducterThreadFactory());

return executors;

}

public ExecutorService createCachedThreadPool() {

// Create a cached thread pool

executors = Executors.newCachedThreadPool(new KafkaProducterThreadFactory());

return executors;

}

public ExecutorService createFixedThreadPool(int count) {

// Create a fixed-size pool with the requested number of threads

executors = Executors.newFixedThreadPool(count, new KafkaProducterThreadFactory());

return executors;

}

private class KafkaProducterThreadFactory implements ThreadFactory {

@Override

public Thread newThread(Runnable runnable) {

SecurityManager s = System.getSecurityManager();

ThreadGroup group = (s != null) ? s.getThreadGroup() : Thread.currentThread().getThreadGroup();

Thread t = new Thread(group, runnable);

return t;

}

}

}

import java.util.concurrent.Callable;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Future;

public class KafkaProducerPool {

private ExecutorService executor;

private KafkaProducerPool() {

executor = ExecutorServiceFactory.getInstance().createFixedThreadPool(2);

}

public static final KafkaProducerPool getInstance() {

return KafkaProducerPoolHolder.instance;

}

private static class KafkaProducerPoolHolder {

private static final KafkaProducerPool instance = new KafkaProducerPool();

}

/**

* Shuts down the thread pool. Note: after shutdown is called, the pool finishes every task already in its queue before it exits.

*

* @author SHANHY

* @date 2015-12-04

*/

public void shutdown(){

executor.shutdown();

}

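/**

* Submits a Runnable to the thread pool. The returned Future can be used to wait for the task to complete.

*

* @param task the task to run

* @return a Future representing the pending task

* @author SHANHY

* @date 2015-12-04

*/

public Future<?> submit(Runnable task) {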

return executor.submit(task);

}

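/**

* Submits a Callable to the thread pool. The task's return value is available from the returned Future.

*

* @param task the task to run

* @return a Future holding the task's result

* @author SHANHY

* @date 2015-12-04

*/

public <T> Future<T> submit(Callable<T> task) {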

return executor.submit(task);

}

/**

* Hands a task directly to the thread pool; nothing is returned.

*

* @param task the task to run

* @author SHANHY

* @date 2015-12-04

*/

public void execute(Runnable task){

executor.execute(task);

}

}

import java.io.FileNotFoundException;

import java.io.IOException;

import java.io.InputStream;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import org.apache.kafka.clients.producer.Callback;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.Producer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import com.cda91.common.HttpJResopnse;

public class KafkaProducerT implements Runnable {

private static Producer producer;

private String topic = "kafka_producer_title";

private static Map<String, List<Object>> messages;

private static List<String> keys;

private String key = null;

private Object value = null;

private static Properties props;

private static final String productPro = "/kafka.properties";

private KafkaProducterListener listener;

private KafkaProducerT() {

System.out.println("KafkaProducerT constructor called");

props = new Properties();

messages = new HashMap<String, List<Object>>();

keys = new ArrayList<String>();

try {

InputStream is = KafkaProducerT.class.getResourceAsStream(productPro);

props.load(is);

is.close();

is = null;

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

producer = new KafkaProducer(props);

}

public static final KafkaProducerT getInstance() {

return KafkaProducerTHolder.instance;

}

private static class KafkaProducerTHolder{

private static final KafkaProducerT instance = new KafkaProducerT();

}

public void setTopic(String topic) {

this.topic = topic;

}

public void push(String key,Object value){

if(!keys.contains(key)) {

keys.add(key);

}

if(!messages.containsKey(key)) {

messages.put(key, new ArrayList

2. Consumer: Kafka + Maven

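2.1 pom.xml

<project>
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.cda</groupId>
  <artifactId>sparkcda</artifactId>
  <version>0.0.1</version>
  <packaging>jar</packaging>

  <name>sparkcda</name>
  <url>http://maven.apache.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  </properties>

  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.10</artifactId>
      <version>1.3.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.10</artifactId>
      <version>1.3.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.kafka</groupId>
      <artifactId>kafka_2.10</artifactId>
      <version>0.8.2.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming-kafka_2.10</artifactId>
      <version>1.3.1</version>
    </dependency>
    <dependency>
      <groupId>com.101tec</groupId>
      <artifactId>zkclient</artifactId>
      <version>0.10</version>
    </dependency>
    <dependency>
      <groupId>com.yammer.metrics</groupId>
      <artifactId>metrics-core</artifactId>
      <version>2.2.0</version>
    </dependency>
  </dependencies>
</project>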

import java.util.Arrays;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Map;

import java.util.Set;

import java.util.regex.Pattern;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaPairDStream;

import org.apache.spark.streaming.api.java.JavaPairInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka.KafkaUtils;

import kafka.serializer.StringDecoder;

import scala.Tuple2;

public class AccessStream {

private static final Pattern SPACE = Pattern.compile(" ");

public static void main(String[] args) {

consurmer();

}

public static void consurmer() {

String brokers = "localhost:9092";

String topics = "cda_pv";

// Create context with a 20-second batch interval

SparkConf sparkConf = new SparkConf().setAppName("AccessStream");

sparkConf.setMaster("local[*]");

JavaStreamingContext jssc = new JavaStreamingContext(sparkConf, Durations.seconds(20));

Set<String> topicsSet = new HashSet<String>(Arrays.asList(topics.split(",")));

Map<String, String> kafkaParams = new HashMap<String, String>();

kafkaParams.put("metadata.broker.list", brokers);

// Create direct kafka stream with brokers and topics

JavaPairInputDStream<String, String> messages = KafkaUtils.createDirectStream(

jssc,

String.class,

String.class,

StringDecoder.class,

StringDecoder.class,

kafkaParams,

topicsSet

);

// Get the lines, split them into words, count the words and print

JavaDStream<String> lines = messages.map(new Function<Tuple2<String, String>, String>() {

/**

*

*/

private static final long serialVersionUID = 1L;

@Override

public String call(Tuple2<String, String> tuple2) {

return tuple2._2();

}

});

JavaDStream<String> words = lines.flatMap(new FlatMapFunction<String, String>() {

/**

*

*/

private static final long serialVersionUID = 1L;

@Override

public Iterable<String> call(String x) {

return Arrays.asList(SPACE.split(x));

}

});

JavaPairDStream<String, Integer> wordCounts = words.mapToPair(

new PairFunction<String, String, Integer>() {

/**

*

*/

private static final long serialVersionUID = 1L;

@Override

public Tuple2<String, Integer> call(String s) {

return new Tuple2<String, Integer>(s, 1);

}

}).reduceByKey(

new Function2<Integer, Integer, Integer>() {

/**

*

*/

private static final long serialVersionUID = 1L;

@Override

public Integer call(Integer i1, Integer i2) {

return i1 + i2;

}

});

wordCounts.print();

// Start the computation

jssc.start();

jssc.awaitTermination();

}

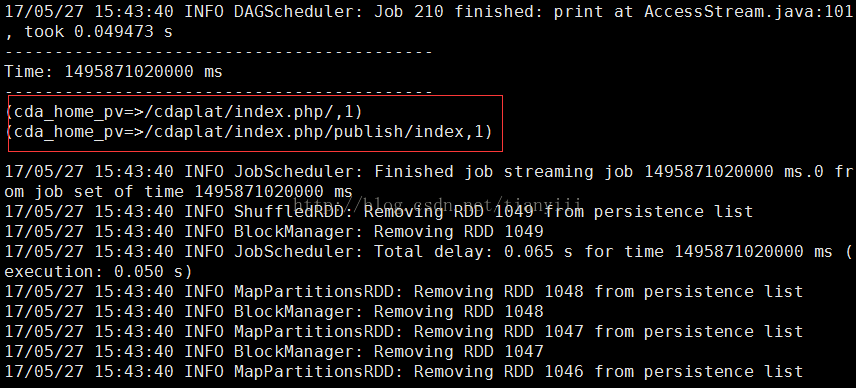

} 3、服务器搭建

3.1 Software installation

Extract jdk-8u121-linux-x64.tar.gz, kafka_2.12-0.10.2.1.tgz, and spark-1.3.1-bin-hadoop2-without-hive.tgz to /usr/local and add them to PATH (this is routine, so the details are omitted).

3.2 Configuration

echo -e "export JAVA_HOME=$JAVA_HOME" >> $SPARK_HOME/sbin/spark-config.sh

echo -e "advertised.listeners=PLAINTEXT://<your-server-IP>:9092" >> $KAFKA_HOME/config/server.properties

3.3 Testing

spark-submit --class com.cda.stream.AccessStream --jars /usr/local/spark-1.3.1-bin-hadoop2-without-hive/lib/kafka_2.10-0.8.2.1.jar,/usr/local/spark-1.3.1-bin-hadoop2-without-hive/lib/spark-streaming-kafka_2.10-1.3.1.jar,/usr/local/kafka_2.12-0.10.2.1/libs/metrics-core-2.2.0.jar /opt/sparkcda-0.0.1.jar
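When checking what the submitted job prints, it can help to work out the expected counts for one batch by hand. The sketch below reproduces the per-batch logic of AccessStream (split each line on a single space, map each word to 1, sum by key) using only the JDK. The class name WordCountCheck and the sample lines are hypothetical. It does not model Kafka or Spark itself, only the counting.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the original project.
public class WordCountCheck {

    // Same delimiter as the SPACE pattern in AccessStream.
    private static final Pattern SPACE = Pattern.compile(" ");

    // Count words across all lines of one batch, keeping first-seen order.
    public static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<String, Integer>();
        for (String line : lines) {
            for (String word : SPACE.split(line)) {
                // Equivalent to mapping to (word, 1) and reducing with i1 + i2.
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up messages standing in for one 20-second batch.
        List<String> batch = Arrays.asList("home cart home", "cart pay");
        System.out.println(count(batch)); // prints {home=2, cart=2, pay=1}
    }
}
```

The streaming job prints its pairs as tuples and in no guaranteed order, so only the counts per word are expected to match, not the formatting.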