DeepLabv3+代码实现+训练自己的数据集

1.环境配置

(1)首先需要配置代码运行所需要的环境,本文使用的是从官网下载的代码,其源码是在TensorFlow运行的,故需要安装TensorFlow,我安装的是TensorFlow-gpu=15.0版本,所以之前需要先配置好相应的Cuda环境和Cudnn环境,这里使用的是Cuda10.0,我试过Cuda10.1,会出现错误,虽然可以通过修改实现代码正常运行,但最后还是安装与TensorFlow版本相对应的cuda版本。

(2)安装之后运行代码可能会出现ImportError: No module named 'nets’的错误,这是因为TensorFlow15.0中不包含这个模块了,所以需要额外添加。

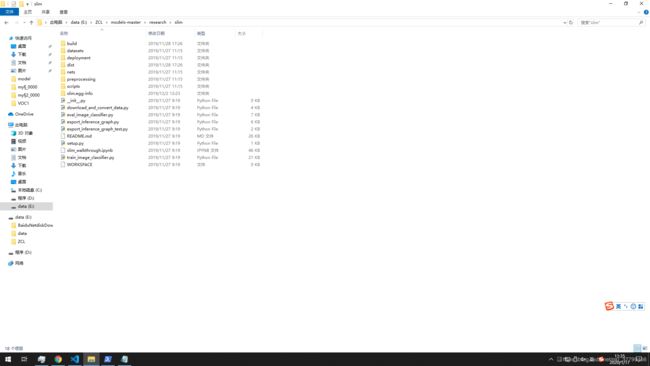

1)首先从git上下载slim源码,其包含在model中,下载

2)打开相应目录

然后运行setup.py文件,将slim中所有的模块加载。运行命令:

python setup.py build

python setup.py install

运行命令是可能会出现error: could not create ‘build’: 当文件已存在时,无法创建该文件。

原因是git clone下来的代码库中有个BUILD文件,而build和install指令需要新建build文件夹,名字冲突导致问题。暂时不清楚BUILD文件的作用。将该文件移动到其他目录,再运行上述指令,即可成功安装。

2.制作数据集

(1)可以使用labelme进行数据集的制作,首先使用pip安装labelme,一般都可以成功安装,个别可能出现错误,但都很容易解决。标注过程如下:

点击保存后会出现一个同名的.json文件,里面包含了标注信息。由于源码只能通过json文件一个个的生产分割图像,太多麻烦,所以我修改了一下源码可以成批生产分割图像。

import argparse

import json

import os

import os.path as osp

import warnings

import copy

import shutil

import numpy as np

import PIL.Image

from skimage import io

import yaml

from labelme import utils

NAME_LABEL_MAP = {

'_background_': 0,

'Top': 1,

'Rock': 2,

'coal': 3,

}

def main():

parser = argparse.ArgumentParser()

parser.add_argument('json_file')

parser.add_argument('-o', '--out', default=None)

args = parser.parse_args()

json_file = args.json_file

out_dir = args.out

if not os.path.exists(out_dir):

os.mkdir(out_dir)

print(out_dir)

list = os.listdir(json_file)

for i in range(0, len(list)):

path = os.path.join(json_file, list[i])

if (list[i].split(".")[-1])!="json":

continue

filename = list[i][:-5] # .json

print(filename)

#label_name_to_value = {'_background_': 0}

if os.path.isfile(path):

data = json.load(open(path))

img = utils.image.img_b64_to_arr(data['imageData'])

lbl, lbl_names = utils.shape.labelme_shapes_to_label(img.shape, data['shapes']) # labelme_shapes_to_label

#lbl = utils.shapes_to_label(img.shape, data['shapes'], label_name_to_value)

# modify labels according to NAME_LABEL_MAP

lbl_tmp = copy.copy(lbl)

for key_name in lbl_names:

old_lbl_val = lbl_names[key_name]

new_lbl_val = NAME_LABEL_MAP[key_name]

lbl_tmp[lbl == old_lbl_val] = new_lbl_val

lbl_names_tmp = {}

for key_name in lbl_names:

lbl_names_tmp[key_name] = NAME_LABEL_MAP[key_name]

# Assign the new label to lbl and lbl_names dict

lbl = np.array(lbl_tmp, dtype=np.int8)

lbl_names = lbl_names_tmp

captions = ['%d: %s' % (l, name) for l, name in enumerate(lbl_names)]

lbl_viz = utils.draw.draw_label(lbl, img, captions)

utils.lblsave(osp.join(out_dir, '{}.png'.format(filename)), lbl)

print('Saved to: %s' % out_dir)

if __name__ == '__main__':

main()

其运行方式为python batch_json_to_dataset.py “json_path” -o=“out_path”

即可将文件夹中所以文件转化为分割图像;然后使用VOC数据集格式进行处理。获得最终数据集。

(2)将数据集分成train与val两部分,方便之后的测试;我的代码是按9:1来分的,具体比例根据实际情况来定。

#coding:utf-8

import os

import random

trainval_percent = 1 #训练验证数据集的百分比

train_percent = 0.9 #训练集的百分比

filepath = './JPEGImages'

total_img = os.listdir(filepath)

num=len(total_img) #列表的长度

list=range(num)

tv=int(num*trainval_percent) #训练验证集的图片个数

tr=int(tv*train_percent) #训练集的图片个数 # sample(seq, n) 从序列seq中选择n个随机且独立的元素;

trainval= random.sample(list,tv)

train=random.sample(trainval,tr)

#创建文件trainval.txt,test.txt,train.txt,val.txt

ftrain = open('./ImageSets/Segmentation/train.txt', 'w')

fval = open('./ImageSets/Segmentation/val.txt', 'w')

for i in list:

name=total_img[i][:-4]+'\n'

if i in train:

ftrain.write(name)

else:

fval.write(name)

ftrain.close()

fval.close()

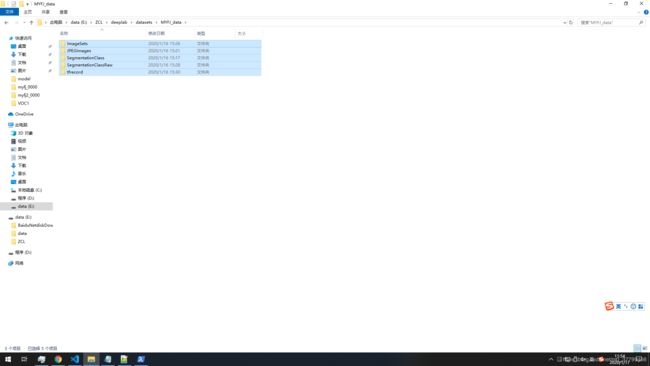

(3)数据集处理

首先使用代码中自带remove_gt_colormap.py文件,将数据集转化为单通道的标签数据,

'''Removes the color map from the ground truth segmentation annotations and save the results to output_dir.'''

import glob

import os.path

import numpy as np

from PIL import Image

import tensorflow as tf

FLAGS = tf.compat.v1.flags.FLAGS

#彩色分割图像目录

tf.compat.v1.flags.DEFINE_string('original_gt_folder',

'./SegmentationClass',

'Original ground truth annotations.')

#分割图像保存格式

tf.compat.v1.flags.DEFINE_string('segmentation_format', 'png', 'Segmentation format.')

#输出单通道图像保存目录

tf.compat.v1.flags.DEFINE_string('output_dir',

'./SegmentationClassRaw',

'folder to save modified ground truth annotations.')

#打开并转化图像数据排列方式

def _remove_colormap(filename):

return np.array(Image.open(filename))

#保存单通道语义分割图像

def _save_annotation(annotation, filename):

"""Saves the annotation as png file.

Args:

annotation: Segmentation annotation.

filename: Output filename.

"""

pil_image = Image.fromarray(annotation.astype(dtype=np.uint8))

with tf.io.gfile.GFile(filename, mode='w') as f:

pil_image.save(f, 'PNG')

def main(unused_argv):

#创建输出目录(如果不存在)

if not tf.io.gfile.isdir(FLAGS.output_dir):

tf.io.gfile.makedirs(FLAGS.output_dir)

#获取所有彩色语义分割图像目录

annotations = glob.glob(os.path.join(FLAGS.original_gt_folder,'*.' + FLAGS.segmentation_format))

#遍历所有的彩色语义分割图像,将其转化为单通道图像

for annotation in annotations:

raw_annotation = _remove_colormap(annotation)

filename = os.path.basename(annotation)[:-4]

_save_annotation(raw_annotation,os.path.join(FLAGS.output_dir,filename + '.' + FLAGS.segmentation_format))

if __name__ == '__main__':

tf.compat.v1.app.run()

然后使用build_voc2012_data.py文件将其转化为tfrcord格式的数据,运行时需要修改–image_format=“png”。

build_data.py

import collections

import six

#import tensorflow as tf

import tensorflow.compat.v1 as tf

FLAGS = tf.app.flags.FLAGS

tf.app.flags.DEFINE_enum('image_format', 'jpg', ['jpg', 'jpeg', 'png'],

'Image format.')

tf.app.flags.DEFINE_enum('label_format', 'png', ['png'],

'Segmentation label format.')

# A map from image format to expected data format.

_IMAGE_FORMAT_MAP = {

'jpg': 'jpeg',

'jpeg': 'jpeg',

'png': 'png',

}

class ImageReader(object):

"""Helper class that provides TensorFlow image coding utilities."""

def __init__(self, image_format='jpeg', channels=3):

"""Class constructor.

Args:

image_format: Image format. Only 'jpeg', 'jpg', or 'png' are supported.

channels: Image channels.

"""

with tf.Graph().as_default():

self._decode_data = tf.placeholder(dtype=tf.string)

self._image_format = image_format

self._session = tf.Session()

if self._image_format in ('jpeg', 'jpg'):

self._decode = tf.image.decode_jpeg(self._decode_data,

channels=channels)

elif self._image_format == 'png':

self._decode = tf.image.decode_png(self._decode_data,

channels=channels)

def read_image_dims(self, image_data):

"""Reads the image dimensions.

Args:

image_data: string of image data.

Returns:

image_height and image_width.

"""

image = self.decode_image(image_data)

return image.shape[:2]

def decode_image(self, image_data):

"""Decodes the image data string.

Args:

image_data: string of image data.

Returns:

Decoded image data.

Raises:

ValueError: Value of image channels not supported.

"""

image = self._session.run(self._decode,

feed_dict={self._decode_data: image_data})

if len(image.shape) != 3 or image.shape[2] not in (1, 3):

raise ValueError('The image channels not supported.')

return image

def _int64_list_feature(values):

"""Returns a TF-Feature of int64_list.

Args:

values: A scalar or list of values.

Returns:

A TF-Feature.

"""

if not isinstance(values, collections.Iterable):

values = [values]

return tf.train.Feature(int64_list=tf.train.Int64List(value=values))

def _bytes_list_feature(values):

"""Returns a TF-Feature of bytes.

Args:

values: A string.

Returns:

A TF-Feature.

"""

def norm2bytes(value):

return value.encode() if isinstance(value, str) and six.PY3 else value

return tf.train.Feature(

bytes_list=tf.train.BytesList(value=[norm2bytes(values)]))

def image_seg_to_tfexample(image_data, filename, height, width, seg_data):

"""Converts one image/segmentation pair to tf example.

Args:

image_data: string of image data.

filename: image filename.

height: image height.

width: image width.

seg_data: string of semantic segmentation data.

Returns:

tf example of one image/segmentation pair.

"""

return tf.train.Example(features=tf.train.Features(feature={

'image/encoded': _bytes_list_feature(image_data),

'image/filename': _bytes_list_feature(filename),

'image/format': _bytes_list_feature(_IMAGE_FORMAT_MAP[FLAGS.image_format]),

'image/height': _int64_list_feature(height),

'image/width': _int64_list_feature(width),

'image/channels': _int64_list_feature(3),

'image/segmentation/class/encoded': (_bytes_list_feature(seg_data)),

'image/segmentation/class/format': _bytes_list_feature(FLAGS.label_format),

}))

build_voc2012_data.py

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import math

import os.path

import sys

import build_data

from six.moves import range

#import tensorflow as tf

import tensorflow.compat.v1 as tf

FLAGS = tf.app.flags.FLAGS

#原始图像目录

tf.app.flags.DEFINE_string('image_folder',

'./MYFJ_data_v3/JPEGImages',

'Folder containing images.')

#单通道语义分割图像目录

tf.app.flags.DEFINE_string(

'semantic_segmentation_folder',

'./MYFJ_data_v3/SegmentationClassRaw',

'Folder containing semantic segmentation annotations.')

#训练和验证集的文件

tf.app.flags.DEFINE_string(

'list_folder',

'./MYFJ_data_v3/ImageSets/Segmentation',

'Folder containing lists for training and validation')

#输出tfrecord保存目录

tf.app.flags.DEFINE_string(

'output_dir',

'./MYFJ_data_v3/tfrecord',

'Path to save converted SSTable of TensorFlow examples.')

#图像分成几份

_NUM_SHARDS = 2

def _convert_dataset(dataset_split):

"""Converts the specified dataset split to TFRecord format.

Args:

dataset_split: The dataset split (e.g., train, test).

Raises:

RuntimeError: If loaded image and label have different shape.

"""

# 返回路径dataset_splits所对应的文件名,并且去除扩展名.txt

dataset = os.path.basename(dataset_split)[:-4]

sys.stdout.write('Processing ' + dataset)

# 打开索引文件,并生成索引文件名列表

filenames = [x.strip('\n') for x in open(dataset_split, 'r')]

num_images = len(filenames)# 数据集中图像的数量即索引列表的长度

num_per_shard = int(math.ceil(num_images / _NUM_SHARDS))# 将图像均匀分成_NUM_SHARDS份,输出_NUM_SHARDS个tfrecord文件

image_reader = build_data.ImageReader('jpeg', channels=3)

label_reader = build_data.ImageReader('png', channels=1)

for shard_id in range(_NUM_SHARDS):

output_filename = os.path.join(FLAGS.output_dir,

'%s-%05d-of-%05d.tfrecord' % (dataset, shard_id, _NUM_SHARDS))# _NUM_SHARDS个输出的tfrecord文件的输出目录

with tf.python_io.TFRecordWriter(output_filename) as tfrecord_writer:# 向output_filename文件夹写入tfrecord文件

start_idx = shard_id * num_per_shard# 每个tfrecord文件存储的起始图像索引

end_idx = min((shard_id + 1) * num_per_shard, num_images)# 每个tfrecord文件存储的结束图像索引;对于最后一个tfrecord文件,可能仅保存剩余的图像。

for i in range(start_idx, end_idx):

sys.stdout.write('\r>> Converting image %d/%d shard %d' % (i + 1, len(filenames), shard_id))

sys.stdout.flush()

# Read the image.

image_filename = os.path.join(FLAGS.image_folder, filenames[i] + '.' + FLAGS.image_format)# 图像路径

image_data = tf.gfile.GFile(image_filename, 'rb').read()#GFile函数读取图像

height, width = image_reader.read_image_dims(image_data)#获取图像的高宽

# Read the semantic segmentation annotation.

seg_filename = os.path.join(FLAGS.semantic_segmentation_folder,filenames[i] + '.' + FLAGS.label_format)# 标签图像路径

seg_data = tf.gfile.GFile(seg_filename, 'rb').read()# 读取标签

seg_height, seg_width = label_reader.read_image_dims(seg_data)

if height != seg_height or width != seg_width:

raise RuntimeError('Shape mismatched between image and label.')

# Convert to tf example.# 保存为tfrecord的example格式

example = build_data.image_seg_to_tfexample(image_data, filenames[i], height, width, seg_data)

tfrecord_writer.write(example.SerializeToString())# 用字符串形式存储example信息

sys.stdout.write('\n')

sys.stdout.flush()

def main(unused_argv):

# 查找匹配该路径名的文件(即训练集和验证集图像索引文件)

dataset_splits = tf.gfile.Glob(os.path.join(FLAGS.list_folder, '*.txt'))

#遍历训练集和验证集,依次转化为tfrecord格式文件

for dataset_split in dataset_splits:

_convert_dataset(dataset_split)

if __name__ == '__main__':

tf.app.run()

3.训练模型

(1)下载代码deeplab,同时下载预训练模型model

(2)修改代码

1)首先修改data_generator.py 文件,将自己的数据集加入到里面

_MYFJ = DatasetDescriptor(

splits_to_sizes={

#'train': 649, # num of samples in images/training

#'val': 73, # num of samples in images/validation

'train': 186, # num of samples in images/training

'val': 21, # num of samples in images/validation

},

num_classes=4,

ignore_label=255,

)

_DATASETS_INFORMATION = {

'cityscapes': _CITYSCAPES_INFORMATION,

'pascal_voc_seg': _PASCAL_VOC_SEG_INFORMATION,

'ade20k': _ADE20K_INFORMATION,

'myfj':_MYFJ,

}

2)不需要修改train_utils.py,在使用预训练权重时候,不加载该logit层,所以有人认为应该如下修改:

#exclude_list = ['global_step']

exclude_list = ['global_step','logits']

if not initialize_last_layer:

exclude_list.extend(last_layers)

但是事实上不需要,因为

if not initialize_last_layer:

exclude_list.extend(last_layers)

这句会将你设置的不用加载的层都排除在外,使其加入到exclude_list中;所以不用多此一举。

3)修改train .py

如果想在其他数据集上微调DeepLab时,有以下几种情况:

(a)想要使用预训练模型的所有权重:设置initialize_last_layer = True(在这种情况下,last_layers_contain_logits_only无关紧要)。

(b)只想使用网络主干网权重(即排除ASPP,解码器等):设置initialize_last_layer = False和last_layers_contain_logits_only = False。

(c)使用除logit层之外的所有训练好的权重(因为num_classes可能不同):设置initialize_last_layer = False和last_layers_contain_logits_only = True。

最终,我的设置是:

initialize_last_layer=False

last_layers_contain_logits_only=True

(3)运行train代码

python train.py \

--logtostderr \

--train_split="train" \ 可以选择train/val/trainval 不同的数据集

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

--train_crop_size=513 \

--train_crop_size=513\

--train_batch_size=8\

--training_number_of_steps=10000 \

--fine_tune_batch_norm=False \(由于batchsize小于12,将其改为false)

--tf_initial_checkpoint="加载与训练模型/model.ckpt" \

--train_logdir="保存训练的中间结果" \

--dataset_dir="生成的tfrecord的路径"

(4)运行eval.py,注意eval_crop_size大小与输入的图像相同

python eval.py \

--logtostderr \

--eval_split="val" \

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

--eval_crop_size=513 \

--eval_crop_size=513 \

--checkpoint_dir="${TRAIN_LOGDIR}" \

--eval_logdir="${EVAL_LOGDIR}" \

--dataset_dir="${DATASET}"

(5)运行vis.py,注意vis_crop_size大小与输入的图像相同

python vis.py \

--logtostderr \

--vis_split="val" \

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

--vis_crop_size="513,513" \

--checkpoint_dir="${TRAIN_LOGDIR}" \

--vis_logdir="${VIS_LOGDIR}" \

--dataset_dir="${PASCAL_DATASET}" \

--max_number_of_iterations=1

(6)运行export_model.py,生成frozen_inference_graph.pb文件,还是注意crop_size大小与输入图像相同

CKPT_PATH="${TRAIN_LOGDIR}/model.ckpt-${NUM_ITERATIONS}"

EXPORT_PATH="${EXPORT_DIR}/frozen_inference_graph.pb"

python export_model.py \

--logtostderr \

--checkpoint_path="${CKPT_PATH}" \

--export_path="${EXPORT_PATH}" \

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

--num_classes=21 \

--crop_size=513 \

--crop_size=513 \

--inference_scales=1.0