TensorFlow - ValueError: Dimensions must be equal, but are 256 and 228

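The code builds a 2-layer LSTM, once for the forward direction and once for the backward direction, giving a bidirectional two-layer LSTM network. It fails with the following error: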

ValueError: Dimensions must be equal, but are 256 and 228 for 'model/bidirectional_rnn/fw/fw/while/fw/multi_rnn_cell/cell_0/cell_0/basic_lstm_cell/MatMul_1' (op: 'MatMul') with input shapes: [?,256], [228,512].

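The error is a dimension mismatch. After looking through related material, I found that setting num_units to the embedding size in the following line makes it go away:

single_cell_fw = tf.contrib.rnn.BasicLSTMCell(num_units=size, state_is_tuple=True)

What puzzled me is that num_units, the output dimension of the LSTM, does not need to equal the embedding dimension. So why does it raise an error?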

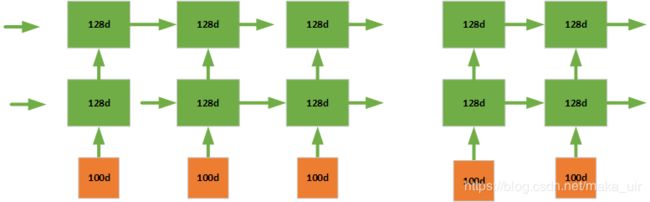

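These are the dimensions in my model: the word embedding is 100d and the LSTM has 128 units. In the definition above, the two LSTM layers share parameters and dimensions. The input to layer 1 is the previous hidden state concatenated with the embedding, 128d + 100d = 228. The input to layer 2 is the output of layer 1 concatenated with layer 2's own previous hidden state, 128d + 128d = 256. The [228,512] in the error message is the weight matrix built for layer 1: 228 input rows and 512 = 4 × 128 columns, one block of 128 per gate. Because both layers share that one matrix, the 228 expected by layer 1 conflicts with the 256 supplied to layer 2, which is exactly what the error reports: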

ValueError: Dimensions must be equal, but are 256 and 228
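The arithmetic above can be checked with a few lines of plain Python. This is only a sketch of the shape bookkeeping, not TensorFlow code: lstm_kernel_shape is a hypothetical helper, and it assumes the basic LSTM layout in which one matrix maps the concatenated [input, previous hidden state] to all four gates.

```python
def lstm_kernel_shape(input_depth, num_units):
    """Shape of the fused weight matrix of a basic LSTM cell (assumed layout).

    Rows: input depth plus hidden state depth (the two are concatenated).
    Columns: 4 * num_units, one block of num_units per gate.
    """
    return (input_depth + num_units, 4 * num_units)


embedding_size = 100  # word embedding width from the post
num_units = 128       # LSTM units from the post

# Layer 0 reads the embedding; layer 1 reads the output of layer 0.
layer0 = lstm_kernel_shape(embedding_size, num_units)
layer1 = lstm_kernel_shape(num_units, num_units)

print(layer0)  # (228, 512)
print(layer1)  # (256, 512)
```

The two layers need matrices with different row counts, so a single shared matrix cannot serve both unless the embedding size happens to equal num_units. That is also why setting num_units to the embedding size hides the problem.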

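So the fix is either to switch to the construction used by newer TensorFlow versions, or to define each LSTM layer separately instead of reusing one cell object in a loop.

Change it to this: single_cell_fw = [tf.nn.rnn_cell.LSTMCell(size) for size in [128, 128]]. The code below builds the two layers this way. For anything else, adjust the definition yourself, for example to give each layer a different number of units.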

with tf.name_scope('lstm_cell_fw'):
    # Previous version, which reused one cell object for every layer:
    # single_cell_fw = tf.contrib.rnn.GRUCell(size)
    # if use_lstm:
    #     single_cell_fw = tf.contrib.rnn.BasicLSTMCell(num_units=size, state_is_tuple=True)
    # cell_fw = single_cell_fw
    cell_fw = tf.nn.rnn_cell.LSTMCell(size)
    if num_layers > 1:
        # One separate cell object per layer, so each layer owns its weights.
        single_cell_fw = [tf.nn.rnn_cell.LSTMCell(size) for size in [128, 128]]
        cell_fw = tf.nn.rnn_cell.MultiRNNCell(single_cell_fw)
    if not forward_only and dropout_keep_prob < 1.0:
        cell_fw = tf.contrib.rnn.DropoutWrapper(cell_fw,
                                                input_keep_prob=dropout_keep_prob,
                                                output_keep_prob=dropout_keep_prob)

with tf.name_scope('lstm_cell_bw'):
    # Previous version, which reused one cell object for every layer:
    # single_cell_bw = tf.contrib.rnn.GRUCell(size)
    # if use_lstm:
    #     single_cell_bw = tf.contrib.rnn.BasicLSTMCell(num_units=size, state_is_tuple=True)
    # cell_bw = single_cell_bw
    cell_bw = tf.nn.rnn_cell.LSTMCell(size)
    if num_layers > 1:
        # The backward stack gets its own list of cells (the original reused the name single_cell_fw here).
        single_cell_bw = [tf.nn.rnn_cell.LSTMCell(size) for size in [128, 128]]
        cell_bw = tf.nn.rnn_cell.MultiRNNCell(single_cell_bw)
    if not forward_only and dropout_keep_prob < 1.0:
        cell_bw = tf.contrib.rnn.DropoutWrapper(cell_bw,
                                                input_keep_prob=dropout_keep_prob,
                                                output_keep_prob=dropout_keep_prob)

# init_state = cell.zero_state(batch_size, tf.float32)

with tf.name_scope('embeddings'):
    embedding = tf.get_variable("embedding", [sent_vocab_size, word_embedding_size])  # 3351 x 100
    self.embedded_inputs = tf.nn.embedding_lookup(embedding, self.inputs)

with tf.name_scope('one_hot'):
    self.one_hot_labels = tf.one_hot(self.labels, depth=self.label_vocab_size, name='one-hot-labels')

Reference: https://tensorflow.google.cn/api_docs/python/tf/nn/dynamic_rnn?hl=zh-cn

Reference: https://stackoverflow.com/questions/44615147/valueerror-trying-to-share-variable-rnn-multi-rnn-cell-cell-0-basic-lstm-cell-k

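Another cause of this error is the TensorFlow version.

In newer TensorFlow versions, the list of layers passed to MultiRNNCell is built with for _ in range(num_layers) instead of [single_cell] * num_layers. Each iteration has to construct a new cell; looping over one existing cell object still gives every layer the same cell:

if num_layers > 1:
    cell_bw = tf.contrib.rnn.MultiRNNCell(
        [tf.contrib.rnn.BasicLSTMCell(num_units=size, state_is_tuple=True)
         for _ in range(num_layers)])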
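Whether a list of layers really holds separate cells comes down to Python object identity, which can be checked without TensorFlow. In this minimal sketch, Cell is a hypothetical stand-in class used only to compare the three ways of building the list.

```python
class Cell:
    """Hypothetical stand-in for an RNN cell; only object identity matters here."""

    def __init__(self, num_units):
        self.num_units = num_units


num_layers = 2
single_cell = Cell(128)

# Old idiom: the list holds the same object num_layers times.
repeated = [single_cell] * num_layers
# A loop over an existing object also repeats that same object.
looped_same = [single_cell for _ in range(num_layers)]
# Constructing inside the loop yields a distinct object per layer.
looped_new = [Cell(128) for _ in range(num_layers)]

shares_repeated = repeated[0] is repeated[1]
shares_looped_same = looped_same[0] is looped_same[1]
shares_looped_new = looped_new[0] is looped_new[1]

print(shares_repeated, shares_looped_same, shares_looped_new)  # True True False
```

Only the last form gives each layer its own cell, and therefore its own weights.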

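The code that triggers the error is shown below. Note that the same single_cell_fw (and single_cell_bw) object is passed to MultiRNNCell for every layer: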

with tf.name_scope('lstm_cell_fw'):
    single_cell_fw = tf.contrib.rnn.GRUCell(size)
    if use_lstm:
        single_cell_fw = tf.contrib.rnn.BasicLSTMCell(num_units=size, state_is_tuple=True)
    cell_fw = single_cell_fw
    if num_layers > 1:
        cell_fw = tf.contrib.rnn.MultiRNNCell([single_cell_fw for _ in range(num_layers)])
    if not forward_only and dropout_keep_prob < 1.0:
        cell_fw = tf.contrib.rnn.DropoutWrapper(cell_fw,
                                                input_keep_prob=dropout_keep_prob,
                                                output_keep_prob=dropout_keep_prob)

with tf.name_scope('lstm_cell_bw'):
    single_cell_bw = tf.contrib.rnn.GRUCell(size)
    if use_lstm:
        single_cell_bw = tf.contrib.rnn.BasicLSTMCell(num_units=size, state_is_tuple=True)
    cell_bw = single_cell_bw
    if num_layers > 1:
        cell_bw = tf.contrib.rnn.MultiRNNCell([single_cell_bw for _ in range(num_layers)])
    if not forward_only and dropout_keep_prob < 1.0:
        cell_bw = tf.contrib.rnn.DropoutWrapper(cell_bw,
                                                input_keep_prob=dropout_keep_prob,
                                                output_keep_prob=dropout_keep_prob)

# init_state = cell.zero_state(batch_size, tf.float32)

with tf.name_scope('embeddings'):
    embedding = tf.get_variable("embedding", [sent_vocab_size, word_embedding_size])  # 3351 x 100
    self.embedded_inputs = tf.nn.embedding_lookup(embedding, self.inputs)

with tf.name_scope('one_hot'):
    self.one_hot_labels = tf.one_hot(self.labels, depth=self.label_vocab_size, name='one-hot-labels')
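The failure mode of this listing can be imitated in plain Python. The sketch below is a toy model, not TensorFlow: ToyCell and run_stack are hypothetical names, and the sketch assumes the cell creates its weight matrix lazily on the first call and then insists on the same input width afterwards.

```python
class ToyCell:
    """Hypothetical stand-in for a lazily built LSTM cell (not a TensorFlow class)."""

    def __init__(self, num_units):
        self.num_units = num_units
        self.kernel_shape = None  # created on the first call

    def __call__(self, input_depth):
        rows = input_depth + self.num_units  # concat of input and hidden state
        if self.kernel_shape is None:
            self.kernel_shape = (rows, 4 * self.num_units)
        elif self.kernel_shape[0] != rows:
            raise ValueError(
                "Dimensions must be equal, but are %d and %d"
                % (rows, self.kernel_shape[0]))
        return self.num_units  # output depth passed to the next layer


def run_stack(cells, input_depth):
    """Pass the input depth through the cells in order; return the final depth."""
    depth = input_depth
    for cell in cells:
        depth = cell(depth)
    return depth


# Same object for both layers, as in the failing listing.
shared = ToyCell(128)
try:
    run_stack([shared for _ in range(2)], 100)
    error_message = None
except ValueError as exc:
    error_message = str(exc)

# A new object per layer, as in the fixed version.
separate_depth = run_stack([ToyCell(128) for _ in range(2)], 100)

print(error_message)   # Dimensions must be equal, but are 256 and 228
print(separate_depth)  # 128
```

With one shared object, the matrix is sized for the 228-wide input of the first layer and then rejects the 256-wide input of the second. With one object per layer, each layer sizes its own matrix and the stack runs through.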