My Experience Building a Fully Distributed CentOS 7 + Hadoop 2.8 + Spark 2.1 Platform

First, some personal lessons and experience:

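1. Fully distributed vs. pseudo-distributed: a fully distributed platform runs on several separate physical hosts. I use "pseudo-distributed" loosely for a platform built on several virtual machines inside one physical host. Note that the Hadoop documentation uses the term more narrowly, for all daemons running on a single node; a cluster of virtual machines is configured exactly like a fully distributed one, it just shares one machine's hardware.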

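2. Choosing versions: pick mature releases, neither too old nor the very latest. Very old releases can throw exceptions, either from bugs in the platform itself or from incompatibility with current mainstream frameworks. The newest releases may differ in their configuration files, and there is not much material about them online yet. I therefore recommend a release that has been out for at least half a year, for example Hadoop 2.x: online material is plentiful, most warnings and errors have already been hit by someone else, and fixes are quick to find.

3. How to get through the setup smoothly: I suggest following a tutorial video step by step, since videos go into more detail. Blog posts work as references, but they sometimes skip steps, which can confuse beginners.

4. Handling errors: this was my first contact with Hadoop, Spark and the like, so I was a complete novice. I have no special debugging tricks; I kept reading the logs and searching Baidu, and that resolved nearly everything.

5. Handy tools: (1) An SSH client such as Xshell 5. It makes switching between Windows and the virtual machines easy, and shortcuts such as Ctrl+C, Ctrl+V and Tab save typing long commands by hand. (2) A faster yum mirror. Switching the yum repository to the Aliyun or 163 mirror makes packages quick to download; anything hosted on the mirror can be installed.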

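The main text follows.

Contents: 1. Download and configure CentOS 7, Hadoop, Spark, JDK, Scala, and Scala IDE for Eclipse

2. Start the fully distributed cluster

3. Implement the K-Means algorithm

Main text: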

1.1 Download and install CentOS 7

1. Download VMware Workstation 10.0 (other versions also work).

2. Install CentOS 7. Reference blog: http://www.osyunwei.com/archives/7829.html

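1.2 Download and install Hadoop 2.8.0

Reference blog: https://blog.csdn.net/pucao_cug/article/details/71698903

The main steps are: set up passwordless SSH login between the virtual machines --> install Hadoop --> edit the Hadoop configuration files --> start Hadoop --> test Hadoop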

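1.3 Download and install JDK 1.8.0_162

Reference blog: http://blog.csdn.net/pucao_cug/article/details/68948639 (that post uses Ubuntu, but installing the JDK on CentOS works the same way; I used the latest JDK release available at the time)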

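1.4 Download and install Spark

Reference blog: https://www.cnblogs.com/NextNight/p/6703362.html

1.5 Download and install Scala

This works like the JDK installation: download the archive from the official site, unpack it, and add it to the PATH.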

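1.6 Download and install Scala IDE

1. Download: http://scala-ide.org/. Scala IDE needs a graphical desktop on CentOS 7; one can be installed with # yum groupinstall "GNOME Desktop". Once the desktop is installed, unpack the archive and Scala IDE for Eclipse is ready to use.

2. Creating a new Scala project. Reference blog: https://blog.csdn.net/wangmuming/article/details/34079119

3. Configuring Scala IDE for Eclipse: to use packages such as Spark MLlib, the Spark dependency jars must be imported. Right-click your project --> Properties --> Java Build Path --> Add External JARs --> open the jars folder inside your Spark directory --> import all the .jar files (only Spark 2.0 and later have a jars folder).

4. The Scala IDE environment is now set up; run the demo: wordcount.

Reference blog: https://blog.csdn.net/hqwang4/article/details/72615125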
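The linked post contains the actual demo. As a rough illustration of what a word count computes, here is a minimal sketch on plain Scala collections, so it runs in the IDE without a cluster. `WordCountSketch`, `countWords` and the sample input are names made up for this example; a Spark version would typically express the same idea on an RDD with `flatMap`, `map` and `reduceByKey`.

```scala
object WordCountSketch {
  // Count how often each word occurs in a collection of lines.
  // Runs on plain Scala collections; no Spark or Hadoop required.
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split("\\s+"))   // split each line on whitespace
      .filter(_.nonEmpty)         // drop empty tokens caused by leading spaces
      .groupBy(identity)          // collect equal words together
      .map { case (word, occurrences) => word -> occurrences.size }

  def main(args: Array[String]): Unit = {
    // Hypothetical sample input, not taken from the referenced demo
    val lines = Seq("hello spark", "hello hadoop")
    countWords(lines).toSeq.sortBy(_._1).foreach {
      case (word, count) => println(word + "\t" + count)
    }
  }
}
```

If this runs from Run As > Scala Application, the IDE, the Scala library and the JDK are wired together correctly, independent of the Spark jars.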

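2.1 Start the cluster

The blog posts referenced above already cover how to configure and start the cluster, so I will not repeat that here.

2.2 Submit a job to the cluster

Reference blog: https://www.cnblogs.com/zengxiaoliang/p/6508330.html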

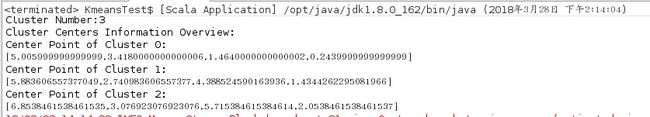

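3.1 Implement the K-Means algorithm

K-Means can be implemented in many ways. I chose the K-Means implementation in the Spark MLlib package and wrote the program in Scala. The raw data is Iris.csv (the iris flower data set). The main code is as follows: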

package test

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.mllib.clustering.{KMeans, KMeansModel}
import org.apache.spark.mllib.linalg.Vectors

object KmeansTest {

  def main(args: Array[String]): Unit = {
    // Connect to the standalone master and ship the application jar to the executors
    val conf = new SparkConf().setAppName("K-Means Clustering").setMaster("spark://192.168.1.110:7077")
    val sc = new SparkContext(conf)
    sc.addJar("/opt/KmeansTest.jar")

    // Each line of Iris.csv becomes a dense vector of its numeric fields
    val rawTrainingData = sc.textFile("hdfs://192.168.1.110:9000/Hadoop/Input/Iris.csv")
    val parsedTrainingData = rawTrainingData.map(line => {
      Vectors.dense(line.split(",").map(_.trim).filter(!"".equals(_)).map(_.toDouble))
    }).cache()

    // Cluster the data into three classes using KMeans
    val numClusters = 3
    val numIterations = 40
    val runTimes = 3 // passed as `runs`, which is deprecated in Spark 2.1 (no effect since 2.0)
    var clusterIndex: Int = 0
    val clusters: KMeansModel = KMeans.train(parsedTrainingData, numClusters, numIterations, runTimes)

    println("Cluster Number:" + clusters.clusterCenters.length)
    println("Cluster Centers Information Overview:")
    clusters.clusterCenters.foreach(x => {
      println("Center Point of Cluster " + clusterIndex + ":")
      println(x)
      clusterIndex += 1
    })

    // Check which cluster each data point belongs to, based on the clustering result.
    // The same file is read again, so the "test" data is the training data.
    val rawTestData = sc.textFile("hdfs://192.168.1.110:9000/Hadoop/Input/Iris.csv")
    val parsedTestData = rawTestData.map(line => {
      Vectors.dense(line.split(",").map(_.trim).filter(!"".equals(_)).map(_.toDouble))
    })

    // collect() brings all rows to the driver; fine for the 150 rows of Iris
    parsedTestData.collect().foreach(testDataLine => {
      val predictedClusterIndex: Int = clusters.predict(testDataLine)
      println("The data " + testDataLine.toString + " belongs to cluster " + predictedClusterIndex)
    })

    println("Spark MLlib K-means clustering test finished.")
  }
}
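The parsing step above calls `toDouble` on every field, so it only works when the file holds nothing but numbers. Many copies of Iris.csv also carry a header row or a species column, which would make the job fail with a `NumberFormatException`. The following is a minimal sketch of a more defensive parser in plain Scala; `IrisLineParser` and `parseFeatures` are hypothetical names, and the decision to skip a non-numeric line entirely is an assumption of this example, not something the program above does.

```scala
import scala.util.Try

object IrisLineParser {
  // Parse one CSV line into its numeric fields.
  // Returns None for an empty line or when any field is not a number
  // (for example a header row or a species label), so the caller can skip it.
  def parseFeatures(line: String): Option[Array[Double]] = {
    val fields = line.split(",").map(_.trim).filter(_.nonEmpty)
    if (fields.isEmpty) None
    else Try(fields.map(_.toDouble)).toOption
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical sample lines: one data row, one header row, one empty line
    val lines = Seq("5.1,3.5,1.4,0.2", "sepal_length,sepal_width", "")
    lines
      .flatMap(l => parseFeatures(l).toList) // keep only the lines that parsed
      .foreach(f => println(f.mkString("[", ",", "]")))
  }
}
```

In the Spark job the same function could replace the inline `map`, for instance by calling it inside `flatMap` and wrapping each result with `Vectors.dense`; that variant is untested here.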

Running KmeansTest with Run As > Scala Application produces the following result:

The data [5.1,3.5,1.4,0.2] belongs to cluster 0

The data [4.9,3.0,1.4,0.2] belongs to cluster 0

The data [4.7,3.2,1.3,0.2] belongs to cluster 0

The data [4.6,3.1,1.5,0.2] belongs to cluster 0

The data [5.0,3.6,1.4,0.2] belongs to cluster 0

The data [5.4,3.9,1.7,0.4] belongs to cluster 0

The data [4.6,3.4,1.4,0.3] belongs to cluster 0

The data [5.0,3.4,1.5,0.2] belongs to cluster 0

The data [4.4,2.9,1.4,0.2] belongs to cluster 0

The data [4.9,3.1,1.5,0.1] belongs to cluster 0

The data [5.4,3.7,1.5,0.2] belongs to cluster 0

The data [4.8,3.4,1.6,0.2] belongs to cluster 0

The data [4.8,3.0,1.4,0.1] belongs to cluster 0

The data [4.3,3.0,1.1,0.1] belongs to cluster 0

The data [5.8,4.0,1.2,0.2] belongs to cluster 0

The data [5.7,4.4,1.5,0.4] belongs to cluster 0

The data [5.4,3.9,1.3,0.4] belongs to cluster 0

The data [5.1,3.5,1.4,0.3] belongs to cluster 0

The data [5.7,3.8,1.7,0.3] belongs to cluster 0

The data [5.1,3.8,1.5,0.3] belongs to cluster 0

The data [5.4,3.4,1.7,0.2] belongs to cluster 0

The data [5.1,3.7,1.5,0.4] belongs to cluster 0

The data [4.6,3.6,1.0,0.2] belongs to cluster 0

The data [5.1,3.3,1.7,0.5] belongs to cluster 0

The data [4.8,3.4,1.9,0.2] belongs to cluster 0

The data [5.0,3.0,1.6,0.2] belongs to cluster 0

The data [5.0,3.4,1.6,0.4] belongs to cluster 0

The data [5.2,3.5,1.5,0.2] belongs to cluster 0

The data [5.2,3.4,1.4,0.2] belongs to cluster 0

The data [4.7,3.2,1.6,0.2] belongs to cluster 0

The data [4.8,3.1,1.6,0.2] belongs to cluster 0

The data [5.4,3.4,1.5,0.4] belongs to cluster 0

The data [5.2,4.1,1.5,0.1] belongs to cluster 0

The data [5.5,4.2,1.4,0.2] belongs to cluster 0

The data [4.9,3.1,1.5,0.1] belongs to cluster 0

The data [5.0,3.2,1.2,0.2] belongs to cluster 0

The data [5.5,3.5,1.3,0.2] belongs to cluster 0

The data [4.9,3.1,1.5,0.1] belongs to cluster 0

The data [4.4,3.0,1.3,0.2] belongs to cluster 0

The data [5.1,3.4,1.5,0.2] belongs to cluster 0

The data [5.0,3.5,1.3,0.3] belongs to cluster 0

The data [4.5,2.3,1.3,0.3] belongs to cluster 0

The data [4.4,3.2,1.3,0.2] belongs to cluster 0

The data [5.0,3.5,1.6,0.6] belongs to cluster 0

The data [5.1,3.8,1.9,0.4] belongs to cluster 0

The data [4.8,3.0,1.4,0.3] belongs to cluster 0

The data [5.1,3.8,1.6,0.2] belongs to cluster 0

The data [4.6,3.2,1.4,0.2] belongs to cluster 0

The data [5.3,3.7,1.5,0.2] belongs to cluster 0

The data [5.0,3.3,1.4,0.2] belongs to cluster 0

The data [7.0,3.2,4.7,1.4] belongs to cluster 2

The data [6.4,3.2,4.5,1.5] belongs to cluster 1

The data [6.9,3.1,4.9,1.5] belongs to cluster 2

The data [5.5,2.3,4.0,1.3] belongs to cluster 1

The data [6.5,2.8,4.6,1.5] belongs to cluster 1

The data [5.7,2.8,4.5,1.3] belongs to cluster 1

The data [6.3,3.3,4.7,1.6] belongs to cluster 1

The data [4.9,2.4,3.3,1.0] belongs to cluster 1

The data [6.6,2.9,4.6,1.3] belongs to cluster 1

The data [5.2,2.7,3.9,1.4] belongs to cluster 1

The data [5.0,2.0,3.5,1.0] belongs to cluster 1

The data [5.9,3.0,4.2,1.5] belongs to cluster 1

The data [6.0,2.2,4.0,1.0] belongs to cluster 1

The data [6.1,2.9,4.7,1.4] belongs to cluster 1

The data [5.6,2.9,3.6,1.3] belongs to cluster 1

The data [6.7,3.1,4.4,1.4] belongs to cluster 1

The data [5.6,3.0,4.5,1.5] belongs to cluster 1

The data [5.8,2.7,4.1,1.0] belongs to cluster 1

The data [6.2,2.2,4.5,1.5] belongs to cluster 1

The data [5.6,2.5,3.9,1.1] belongs to cluster 1

The data [5.9,3.2,4.8,1.8] belongs to cluster 1

The data [6.1,2.8,4.0,1.3] belongs to cluster 1

The data [6.3,2.5,4.9,1.5] belongs to cluster 1

The data [6.1,2.8,4.7,1.2] belongs to cluster 1

The data [6.4,2.9,4.3,1.3] belongs to cluster 1

The data [6.6,3.0,4.4,1.4] belongs to cluster 1

The data [6.8,2.8,4.8,1.4] belongs to cluster 1

The data [6.7,3.0,5.0,1.7] belongs to cluster 2

The data [6.0,2.9,4.5,1.5] belongs to cluster 1

The data [5.7,2.6,3.5,1.0] belongs to cluster 1

The data [5.5,2.4,3.8,1.1] belongs to cluster 1

The data [5.5,2.4,3.7,1.0] belongs to cluster 1

The data [5.8,2.7,3.9,1.2] belongs to cluster 1

The data [6.0,2.7,5.1,1.6] belongs to cluster 1

The data [5.4,3.0,4.5,1.5] belongs to cluster 1

The data [6.0,3.4,4.5,1.6] belongs to cluster 1

The data [6.7,3.1,4.7,1.5] belongs to cluster 1

The data [6.3,2.3,4.4,1.3] belongs to cluster 1

The data [5.6,3.0,4.1,1.3] belongs to cluster 1

The data [5.5,2.5,4.0,1.3] belongs to cluster 1

The data [5.5,2.6,4.4,1.2] belongs to cluster 1

The data [6.1,3.0,4.6,1.4] belongs to cluster 1

The data [5.8,2.6,4.0,1.2] belongs to cluster 1

The data [5.0,2.3,3.3,1.0] belongs to cluster 1

The data [5.6,2.7,4.2,1.3] belongs to cluster 1

The data [5.7,3.0,4.2,1.2] belongs to cluster 1

The data [5.7,2.9,4.2,1.3] belongs to cluster 1

The data [6.2,2.9,4.3,1.3] belongs to cluster 1

The data [5.1,2.5,3.0,1.1] belongs to cluster 1

The data [5.7,2.8,4.1,1.3] belongs to cluster 1

The data [6.3,3.3,6.0,2.5] belongs to cluster 2

The data [5.8,2.7,5.1,1.9] belongs to cluster 1

The data [7.1,3.0,5.9,2.1] belongs to cluster 2

The data [6.3,2.9,5.6,1.8] belongs to cluster 2

The data [6.5,3.0,5.8,2.2] belongs to cluster 2

The data [7.6,3.0,6.6,2.1] belongs to cluster 2

The data [4.9,2.5,4.5,1.7] belongs to cluster 1

The data [7.3,2.9,6.3,1.8] belongs to cluster 2

The data [6.7,2.5,5.8,1.8] belongs to cluster 2

The data [7.2,3.6,6.1,2.5] belongs to cluster 2

The data [6.5,3.2,5.1,2.0] belongs to cluster 2

The data [6.4,2.7,5.3,1.9] belongs to cluster 2

The data [6.8,3.0,5.5,2.1] belongs to cluster 2

The data [5.7,2.5,5.0,2.0] belongs to cluster 1

The data [5.8,2.8,5.1,2.4] belongs to cluster 1

The data [6.4,3.2,5.3,2.3] belongs to cluster 2

The data [6.5,3.0,5.5,1.8] belongs to cluster 2

The data [7.7,3.8,6.7,2.2] belongs to cluster 2

The data [7.7,2.6,6.9,2.3] belongs to cluster 2

The data [6.0,2.2,5.0,1.5] belongs to cluster 1

The data [6.9,3.2,5.7,2.3] belongs to cluster 2

The data [5.6,2.8,4.9,2.0] belongs to cluster 1

The data [7.7,2.8,6.7,2.0] belongs to cluster 2

The data [6.3,2.7,4.9,1.8] belongs to cluster 1

The data [6.7,3.3,5.7,2.1] belongs to cluster 2

The data [7.2,3.2,6.0,1.8] belongs to cluster 2

The data [6.2,2.8,4.8,1.8] belongs to cluster 1

The data [6.1,3.0,4.9,1.8] belongs to cluster 1

The data [6.4,2.8,5.6,2.1] belongs to cluster 2

The data [7.2,3.0,5.8,1.6] belongs to cluster 2

The data [7.4,2.8,6.1,1.9] belongs to cluster 2

The data [7.9,3.8,6.4,2.0] belongs to cluster 2

The data [6.4,2.8,5.6,2.2] belongs to cluster 2

The data [6.3,2.8,5.1,1.5] belongs to cluster 1

The data [6.1,2.6,5.6,1.4] belongs to cluster 2

The data [7.7,3.0,6.1,2.3] belongs to cluster 2

The data [6.3,3.4,5.6,2.4] belongs to cluster 2

The data [6.4,3.1,5.5,1.8] belongs to cluster 2

The data [6.0,3.0,4.8,1.8] belongs to cluster 1

The data [6.9,3.1,5.4,2.1] belongs to cluster 2

The data [6.7,3.1,5.6,2.4] belongs to cluster 2

The data [6.9,3.1,5.1,2.3] belongs to cluster 2

The data [5.8,2.7,5.1,1.9] belongs to cluster 1

The data [6.8,3.2,5.9,2.3] belongs to cluster 2

The data [6.7,3.3,5.7,2.5] belongs to cluster 2

The data [6.7,3.0,5.2,2.3] belongs to cluster 2

The data [6.3,2.5,5.0,1.9] belongs to cluster 1

The data [6.5,3.0,5.2,2.0] belongs to cluster 2

The data [6.2,3.4,5.4,2.3] belongs to cluster 2

The data [5.9,3.0,5.1,1.8] belongs to cluster 1

Spark MLlib K-means clustering test finished.
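To make the output above easier to interpret, here is a minimal single-machine sketch of the basic K-Means loop (Lloyd's algorithm): assign every point to its nearest center, then move each center to the mean of its points. This is not MLlib's implementation, which runs distributed and by default picks starting centers with k-means||; `KMeansSketch` and all of its functions are hypothetical names, and the starting centers are chosen by hand purely for illustration.

```scala
object KMeansSketch {
  type Point = Array[Double]

  // Squared Euclidean distance between two points of equal dimension.
  def squaredDistance(a: Point, b: Point): Double =
    a.zip(b).map { case (x, y) => (x - y) * (x - y) }.sum

  // Index of the center closest to the point (what "predict" reports).
  def closest(centers: Seq[Point], p: Point): Int =
    centers.indices.minBy(i => squaredDistance(centers(i), p))

  // One iteration: assign points to their nearest center, then move each
  // center to the mean of its points. An empty cluster keeps its old center.
  def step(points: Seq[Point], centers: Seq[Point]): Seq[Point] = {
    val groups = points.groupBy(p => closest(centers, p))
    centers.indices.map { i =>
      groups.get(i) match {
        case Some(members) =>
          val dim = members.head.length
          Array.tabulate(dim)(d => members.map(_(d)).sum / members.size)
        case None => centers(i)
      }
    }
  }

  // Run a fixed number of iterations from the given starting centers.
  def run(points: Seq[Point], initial: Seq[Point], iterations: Int): Seq[Point] =
    (1 to iterations).foldLeft(initial)((cs, _) => step(points, cs))

  def main(args: Array[String]): Unit = {
    // Four rows copied from the output above; two clusters instead of three
    val points = Seq(
      Array(5.1, 3.5, 1.4, 0.2), Array(4.9, 3.0, 1.4, 0.2),
      Array(6.3, 3.3, 6.0, 2.5), Array(7.1, 3.0, 5.9, 2.1))
    val centers = run(points, Seq(points(0), points(2)), iterations = 5)
    points.foreach { p =>
      println("The data " + p.mkString("[", ",", "]") + " belongs to cluster " + closest(centers, p))
    }
  }
}
```

Cluster indices carry no meaning of their own: which group ends up as 0, 1 or 2 depends on the starting centers, so another run of the Spark job may label the same groups differently.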

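At this point the whole distributed cluster is set up and the K-Means algorithm runs on it.

PS: I ran into many problems along the way; I will record them in detail in the next post.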