13 OpenStack Ussuri: Connecting to a Ceph Nautilus Cluster (CentOS 8)


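- 1 Preface

- 2 Operations on the OpenStack cluster

- 3 Operations on the Ceph cluster

- 3.1 Create the pools the OpenStack cluster will use

- 3.2 Ceph authorization setup

- 3.2.1 Create users

- 3.2.2 Push the client.glance and client.cinder keys

- 3.2.3 libvirt secret

- 4 Integrating Glance with Ceph

- 4.1 Configure glance-api.conf

- 5 Integrating Cinder with Ceph

- 5.1 Configure cinder.conf

- 5.2 Create a volume

- 6 Integrating Nova with Ceph

- 6.1 Configure ceph.conf

- 6.2 Configure nova.conf

- 6.3 Configure live migration

- 6.3.1 Edit /etc/libvirt/libvirtd.conf

- 6.3.2 Edit /etc/sysconfig/libvirtd

- 6.3.3 Set up passwordless SSH between compute nodes

- 6.3.4 Configure iptables - ==not needed here because iptables was disabled earlier==

- 6.3.5 Restart services

- 6.4 Verify the integration

- 6.4.1 Create a bootable volume backed by Ceph

- 6.4.2 Boot an instance from Ceph RBD

- 6.4.3 Live-migrate an RBD-backed instance

- X. Problems encountered along the way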

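1 Preface

Reference: "Configuring Ceph for use with OpenStack"

# This article is adapted from a post by Netonline and explains the configuration needed to connect OpenStack to a Ceph cluster.

In an OpenStack environment, data storage falls into two categories: ephemeral storage and persistent storage.

Ephemeral storage: provided mainly by the local file system. It backs the local system disk and temporary data disks of Nova instances, and holds the system images uploaded to Glance.

Persistent storage: consists mainly of block storage provided by Cinder and object storage provided by Swift. Cinder block storage is the most widely used and is usually attached to instances as cloud disks.

The three OpenStack projects that need data storage are Nova (instance disk files), Glance (shared template images), and Cinder (block storage).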

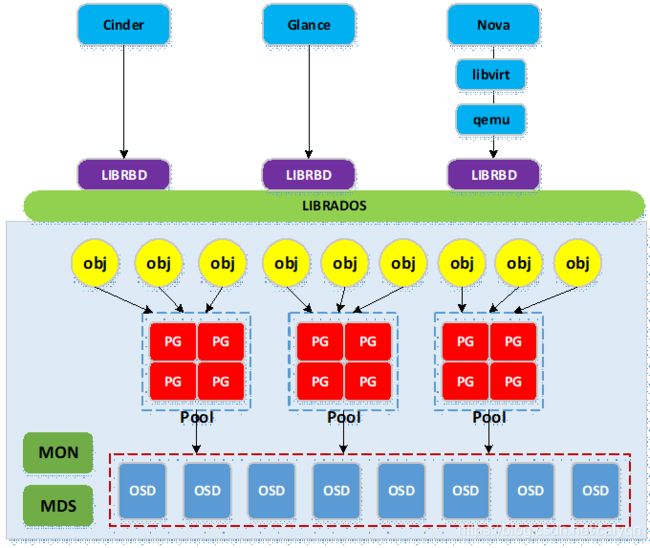

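The diagram shows the logical path by which Cinder, Glance, and Nova access the Ceph cluster:

Integrating Ceph with OpenStack relies mainly on Ceph's RBD service. The bottom layer of Ceph is the RADOS storage cluster, which Ceph accesses through the librados library.

The OpenStack project clients call librbd, which in turn calls librados to reach the underlying RADOS cluster.

In practice, Nova uses the libvirt driver so that libvirt and QEMU call librbd, while Cinder and Glance call librbd directly.

Data written to the Ceph cluster is striped into multiple objects. Each object is mapped by a hash function to a placement group (PG), and PGs are grouped into pools. The CRUSH algorithm then maps each PG approximately uniformly onto the physical storage devices, the OSDs (an OSD is a physical storage device backed by a file system such as xfs or ext4).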

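2 Operations on the OpenStack cluster

# Install the Ceph Nautilus repository package on all OpenStack controller and compute nodes. CentOS 8 ships one by default, but the client version must exactly match the version of the Ceph cluster you connect to.

# To install the default repository package: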

yum install centos-release-ceph-nautilus.noarch

# I am running Nautilus 14.2.10, so I use the following repository definition instead:

[Ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el8/$basearch

enabled=1

gpgcheck=0

type=rpm-md

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el8/noarch

enabled=1

gpgcheck=0

type=rpm-md

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el8/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

# Nodes running glance-api need python3-rbd.

# glance-api runs on the three controller nodes here, so all three need it

yum install python3-rbd -y

# Nodes running cinder-volume and nova-compute need ceph-common.

# Here cinder-volume and nova-compute run on the two compute (storage) nodes

yum install ceph-common -y

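3 Operations on the Ceph cluster

# For building the Ceph cluster, my "OpenStack Ussuri Cluster Deployment Tutorial - CentOS 8" offers two options; choose whichever fits.

# Add host entries on both the Ceph and OpenStack nodes

# vim /etc/hosts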

172.16.1.131 ceph131

172.16.1.132 ceph132

172.16.1.133 ceph133

172.16.1.160 controller160

172.16.1.161 controller161

172.16.1.162 controller162

172.16.1.168 controller168

172.16.1.163 compute163

172.16.1.164 compute164

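3.1 Create the pools the OpenStack cluster will use

# Ceph stores data in pools. A pool is a logical grouping of placement groups (PGs); the objects in each PG are mapped to different OSDs, so a pool is spread across the whole cluster.

# Different kinds of data can share one pool, but that makes it hard to separate and manage data per client, so the usual practice is one pool per client.

# Create one pool each for Cinder, Nova, and Glance, named volumes, vms, and images.

# The volumes pool holds persistent storage, vms is the ephemeral backend for instances, and images stores images.

# The PG count should be calculated rather than guessed; the calculator on the official Ceph site can be used.

# Estimating the PG count for a single pool: PGs per pool = (number of OSDs * 100) / maximum replica count / number of pools, rounded to the nearest power of two.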
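As a rough illustration of the formula above, the following sketch computes a per-pool PG estimate in shell. The function name `pg_count` is hypothetical, and rounding ties upward is an assumption of this sketch; treat the result as a starting point and cross-check it against the official calculator.

```shell
# pg_count OSDS REPLICAS POOLS
# Applies (OSDs * 100) / replicas / pools with integer division,
# then rounds to the nearest power of two (ties round up: an assumption).
pg_count() {
  local osds="$1" replicas="$2" pools="$3"
  local raw lower upper
  raw=$(( osds * 100 / replicas / pools ))
  # Find the largest power of two that does not exceed the raw value.
  lower=1
  while [ $(( lower * 2 )) -le "$raw" ]; do
    lower=$(( lower * 2 ))
  done
  upper=$(( lower * 2 ))
  # Pick whichever neighbouring power of two is closer.
  if [ $(( raw - lower )) -lt $(( upper - raw )) ]; then
    echo "$lower"
  else
    echo "$upper"
  fi
}

# 3 OSDs, 3 replicas, 3 pools: raw value 33
pg_count 3 3 3   # prints 32
```

The more pools share the same OSDs, the smaller each pool's share becomes, which is why the pool count appears in the divisor.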

# On the Ceph cluster, create the pools

[root@ceph131 ~]# ceph osd pool create volumes 16 16 replicated

pool 'volumes' created

[root@ceph131 ~]# ceph osd pool create vms 16 16 replicated

pool 'vms' created

[root@ceph131 ~]# ceph osd pool create images 16 16 replicated

pool 'images' created

[root@ceph131 ~]# ceph osd lspools

1 cephfs_data

2 cephfs_metadata

3 rbd_storage

4 .rgw.root

5 default.rgw.control

6 default.rgw.meta

7 default.rgw.log

10 volumes

11 vms

12 images

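3.2 Ceph authorization setup

3.2.1 Create users

# Ceph enables cephx authentication by default, so new users must be created and authorized for the Nova/Cinder and Glance clients.

# On the admin node, create the client.cinder and client.glance users for the nodes running cinder-volume and glance-api respectively, and set their permissions.

# Permissions are granted per pool; the pool names match the pools created above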

[root@ceph131 ~]# ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'

[client.cinder]

key = AQDYA/9eFE4vIBAArdMpCCNxKxLUpSKaKc6nDg==

[root@ceph131 ~]# ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'

[client.glance]

key = AQDkA/9eCaGUERAAxKTqRS5Vk7iuLugYnEP5BQ==

3.2.2 Push the client.glance and client.cinder keys

# Set up passwordless SSH from the Ceph admin node

[root@ceph131 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:CsvXYKm8mRzasMFwgWVLx5LvvfnPrRc5S1wSb6kPytM root@ceph131

The key's randomart image is:

+---[RSA 2048]----+

| +o. |

| =oo. . |

|. oo o . |

| .. . . = |

|. ....+ S . * |

| + o.=.+ O |

| + * oo.. + * |

| B *o .+.E . |

| o * ...++. |

+----[SHA256]-----+

# Copy the public key to each OpenStack node

ssh-copy-id root@controller160

ssh-copy-id root@controller161

ssh-copy-id root@controller162

ssh-copy-id root@compute163

ssh-copy-id root@compute164

# nova-compute and cinder-volume run on the same nodes here, so the step does not need repeating.

# Push the keyring generated for client.glance to the nodes running glance-api

[root@ceph131 ~]# ceph auth get-or-create client.glance | tee /etc/ceph/ceph.client.glance.keyring


[root@ceph131 ~]# ceph auth get-or-create client.glance | ssh root@controller160 tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQDkA/9eCaGUERAAxKTqRS5Vk7iuLugYnEP5BQ==

[root@ceph131 ~]# ceph auth get-or-create client.glance | ssh root@controller161 tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQDkA/9eCaGUERAAxKTqRS5Vk7iuLugYnEP5BQ==

[root@ceph131 ~]# ceph auth get-or-create client.glance | ssh root@controller162 tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQDkA/9eCaGUERAAxKTqRS5Vk7iuLugYnEP5BQ==

# Then change the owner and group of the keyring file

# chown glance:glance /etc/ceph/ceph.client.glance.keyring

ssh root@controller160 chown glance:glance /etc/ceph/ceph.client.glance.keyring

ssh root@controller161 chown glance:glance /etc/ceph/ceph.client.glance.keyring

ssh root@controller162 chown glance:glance /etc/ceph/ceph.client.glance.keyring

# Push the keyring generated for client.cinder to the nodes running cinder-volume

[root@ceph131 ceph]# ceph auth get-or-create client.cinder | ssh root@compute163 tee /etc/ceph/ceph.client.cinder.keyring

[client.cinder]

key = AQDYA/9eFE4vIBAArdMpCCNxKxLUpSKaKc6nDg==

[root@ceph131 ceph]# ceph auth get-or-create client.cinder | ssh root@compute164 tee /etc/ceph/ceph.client.cinder.keyring

[client.cinder]

key = AQDYA/9eFE4vIBAArdMpCCNxKxLUpSKaKc6nDg==

# Then change the owner and group of the keyring file

ssh root@compute163 chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

ssh root@compute164 chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring
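The per-node push and chown steps above are repetitive, so they can be generated with a loop. The sketch below only prints the commands for review and does not run them; the function name `print_keyring_push` is hypothetical, and the node lists are the ones used in this article.

```shell
# print_keyring_push CLIENT OWNER NODE...
# Prints (does not execute) the commands that copy a client keyring
# to each node and fix its ownership, mirroring the manual steps.
print_keyring_push() {
  local client="$1" owner="$2"
  shift 2
  local keyring="/etc/ceph/ceph.client.${client}.keyring"
  local node
  for node in "$@"; do
    echo "ceph auth get-or-create client.${client} | ssh root@${node} tee ${keyring}"
    echo "ssh root@${node} chown ${owner}:${owner} ${keyring}"
  done
}

print_keyring_push glance glance controller160 controller161 controller162
print_keyring_push cinder cinder compute163 compute164
```

After checking the printed commands on the Ceph admin node, the output can be piped into a shell to execute them.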

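3.2.3 libvirt secret

# Nodes running nova-compute must store the client.cinder key in libvirt; when a Ceph-backed Cinder volume is attached to an instance, libvirt uses this key to access the Ceph cluster.

# From the admin node, push the client.cinder key file to the compute (storage) nodes. The file is temporary and can be deleted once the key has been added to libvirt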

[root@ceph131 ceph]# ceph auth get-key client.cinder | ssh root@compute164 tee /etc/ceph/client.cinder.key

AQDYA/9eFE4vIBAArdMpCCNxKxLUpSKaKc6nDg==

[root@ceph131 ceph]# ceph auth get-key client.cinder | ssh root@compute163 tee /etc/ceph/client.cinder.key

AQDYA/9eFE4vIBAArdMpCCNxKxLUpSKaKc6nDg==

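# On the compute (storage) nodes, add the key to libvirt; compute163 is the example.

# First generate one UUID. All compute (storage) nodes can share it, so the other nodes skip this generation step.

# The same UUID is used later in nova.conf; keep it consistent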

[root@compute163 ~]# uuidgen

cb26bb6c-2a84-45c2-8187-fa94b81dd53d

[root@compute163 ~]# cd /etc/ceph/

[root@compute163 ceph]# touch secret.xml

[root@compute163 ceph]# vim secret.xml

<secret ephemeral='no' private='no'>

<uuid>cb26bb6c-2a84-45c2-8187-fa94b81dd53d</uuid>

<usage type='ceph'>

<name>client.cinder secret</name>

</usage>

</secret>

[root@compute163 ceph]# virsh secret-define --file secret.xml

Secret cb26bb6c-2a84-45c2-8187-fa94b81dd53d created

[root@compute163 ceph]# virsh secret-set-value --secret cb26bb6c-2a84-45c2-8187-fa94b81dd53d --base64 $(cat /etc/ceph/client.cinder.key)

Secret value set
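Because the same UUID has to appear in secret.xml, cinder.conf, and nova.conf, generating the XML from a single variable helps avoid copy-paste mismatches. A minimal sketch, assuming the XML layout shown above; the function name `write_libvirt_secret_xml` and the output path are hypothetical.

```shell
# write_libvirt_secret_xml UUID [OUTFILE]
# Writes a libvirt secret definition for the client.cinder key.
write_libvirt_secret_xml() {
  local uuid="$1" out="${2:-secret.xml}"
  cat > "$out" <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${uuid}</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

# Example with the UUID used in this article. On a fresh setup, run uuidgen
# once and reuse that value on every compute node.
write_libvirt_secret_xml cb26bb6c-2a84-45c2-8187-fa94b81dd53d /tmp/secret.xml
```

The resulting file is then passed to `virsh secret-define --file` exactly as in the manual steps.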

# Push ceph.conf to the OpenStack nodes

[root@ceph131 ceph]# scp ceph.conf root@controller160:/etc/ceph/

ceph.conf 100% 514 407.7KB/s 00:00

[root@ceph131 ceph]# scp ceph.conf root@controller161:/etc/ceph/

ceph.conf 100% 514 631.5KB/s 00:00

[root@ceph131 ceph]# scp ceph.conf root@controller162:/etc/ceph/

ceph.conf 100% 514 218.3KB/s 00:00

[root@ceph131 ceph]# scp ceph.conf root@compute163:/etc/ceph/

ceph.conf 100% 514 2.3KB/s 00:00

[root@ceph131 ceph]# scp ceph.conf root@compute164:/etc/ceph/

ceph.conf 100% 514 3.6KB/s 00:00

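4 Integrating Glance with Ceph

4.1 Configure glance-api.conf

# Edit glance-api.conf on every node running glance-api (the three controller nodes); controller160 is the example

# Only the settings related to Glance and Ceph integration are listed

# vim /etc/glance/glance-api.conf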

[DEFAULT]

# Enable copy-on-write cloning of images

show_image_direct_url = True

[glance_store]

stores = rbd

default_store = rbd

rbd_store_chunk_size = 8

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

#stores = file,http

#default_store = file

#filesystem_store_datadir = /var/lib/glance/images/

# After changing the configuration, restart the service

systemctl restart openstack-glance-api.service

# Upload a CirrOS image

[root@controller160 ~]# glance image-create --name "rbd_cirros-05" \

--file cirros-0.4.0-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--visibility=public

+------------------+----------------------------------------------------------------------------------+

| Property | Value |

+------------------+----------------------------------------------------------------------------------+

| checksum | 443b7623e27ecf03dc9e01ee93f67afe |

| container_format | bare |

| created_at | 2020-07-06T15:25:40Z |

| direct_url | rbd://76235629-6feb-4f0c-a106-4be33d485535/images/f6da37cd-449a-436c-b321-e0c1c0 |

| | 6761d8/snap |

| disk_format | qcow2 |

| id | f6da37cd-449a-436c-b321-e0c1c06761d8 |

| min_disk | 0 |

| min_ram | 0 |

| name | rbd_cirros-05 |

| os_hash_algo | sha512 |

| os_hash_value | 6513f21e44aa3da349f248188a44bc304a3653a04122d8fb4535423c8e1d14cd6a153f735bb0982e |

| | 2161b5b5186106570c17a9e58b64dd39390617cd5a350f78 |

| os_hidden | False |

| owner | d3dda47e8c354d86b17085f9e382948b |

| protected | False |

| size | 12716032 |

| status | active |

| tags | [] |

| updated_at | 2020-07-06T15:26:06Z |

| virtual_size | Not available |

| visibility | public |

+------------------+----------------------------------------------------------------------------------+

# From a remote node, check that the images pool contains this image ID. Running rbd on a controller node requires ceph-common to be installed there

[root@controller161 ~]# rbd -p images --id glance -k /etc/ceph/ceph.client.glance.keyring ls

f6da37cd-449a-436c-b321-e0c1c06761d8

[root@ceph131 ceph]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 96 GiB 93 GiB 169 MiB 3.2 GiB 3.30

TOTAL 96 GiB 93 GiB 169 MiB 3.2 GiB 3.30

POOLS:

POOL ID STORED OBJECTS USED %USED MAX AVAIL

cephfs_data 1 0 B 0 0 B 0 29 GiB

cephfs_metadata 2 8.9 KiB 22 1.5 MiB 0 29 GiB

rbd_storage 3 33 MiB 20 100 MiB 0.11 29 GiB

.rgw.root 4 1.2 KiB 4 768 KiB 0 29 GiB

default.rgw.control 5 0 B 8 0 B 0 29 GiB

default.rgw.meta 6 369 B 2 384 KiB 0 29 GiB

default.rgw.log 7 0 B 207 0 B 0 29 GiB

volumes 10 0 B 0 0 B 0 29 GiB

vms 11 0 B 0 0 B 0 29 GiB

images 12 12 MiB 8 37 MiB 0.04 29 GiB

[root@ceph131 ceph]# rbd ls images

f6da37cd-449a-436c-b321-e0c1c06761d8

# The cluster reports HEALTH_WARN because the newly created pool has no application type defined; the type can be 'cephfs', 'rbd', 'rgw', and so on

[root@ceph131 ceph]# ceph -s

cluster:

id: 76235629-6feb-4f0c-a106-4be33d485535

health: HEALTH_WARN

application not enabled on 1 pool(s)

services:

mon: 3 daemons, quorum ceph131,ceph132,ceph133 (age 3d)

mgr: ceph131(active, since 4d), standbys: ceph132, ceph133

mds: cephfs_storage:1 {0=ceph132=up:active}

osd: 3 osds: 3 up (since 3d), 3 in (since 4d)

rgw: 1 daemon active (ceph131)

task status:

scrub status:

mds.ceph132: idle

data:

pools: 10 pools, 224 pgs

objects: 271 objects, 51 MiB

usage: 3.2 GiB used, 93 GiB / 96 GiB avail

pgs: 224 active+clean

io:

client: 4.1 KiB/s rd, 0 B/s wr, 4 op/s rd, 2 op/s wr

[root@ceph131 ceph]# ceph health detail

HEALTH_WARN application not enabled on 1 pool(s)

POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)

application not enabled on pool 'images'

use 'ceph osd pool application enable <pool-name> <app-name>', where <app-name> is 'cephfs', 'rbd', 'rgw', or freeform for custom applications.

# Fix: set the pool application type to rbd

[root@ceph131 ceph]# ceph osd pool application enable images rbd

enabled application 'rbd' on pool 'images'

[root@ceph131 ceph]# ceph osd pool application enable volumes rbd

enabled application 'rbd' on pool 'volumes'

[root@ceph131 ceph]# ceph osd pool application enable vms rbd

enabled application 'rbd' on pool 'vms'

# Verify as follows:

[root@ceph131 ceph]# ceph health detail

HEALTH_OK

[root@ceph131 ceph]# ceph osd pool application get images

{

"rbd": {}

}

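5 Integrating Cinder with Ceph

5.1 Configure cinder.conf

# Cinder has a pluggable architecture and can use several storage backends at once. On the nodes running cinder-volume, configure the Ceph RBD driver in cinder.conf; compute163 is the example

# vim /etc/cinder/cinder.conf

# Use Ceph as the storage backend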

[DEFAULT]

#enabled_backends = lvm   # comment out this line

enabled_backends = ceph

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

# Replace with your own UUID

rbd_secret_uuid = cb26bb6c-2a84-45c2-8187-fa94b81dd53d

volume_backend_name = ceph

# After changing the configuration, restart the service

[root@compute163 ceph]# systemctl restart openstack-cinder-volume.service

# Verify

[root@controller160 ~]# openstack volume service list

+------------------+-----------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+-----------------+------+---------+-------+----------------------------+

| cinder-scheduler | controller160 | nova | enabled | up | 2020-07-06T16:10:36.000000 |

| cinder-scheduler | controller162 | nova | enabled | up | 2020-07-06T16:10:39.000000 |

| cinder-scheduler | controller161 | nova | enabled | up | 2020-07-06T16:10:33.000000 |

| cinder-volume | compute163@lvm | nova | enabled | down | 2020-07-06T16:07:09.000000 |

| cinder-volume | compute164@lvm | nova | enabled | down | 2020-07-06T16:07:04.000000 |

| cinder-volume | compute164@ceph | nova | enabled | up | 2020-07-06T16:10:38.000000 |

| cinder-volume | compute163@ceph | nova | enabled | up | 2020-07-06T16:10:39.000000 |

+------------------+-----------------+------+---------+-------+----------------------------+

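5.2 Create a volume

# Set the volume type: on a controller node, create a type for Cinder's Ceph backend so that backends can be told apart when several are configured; list the types with "cinder type-list"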

[root@controller160 ~]# cinder type-create ceph

+--------------------------------------+------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+------+-------------+-----------+

| bc90d094-a76b-409f-affa-f8329d2b54d5 | ceph | - | True |

+--------------------------------------+------+-------------+-----------+

# Set an extra spec on the ceph type: key "volume_backend_name", value "ceph"

[root@controller160 ~]# cinder type-key ceph set volume_backend_name=ceph

[root@controller160 ~]# cinder extra-specs-list

+--------------------------------------+-------------+---------------------------------+

| ID | Name | extra_specs |

+--------------------------------------+-------------+---------------------------------+

| 0aacd847-535a-447e-914c-895289bf1a19 | __DEFAULT__ | {} |

| bc90d094-a76b-409f-affa-f8329d2b54d5 | ceph | {'volume_backend_name': 'ceph'} |

+--------------------------------------+-------------+---------------------------------+

# Create a volume

[root@controller160 ~]# cinder create --volume-type ceph --name ceph-volume 1

+--------------------------------+--------------------------------------+

| Property | Value |

+--------------------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2020-07-06T16:14:36.000000 |

| description | None |

| encrypted | False |

| group_id | None |

| id | 63cb956c-2e4f-434e-a21a-9280530f737e |

| metadata | {} |

| migration_status | None |

| multiattach | False |

| name | ceph-volume |

| os-vol-host-attr:host | None |

| os-vol-mig-status-attr:migstat | None |

| os-vol-mig-status-attr:name_id | None |

| os-vol-tenant-attr:tenant_id | d3dda47e8c354d86b17085f9e382948b |

| provider_id | None |

| replication_status | None |

| service_uuid | None |

| shared_targets | True |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| updated_at | None |

| user_id | ec8c820dba1046f6a9d940201cf8cb06 |

| volume_type | ceph |

+--------------------------------+--------------------------------------+

# Verify

[root@controller160 ~]# openstack volume list

+--------------------------------------+-------------+-----------+------+-------------+

| ID | Name | Status | Size | Attached to |

+--------------------------------------+-------------+-----------+------+-------------+

| 63cb956c-2e4f-434e-a21a-9280530f737e | ceph-volume | available | 1 | |

| 9575c54a-d44e-46dd-9187-0c464c512c01 | test1 | available | 2 | |

+--------------------------------------+-------------+-----------+------+-------------+

[root@ceph131 ceph]# rbd ls volumes

volume-63cb956c-2e4f-434e-a21a-9280530f737e

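6 Integrating Nova with Ceph

6.1 Configure ceph.conf

# To boot instances from Ceph RBD, Ceph must be configured as Nova's ephemeral backend.

# Enabling the RBD cache in the compute nodes' configuration file is recommended.

# To ease troubleshooting, configure the admin socket parameter so that every instance using Ceph RBD gets its own socket, which helps with performance analysis and fault diagnosis.

# The changes only touch the [client] and [client.cinder] sections of ceph.conf on all compute nodes; compute163 is the example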

[root@compute163 ~]# vim /etc/ceph/ceph.conf

[client]

rbd cache = true

rbd cache writethrough until flush = true

admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok

log file = /var/log/qemu/qemu-guest-$pid.log

rbd concurrent management ops = 20

[client.cinder]

keyring = /etc/ceph/ceph.client.cinder.keyring

# Create the socket and log directories referenced in ceph.conf, and change their ownership

[root@compute163 ~]# mkdir -p /var/run/ceph/guests/ /var/log/qemu/

[root@compute163 ~]# chown qemu:libvirt /var/run/ceph/guests/ /var/log/qemu/

6.2 Configure nova.conf

# On all compute nodes, configure Nova to use the vms pool of the Ceph cluster as its backend; compute163 is the example

[root@compute163 ~]# vim /etc/nova/nova.conf

[DEFAULT]

vif_plugging_is_fatal = False

vif_plugging_timeout = 0

[libvirt]

images_type = rbd

images_rbd_pool = vms

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

# Must match the UUID used for the libvirt secret and in cinder.conf

rbd_secret_uuid = cb26bb6c-2a84-45c2-8187-fa94b81dd53d

disk_cachemodes="network=writeback"

live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST,VIR_MIGRATE_TUNNELLED"

# Disable file injection

inject_password = false

inject_key = false

inject_partition = -2

# Discard support for the instance's ephemeral root disk; with "unmap", space is released immediately when blocks on a SCSI-type disk are freed

hw_disk_discard = unmap

# After changing the configuration, restart the compute services

[root@compute163 ~]# systemctl restart libvirtd.service openstack-nova-compute.service

[root@compute163 ~]# systemctl status libvirtd.service openstack-nova-compute.service
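Since `rbd_secret_uuid` must be identical in cinder.conf and nova.conf, a quick comparison can catch a mismatch before an instance fails to attach a volume. The sketch below is a simple awk-based reader written for this check, not a full parser for the OpenStack configuration format; the helper names `ini_get` and `check_secret_uuid` are hypothetical, and the section names `[ceph]` and `[libvirt]` are the ones used in this article.

```shell
# ini_get FILE SECTION KEY
# Prints the value of KEY inside [SECTION]; prints nothing if it is absent.
ini_get() {
  awk -v section="[$2]" -v key="$3" '
    /^[ \t]*\[/ {                 # section header such as [libvirt]
      current = $0
      gsub(/[ \t]/, "", current)
      next
    }
    /^[ \t]*#/ { next }           # skip comment lines
    current == section {
      pos = index($0, "=")
      if (pos == 0) next
      k = substr($0, 1, pos - 1)
      v = substr($0, pos + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }
  ' "$1"
}

# check_secret_uuid CINDER_CONF NOVA_CONF
# Succeeds only if both files carry the same non-empty rbd_secret_uuid.
check_secret_uuid() {
  local cinder_uuid nova_uuid
  cinder_uuid=$(ini_get "$1" ceph rbd_secret_uuid)
  nova_uuid=$(ini_get "$2" libvirt rbd_secret_uuid)
  if [ -n "$cinder_uuid" ] && [ "$cinder_uuid" = "$nova_uuid" ]; then
    echo "OK: rbd_secret_uuid matches ($cinder_uuid)"
  else
    echo "MISMATCH: cinder='$cinder_uuid' nova='$nova_uuid'" >&2
    return 1
  fi
}

# Usage on a compute node:
# check_secret_uuid /etc/cinder/cinder.conf /etc/nova/nova.conf
```

The reader returns everything after the first `=` on the line, so any trailing text on the same line shows up as a mismatch.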

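6.3 Configure live migration

6.3.1 Edit /etc/libvirt/libvirtd.conf

# Do this on all compute nodes; compute163 is the example.

# The line numbers of the changed settings in libvirtd.conf are shown below

[root@compute163 ~]# egrep -vn "^$|^#" /etc/libvirt/libvirtd.conf

# Uncomment the following three lines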

22:listen_tls = 0

33:listen_tcp = 1

45:tcp_port = "16509"

# Uncomment and set the listen address

55:listen_addr = "172.16.1.163"

# Uncomment and disable authentication

158:auth_tcp = "none"

6.3.2 Edit /etc/sysconfig/libvirtd

# Do this on all compute nodes; compute163 is the example.

# The line number of the changed setting in the libvirtd file is shown below

[root@compute163 ~]# egrep -vn "^$|^#" /etc/sysconfig/libvirtd

# Uncomment

9:LIBVIRTD_ARGS="--listen"

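6.3.3 Set up passwordless SSH between compute nodes

# Every compute node must be able to reach the others without a password; migration depends on it.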

[root@compute163 ~]# usermod -s /bin/bash nova

[root@compute163 ~]# passwd nova

Changing password for user nova.

New password:

BAD PASSWORD: The password contains the user name in some form

Retype new password:

passwd: all authentication tokens updated successfully.

[root@compute163 ~]# su - nova

[nova@compute163 ~]$ ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Your identification has been saved in /var/lib/nova/.ssh/id_rsa.

Your public key has been saved in /var/lib/nova/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:bnGCcG6eRvSG3Bb58eu+sXwEAnb72hUHmlNja2bQLBU nova@compute163

The key's randomart image is:

+---[RSA 3072]----+

| +E. |

| o.. o B |

| . o.oo.. B + |

| * = ooo= * .|

| B S oo.* o |

| + = + ..o |

| + o ooo |

| . . .o.o. |

| .*o |

+----[SHA256]-----+

[nova@compute163 ~]$ ssh-copy-id nova@compute164

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/var/lib/nova/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

nova@compute164's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'nova@compute164'"

and check to make sure that only the key(s) you wanted were added.

[nova@compute163 ~]$ ssh nova@compute164

Activate the web console with: systemctl enable --now cockpit.socket

Last failed login: Wed Jul 8 00:24:02 CST 2020 from 172.16.1.163 on ssh:notty

There were 5 failed login attempts since the last successful login.

[nova@compute164 ~]$

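6.3.4 Configure iptables - not needed here because iptables was disabled earlier

# During live migration, the source compute node connects to TCP port 16509 on the destination compute node; test the connection with "virsh -c qemu+tcp://{node_ip or node_name}/system".

# Before and after migration, the instance being migrated uses TCP ports 49152-49161 on the source and destination nodes for temporary communication.

# Because iptables rules for the instances are already active, avoid restarting the iptables service; add rules by insertion where possible.

# Also persist the rules by editing the configuration file; do not use the "iptables saved" command.

# Do this on all compute nodes; compute163 is the example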

[root@compute163 ~]# iptables -I INPUT -p tcp -m state --state NEW -m tcp --dport 16509 -j ACCEPT

[root@compute163 ~]# iptables -I INPUT -p tcp -m state --state NEW -m tcp --dport 49152:49161 -j ACCEPT

6.3.5 Restart services

systemctl mask libvirtd.socket libvirtd-ro.socket \

libvirtd-admin.socket libvirtd-tls.socket libvirtd-tcp.socket

service libvirtd restart

systemctl restart openstack-nova-compute.service

# Verify

[root@compute163 ~]# netstat -lantp|grep libvirtd

tcp 0 0 172.16.1.163:16509 0.0.0.0:* LISTEN 582582/libvirtd

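6.4 Verify the integration

6.4.1 Create a bootable volume backed by Ceph

# When Nova boots an instance from RBD, the image must be in raw format; otherwise glance-api and Cinder both report errors when the instance starts.

# First convert the *.img file to a *.raw file

# Download cirros-0.4.0-x86_64-disk.img yourself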

[root@controller160 ~]# qemu-img convert -f qcow2 -O raw ~/cirros-0.4.0-x86_64-disk.img ~/cirros-0.4.0-x86_64-disk.raw

# Create an image in raw format

[root@controller160 ~]# openstack image create "cirros-raw" \

> --file ~/cirros-0.4.0-x86_64-disk.raw \

> --disk-format raw --container-format bare \

> --public

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | ba3cd24377dde5dfdd58728894004abb |

| container_format | bare |

| created_at | 2020-07-07T02:13:06Z |

| disk_format | raw |

| file | /v2/images/459f5ddd-c094-4b0f-86e5-f55baa33595c/file |

| id | 459f5ddd-c094-4b0f-86e5-f55baa33595c |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros-raw |

| owner | d3dda47e8c354d86b17085f9e382948b |

| properties | direct_url='rbd://76235629-6feb-4f0c-a106-4be33d485535/images/459f5ddd-c094-4b0f-86e5-f55baa33595c/snap', os_hash_algo='sha512', os_hash_value='b795f047a1b10ba0b7c95b43b2a481a59289dc4cf2e49845e60b194a911819d3ada03767bbba4143b44c93fd7f66c96c5a621e28dff51d1196dae64974ce240e', os_hidden='False', owner_specified.openstack.md5='ba3cd24377dde5dfdd58728894004abb', owner_specified.openstack.object='images/cirros-raw', owner_specified.openstack.sha256='87ddf8eea6504b5eb849e418a568c4985d3cea59b5a5d069e1dc644de676b4ec', self='/v2/images/459f5ddd-c094-4b0f-86e5-f55baa33595c' |

| protected | False |

| schema | /v2/schemas/image |

| size | 46137344 |

| status | active |

| tags | |

| updated_at | 2020-07-07T02:13:42Z |

| visibility | public |

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

# Create a bootable volume from the new image

[root@controller160 ~]# cinder create --image-id 459f5ddd-c094-4b0f-86e5-f55baa33595c --volume-type ceph --name ceph-boot 1

+--------------------------------+--------------------------------------+

| Property | Value |

+--------------------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2020-07-07T02:17:42.000000 |

| description | None |

| encrypted | False |

| group_id | None |

| id | 46a45564-e148-4f85-911b-a4542bdbd4f0 |

| metadata | {} |

| migration_status | None |

| multiattach | False |

| name | ceph-boot |

| os-vol-host-attr:host | None |

| os-vol-mig-status-attr:migstat | None |

| os-vol-mig-status-attr:name_id | None |

| os-vol-tenant-attr:tenant_id | d3dda47e8c354d86b17085f9e382948b |

| provider_id | None |

| replication_status | None |

| service_uuid | None |

| shared_targets | True |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| updated_at | None |

| user_id | ec8c820dba1046f6a9d940201cf8cb06 |

| volume_type | ceph |

+--------------------------------+--------------------------------------+

# Check the new bootable volume; creation takes time, so the status only becomes available after a while

[root@controller160 ~]# cinder list

+--------------------------------------+-----------+-------------+------+-------------+----------+-------------+

| ID | Status | Name | Size | Volume Type | Bootable | Attached to |

+--------------------------------------+-----------+-------------+------+-------------+----------+-------------+

| 62a21adb-0a22-4439-bf0b-121442790515 | available | ceph-boot | 1 | ceph | true | |

| 63cb956c-2e4f-434e-a21a-9280530f737e | available | ceph-volume | 1 | ceph | false | |

| 9575c54a-d44e-46dd-9187-0c464c512c01 | available | test1 | 2 | __DEFAULT__ | false | |

+--------------------------------------+-----------+-------------+------+-------------+----------+-------------+
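Rather than re-running the list command by hand until the volume is ready, the wait can be scripted with a small polling loop. This is a generic sketch: the function name `wait_for_value` is hypothetical, and the 2-second interval is an arbitrary choice.

```shell
# wait_for_value EXPECTED TIMEOUT_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND every 2 seconds until its output equals EXPECTED
# or roughly TIMEOUT_SECONDS have elapsed.
wait_for_value() {
  local expected="$1" timeout="$2"
  shift 2
  local waited=0 current
  while :; do
    current=$("$@" 2>/dev/null)
    if [ "$current" = "$expected" ]; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${waited}s; last value: '${current}'" >&2
      return 1
    fi
    sleep 2
    waited=$(( waited + 2 ))
  done
}

# Usage on a controller node (assumes the openstack CLI is installed and
# admin credentials are loaded in the environment):
# wait_for_value available 120 openstack volume show ceph-boot -f value -c status
```

The function returns non-zero on timeout, so it can gate the instance creation step in a script.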

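# Create an instance from a Ceph-backed volume.

# "--boot-volume" specifies a volume with the bootable attribute; once started, the instance runs on that volume in the volumes pool

# Create a flavor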

[root@controller160 ~]# openstack flavor create --id 1 --vcpus 1 --ram 256 --disk 1 m1.nano

+----------------------------+---------+

| Field | Value |

+----------------------------+---------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| disk | 1 |

| id | 1 |

| name | m1.nano |

| os-flavor-access:is_public | True |

| properties | |

| ram | 256 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 1 |

+----------------------------+---------+

# Security group rules

[root@controller160 ~]# openstack security group rule create --proto icmp default

[root@controller160 ~]# openstack security group rule create --proto tcp --dst-port 22 'default'

# Create the virtual network

openstack network create --share --external \

--provider-physical-network provider \

--provider-network-type flat provider-eth1

# Create the subnet; adjust to your environment

openstack subnet create --network provider-eth1 \

--allocation-pool start=172.16.2.220,end=172.16.2.229 \

--dns-nameserver 114.114.114.114 --gateway 172.16.2.254 --subnet-range 172.16.2.0/24 \

172.16.2.0/24

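# Available flavors

openstack flavor list

# Available images

openstack image list

# Available security groups

openstack security group list

# Available networks

openstack network list

# Create the instance; this can also be done through the web UI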

[root@controller160 ~]# nova boot --flavor m1.nano \

--boot-volume d3770a82-068c-49ad-a9b7-ef863bb61a5b \

--nic net-id=53b98327-3a47-4316-be56-cba37e8f20f2 \

--security-group default \

ceph-boot02

[root@controller160 ~]# nova show c592ca1a-dbce-443a-9222-c7e47e245725

+--------------------------------------+----------------------------------------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------------------------------------+

| OS-DCF:diskConfig | AUTO |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-SRV-ATTR:host | compute163 |

| OS-EXT-SRV-ATTR:hostname | ceph-boot02 |

| OS-EXT-SRV-ATTR:hypervisor_hostname | compute163 |

| OS-EXT-SRV-ATTR:instance_name | instance-00000025 |

| OS-EXT-SRV-ATTR:kernel_id | |

| OS-EXT-SRV-ATTR:launch_index | 0 |

| OS-EXT-SRV-ATTR:ramdisk_id | |

| OS-EXT-SRV-ATTR:reservation_id | r-ty5v9w8n |

| OS-EXT-SRV-ATTR:root_device_name | /dev/vda |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-STS:task_state | - |

| OS-EXT-STS:vm_state | active |

| OS-SRV-USG:launched_at | 2020-07-07T10:55:39.000000 |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| config_drive | |

| created | 2020-07-07T10:53:30Z |

| description | - |

| flavor:disk | 1 |

| flavor:ephemeral | 0 |

| flavor:extra_specs | {} |

| flavor:original_name | m1.nano |

| flavor:ram | 256 |

| flavor:swap | 0 |

| flavor:vcpus | 1 |

| hostId | 308132ea4792b277acfae8d3c5d88439d3d5d6ba43d8b06395581d77 |

| host_status | UP |

| id | c592ca1a-dbce-443a-9222-c7e47e245725 |

| image | Attempt to boot from volume - no image supplied |

| key_name | - |

| locked | False |

| locked_reason | - |

| metadata | {} |

| name | ceph-boot02 |

| os-extended-volumes:volumes_attached | [{"id": "d3770a82-068c-49ad-a9b7-ef863bb61a5b", "delete_on_termination": false}] |

| progress | 0 |

| provider-eth1 network | 172.16.2.228 |

| security_groups | default |

| server_groups | [] |

| status | ACTIVE |

| tags | [] |

| tenant_id | d3dda47e8c354d86b17085f9e382948b |

| trusted_image_certificates | - |

| updated | 2020-07-07T10:55:40Z |

| user_id | ec8c820dba1046f6a9d940201cf8cb06 |

+--------------------------------------+----------------------------------------------------------------------------------+

6.4.2 Boot an instance from Ceph RBD

# --nic: net-id is the network ID, not the subnet ID.

# The last argument, "cirros-cephrbd-instance1", is the instance name

[root@controller160 ~]# openstack server create --flavor m1.nano --image cirros-raw --nic net-id=53b98327-3a47-4316-be56-cba37e8f20f2 --security-group default cirros-cephrbd-instance1

+-------------------------------------+---------------------------------------------------+

| Field | Value |

+-------------------------------------+---------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | |

| OS-EXT-SRV-ATTR:host | None |

| OS-EXT-SRV-ATTR:hypervisor_hostname | None |

| OS-EXT-SRV-ATTR:instance_name | |

| OS-EXT-STS:power_state | NOSTATE |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | None |

| OS-SRV-USG:terminated_at | None |

| accessIPv4 | |

| accessIPv6 | |

| addresses | |

| adminPass | CRiNuZoK6ftt |

| config_drive | |

| created | 2020-07-07T15:13:08Z |

| flavor | m1.nano (1) |

| hostId | |

| id | 6ea79ec0-1ec6-47ff-b185-233c565b1fab |

| image | cirros-raw (459f5ddd-c094-4b0f-86e5-f55baa33595c) |

| key_name | None |

| name | cirros-cephrbd-instance1 |

| progress | 0 |

| project_id | d3dda47e8c354d86b17085f9e382948b |

| properties | |

| security_groups | name='eea8a6b4-2b6d-4f11-bfe8-12b56bafe36c' |

| status | BUILD |

| updated | 2020-07-07T15:13:10Z |

| user_id | ec8c820dba1046f6a9d940201cf8cb06 |

| volumes_attached | |

+-------------------------------------+---------------------------------------------------+

# List the instances that were created

[root@controller160 ~]# nova list

+--------------------------------------+--------------------------+--------+------------+-------------+----------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+--------------------------+--------+------------+-------------+----------------------------+

| c592ca1a-dbce-443a-9222-c7e47e245725 | ceph-boot02 | ACTIVE | - | Running | provider-eth1=172.16.2.228 |

| 6ea79ec0-1ec6-47ff-b185-233c565b1fab | cirros-cephrbd-instance1 | ACTIVE | - | Running | provider-eth1=172.16.2.226 |

+--------------------------------------+--------------------------+--------+------------+-------------+----------------------------+
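
A quick way to confirm that the new instance's root disk really lives in Ceph is to look for its RBD image. The sketch below is only an illustration under a few assumptions: the Nova pool is named vms (use whatever images_rbd_pool is set to in nova.conf), the commands run on a node whose default keyring may list that pool, and the helper names rbd_disk_name and instance_on_ceph are made up here. With the RBD image backend, Nova names the root disk <instance-uuid>_disk.

```shell
#!/usr/bin/env bash
# Assumption: Nova's RBD pool is called "vms"; override with POOL=... if not.
POOL="${POOL:-vms}"

# Nova's RBD backend stores the root disk as "<instance-uuid>_disk".
rbd_disk_name() {
    printf '%s_disk\n' "$1"
}

# Succeeds if the root disk image of the given instance UUID exists in the pool.
instance_on_ceph() {
    rbd ls "$POOL" | grep -qx "$(rbd_disk_name "$1")"
}
```

For the instance created above, `instance_on_ceph 6ea79ec0-1ec6-47ff-b185-233c565b1fab && echo "root disk is in Ceph"` would be the check to run.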

6.4.3 Live-migrate the instance booted from RBD

# "nova show 6ea79ec0-1ec6-47ff-b185-233c565b1fab" shows that the instance booted from RBD is on compute163 before the migration;

# alternatively, verify with "nova hypervisor-servers compute163";

[root@controller160 ~]# nova live-migration cirros-cephrbd-instance1 compute164

# Check the status while the migration is in progress

[root@controller160 ~]# nova list

+--------------------------------------+--------------------------+-----------+------------+-------------+----------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+--------------------------+-----------+------------+-------------+----------------------------+

| c592ca1a-dbce-443a-9222-c7e47e245725 | ceph-boot02 | ACTIVE | - | Running | provider-eth1=172.16.2.228 |

| 6ea79ec0-1ec6-47ff-b185-233c565b1fab | cirros-cephrbd-instance1 | MIGRATING | migrating | Running | provider-eth1=172.16.2.226 |

+--------------------------------------+--------------------------+-----------+------------+-------------+----------------------------+

# After the migration completes, check which node the instance is on;

# or run "nova show 6ea79ec0-1ec6-47ff-b185-233c565b1fab" and look at "hypervisor_hostname"

[root@controller160 ~]# nova hypervisor-servers compute163

[root@controller160 ~]# nova hypervisor-servers compute164
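
Instead of re-running nova list by hand, the check can be scripted. This is a minimal sketch, assuming the openstack CLI is installed and admin credentials are already sourced; wait_for_active and current_host are hypothetical helper names, and the retry count and delay are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Poll the server status until it is ACTIVE again (migration finished).
# Usage: wait_for_active <server> [tries] [delay-seconds]
wait_for_active() {
    local server="$1" tries="${2:-60}" delay="${3:-5}" status i
    for i in $(seq "$tries"); do
        status="$(openstack server show "$server" -f value -c status)"
        case "$status" in
            ACTIVE) return 0 ;;   # migration done
            ERROR)  return 1 ;;   # migration failed
        esac
        sleep "$delay"
    done
    return 1                      # gave up: still not ACTIVE
}

# Print the hypervisor currently hosting the server (needs admin rights).
current_host() {
    openstack server show "$1" -f value -c OS-EXT-SRV-ATTR:hypervisor_hostname
}
```

After starting the live migration, `wait_for_active cirros-cephrbd-instance1 && current_host cirros-cephrbd-instance1` should print the target node if the migration succeeded.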

X. Problems encountered along the way

eg1.2020-07-04 00:39:56.394 671959 ERROR glance.common.wsgi rados.ObjectNotFound: [errno 2] error calling conf_read_file

Cause: the ceph.conf configuration file cannot be found.

Solution: copy ceph.conf from the Ceph cluster into /etc/ceph/ on every node.
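
The copy can be done in one loop. The sketch below assumes it runs on a Ceph node that has root SSH access to the OpenStack nodes; the node list uses the host names that appear in this article, and push_ceph_conf is a made-up helper name.

```shell
#!/usr/bin/env bash
# Assumption: these are the OpenStack nodes that need ceph.conf.
NODES="${NODES:-controller160 compute163 compute164}"

# Copy the cluster's ceph.conf into /etc/ceph/ on every node in NODES.
# Stops at the first node that cannot be reached.
push_ceph_conf() {
    local node
    for node in $NODES; do
        scp /etc/ceph/ceph.conf "root@${node}:/etc/ceph/ceph.conf" || return 1
    done
}
```

Run `push_ceph_conf`, then restart the service that logged the error (glance-api in this case) so it re-reads the file.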

eg2.2020-07-04 01:01:27.736 1882718 ERROR glance_store._drivers.rbd [req-fd768a6d-e7e2-476b-b1d3-d405d7a560f2 ec8c820dba1046f6a9d940201cf8cb06 d3dda47e8c354d86b17085f9e382948b - default default] Error connecting to ceph cluster.: rados.ObjectNotFound: [errno 2] error connecting to the cluster

eg3.libvirtd[580770]: --listen parameter not permitted with systemd activation sockets, see 'man libvirtd' for further guidance

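Cause: libvirtd uses systemd socket activation by default. To return to the traditional mode, in which --listen is allowed, all of libvirtd's systemd socket units must be masked.

Solution: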

systemctl mask libvirtd.socket libvirtd-ro.socket libvirtd-admin.socket libvirtd-tls.socket libvirtd-tcp.socket

Then restart libvirtd with the following command:

service libvirtd restart

eg4.AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/guests/ceph-client.cinder.596406.94105140863224.asok': (13) Permission denied

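Cause: a permissions problem on /var/run/ceph. The VM instances started by qemu run with owner and group qemu, but /var/run/ceph is owned by ceph:ceph with mode 770, so qemu cannot create its socket there.

Solution: change the mode of /var/run/ceph to 777. It is also best to set /var/log/qemu/ to 777.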
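
As commands, the fix could look like the sketch below; open_dirs is a hypothetical helper, and it has to run as root on each compute node. Mode 777 is deliberately broad, and because /var/run is usually a tmpfs, the change may need to be reapplied after a reboot.

```shell
#!/usr/bin/env bash
# Make sure each given directory exists and is world-writable (mode 777),
# which is the permissive fix described above.
open_dirs() {
    local dir
    for dir in "$@"; do
        mkdir -p "$dir" && chmod 777 "$dir" || return 1
    done
}
```

On a compute node this would be called as `open_dirs /var/run/ceph /var/log/qemu`.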

eg5.Error on AMQP connection <0.9284.0> (172.16.1.162:55008 -> 172.16.1.162:5672, state: starting):

AMQPLAIN login refused: user 'guest' can only connect via localhost

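Cause: since RabbitMQ 3 (3.3.0 onward), the guest user can no longer log in remotely.

Solution: edit /etc/rabbitmq/rabbitmq.config (vim /etc/rabbitmq/rabbitmq.config) and add the following entry. Note the trailing period!! Then restart the rabbitmq service.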

[{rabbit, [{loopback_users, []}]}].

eg6.Failed to allocate network(s): nova.exception.VirtualInterfaceCreateException: Virtual Interface creation failed

Add the following two lines to the [DEFAULT] section of /etc/nova/nova.conf on the compute nodes, then restart the nova-compute service:

vif_plugging_is_fatal = False

vif_plugging_timeout = 0

eg7.Resize error: not able to execute ssh command: Unexpected error while running command.

Cause: OpenStack migration and resize rely on SSH, so passwordless SSH must be configured between all compute nodes.

Solution: see 6.3.3.
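
Before retrying the resize, it can help to confirm that key-based login really works between the compute nodes. This is a minimal sketch: it assumes the connection is made as the nova user (adjust SSH_USER to whichever account 6.3.3 configured), and can_ssh and check_targets are made-up helper names.

```shell
#!/usr/bin/env bash
# Assumption: migration connects as this user; change it to match 6.3.3.
SSH_USER="${SSH_USER:-nova}"

# Succeeds only if login works without any password or host-key prompt.
can_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "${SSH_USER}@$1" true
}

# Report every target host that cannot be reached without a password.
# Returns non-zero if at least one host fails.
check_targets() {
    local host failed=0
    for host in "$@"; do
        if ! can_ssh "$host"; then
            echo "passwordless ssh to ${host} failed"
            failed=1
        fi
    done
    return "$failed"
}
```

Run it on each compute node as the migrating user, for example `check_targets compute164` on compute163 and `check_targets compute163` on compute164.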