针对豆瓣TOP250电影知识图谱的构建(Python+neo4j)

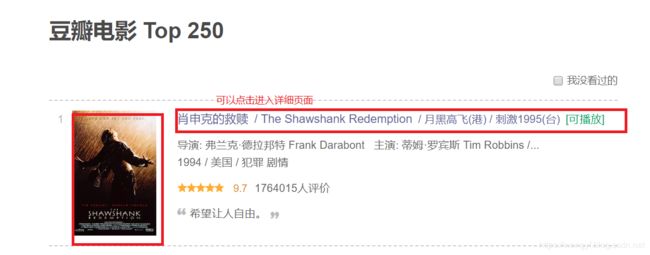

数据爬取网站: https://movie.douban .com/top250?start=0.

1. 首先对网页数据进行分析,进而确定节点和关系

我们直接分析电影点进去的详细页面,页面如下:(由于豆瓣在没有登录的情况下频繁对网站进行请求会被认为恶意攻击,导致自己的ip无法访问该网站,所以最好先下载下来)

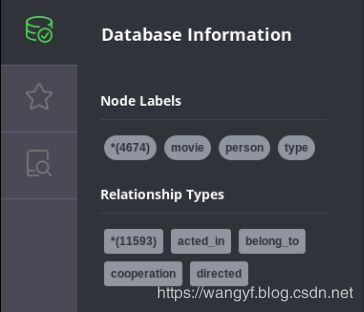

通过上图,我们选择4个结点和4种关系

4个结点分别为:

- 电影名称(film_name)

- 导演(director)

- 演员(actor)

- 类型(type)

4种关系分别为:

- acted_in(电影——>演员)

- directed(电影——>导演)

- belong_to(电影——>类型)

- cooperation(导演——>演员)

2. 下载分析页面

点击鼠标右键查看源代码,知道链接的模式之后我们可以采用正则表达式进行匹配,然后获取链接对应页面,最终将获取的页面保存在文件中,为我们后面分析提供数据。

分析后,我们采用后面那包含class属性的链接(其实和上面一种一样,只是为了防止多次访问同样页面),最终我们采用如下正则表达式:

<a href="https://movie.douban.com/subject/\d*?/" class="">

最终形成的获取分析页面代码如下(最终我存放在代码目录下的contents目录下)(1_getPage.py):

import requests

import re

import time

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'

}

x = range(0, 250, 25)

for i in x:

# 请求排行榜页面

html = requests.get("https://movie.douban.com/top250?start=" + str(i), headers=headers)

# 防止请求过于频繁

time.sleep(0.01)

# 将获取的内容采用utf8解码

cont = html.content.decode('utf8')

# 使用正则表达式获取电影的详细页面链接

urlList = re.findall('', cont)

# 排行榜每一页都有25个电影,于是匹配到了25个链接,逐个对访问进行请求

for j in range(len(urlList)):

# 获取啊、标签中的url

url = urlList[j].replace(', "").replace('" class="">', "")

# 将获取的内容采用utf8解码

content = requests.get(url, headers=headers).content.decode('utf8')

# 采用数字作为文件名

film_name = i + j

# 写入文件

with open('contents/' + str(film_name) + '.txt', mode='w', encoding='utf8') as f:

f.write(content)

3. 数据爬取

3.1 结点数据爬取

3.1.1 电影名称结点获取

首先分析页面数据,发现电影名称使用title标签框住,于是可以采用如下正则表达式对电影名称(film_name)进行提取:

<title>.*?/title>

3.1.2 导演结点获取

接着分析导演(director)结点数据的提取:

通过分析源代码中的脚本,我们可以使用如下正则表达式对数据进行提取:

"director":.*?]

"name": ".*?"

3.1.3 演员结点获取

同理分析下面数据,提取演员(actor)结点数据:

我们可以使用如下正则表达式进行actor的提取:

"actor":.*?]

"name": ".*?"

3.1.4 类型结点获取

最后分析电影类别(type):

于是我们可以使用如下正则表达式进行数据提取:

<span property="v:genre">.*?span>

3.1.5 综上,现在列出获取所有结点数据并且保存在csv中的代码(2_getNode.py):

import re

import pandas

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'

}

def node_save(attrCont, tag, attr, label):

ID = []

for i in range(len(attrCont)):

ID.append(tag * 10000 + i)

data = {'ID': ID, attr: attrCont, 'LABEL': label}

dataframe = pandas.DataFrame(data)

dataframe.to_csv('details/' + attr + '.csv', index=False, sep=',', encoding="utf_8_sig")

def save(contents):

# save movie nodes

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> contents<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "")

film_names.append(film_name)

# save director nodes

director_cont = re.findall('"director":.*?]', contents)[0]

director_cont = re.findall('"name": ".*?"', director_cont)

for i in range(len(director_cont)):

directors.append(director_cont[i].lstrip('"name": "').rstrip('"'))

# save actors nodes

actor_cont = re.findall('"actor":.*?]', contents)[0]

actor_cont = re.findall('"name": ".*?"', actor_cont)

for i in range(len(actor_cont)):

actors.append(actor_cont[i].lstrip('"name": "').rstrip('"'))

# save type

type_cont = re.findall('.*?', contents)

for i in range(len(type_cont)):

types.append(type_cont[i].lstrip('').rstrip(''))

film_names = []

actors = []

directors = []

types = []

for i in range(250):

with open('contents/' + str(i) + '.txt', mode='r', encoding='utf8') as f:

contents = f.read()

save(contents.replace("\n", "")) # 这里需要把读出来的数据换行符去掉

# 去重

actors = list(set(actors))

directors = list(set(directors))

types = list(set(types))

# 保存

node_save(film_names, 0, 'film_name', 'movie')

node_save(directors, 1, 'director', 'person')

node_save(actors, 2, 'actor', 'person')

node_save(types, 3, "type", "type")

print('ok1')

3.2 结点关系数据爬取

3.2.1 考虑acted_in关系

我们分别对每个电影详细页面进行分析,提取电影名称(fillm_name)和演员列表(actor),将它们分别加入列表中,分析250个电影详细页面文件后最终进行保存,代码如下:

def save_acted_in(content):

# 获取当前电影对应ID

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> content<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "") # 电影名字每页只有一个

filmNameID = getID('film_name', film_name)

# 获取当前电影的演员和对应ID

actor_cont = re.findall('"actor":.*?]', content)[0]

actor_cont = re.findall('"name": ".*?"', actor_cont)

for i in range(len(actor_cont)): # 演员每页可能多个(通常都多个)

actor = actor_cont[i].lstrip('name": "').rstrip('"')

start_id.append(filmNameID)

end_id.append(getID('actor', actor)) # 查找演员名字对应ID

3.2.2 考虑directed关系

接下来,我们还是分别对每个电影详细页面进行分析,提取电影名称(fillm_name)和导演列表(director),将它们分别加入列表中,分析250个电影详细页面文件后最终进行保存,代码如下:

def save_directed(contnet):

# 获取当前电影对应ID

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> content<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "")

filmNameID = getID('film_name', film_name)

#

director_cont = re.findall('"director":.*?]', content)[0]

director_cont = re.findall('"name": ".*?"', director_cont)

for i in range(len(director_cont)):

director = director_cont[i].lstrip('"name": "').rstrip('"')

start_id.append(filmNameID)

end_id.append(getID('director', director))

3.2.3 考虑belong_to关系

接下来,我们还是分别对每个电影详细页面进行分析,提取电影名称(fillm_name)和类型列表(type),将它们分别加入列表中,分析250个电影详细页面文件后最终进行保存,代码如下:

def save_belongto(content):

# 获取当前电影对应ID

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> content<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "")

filmNameID = getID('film_name', film_name)

#

type_cont = re.findall('.*?', content)

for i in range(len(type_cont)):

type = type_cont[i].lstrip('').rstrip('')

start_id.append(filmNameID)

end_id.append(getID('type', type))

3.2.4 考虑cooperation关系

最后,我们还是分别对每个电影详细页面进行分析,提取演员列表(actor)和导演列表(director),将它们分别加入列表中,分析250个电影详细页面文件后最终进行保存,代码如下:

def save_cooperation(content):

# 获取当前电影的演员和对应ID

actor_cont = re.findall('"actor":.*?]', content)[0]

actor_cont = re.findall('"name": ".*?"', actor_cont)

#

director_cont = re.findall('"director":.*?]', content)[0]

director_cont = re.findall('"name": ".*?"', director_cont)

for i in range(len(actor_cont)):

actor = actor_cont[i].lstrip('name": "').rstrip('"')

for j in range(len(director_cont)):

director = director_cont[j].lstrip('"name": "').rstrip('"')

start_id.append(getID('actor', actor))

end_id.append(getID('director', director))

以上对保存每种关系分别定义了相应的函数进行关系对应,采用2个列表进行存储,一 一对应。

3.2.5 下面贴出获取所以关系的整个代码(3_getRelations.py):

(下面代码执行可能运行时间比较久、、、、、、、、、、、、、、、、、、)

import re

import pandas

def getID(name, nameValue):

df = pandas.read_csv('details/' + name + '.csv')

for j in range(len(df[name])):

if nameValue == df[name][j]:

return df['ID'][j]

acted_in_data = pandas.DataFrame()

directed_data = pandas.DataFrame()

cooperation_data = pandas.DataFrame()

belong_to_data = pandas.DataFrame()

def save_relation(start_id, end_id, relation):

dataframe = pandas.DataFrame({':START_ID': start_id, ':END_ID': end_id, ':relation': relation, ':TYPE': relation})

dataframe.to_csv('details/' + relation + '.csv', index=False, sep=',', encoding="utf_8_sig")

def save_acted_in(content):

# 获取当前电影对应ID

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> content<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "") # 电影名字每页只有一个

filmNameID = getID('film_name', film_name)

# 获取当前电影的演员和对应ID

actor_cont = re.findall('"actor":.*?]', content)[0]

actor_cont = re.findall('"name": ".*?"', actor_cont)

for i in range(len(actor_cont)): # 演员每页可能多个(通常都多个)

actor = actor_cont[i].lstrip('name": "').rstrip('"')

start_id.append(filmNameID)

end_id.append(getID('actor', actor)) # 查找演员名字对应ID

def save_directed(contnet):

# 获取当前电影对应ID

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> content<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "")

filmNameID = getID('film_name', film_name)

#

director_cont = re.findall('"director":.*?]', content)[0]

director_cont = re.findall('"name": ".*?"', director_cont)

for i in range(len(director_cont)):

director = director_cont[i].lstrip('"name": "').rstrip('"')

start_id.append(filmNameID)

end_id.append(getID('director', director))

def save_belongto(content):

# 获取当前电影对应ID

film_name = re.findall('.*?/title>'</span><span class="token punctuation">,</span> content<span class="token punctuation">)</span><span class="token punctuation">[</span><span class="token number">0</span><span class="token punctuation">]</span>

film_name <span class="token operator">=</span> film_name<span class="token punctuation">.</span>lstrip<span class="token punctuation">(</span><span class="token string">"<title>"</span><span class="token punctuation">)</span><span class="token punctuation">.</span>rstrip<span class="token punctuation">(</span><span class="token string">"(豆瓣) ").replace(" ", "")

filmNameID = getID('film_name', film_name)

#

type_cont = re.findall('.*?', content)

for i in range(len(type_cont)):

type = type_cont[i].lstrip('').rstrip('')

start_id.append(filmNameID)

end_id.append(getID('type', type))

def save_cooperation(content):

# 获取当前电影的演员和对应ID

actor_cont = re.findall('"actor":.*?]', content)[0]

actor_cont = re.findall('"name": ".*?"', actor_cont)

#

director_cont = re.findall('"director":.*?]', content)[0]

director_cont = re.findall('"name": ".*?"', director_cont)

for i in range(len(actor_cont)):

actor = actor_cont[i].lstrip('name": "').rstrip('"')

for j in range(len(director_cont)):

director = director_cont[j].lstrip('"name": "').rstrip('"')

start_id.append(getID('actor', actor))

end_id.append(getID('director', director))

# 用来存放关系节点ID的列表

start_id = []

end_id = []

# 循环查找每个页面(即contents文件夹中下载下来的页面),找出对应关系(acted_in)

for i in range(250):

with open('contents/' + str(i) + '.txt', mode='r', encoding='utf8') as f:

content = f.read().replace('\n', "") # 要去掉换行符

save_acted_in(content)

save_relation(start_id, end_id, 'acted_in')

print('[+] save acted_in finished!!!!!!!!!!!!!!!!!')

start_id.clear()

end_id.clear()

# 循环查找每个页面(即contents文件夹中下载下来的页面),找出对应关系(directed)

for i in range(250):

with open('contents/' + str(i) + '.txt', mode='r', encoding='utf8') as f:

content = f.read().replace('\n', "") # 要去掉换行符

save_directed(content)

save_relation(start_id, end_id, 'directed')

print('[+] save directed finished!!!!!!!!!!!!!!!!!')

start_id.clear()

end_id.clear()

# 循环查找每个页面(即contents文件夹中下载下来的页面),找出对应关系(belong_to)

for i in range(250):

with open('contents/' + str(i) + '.txt', mode='r', encoding='utf8') as f:

content = f.read().replace('\n', "") # 要去掉换行符

save_belongto(content)

save_relation(start_id, end_id, 'belong_to')

print('[+] save belong_to finished!!!!!!!!!!!!!!!!!')

start_id.clear()

end_id.clear()

# 循环查找每个页面(即contents文件夹中下载下来的页面),找出对应关系(cooperation)

for i in range(250):

with open('contents/' + str(i) + '.txt', mode='r', encoding='utf8') as f:

content = f.read().replace('\n', "") # 要去掉换行符

save_cooperation(content)

save_relation(start_id, end_id, 'cooperation')

print('[+] save cooperation finished!!!!!!!!!!!!!!!!!')

4. 使用neo4j创建知识图谱

我将neo4j安装在kail(Linux)上,WIndows下类似,使用如下命令导入我们爬取的csv文件,包括结点(使用–nodes)和关系(使用–relationships)

./neo4j-admin import --mode=csv --database=movies.db --nodes /usr/local/demo/actor.csv --nodes /usr/local/demo/director.csv --nodes /usr/local/demo/film_name.csv --nodes /usr/local/demo/type.csv --relationships /usr/local/demo/acted_in.csv --relationships /usr/local/demo/directed.csv --relationships /usr/local/demo/belong_to.csv --relationships /usr/local/demo/cooperation.csv

命令说明如下:(需要在neo4j文件夹下的bin目录才能这样执行)

./neo4j-admin:是导入的脚本

import :导入操作

–mode=csv:导入csv格式文件

–database=movies.db:导入的数据库(默认graph.db),若写成movies.db需要修改配置文件(在neo4j文件夹下的conf/neo4j.conf)

-nodes /usr/local/demo/actor.csv --nodes /usr/local/demo/director.csv:导入结点,后面为结点文件路径

–relationships /usr/local/demo/belong_to.csv --relationships /usr/local/demo/cooperation.csv:导入关系,后面为关系文件路径