A Beginner Learns TensorFlow (1)

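I started learning TensorFlow because my job required it. I'm not someone who enjoys math formulas. When I learned Caffe and Keras earlier, I approached them purely from a practical angle: being able to use them was enough. I never studied CNN theory in detail, because all those formulas made me sleepy. So my principle for learning TensorFlow is this. First, get a rough grasp of the theory and understand what is going on, skipping any formula I can't follow. Then practice until I can train models that meet real requirements and adjust them promptly when needed. The detailed theory and formulas can wait until I actually need them.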

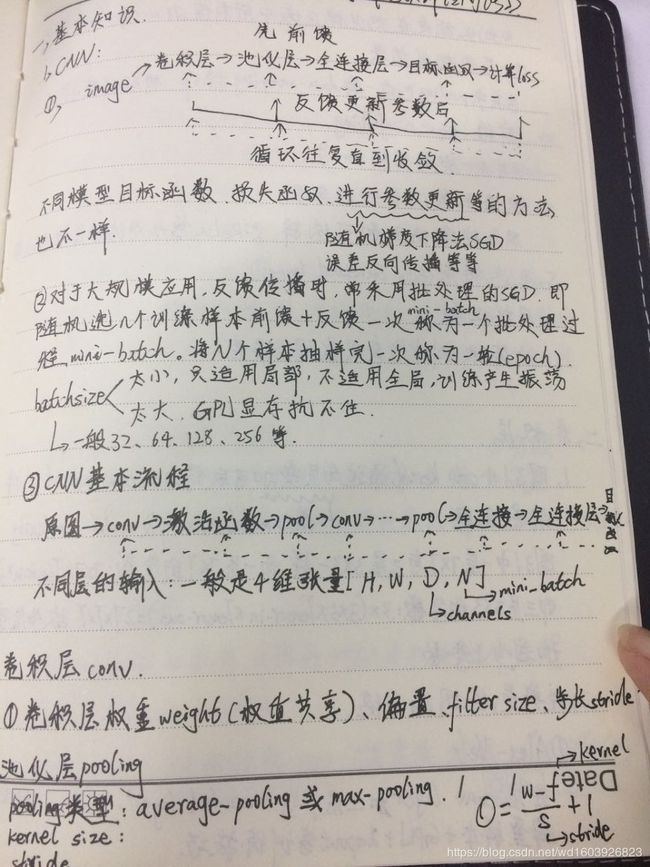

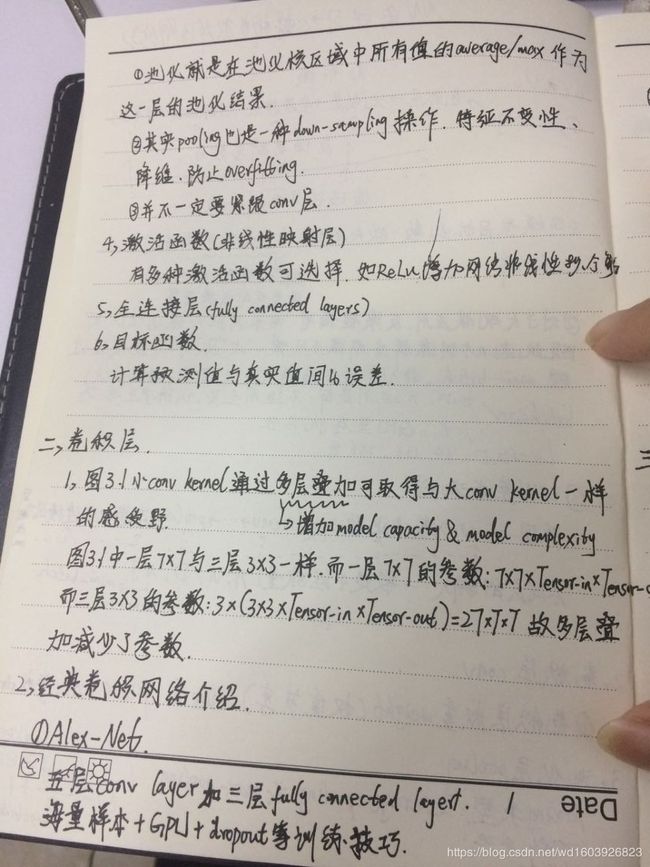

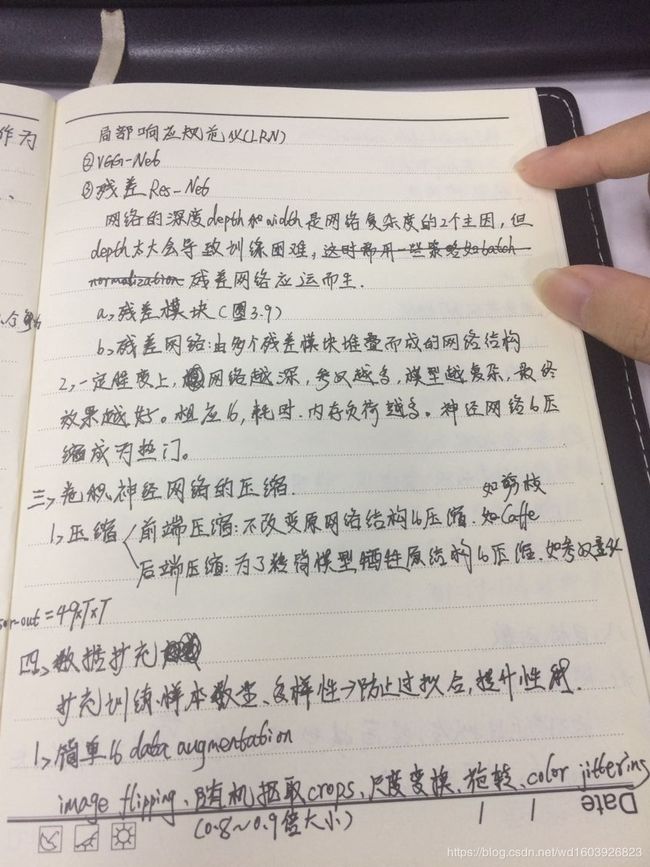

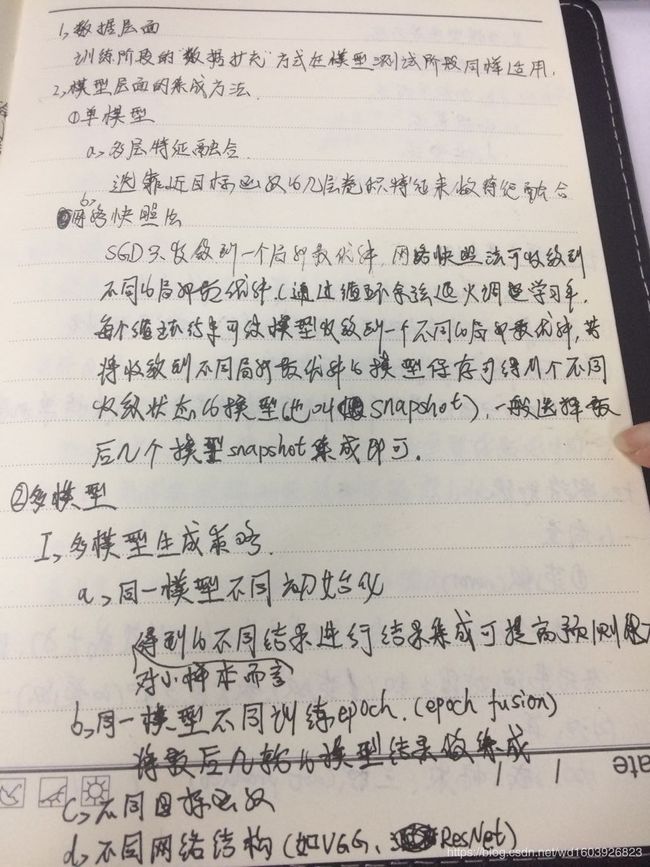

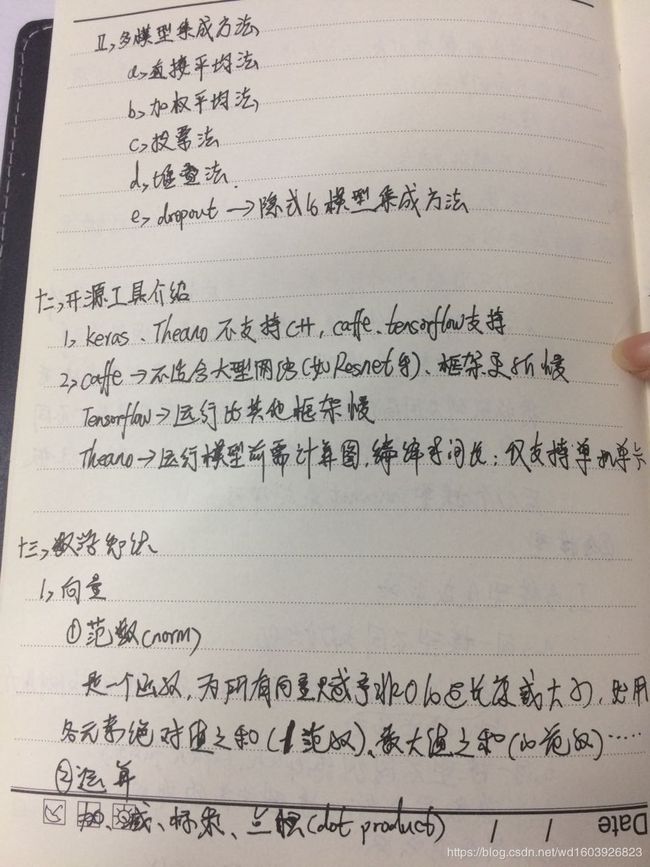

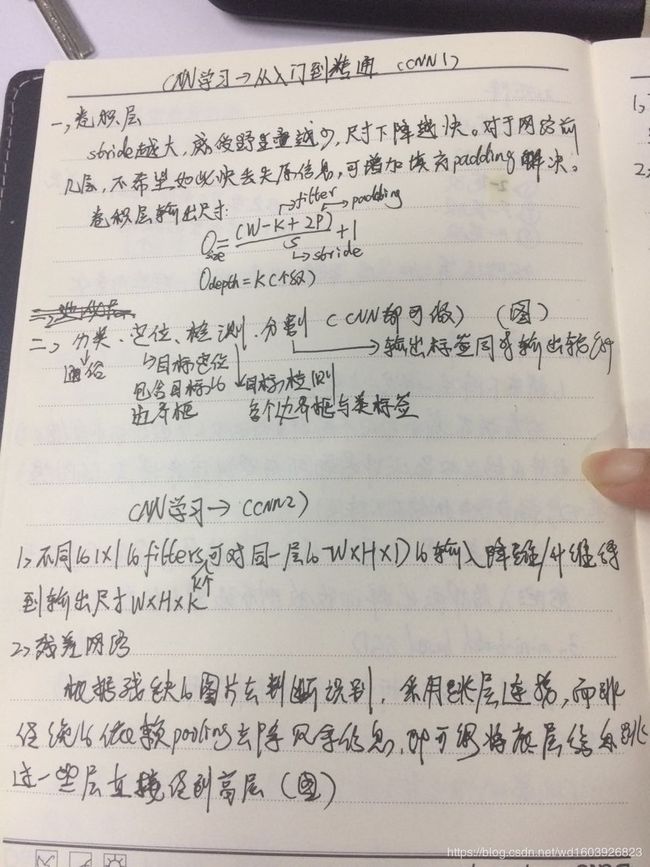

1. Studying 《解析卷积神经网络—深度学习实践手册》 ("Analyzing Convolutional Neural Networks: A Practical Handbook of Deep Learning")

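This book has relatively few formulas, so it works as an introduction. My handwritten notes are messy, though, and probably only I can read them.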

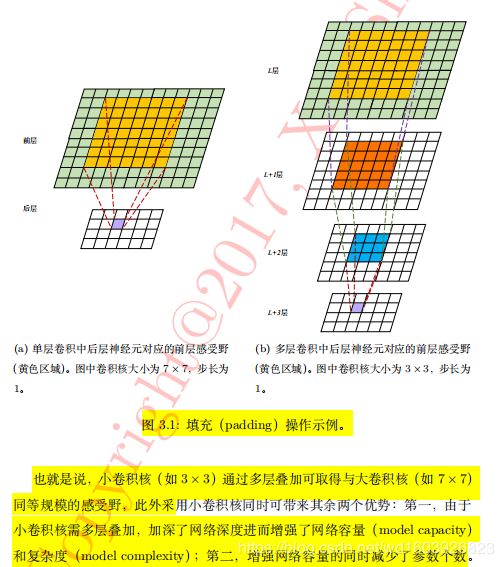

For stacking several layers of small convolution kernels, see the figure below:
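Here is a small worked calculation of the usual argument for stacking small kernels. It assumes stride 1, no dilation, no bias terms, and the same channel count C for input and output. C = 64 is an arbitrary illustrative value. Under these assumptions, n stacked k×k layers see a (1 + n(k-1)) × (1 + n(k-1)) input region. Two 3×3 layers therefore cover 5×5 and three cover 7×7, with fewer weights than a single 5×5 or 7×7 layer.

```python
def stacked_receptive_field(kernel_size, num_layers):
    """Receptive field of num_layers stacked k x k convolutions (stride 1, no dilation)."""
    return 1 + num_layers * (kernel_size - 1)


def conv_weight_count(kernel_size, channels, num_layers=1):
    """Number of weights (biases ignored) when every layer maps `channels` -> `channels`."""
    return num_layers * kernel_size * kernel_size * channels * channels


C = 64  # illustrative channel count, not taken from the book
for k, n in [(3, 2), (5, 1), (3, 3), (7, 1)]:
    print("%d layer(s) of %dx%d: receptive field %d, weights %d"
          % (n, k, k, stacked_receptive_field(k, n), conv_weight_count(k, C, n)))
```

With C = 64, two 3×3 layers need 73728 weights and one 5×5 layer needs 102400 (18C² vs 25C²). For the 7×7 case the counts are 110592 vs 200704 (27C² vs 49C²). The stacked version also leaves room for a non-linearity between the layers.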

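5. Data preprocessing: mean-centering, i.e. subtract the mean (computed on the training set) from each image. Do this for the training, validation, and test sets alike.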
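Below is a NumPy sketch of mean-centering under some assumptions. Images are stored as an (N, H, W, C) array, and a per-channel mean is used; subtracting a per-pixel mean image is another common variant. The mean is computed on the training set only, and that same value is subtracted from the validation and test images. The random arrays stand in for real data.

```python
import numpy as np


def fit_channel_mean(train_images):
    """Per-channel mean over all training images; input (N, H, W, C), output (C,)."""
    return train_images.mean(axis=(0, 1, 2))


def center(images, mean):
    """Subtract the training-set mean; broadcasting applies it to every pixel."""
    return images.astype(np.float32) - mean


rng = np.random.default_rng(0)
# Random stand-ins for real 4x4 RGB images.
train_set = rng.integers(0, 256, size=(10, 4, 4, 3)).astype(np.float32)
val_set = rng.integers(0, 256, size=(5, 4, 4, 3)).astype(np.float32)
test_set = rng.integers(0, 256, size=(5, 4, 4, 3)).astype(np.float32)

mean = fit_channel_mean(train_set)  # computed on the training set only
train_c, val_c, test_c = (center(s, mean) for s in (train_set, val_set, test_set))
print(train_c.mean(axis=(0, 1, 2)))  # close to 0 for every channel
```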

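6. Network parameter initialization: all-zero initialization vs. random initialization. With all-zero weights every unit in a layer behaves identically, so random initialization is the practical choice.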
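This toy NumPy example shows why all-zero initialization is a problem. When every weight starts out equal, every hidden unit computes the same output and receives the same gradient update, so the units never become different. Small random values break this symmetry. The layer size, the tanh activation, and the 0.1 standard deviation are arbitrary choices for the sketch.

```python
import numpy as np


def hidden_layer(x, w):
    """One fully connected layer with tanh activation."""
    return np.tanh(x @ w)


rng = np.random.default_rng(1)
x = rng.normal(size=(4, 2))                  # 4 toy samples, 2 features

w_zero = np.zeros((2, 3))                    # all-zero initialization
w_rand = rng.normal(0.0, 0.1, size=(2, 3))   # small random initialization

h_zero = hidden_layer(x, w_zero)
h_rand = hidden_layer(x, w_rand)

# Zero weights: all three hidden units give the same output (all 0 here),
# so identical outputs lead to identical gradients and the units stay identical.
print(h_zero)
# Random weights: each hidden unit responds differently to the same input.
print(h_rand)
```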

7. Activation functions: there are many different ones.
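For reference, here are NumPy versions of four widely used activation functions. I picked these four myself and set Leaky ReLU's slope to alpha = 0.01 for illustration; this is not a list taken from the book.

```python
import numpy as np


def sigmoid(z):
    """Squashes inputs into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def tanh(z):
    """Squashes inputs into (-1, 1), centered at 0."""
    return np.tanh(z)


def relu(z):
    """Keeps positive values, zeroes out negative ones."""
    return np.maximum(0.0, z)


def leaky_relu(z, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope alpha."""
    return np.where(z > 0, z, alpha * z)


z = np.array([-2.0, 0.0, 2.0])
for f in (sigmoid, tanh, relu, leaky_relu):
    print(f.__name__, np.round(f(z), 3))
```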

8. Objective (loss) functions:
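Two common objective functions are sketched below in NumPy. The first is mean squared error, which the TensorFlow training example later in this post builds with tf.reduce_mean(tf.square(y - y_)). The second is softmax cross-entropy for classification. The helper names are my own.

```python
import numpy as np


def mse(pred, target):
    """Mean squared error, typically used for regression."""
    return np.mean((pred - target) ** 2)


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy for integer class labels; logits have shape (N, K)."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -np.mean(log_probs[np.arange(len(labels)), labels])


print(mse(np.array([1.0, 2.0]), np.array([1.0, 0.0])))  # (0 + 4) / 2 = 2.0
logits = np.array([[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
print(softmax_cross_entropy(logits, np.array([0, 2])))
```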

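9. Network regularization: to prevent overfitting and improve the model's ability to generalize, regularization is recommended. Examples are dropout and using a validation set (e.g. to watch for overfitting and stop training early).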
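Below is a minimal NumPy sketch of dropout. It assumes the common "inverted dropout" convention: during training, kept activations are rescaled by 1/keep_prob, so nothing needs to change at test time. keep_prob = 0.8 and the array sizes are arbitrary.

```python
import numpy as np


def dropout(activations, keep_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability 1 - keep_prob, rescale the rest.

    Dividing by keep_prob keeps the expected activation unchanged.
    """
    if not training:
        return activations  # no dropout at test time
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob


rng = np.random.default_rng(42)
h = np.ones((1000, 100))
out = dropout(h, keep_prob=0.8, rng=rng)
print(out.mean())         # close to 1.0: expectation preserved
print((out == 0).mean())  # close to 0.2: fraction of dropped units
```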

13. Stochastic gradient descent

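1. Gradient descent: each iteration needs all of the samples. Stepping in the direction opposite to the gradient (the direction in which the objective decreases) leads to a local minimum of the objective function, as shown in the figure below.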

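2. Stochastic gradient descent (SGD): each update uses only one sample, so the step is not necessarily in the best direction for the model as a whole. It may get stuck in a local optimum and converge to an unsatisfactory state.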

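3. Mini-batch based SGD: a compromise between the two methods above, where each update uses a small batch of samples. This is what is commonly used in practice.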
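The three variants differ only in how many samples feed each gradient step. This NumPy sketch makes that explicit with a batch_size parameter on a toy linear-regression problem. The data, the learning rate of 0.1, and the 50 epochs are arbitrary choices of mine. Reshuffling the data every epoch is also an assumption, though it is common practice for SGD.

```python
import numpy as np


def train_linear_regression(X, y, batch_size, lr=0.1, epochs=50, seed=0):
    """Fit y ~ X @ w by minimizing mean squared error with (mini-batch) gradient descent.

    batch_size == len(X) -> batch gradient descent (all samples per step)
    batch_size == 1      -> stochastic gradient descent (one sample per step)
    anything in between  -> mini-batch SGD
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            err = X[idx] @ w - y[idx]
            grad = 2.0 * X[idx].T @ err / len(idx)  # gradient of the batch's MSE
            w -= lr * grad                          # step against the gradient
    return w


rng = np.random.default_rng(123)
X = rng.normal(size=(256, 2))
true_w = np.array([2.0, -3.0])
y = X @ true_w + 0.01 * rng.normal(size=256)

for bs in (len(X), 1, 32):
    print("batch_size=%d ->" % bs, np.round(train_linear_regression(X, y, batch_size=bs), 3))
```

On this convex toy problem all three settings end up near the true weights [2, -3]. What differs is the cost of each step (256, 1, or 32 samples) and how noisy the path is. With batch_size=1 the loop makes 256 updates per epoch instead of one.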

That was the whole book, though I mostly skimmed it without really digesting it.

2. Reading web articles

https://mp.weixin.qq.com/s?__biz=MzA3MzI4MjgzMw==&mid=2650717691&idx=2&sn=3f0b66aa9706aae1a30b01309aa0214c#rd

https://zhuanlan.zhihu.com/p/27642620

https://zhuanlan.zhihu.com/p/23932714

https://www.cnblogs.com/Anita9002/p/9296014.html

3. Setting up a Windows 10 + Python 3.6 + TensorFlow 1.14.0 + OpenCV environment

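There are plenty of online tutorials on setting up the environment; if one doesn't work, try another. The key is that your GPU, GPU driver, CUDA version, Python version, and TensorFlow version must all be compatible with each other. My finished setup: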

```
PS C:\Users\jp\Desktop> nvcc -V
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2018 NVIDIA Corporation
Built on Sat_Aug_25_21:08:04_Central_Daylight_Time_2018
Cuda compilation tools, release 10.0, V10.0.130
```

```
PS C:\Users\jp\Desktop> conda list
# packages in environment at D:\CUDA\anaconda:
#
_ipyw_jlab_nb_ext_conf 0.1.0 py36he6757f0_0
absl-py 0.7.1
alabaster 0.7.10 py36hcd07829_0
anaconda 5.0.0 py36hea9b2fc_0
anaconda-client 1.6.5 py36hd36550c_0
anaconda-navigator 1.6.8 py36h4b7dd57_0
anaconda-project 0.8.0 py36h8b3bf89_0
asn1crypto 0.22.0 py36h8e79faa_1
astor 0.8.0
astroid 1.5.3 py36h9d85297_0
astropy 2.0.2 py36h06391c4_4
babel 2.5.0 py36h35444c1_0
backports 1.0 py36h81696a8_1
backports.shutil_get_terminal_size 1.0.0 py36h79ab834_2
beautifulsoup4 4.6.0 py36hd4cc5e8_1
bitarray 0.8.1 py36h6af124b_0
bkcharts 0.2 py36h7e685f7_0
blaze 0.11.3 py36h8a29ca5_0
bleach 2.0.0 py36h0a7e3d6_0
bokeh 0.12.7 py36h012f572_1
boto 2.48.0 py36h1a776d2_1
bottleneck 1.2.1 py36hd119dfa_0
bzip2 1.0.6 vc14hdec8e7a_1 [vc14]
ca-certificates 2017.08.26 h94faf87_0
cachecontrol 0.12.3 py36hfe50d7b_0
certifi 2017.7.27.1 py36h043bc9e_0
cffi 1.10.0 py36hae3d1b5_1
chardet 3.0.4 py36h420ce6e_1
click 6.7 py36hec8c647_0
cloudpickle 0.4.0 py36h639d8dc_0
clyent 1.2.2 py36hb10d595_1
colorama 0.3.9 py36h029ae33_0
comtypes 1.1.2 py36heb9b3d1_0
conda 4.3.27 py36hcbae3bd_0
conda-build 3.0.22 py36hd71664c_0
conda-env 2.6.0 h36134e3_1
conda-verify 2.0.0 py36h065de53_0
console_shortcut 0.1.1 haa4cab3_2
contextlib2 0.5.5 py36he5d52c0_0
cryptography 2.0.3 py36h123decb_1
curl 7.55.1 vc14hdaba4a4_3 [vc14]
cycler 0.10.0 py36h009560c_0
cython 0.26.1 py36h18049ac_0
cytoolz 0.8.2 py36h547e66e_0
dask 0.15.2 py36h25e1b01_0
dask-core 0.15.2 py36hf9e56b0_0
datashape 0.5.4 py36h5770b85_0
decorator 4.1.2 py36he63a57b_0
distlib 0.2.5 py36h51371be_0
distributed 1.18.3 py36h5be4c3e_0
docutils 0.14 py36h6012d8f_0
entrypoints 0.2.3 py36hfd66bb0_2
et_xmlfile 1.0.1 py36h3d2d736_0
fastcache 1.0.2 py36hffdae1b_0
filelock 2.0.12 py36hd7ddd41_0
flask 0.12.2 py36h98b5e8f_0
flask-cors 3.0.3 py36h8a3855d_0
freetype 2.8 vc14h17c9bdf_0 [vc14]
gast 0.2.2
get_terminal_size 1.0.0 h38e98db_0
gevent 1.2.2 py36h342a76c_0
glob2 0.5 py36h11cc1bd_1
google-pasta 0.1.7
greenlet 0.4.12 py36ha00ad21_0
grpcio 1.23.0
h5py 2.7.0 py36hfbe0a52_1
hdf5 1.10.1 vc14hb361328_0 [vc14]
heapdict 1.0.0 py36h21fa5f4_0
html5lib 0.999999999 py36ha09b1f3_0
icc_rt 2017.0.4 h97af966_0
icu 58.2 vc14hc45fdbb_0 [vc14]
idna 2.6 py36h148d497_1
imageio 2.2.0 py36had6c2d2_0
imagesize 0.7.1 py36he29f638_0
intel-openmp 2018.0.0 hcd89f80_7
ipykernel 4.6.1 py36hbb77b34_0
ipython 6.1.0 py36h236ecc8_1
ipython_genutils 0.2.0 py36h3c5d0ee_0
ipywidgets 7.0.0 py36h2e74ada_0
isort 4.2.15 py36h6198cc5_0
itsdangerous 0.24 py36hb6c5a24_1
jdcal 1.3 py36h64a5255_0
jedi 0.10.2 py36hed927a0_0
jinja2 2.9.6 py36h10aa3a0_1
jpeg 9b vc14h4d7706e_1 [vc14]
jsonschema 2.6.0 py36h7636477_0
jupyter 1.0.0 py36h422fd7e_2
jupyter_client 5.1.0 py36h9902a9a_0
jupyter_console 5.2.0 py36h6d89b47_1
jupyter_core 4.3.0 py36h511e818_0
jupyterlab 0.27.0 py36h34cc53b_2
jupyterlab_launcher 0.4.0 py36h22c3ccf_0
Keras-Applications 1.0.8
Keras-Preprocessing 1.1.0
lazy-object-proxy 1.3.1 py36hd1c21d2_0
libiconv 1.15 vc14h29686d3_5 [vc14]
libpng 1.6.32 vc14hce43e6c_2 [vc14]
libssh2 1.8.0 vc14hcf584a9_2 [vc14]
libtiff 4.0.8 vc14h04e2a1e_10 [vc14]
libxml2 2.9.4 vc14h8fd0f11_5 [vc14]
libxslt 1.1.29 vc14hf85b8d4_5 [vc14]
llvmlite 0.20.0 py36_0
locket 0.2.0 py36hfed976d_1
lockfile 0.12.2 py36h0468280_0
lxml 3.8.0 py36h45350b2_0
Markdown 3.1.1
markupsafe 1.0 py36h0e26971_1
matplotlib 2.0.2 py36h58ba717_1
mccabe 0.6.1 py36hb41005a_1
menuinst 1.4.8 py36h870ab7d_0
mistune 0.7.4 py36h4874169_0
mkl 2018.0.0 h36b65af_4
mkl-service 1.1.2 py36h57e144c_4
mpmath 0.19 py36he326802_2
msgpack-python 0.4.8 py36h58b1e9d_0
multipledispatch 0.4.9 py36he44c36e_0
navigator-updater 0.1.0 py36h8a7b86b_0
nbconvert 5.3.1 py36h8dc0fde_0
nbformat 4.4.0 py36h3a5bc1b_0
networkx 1.11 py36hdf4b0f5_0
nltk 3.2.4 py36hd0e0a39_0
nose 1.3.7 py36h1c3779e_2
notebook 5.0.0 py36h27f7975_1
numba 0.35.0 np113py36_10
numexpr 2.6.2 py36h7ca04dc_1
numpy 1.17.0
numpy 1.13.1 py36haf1bc54_2
numpydoc 0.7.0 py36ha25429e_0
odo 0.5.1 py36h7560279_0
olefile 0.44 py36h0a7bdd2_0
openpyxl 2.4.8 py36hf3b77f6_1
openssl 1.0.2l vc14hcac20b0_2 [vc14]
packaging 16.8 py36ha0986f6_1
pandas 0.20.3 py36hce827b7_2
pandoc 1.19.2.1 hb2460c7_1
pandocfilters 1.4.2 py36h3ef6317_1
partd 0.3.8 py36hc8e763b_0
path.py 10.3.1 py36h3dd8b46_0
pathlib2 2.3.0 py36h7bfb78b_0
patsy 0.4.1 py36h42cefec_0
pep8 1.7.0 py36h0f3d67a_0
pickleshare 0.7.4 py36h9de030f_0
pillow 4.2.1 py36hdb25ab2_0
pip 9.0.1 py36hadba87b_3
pip 19.2.3
pkginfo 1.4.1 py36hb0f9cfa_1
ply 3.10 py36h1211beb_0
progress 1.3 py36hbeca8d3_0
prompt_toolkit 1.0.15 py36h60b8f86_0
protobuf 3.9.1
psutil 5.2.2 py36he84b5ac_0
py 1.4.34 py36ha4aca3a_1
pycodestyle 2.3.1 py36h7cc55cd_0
pycosat 0.6.2 py36hf17546d_1
pycparser 2.18 py36hd053e01_1
pycrypto 2.6.1 py36he68e6e2_1
pycurl 7.43.0 py36h086bf4c_3
pyflakes 1.5.0 py36h56100d8_1
pygments 2.2.0 py36hb010967_0
pylint 1.7.2 py36h091ff97_0
pyodbc 4.0.17 py36h0006bc2_0
pyopenssl 17.2.0 py36h15ca2fc_0
pyparsing 2.2.0 py36h785a196_1
pyqt 5.6.0 py36hb5ed885_5
pysocks 1.6.7 py36h698d350_1
pytables 3.4.2 py36h85b5e71_1
pytest 3.2.1 py36h753b05e_1
python 3.6.2 h6679aeb_11
python-dateutil 2.6.1 py36h509ddcb_1
pytz 2017.2 py36h05d413f_1
pywavelets 0.5.2 py36hc649158_0
pywin32 221 py36h9c10281_0
pyyaml 3.12 py36h1d1928f_1
pyzmq 16.0.2 py36h38c27d9_2
qt 5.6.2 vc14h6f8c307_12 [vc14]
qtawesome 0.4.4 py36h5aa48f6_0
qtconsole 4.3.1 py36h99a29a9_0
qtpy 1.3.1 py36hb8717c5_0
requests 2.18.4 py36h4371aae_1
rope 0.10.5 py36hcaf5641_0
ruamel_yaml 0.11.14 py36h9b16331_2
scikit-image 0.13.0 py36h6dffa3f_1
scikit-learn 0.19.0 py36h294a771_2
scipy 0.19.1 py36h7565378_3
seaborn 0.8.0 py36h62cb67c_0
setuptools 41.2.0
setuptools 36.5.0 py36h65f9e6e_0
simplegeneric 0.8.1 py36heab741f_0
singledispatch 3.4.0.3 py36h17d0c80_0
sip 4.18.1 py36h9c25514_2
six 1.10.0 py36h2c0fdd8_1
snowballstemmer 1.2.1 py36h763602f_0
sortedcollections 0.5.3 py36hbefa0ab_0
sortedcontainers 1.5.7 py36ha90ac20_0
sphinx 1.6.3 py36h9bb690b_0
sphinxcontrib 1.0 py36hbbac3d2_1
sphinxcontrib-websupport 1.0.1 py36hb5e5916_1
spyder 3.2.3 py36h132a2c8_0
sqlalchemy 1.1.13 py36h5948d12_0
sqlite 3.20.1 vc14h7ce8c62_1 [vc14]
statsmodels 0.8.0 py36h6189b4c_0
sympy 1.1.1 py36h96708e0_0
tblib 1.3.2 py36h30f5020_0
tensorboard 1.14.0
tensorflow-estimator 1.14.0rc1
tensorflow-gpu 1.14.0
termcolor 1.1.0
testpath 0.3.1 py36h2698cfe_0
tk 8.6.7 vc14hb68737d_1 [vc14]
toolz 0.8.2 py36he152a52_0
tornado 4.5.2 py36h57f6048_0
traitlets 4.3.2 py36h096827d_0
typing 3.6.2 py36hb035bda_0
unicodecsv 0.14.1 py36h6450c06_0
urllib3 1.22 py36h276f60a_0
vc 14 h2379b0c_1
vs2015_runtime 14.0.25123 hd4c4e62_1
wcwidth 0.1.7 py36h3d5aa90_0
webencodings 0.5.1 py36h67c50ae_1
werkzeug 0.12.2 py36h866a736_0
wheel 0.29.0 py36h6ce6cde_1
widgetsnbextension 3.0.2 py36h364476f_1
win_inet_pton 1.0.1 py36he67d7fd_1
win_unicode_console 0.5 py36hcdbd4b5_0
wincertstore 0.2 py36h7fe50ca_0
wrapt 1.11.1
xlrd 1.1.0 py36h1cb58dc_1
xlsxwriter 0.9.8 py36hdf8fb07_0
xlwings 0.11.4 py36hd3cf94d_0
xlwt 1.3.0 py36h1a4751e_0
yaml 0.1.7 vc14hb31d195_1 [vc14]
zict 0.1.2 py36hbda90c0_0
zlib 1.2.11 vc14h1cdd9ab_1 [vc14]
```

```
PS C:\Users\jp\Desktop> python
Python 3.6.2 |Anaconda, Inc.| (default, Sep 19 2017, 08:03:39) [MSC v.1900 64 bit (AMD64)] on win32
Type "help", "copyright", "credits" or "license" for more information.
>>> import tensorflow as tf
D:\CUDA\anaconda\lib\site-packages\tensorflow\python\framework\dtypes.py:516: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_qint8 = np.dtype([("qint8", np.int8, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorflow\python\framework\dtypes.py:517: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_quint8 = np.dtype([("quint8", np.uint8, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorflow\python\framework\dtypes.py:518: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_qint16 = np.dtype([("qint16", np.int16, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorflow\python\framework\dtypes.py:519: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_quint16 = np.dtype([("quint16", np.uint16, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorflow\python\framework\dtypes.py:520: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_qint32 = np.dtype([("qint32", np.int32, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorflow\python\framework\dtypes.py:525: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  np_resource = np.dtype([("resource", np.ubyte, 1)])
D:\CUDA\anaconda\lib\site-packages\h5py\__init__.py:34: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.
  from ._conv import register_converters as _register_converters
D:\CUDA\anaconda\lib\site-packages\tensorboard\compat\tensorflow_stub\dtypes.py:541: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_qint8 = np.dtype([("qint8", np.int8, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorboard\compat\tensorflow_stub\dtypes.py:542: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_quint8 = np.dtype([("quint8", np.uint8, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorboard\compat\tensorflow_stub\dtypes.py:543: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_qint16 = np.dtype([("qint16", np.int16, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorboard\compat\tensorflow_stub\dtypes.py:544: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_quint16 = np.dtype([("quint16", np.uint16, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorboard\compat\tensorflow_stub\dtypes.py:545: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  _np_qint32 = np.dtype([("qint32", np.int32, 1)])
D:\CUDA\anaconda\lib\site-packages\tensorboard\compat\tensorflow_stub\dtypes.py:550: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  np_resource = np.dtype([("resource", np.ubyte, 1)])
>>> hello=tf.constant("Hello,Tensorflow!")
>>> sess=tf.Session()
2019-08-28 19:52:04.430777: I tensorflow/stream_executor/platform/default/dso_loader.cc:42] Successfully opened dynamic library nvcuda.dll
2019-08-28 19:52:04.620324: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1640] Found device 0 with properties:
name: GeForce GTX 750 Ti major: 5 minor: 0 memoryClockRate(GHz): 1.15
pciBusID: 0000:01:00.0
2019-08-28 19:52:04.626042: I tensorflow/stream_executor/platform/default/dlopen_checker_stub.cc:25] GPU libraries are statically linked, skip dlopen check.
2019-08-28 19:52:04.638772: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1763] Adding visible gpu devices: 0
2019-08-28 19:52:04.654001: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2
2019-08-28 19:52:04.670785: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1640] Found device 0 with properties:
name: GeForce GTX 750 Ti major: 5 minor: 0 memoryClockRate(GHz): 1.15
pciBusID: 0000:01:00.0
2019-08-28 19:52:04.679668: I tensorflow/stream_executor/platform/default/dlopen_checker_stub.cc:25] GPU libraries are statically linked, skip dlopen check.
2019-08-28 19:52:04.684111: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1763] Adding visible gpu devices: 0
2019-08-28 19:56:01.856043: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1181] Device interconnect StreamExecutor with strength 1 edge matrix:
2019-08-28 19:56:02.554806: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1187] 0
2019-08-28 19:56:02.556662: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1200] 0: N
2019-08-28 19:56:02.602271: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1326] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 1380 MB memory) -> physical GPU (device: 0, name: GeForce GTX 750 Ti, pci bus id: 0000:01:00.0, compute capability: 5.0)
>>> print(sess.run(hello))
b'Hello,Tensorflow!'
>>>
```

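Running an example then failed with the error `anaconda:no module named cv2`.

I had to download the OpenCV wheel matching my Python version from https://www.lfd.uci.edu/~gohlke/pythonlibs/#opencv (Christoph Gohlke's unofficial Windows binaries, not an official OpenCV site). I put it into D:\CUDA\anaconda\Lib\site-packages, opened the Anaconda Prompt, and installed it with `pip install xxx.whl`. Copying the file into site-packages is not actually required: pip can install a .whl file from any directory if you give it the path.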
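To double-check that everything installed above can be imported from the same Python, a small script like the one below can help. It is a sketch of my own that only reads each module's `__version__` attribute. The module list is also my choice; `cv2` is the import name of OpenCV's Python bindings.

```python
import importlib


def package_versions(module_names):
    """Map each module name to its __version__, or None if it cannot be imported."""
    versions = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
        except Exception:  # missing package or a broken install (e.g. a DLL load failure)
            versions[name] = None
        else:
            versions[name] = getattr(module, "__version__", "unknown")
    return versions


if __name__ == "__main__":
    for name, version in package_versions(["numpy", "tensorflow", "cv2"]).items():
        print(name, version if version is not None else "NOT importable")
```

For the GPU itself, TensorFlow 1.x also provides `tf.test.is_gpu_available()`, although the session log above already shows the GTX 750 Ti being found.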

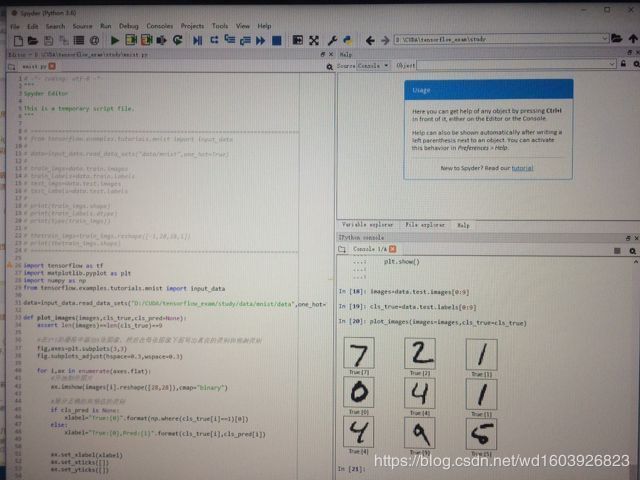

4. Verifying the setup by running an example copied as-is

https://blog.csdn.net/u014038273/article/details/77963501

5. Reading, doing, and thinking at the same time

I think the blogger behind these posts writes very well, and I recommend reading them: https://www.cnblogs.com/wanyu416/category/1289418.html

I followed his posts step by step, reading, running the code, and thinking as I went, especially this one: https://www.cnblogs.com/wanyu416/p/9009985.html

```python
"""
Created on Tue Sep 3 14:15:55 2019

https://www.cnblogs.com/wanyu416/p/8954098.html
https://www.cnblogs.com/wanyu416/p/9009985.html

@author: jp
"""

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (this post uses 1.14.0)

# Constant and tensor declaration
# Constants
m1 = tf.constant([[3, 3]])
m2 = tf.constant([[2], [3]])
print(m1)
# Tensor: the result of an op
product = tf.matmul(m1, m2)
print(product)

# Variables
x = tf.Variable([1, 2])
print(x)
a = tf.Variable([3, 3])
# Ops produce tensors
sub = tf.subtract(x, a)
print(sub)
add = tf.add(x, sub)

# Initialize all variables
init = tf.global_variables_initializer()

# Create a session:
# ops and tensors only return concrete values when run inside a session
with tf.Session() as sess:
    sess.run(init)
    print(sess.run(sub))
    print(sess.run(add))
    print(sess.run(product))

# Add 1 to a counter variable 5 times
n = tf.Variable(0, name='counter')
newvalue = tf.add(n, 1)
update = tf.assign(n, newvalue)
init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    print(sess.run(n))
    for i in range(5):
        # Calling sess.run(newvalue) first would be redundant:
        # running the update op automatically evaluates newvalue
        sess.run(update)
        print(sess.run(n))

# Run more than one op in a single call
input1 = tf.constant(3.0)
input2 = tf.constant(2.0)
input3 = tf.constant(5.0)
add = tf.add(input2, input3)
mul = tf.multiply(input1, add)
with tf.Session() as sess:
    result = sess.run([mul, add])  # results come back in the order of the fetch list
    result2 = sess.run([add, mul])
    print(result)
    print(result2)

# A placeholder defines an input without a concrete value;
# the real value is fed in when the op is run in a session
input1 = tf.placeholder(tf.float32)  # placeholder() needs a dtype
input2 = tf.placeholder(tf.float32)
output = tf.multiply(input1, input2)
# Feed the values as a dictionary via feed_dict
with tf.Session() as sess:
    print(sess.run(output, feed_dict={input1: 8.0, input2: 2.0}))

# Normally distributed random numbers: shape 2x3, stddev 2, mean 0, random seed 1
w = tf.Variable(tf.random_normal([2, 3], stddev=2, mean=0, seed=1))
# Truncated normal: values more than 2 stddevs from the mean are dropped and re-drawn
w = tf.Variable(tf.truncated_normal([2, 3], stddev=2, mean=0, seed=1))
# Uniform samples from [minval, maxval): minval is included, maxval is not
w = tf.Variable(tf.random_uniform([2, 3], minval=0, maxval=1, dtype=tf.float32, seed=1))
allzero = tf.zeros([3, 2], tf.int32)  # [[0,0],[0,0],[0,0]]
allone = tf.ones([3, 2], tf.int32)    # [[1,1],[1,1],[1,1]]
all6 = tf.fill([3, 2], 6)             # [[6,6],[6,6],[6,6]]
constvalues = tf.constant([3, 2, 1])  # [3,2,1]

# Simulating forward propagation
# Input defined with a placeholder, feeding ONE sample in sess.run; the features are volume and weight.
# A placeholder needs a dtype and can take a shape: one sample with two features gives shape (1, 2)
x = tf.placeholder(tf.float32, shape=(1, 2))
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))  # weights
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))
a = tf.matmul(x, w1)  # matrix multiplication op
y = tf.matmul(a, w2)
with tf.Session() as sess:
    init = tf.global_variables_initializer()  # initializing here makes it hard to forget
    sess.run(init)
    # feed_dict receives a 1x2 matrix; mind the rank of the tensor.
    # Only y is fetched: running y also runs the op that produces a.
    print("y is", sess.run(y, feed_dict={x: [[0.7, 0.5]]}))

# Input defined with a placeholder, feeding SEVERAL samples in sess.run
# Define inputs and parameters.
# The number of samples is unknown but there are still two features,
# so shape=(None, 2) (note that None is capitalized)
x = tf.placeholder(tf.float32, shape=(None, 2))
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))
# Define the forward pass
a = tf.matmul(x, w1)
y = tf.matmul(a, w2)
# Compute the result in a session
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    print("y is:", sess.run(y, feed_dict={x: [[0.7, 0.5],
                                              [0.2, 0.3],
                                              [0.3, 0.4],
                                              [0.4, 0.5]]}))  # input data: a 4x2 matrix

# Backpropagation: make the loss on the training set as small as possible
# =============================================================================
# Backpropagation training takes reducing the loss as its optimization goal.
# Common optimizers are plain gradient descent, momentum, and Adam.
# In TensorFlow they are written as:
# train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)
# train_step = tf.train.MomentumOptimizer(learning_rate, momentum).minimize(loss)
# train_step = tf.train.AdamOptimizer(learning_rate).minimize(loss)
# =============================================================================

# A simulated training run
# Train for 3000 steps, printing the loss every 500 steps
BATCH_SIZE = 8  # samples fed to the network at once form a batch; don't feed too many at once
SEED = 23455    # fixed seed so the random numbers are reproducible
# Generating random numbers from a seed is a common technique worth mastering
rdm = np.random.RandomState(SEED)
# A random 32x2 matrix: 32 samples of (volume, weight) as the input data set.
# There is no real data set here, hence the random data.
X = rdm.rand(32, 2)
# For each row of X: label 1 if x0 + x1 < 1, otherwise 0 (an artificial rule).
# These serve as the labels (ground truth) of the input data set.
Y_ = [[int(x0 + x1 < 1)] for (x0, x1) in X]
print("X:\n", X)
print("Y_:\n", Y_)

# 1. Define the network's inputs, parameters and output, and the forward pass.
x = tf.placeholder(tf.float32, shape=(None, 2))
y_ = tf.placeholder(tf.float32, shape=(None, 1))
w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))
a = tf.matmul(x, w1)
y = tf.matmul(a, w2)

# 2. Define the loss function and the backpropagation method.
loss = tf.reduce_mean(tf.square(y - y_))  # mean squared error
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss)  # pick any one of the three optimizers
# train_step = tf.train.MomentumOptimizer(0.001, 0.9).minimize(loss)
# train_step = tf.train.AdamOptimizer(0.001).minimize(loss)

# 3. Create a session and train for STEPS steps
with tf.Session() as sess:
    init = tf.global_variables_initializer()
    sess.run(init)
    # Print the current (untrained) parameter values.
    print("w1:\n", sess.run(w1))
    print("w2:\n", sess.run(w2))
    print("\n")

    # Train the model.
    STEPS = 3000
    for i in range(STEPS):  # i = 0 .. 2999
        # i=0: start=0, end=8; i=1: 8..16; i=2: 16..24; i=3: 24..32; i=4: back to 0..8.
        # So each step trains on 8 samples, 3000 steps in total.
        start = (i * BATCH_SIZE) % 32
        end = start + BATCH_SIZE
        sess.run(train_step, feed_dict={x: X[start:end], y_: Y_[start:end]})
        if i % 500 == 0:
            total_loss = sess.run(loss, feed_dict={x: X, y_: Y_})
            print("After %d training step(s), loss on all data is %g" % (i, total_loss))

    # Print the trained parameters.
    print("\n")
    print("w1:\n", sess.run(w1))
    print("w2:\n", sess.run(w2))
```

I especially recommend https://www.cnblogs.com/wanyu416/p/9012405.html. Sadly, the blogger stopped updating after that. I really admire this blogger; the explanations are excellent.
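If you don't have TensorFlow 1.x at hand, the training example above can be mirrored in plain NumPy. This is my own sketch of what `minimize(loss)` does for this particular two-layer linear network, not the blogger's code: a forward pass, hand-derived gradients via the chain rule, and a gradient-descent update. The data recipe, batch size, learning rate, and step count match the script. The initial weights come from NumPy, though, so the printed numbers will differ from the TensorFlow run.

```python
import numpy as np

BATCH_SIZE = 8
SEED = 23455
STEPS = 3000
LR = 0.001

# Same toy data recipe as the TensorFlow script: 32 samples, label 1 if x0 + x1 < 1.
rdm = np.random.RandomState(SEED)
X = rdm.rand(32, 2)
Y_ = np.array([[int(x0 + x1 < 1)] for (x0, x1) in X], dtype=np.float64)

# Initial weights come from NumPy, so they do NOT match tf.random_normal(..., seed=1).
init_rng = np.random.RandomState(1)
w1 = init_rng.normal(0.0, 1.0, size=(2, 3))
w2 = init_rng.normal(0.0, 1.0, size=(3, 1))


def forward(x, w1, w2):
    """Two matrix multiplications with no activation, like the TF example."""
    a = x @ w1
    return a, a @ w2


def mse(y, y_):
    return np.mean((y - y_) ** 2)


initial_loss = mse(forward(X, w1, w2)[1], Y_)
for i in range(STEPS):
    start = (i * BATCH_SIZE) % 32
    end = start + BATCH_SIZE
    x, y_ = X[start:end], Y_[start:end]
    a, y = forward(x, w1, w2)
    # Backpropagation by hand (chain rule); all gradients are computed before any update.
    dy = 2.0 * (y - y_) / y.size  # d(mean of squared errors)/dy
    dw2 = a.T @ dy                # d loss / d w2
    da = dy @ w2.T                # d loss / d a
    dw1 = x.T @ da                # d loss / d w1
    # Plain gradient-descent update, as GradientDescentOptimizer(LR).minimize(loss) applies it.
    w1 -= LR * dw1
    w2 -= LR * dw2
    if i % 500 == 0:
        total_loss = mse(forward(X, w1, w2)[1], Y_)
        print("After %d training step(s), loss on all data is %g" % (i, total_loss))

final_loss = mse(forward(X, w1, w2)[1], Y_)
print("initial loss %g, final loss %g" % (initial_loss, final_loss))
```

Writing the gradients out by hand shows why TensorFlow only needs `loss` to be defined. The optimizer derives the same chain-rule products automatically for every trainable variable.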