Hadoop depends on a JDK; I use JDK 8 here.

JDK version: jdk1.8.0_151

Hadoop version: hadoop-2.5.0-cdh5.3.6

Hadoop download: https://pan.baidu.com/s/1qZNeVFm (password: ciln)

JDK download: https://pan.baidu.com/s/1qZLddl6 (password: c9w3)

Once everything is ready, start the installation.

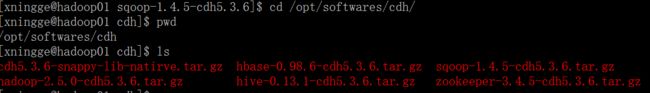

1. Upload the Hadoop and JDK packages to this directory on the Linux system: /opt/softwares/cdh

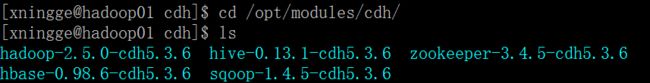

2. Extract the Hadoop and JDK packages into /opt/modules/cdh/

Extraction commands:

tar -zxvf hadoop-2.5.0-cdh5.3.6.tar.gz -C /opt/modules/cdh/

tar -zxvf jdk-8u151-linux-x64.tar.gz -C /opt/modules/cdh
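The two extraction commands can be wrapped in a small script. This is a minimal sketch, assuming the archives sit in /opt/softwares/cdh as in step 1; the `extract_to` helper and the `SRC_DIR`/`DEST_DIR` variables are hypothetical names, and an archive that cannot be found is skipped rather than treated as an error.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a .tar.gz archive into a target directory,
# creating the directory first if it does not exist.
extract_to() {
  local archive="$1" target="$2"
  if [ ! -f "$archive" ]; then
    echo "skip: $archive not found" >&2
    return 1
  fi
  mkdir -p "$target" && tar -zxf "$archive" -C "$target"
}

# Paths from steps 1 and 2; override them through the environment if yours differ.
SRC_DIR="${SRC_DIR:-/opt/softwares/cdh}"
DEST_DIR="${DEST_DIR:-/opt/modules/cdh}"

for pkg in hadoop-2.5.0-cdh5.3.6.tar.gz jdk-8u151-linux-x64.tar.gz; do
  extract_to "$SRC_DIR/$pkg" "$DEST_DIR" || true
done
```

Creating the target directory up front avoids the error `tar -C` raises when the directory is missing.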

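3. Configure the JDK environment variables

Set them in /etc/profile. JAVA_HOME must point at the directory where the JDK was actually unpacked; with the commands in step 2 that is /opt/modules/cdh/jdk1.8.0_151, so adjust the path below if yours differs.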

3.1 sudo vi /etc/profile

==========================================================================

#JAVA_HOME#

export JAVA_HOME=/opt/modules/jdk1.8.0_151

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

==========================================================================

3.2 source /etc/profile

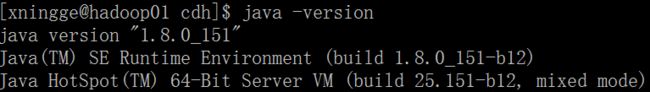

4. Check that Java was installed successfully

4.1 java -version
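Beyond `java -version`, it can help to confirm that JAVA_HOME itself points at a real JDK, since Hadoop's scripts read that variable rather than PATH. A minimal sketch; `is_jdk_home` is a hypothetical helper name, not part of the JDK or Hadoop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the given directory looks like a JDK,
# i.e. it contains an executable bin/java.
is_jdk_home() {
  [ -n "$1" ] && [ -x "$1/bin/java" ]
}

if is_jdk_home "${JAVA_HOME:-}"; then
  echo "JAVA_HOME OK: $JAVA_HOME"
  "$JAVA_HOME/bin/java" -version
else
  echo "JAVA_HOME is unset or does not contain bin/java: '${JAVA_HOME:-}'" >&2
fi
```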

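5. Hadoop configuration

5.1 Delete share/doc under the Hadoop directory (hadoop/share/doc); it only holds documentation, so removing it just frees disk space

5.2 Edit the configuration files

Three *-env.sh files (hadoop-env.sh, mapred-env.sh, yarn-env.sh); set JAVA_HOME in each: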

export JAVA_HOME=/opt/modules/jdk1.8.0_151
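Editing three files by hand is easy to get wrong, so the change can be scripted. The sketch below assumes it is run from the Hadoop install directory with the configuration under etc/hadoop, and that GNU sed is available; `set_java_home` and `CONF_DIR` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure FILE has an active "export JAVA_HOME=..."
# line pointing at JAVA_HOME_DIR.
set_java_home() {
  local file="$1" java_home_dir="$2"
  if grep -q '^export JAVA_HOME=' "$file"; then
    # Rewrite the existing active line in place (GNU sed syntax).
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home_dir}|" "$file"
  else
    # No active line yet (for example it is commented out): append one.
    echo "export JAVA_HOME=${java_home_dir}" >> "$file"
  fi
}

# Assumption: configs live in etc/hadoop relative to the current directory.
CONF_DIR="${CONF_DIR:-etc/hadoop}"
for name in hadoop-env.sh mapred-env.sh yarn-env.sh; do
  if [ -f "$CONF_DIR/$name" ]; then
    set_java_home "$CONF_DIR/$name" /opt/modules/jdk1.8.0_151
  fi
done
```

Running it twice is harmless: the second run rewrites the same line instead of appending a duplicate.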

Four *-site.xml files (core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml)

core-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml
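The contents of the four files are not shown here, so the following is only a guess at a typical single-node setup, not the author's actual configuration. It generates minimal files into a scratch directory for review. Assumptions, all mine: the NameNode listens on port 8020, `hadoop.tmp.dir` lives under the Hadoop install directory, replication is 1, and `site_xml`, `HADOOP_HOST` and `OUT_DIR` are hypothetical names. Values are written verbatim without XML escaping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a minimal Hadoop configuration file built from
# name/value pairs given as alternating arguments.
site_xml() {
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  while [ "$#" -ge 2 ]; do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
    shift 2
  done
  echo '</configuration>'
}

# Assumed values: hostname taken from the slaves file, port and paths are guesses.
HADOOP_HOST="${HADOOP_HOST:-hadoop01.xningge.com}"
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"   # scratch directory; review, then copy to etc/hadoop

site_xml fs.defaultFS "hdfs://$HADOOP_HOST:8020" \
         hadoop.tmp.dir /opt/modules/cdh/hadoop-2.5.0-cdh5.3.6/data/tmp > "$OUT_DIR/core-site.xml"
site_xml dfs.replication 1 > "$OUT_DIR/hdfs-site.xml"
site_xml mapreduce.framework.name yarn > "$OUT_DIR/mapred-site.xml"
site_xml yarn.resourcemanager.hostname "$HADOOP_HOST" \
         yarn.nodemanager.aux-services mapreduce_shuffle > "$OUT_DIR/yarn-site.xml"
echo "wrote example configs to $OUT_DIR"
```

Writing to a scratch directory first keeps the existing etc/hadoop files untouched until the generated ones have been checked.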

One slaves file, listing one worker hostname per line:

hadoop01.xningge.com

6. Format the NameNode

$ bin/hdfs namenode -format

7. Start the services

$ sbin/hadoop-daemon.sh start namenode

$ sbin/hadoop-daemon.sh start datanode

$ sbin/hadoop-daemon.sh start secondarynamenode

$ sbin/mr-jobhistory-daemon.sh start historyserver

$ sbin/yarn-daemon.sh start resourcemanager

$ sbin/yarn-daemon.sh start nodemanager
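After starting the six daemons, `jps` (shipped with the JDK) lists the running Java processes, which gives a quick way to see whether any of them failed to come up. A minimal sketch; `missing_daemons` is a hypothetical helper that reads `jps` output and prints the daemon names it does not find.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name that does not appear in it.
missing_daemons() {
  local running d
  running="$(awk '{print $2}')"   # second column of jps output is the class name
  for d in NameNode DataNode SecondaryNameNode JobHistoryServer ResourceManager NodeManager; do
    echo "$running" | grep -qx "$d" || echo "$d"
  done
}

if command -v jps >/dev/null 2>&1; then
  missing="$(jps | missing_daemons)"
  if [ -z "$missing" ]; then
    echo "all six daemons are running"
  else
    echo "not running: $missing"
  fi
else
  echo "jps not found; is \$JAVA_HOME/bin on PATH?" >&2
fi
```

If a daemon is reported as not running, its log file under the Hadoop logs directory is the first place to look.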

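Once passwordless SSH login is configured, the wrapper scripts can be used instead of starting each daemon by hand. start-all.sh is deprecated in Hadoop 2.x and simply runs the other two, so use either the first two scripts or the third. The JobHistory server still has to be started separately.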

$ sbin/start-dfs.sh

$ sbin/start-yarn.sh

$ sbin/start-all.sh
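Passwordless SSH itself is not covered above. One common way to set it up is sketched below, assuming OpenSSH with `ssh-keygen` and `ssh-copy-id` installed; `setup_passwordless_ssh` is a hypothetical helper name, and the call is left commented out because it modifies ~/.ssh and prompts for the account password once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair without a passphrase (if none
# exists yet) and authorize it on the given host.
setup_passwordless_ssh() {
  local host="$1" key="${2:-$HOME/.ssh/id_rsa}"
  mkdir -p "$(dirname "$key")" && chmod 700 "$(dirname "$key")"
  [ -f "$key" ] || ssh-keygen -t rsa -N "" -f "$key" -q
  ssh-copy-id -i "$key.pub" "$host"
}

# Example call for the single node used in this post:
# setup_passwordless_ssh hadoop01.xningge.com
```

Afterwards `ssh hadoop01.xningge.com` should log in without asking for a password, which is what the start scripts rely on.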

8. Basic tests

$ bin/hdfs dfs -mkdir -p /user/xningge/mapreduce/input

$ bin/hdfs dfs -put /opt/datas/wc.input /user/xningge/mapreduce/input

$ bin/hdfs dfs -get /user/xningge/mapreduce/input/wc.input /

$ bin/hdfs dfs -cat /user/xningge/mapreduce/input/wc.input

9. Run a simple job (the output directory must not exist beforehand, otherwise the job fails)

$ bin/yarn jar share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount /user/xningge/mapreduce/input /user/xningge/mapreduce/output
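To sanity-check the job's result, the same count can be computed locally with standard tools and compared with the job output. This is a rough sketch: `local_wordcount` is a hypothetical helper, the sample text is made up rather than the real wc.input, and it splits on whitespace only, which approximates what the wordcount example does.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count whitespace-separated words on stdin and print
# "word<TAB>count" lines sorted by word in byte order.
local_wordcount() {
  tr -s '[:space:]' '\n' | grep -v '^$' | LC_ALL=C sort | uniq -c | awk '{print $2 "\t" $1}'
}

# Example with inline sample text (made-up data).
printf 'hadoop yarn\nhadoop hdfs\n' | local_wordcount

# To compare against the job, assuming a single reducer wrote part-r-00000:
#   bin/hdfs dfs -cat /user/xningge/mapreduce/output/part-r-00000
```

For the real check, feed the same file that was uploaded: `local_wordcount < /opt/datas/wc.input`.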