从零开始丨INDEMIND双目视觉惯性模组ROS平台ORB-SLAM教程及记录

本文涉及很多代码及文字,排版、文字错误请见谅。

阅读时间预计30分钟

本文涉及图像、数据均由INDEMIND双目视觉惯性模组采集

文章涉及所有代码修改皆已上传至Github,大家自行修改后可对比参照

下载链接:Github

文章目录

- 一:环境介绍

- 二:下载SDK及ORB-SLAM源码

- 三:修改源码

- 1 . 源码路径

- 2 . 修改stereo_euroc.cc文件

- 1)将其橙色区域改成

- 2)添加时间转换函数

- 3)删除LoadImages函数

- 4)添加回调函数imagelCallback

- 5)添加show_ORB函数

- 6)ros初始化

- 7)删除 LoadImages函数

- 3 . 新建FileYaml.h文件

- 4 . 新建FileYaml.cc文件

- 5 . 修改CMakeLists.txt文件

- 1)

- 2)

- 3)

- 4)

- 5)

- 6. 编译

- 1)

- 7. 执行

- 1)

- 2)

- 3)

一:环境介绍

系统:Ubuntu 16.04 ROS

ORB依赖库:Pangolin、OpenCV、Eigen3、DBoW2、g2o,ros

二:下载SDK及ORB-SLAM源码

下载地址:

SDK:http://indemind.cn/sdk.html

ORB-SLAM:https://github.com/raulmur/ORB_SLAM2

三:修改源码

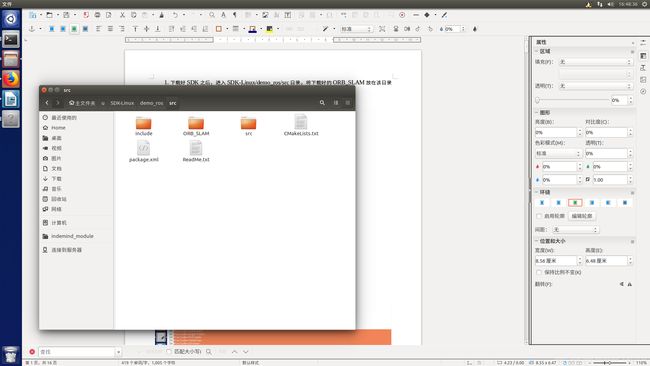

1 . 源码路径

下载好SDK之后,进入SDK-Linux/demo_ros/src目录。将下载好源码的放在该目录下,并对其改名,改为 ORB_SLAM

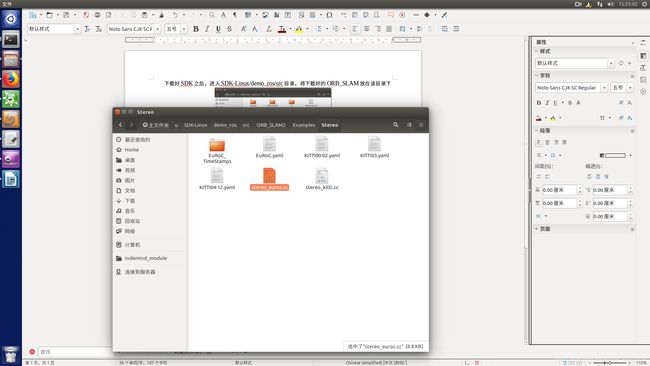

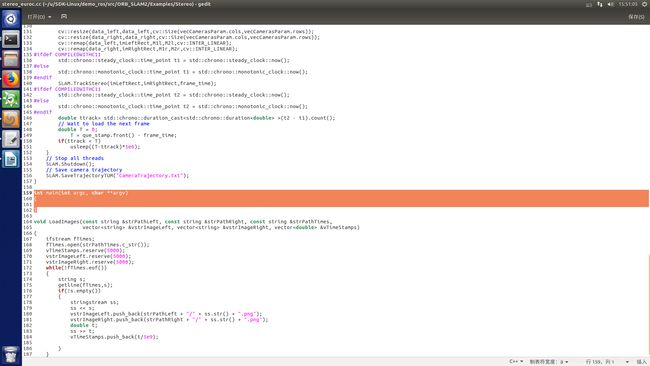

2 . 修改stereo_euroc.cc文件

进入ORB_SLAM2/Examples/Stereo目录下,修改stereo_euroc.cc文件。

1)将其橙色区域改成

代码如下:

#include

#include

#include

#include

#include

#include

#include

#include "ros/ros.h"

#include

#include

#include "std_msgs/String.h"

#include "FileYaml.h"

#include

#include

#include

#include

using namespace std;

ros::Subscriber image_l_sub;

ros::Subscriber image_r_sub;

int image_l_count = 0;

queue que_image_l;

queue que_image_r;

queue que_stamp;

std::mutex limage_mutex;

std::mutex rimage_mutex;

std::condition_variable left_data_cond;

std::condition_variable right_data_cond;

// std::lock_guardlock(rimage_mutex);

// std::thread show_thread{show_image}; //visualization thread

// show_thread.detach();

cv::Mat imLeft;

cv::Mat imRight;

ros::Time ros_stamp;

long double stamp;

修改后如下:

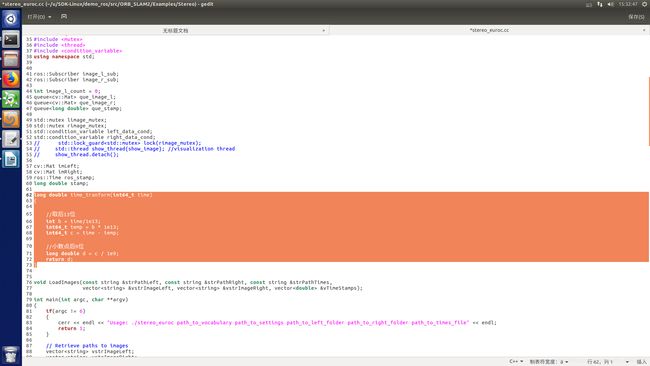

2)添加时间转换函数

在代码,long double stamp;后添加时间转换函数

long double time_tranform(int64_t time)

{

//取后13位

int b = time/1e13;

int64_t temp = b * 1e13;

int64_t c = time - temp;

//小数点后9位

long double d = c / 1e9;

return d;

}

修改后如下:

3)删除LoadImages函数

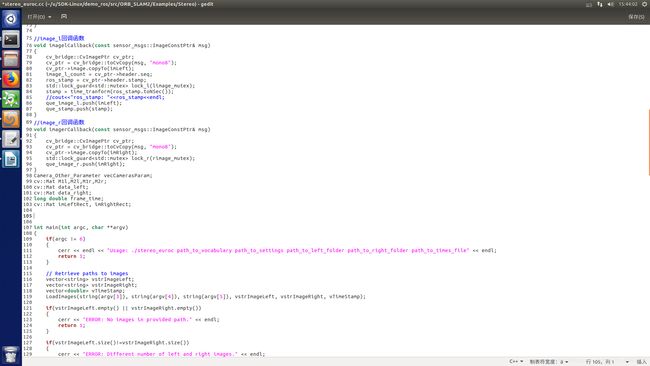

4)添加回调函数imagelCallback

在time_tranform和main函数之间添加左图回调函数imagelCallback,添加右图回调函数imagerCallback,以及一些变量的定义

//image_l回调函数

void imagelCallback(const sensor_msgs::ImageConstPtr&msg)

{

cv_bridge::CvImagePtr cv_ptr;

cv_ptr = cv_bridge::toCvCopy(msg, "mono8");

cv_ptr->image.copyTo(imLeft);

image_l_count = cv_ptr->header.seq;

ros_stamp = cv_ptr->header.stamp;

std::lock_guard lock_l(limage_mutex);

stamp = time_tranform(ros_stamp.toNSec());

//cout<<"ros_stamp: "<image.copyTo(imRight);

std::lock_guard lock_r(rimage_mutex);

que_image_r.push(imRight);

}

Camera_Other_Parameter vecCamerasParam;

cv::Mat M1l,M2l,M1r,M2r;

cv::Mat data_left;

cv::Mat data_right;

long double frame_time;

cv::Mat imLeftRect, imRightRect;

5)添加show_ORB函数

在cv::Mat imLeftRect, imRightRect;之后,添加show_ORB函数,用于获取图像数据和时间,以及启动ORB的功能

void show_ORB()

{

//-----------------ORB_SLAM2 Init---------------------------------------------

const string param1_ORBvoc = "Vocabulary/ORBvoc.txt";

const string param3_ORByaml = "Examples/Stereo/EuRoC.yaml";

ORB_SLAM2::System SLAM(param1_ORBvoc,param3_ORByaml,ORB_SLAM2::System::STEREO,true);

std::this_thread::sleep_for(std::chrono::milliseconds(500));

while(true)

{

std::this_thread::sleep_for(std::chrono::milliseconds(30));

std::unique_lock lock_l(limage_mutex);

data_left = que_image_l.front();

frame_time = que_stamp.front();

que_image_l.pop();

que_stamp.pop();

lock_l.unlock();

std::unique_lock lock_r(rimage_mutex);

data_right = que_image_r.front();

que_image_r.pop();

lock_r.unlock();

// cout.precision(13);

// cout<<"frame: "< >(t2 - t1).count();

// Wait to load the next frame

double T = 0;

T = que_stamp.front() - frame_time;

if(ttrack < T)

usleep((T-ttrack)*1e6);

/*

cv::imshow("left",data_left);

cv::imshow("right",data_right);

cv::waitKey(1);*/

}

// Stop all threads

SLAM.Shutdown();

// Save camera trajectory

SLAM.SaveTrajectoryTUM("CameraTrajectory.txt");

修改后如下:

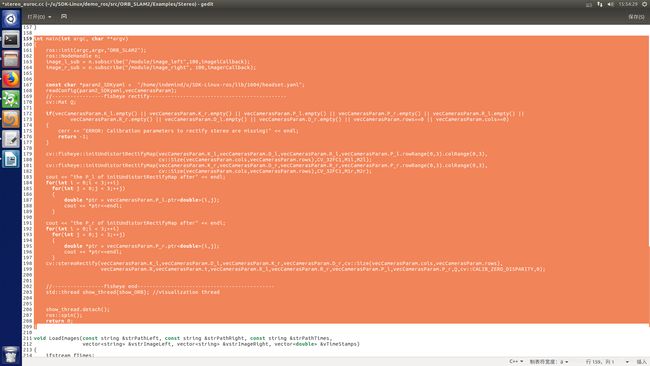

6)ros初始化

将main函数内的内容全部删除,在main函数内添加ros初始化,读取配置文件,去畸变,获取参数,校正,ORB_SLAM的启动

ros::init(argc,argv,"ORB_SLAM2");

ros::NodeHandle n;

image_l_sub = n.subscribe("/module/image_left",100,imagelCallback);

image_r_sub = n.subscribe("/module/image_right", 100,imagerCallback);

const char *param2_SDKyaml = "/home/indemind/u/SDK-Linux-ros/lib/1604/headset.yaml";

readConfig(param2_SDKyaml,vecCamerasParam);

//-----------------fisheye rectify---------------------------------------------

cv::Mat Q;

if(vecCamerasParam.K_l.empty() || vecCamerasParam.K_r.empty() || vecCamerasParam.P_l.empty() || vecCamerasParam.P_r.empty() || vecCamerasParam.R_l.empty() ||

vecCamerasParam.R_r.empty() || vecCamerasParam.D_l.empty() || vecCamerasParam.D_r.empty() || vecCamerasParam.rows==0 || vecCamerasParam.cols==0)

{

cerr << "ERROR: Calibration parameters to rectify stereo are missing!" << endl;

return -1;

}

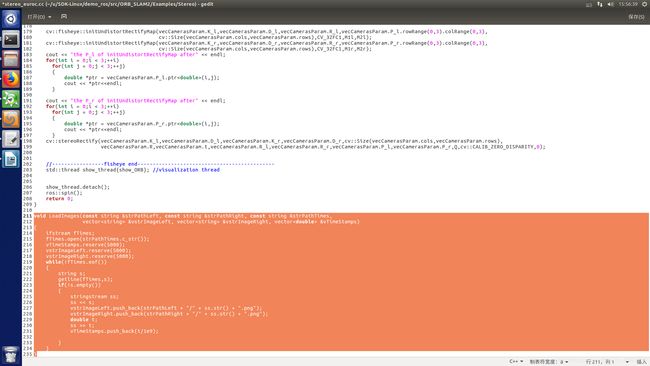

cv::fisheye::initUndistortRectifyMap(vecCamerasParam.K_l,vecCamerasParam.D_l,vecCamerasParam.R_l,vecCamerasParam.P_l.rowRange(0,3).colRange(0,3),

cv::Size(vecCamerasParam.cols,vecCamerasParam.rows),CV_32FC1,M1l,M2l);

cv::fisheye::initUndistortRectifyMap(vecCamerasParam.K_r,vecCamerasParam.D_r,vecCamerasParam.R_r,vecCamerasParam.P_r.rowRange(0,3).colRange(0,3),

cv::Size(vecCamerasParam.cols,vecCamerasParam.rows),CV_32FC1,M1r,M2r);

cout << "the P_l of initUndistortRectifyMap after" << endl;

for(int i = 0;i < 3;++i)

for(int j = 0;j < 3;++j)

{

double *ptr = vecCamerasParam.P_l.ptr(i,j);

cout << *ptr<(i,j);

cout << *ptr< 7)删除 LoadImages函数

3 . 新建FileYaml.h文件

进入SDK-Linux/demo_ros/src/ORB_SLAM2/include目录下,新建FileYaml.h文件,将下列代码填入文件:

#ifndef _FILEYAML_H

#define _FILEYAML_H

#include

#include

using namespace std;

#include

#include

#include

#include

#include

#include

#include

#include

#include

using namespace cv;

using namespace Eigen;

using namespace Sophus;

using cv::FileStorage;

using cv::FileNode;

struct Camera

{

Eigen::Matrix4d T_SC;

Eigen::Vector2d imageDimension;

Eigen::VectorXd distortionCoefficients;

Eigen::Vector2d focalLength;

Eigen::Vector2d principalPoint;

std::string distortionType;

Camera() :

T_SC(Eigen::Matrix4d::Identity()),

imageDimension(Eigen::Vector2d(1280, 800)),

distortionCoefficients(Eigen::Vector2d(0, 0)),

focalLength(Eigen::Vector2d(700, 700)),

principalPoint(Eigen::Vector2d(640, 400)),

distortionType("equidistant")

{

}

void write(FileStorage& fs) const //Write serialization for this class

{

fs << "{:";

fs << "T_SC";

fs << "[:";

for (int i = 0; i < 4; i++)

{

for (int j = 0; j < 4; j++)

{

fs << T_SC(i, j);

}

}

fs << "]";

fs << "image_dimension";

fs << "[:";

fs << imageDimension(0) << imageDimension(1);

fs << "]";

fs << "distortion_coefficients";

fs << "[:";

for (int i = 0; i < distortionCoefficients.rows(); i++)

{

fs << distortionCoefficients(i);

}

fs << "]";

fs << "distortion_type" << distortionType;

fs << "focal_length";

fs << "[:";

fs << focalLength(0) << focalLength(1);

fs << "]";

fs << "principal_point";

fs << "[:";

fs << principalPoint(0) << principalPoint(1);

fs << "]";

fs << "}";

}

void read(const FileNode& node) //Read serialization for this class

{

cv::FileNode T_SC_node = node["T_SC"];

cv::FileNode imageDimensionNode = node["image_dimension"];

cv::FileNode distortionCoefficientNode = node["distortion_coefficients"];

cv::FileNode focalLengthNode = node["focal_length"];

cv::FileNode principalPointNode = node["principal_point"];

// extrinsics

T_SC << T_SC_node[0], T_SC_node[1], T_SC_node[2], T_SC_node[3], T_SC_node[4], T_SC_node[5], T_SC_node[6], T_SC_node[7], T_SC_node[8], T_SC_node[9], T_SC_node[10], T_SC_node[11], T_SC_node[12], T_SC_node[13], T_SC_node[14], T_SC_node[15];

imageDimension << imageDimensionNode[0], imageDimensionNode[1];

distortionCoefficients.resize(distortionCoefficientNode.size());

for (size_t i = 0; i 注:该文件为读取文件的头文件

4 . 新建FileYaml.cc文件

进入SDK-Linux/demo_ros/src/ORB_SLAM2/src目录下,新建FileYaml.cc文件,将下列代码填入文件:

#include "FileYaml.h"

static void write(FileStorage& fs, const std::string&, const Camera& x)

{

x.write(fs);

}

static void read(const FileNode& node, Camera& x, const Camera& default_value = Camera())

{

if (node.empty())

x = default_value;

else

x.read(node);

}

void readConfig(const char *yamlPath,Camera_Other_Parameter &vecCamerasOtherParam)

{

std::vector vecCameras;

cv::FileStorage fin(yamlPath, cv::FileStorage::READ);

if(!fin.isOpened())

{

cerr << endl << "Failed to load readConfig yamlPath " << endl;

return ;

}

cv::FileNode cameras_node = fin["cameras"];

/*

cv::FileNode Rl_node = fin["Rl"];

cv::FileNode Rr_node = fin["Rr"];

cv::FileNode Pl_node = fin["Pl"];

cv::FileNode Pr_node = fin["Pr"];

cv::FileNode Kl_node = fin["Kl"];

cv::FileNode Kr_node = fin["Kr"];

cv::FileNode Dl_node = fin["Dl"];

cv::FileNode Dr_node = fin["Dr"];*/

fin["Rl"] >> vecCamerasOtherParam.R_l;

fin["Rr"] >> vecCamerasOtherParam.R_r;

fin["Pl"] >> vecCamerasOtherParam.P_l;

fin["Pr"] >> vecCamerasOtherParam.P_r;

fin["Kl"] >> vecCamerasOtherParam.K_l;

fin["Kr"] >> vecCamerasOtherParam.K_r;

fin["Dl"] >> vecCamerasOtherParam.D_l;

fin["Dr"] >> vecCamerasOtherParam.D_r;

/*

vecCamerasOtherParam.R_l = Rl_node.mat();

vecCamerasOtherParam.R_r = Rr_node.mat();

vecCamerasOtherParam.P_l = Pl_node.mat();

vecCamerasOtherParam.P_r = Pr_node.mat();

vecCamerasOtherParam.K_l = Kl_node.mat();

vecCamerasOtherParam.K_r = Kr_node.mat();

vecCamerasOtherParam.D_l = Dl_node.mat();

vecCamerasOtherParam.D_r = Dr_node.mat();*/

for (cv::FileNodeIterator it = cameras_node.begin(); it != cameras_node.end(); it++)

{

Camera camera;

(*it) >> camera;

vecCameras.push_back(camera);

}

//obtain col & row

vecCamerasOtherParam.cols = vecCameras[0].imageDimension(0);

vecCamerasOtherParam.rows = vecCameras[0].imageDimension(1);

//obtain R & t

Eigen::Matrix4d T_SC_left;

Eigen::Matrix4d T_SC_right;

T_SC_left = vecCameras[0].T_SC;

T_SC_right = vecCameras[1].T_SC;

SE3 T_SC_l(T_SC_left.topLeftCorner(3,3),T_SC_left.topRightCorner(3,1));

SE3 T_SC_r(T_SC_right.topLeftCorner(3,3),T_SC_right.topRightCorner(3,1));

SE3 Tcl_cr = T_SC_l.inverse()*T_SC_r;

SE3 Tcr_cl = T_SC_r.inverse()*T_SC_l;

Matrix3d R = Tcr_cl.rotation_matrix();

Vector3d t = Tcr_cl.translation();

//Eigen tranfer to array

double * R_ptr= new double[R.size()];

double *t_ptr = new double[t.size()];

Map(R_ptr, R.rows(), R.cols()) = R;

Map(t_ptr, t.rows(), t.cols()) = t;

cout<<"R_matrix"< 注:该文件为读取文件的源文件

5 . 修改CMakeLists.txt文件

进入SDK-Linux/demo_ros/src目录下,修改CMakeLists.txt文件

将ORB中的CMakeLists.txt添加到SDK的CMakeLists.txt下,修改如下:

1)

在add_compile_options(-std=c++11)后面添加

IF(NOT CMAKE_BUILD_TYPE)

SET(CMAKE_BUILD_TYPE Release)

ENDIF()

MESSAGE("Build type: " ${CMAKE_BUILD_TYPE})

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 -march=native ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 -march=native")

# Check C++11 or C++0x support

include(CheckCXXCompilerFlag)

CHECK_CXX_COMPILER_FLAG("-std=c++11" COMPILER_SUPPORTS_CXX11)

CHECK_CXX_COMPILER_FLAG("-std=c++0x" COMPILER_SUPPORTS_CXX0X)

if(COMPILER_SUPPORTS_CXX11)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

add_definitions(-DCOMPILEDWITHC11)

message(STATUS "Using flag -std=c++11.")

elseif(COMPILER_SUPPORTS_CXX0X)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")

add_definitions(-DCOMPILEDWITHC0X)

message(STATUS "Using flag -std=c++0x.")

else()

message(FATAL_ERROR "The compiler ${CMAKE_CXX_COMPILER} has no C++11 support. Please use a different C++ compiler.")

endif()

LIST(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

find_package(OpenCV 3.0 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 2.4.3 QUIET)

if(NOT OpenCV_FOUND)

message(FATAL_ERROR "OpenCV > 2.4.3 not found.")

endif()

endif()

#find_package(Eigen3 3.1.0 REQUIRED)

find_package(Pangolin REQUIRED)

修改后如下:

2)

#Eigen

include_directories("/usr/include/eigen3")

#Sophus

find_package(Sophus REQUIRED)

include_directories( ${Sophus_INCLUDE_DIRS})

include_directories(

${PROJECT_SOURCE_DIR}

${PROJECT_SOURCE_DIR}/ORB_SLAM/include

${PROJECT_SOURCE_DIR}/ORB_SLAM

#${EIGEN3_INCLUDE_DIR}

${Pangolin_INCLUDE_DIRS}

)

set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/lib)

3)

add_library(${PROJECT_NAME} SHARED

ORB_SLAM/src/FileYaml.cc

ORB_SLAM/src/System.cc

ORB_SLAM/src/Tracking.cc

ORB_SLAM/src/LocalMapping.cc

ORB_SLAM/src/LoopClosing.cc

ORB_SLAM/src/ORBextractor.cc

ORB_SLAM/src/ORBmatcher.cc

ORB_SLAM/src/FrameDrawer.cc

ORB_SLAM/src/Converter.cc

ORB_SLAM/src/MapPoint.cc

ORB_SLAM/src/KeyFrame.cc

ORB_SLAM/src/Map.cc

ORB_SLAM/src/MapDrawer.cc

ORB_SLAM/src/Optimizer.cc

ORB_SLAM/src/PnPsolver.cc

ORB_SLAM/src/Frame.cc

ORB_SLAM/src/KeyFrameDatabase.cc

ORB_SLAM/src/Sim3Solver.cc

ORB_SLAM/src/Initializer.cc

ORB_SLAM/src/Viewer.cc

4)

target_link_libraries(${PROJECT_NAME}

${OpenCV_LIBS}

#${EIGEN3_LIBS}

${Pangolin_LIBRARIES}

${Sophus_LIBRARIES}

${PROJECT_SOURCE_DIR}/ORB_SLAM/Thirdparty/DBoW2/lib/libDBoW2.so

${PROJECT_SOURCE_DIR}/ORB_SLAM/Thirdparty/g2o/lib/libg2o.so

)

5)

完成4)操作后,在其后添加:

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/ORB_SLAM/Examples/RGB-D)

add_executable(rgbd_tum

ORB_SLAM/Examples/RGB-D/rgbd_tum.cc)

target_link_libraries(rgbd_tum ${PROJECT_NAME}

${Sophus_LIBRARIES})

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/ORB_SLAM/Examples/Stereo)

add_executable(stereo_kitti

ORB_SLAM/Examples/Stereo/stereo_kitti.cc)

target_link_libraries(stereo_kitti ${PROJECT_NAME}

${Sophus_LIBRARIES})

add_executable(stereo_euroc

ORB_SLAM/Examples/Stereo/stereo_euroc.cc)

add_dependencies(stereo_euroc ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS})

target_link_libraries(stereo_euroc ${PROJECT_NAME}

${Sophus_LIBRARIES}

${catkin_LIBRARIES})

add_executable(module_driver src/camera_driver.cpp)

add_dependencies(module_driver ${${PROJECT_NAME}_EXPORTED_TARGETS} ${catkin_EXPORTED_TARGETS})

target_link_libraries(module_driver

${PROJECT_SOURCE_DIR}/../../lib/1604/libindem.so

${catkin_LIBRARIES}

${PROJECT_SOURCE_DIR}/../../lib/1604/libboost_filesystem.so.1.58.0

${PROJECT_SOURCE_DIR}/../../lib/1604/libboost_system.so.1.58.0

${PROJECT_SOURCE_DIR}/../../lib/1604/libg3logger.so.1.3.0-0

${PROJECT_SOURCE_DIR}/../../lib/1604/libnanomsg.so.5

${PROJECT_SOURCE_DIR}/../../lib/1604/libopencv_core.so.3.4

${PROJECT_SOURCE_DIR}/../../lib/1604/libopencv_imgproc.so.3.4

${PROJECT_SOURCE_DIR}/../../lib/1604/libopencv_videoio.so.3.4

pthread

stdc++fs

dl

)

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/ORB_SLAM/Examples/Monocular)

add_executable(mono_tum

ORB_SLAM/Examples/Monocular/mono_tum.cc)

target_link_libraries(mono_tum

${Sophus_LIBRARIES}

${PROJECT_NAME})

add_executable(mono_kitti

ORB_SLAM/Examples/Monocular/mono_kitti.cc)

target_link_libraries(mono_kitti

${Sophus_LIBRARIES}

${PROJECT_NAME})

add_executable(mono_euroc

ORB_SLAM/Examples/Monocular/mono_euroc.cc)

target_link_libraries(mono_euroc

${Sophus_LIBRARIES}

${PROJECT_NAME})

CMakeLists.txt修改完毕

6. 编译

1)

安装INDEMIND SDK,教程请查看:开讲啦丨INDEMIND双目惯性模组运行ORB-SLAM算法示例

进入SDK-Linux/demo_ros目录下,执行catkin_make命令,结果如下

7. 执行

1)

2)

进入SDK-Linux/lib/1604目录下,执行

sudo -s,sudo chmod 777 ./run.sh

之后执行

./run.sh

3)

进入SDK-Linux/demo_ros/src/ORB_SLAM目录下,执行./Examples/Stereo/stereo_euroc命令

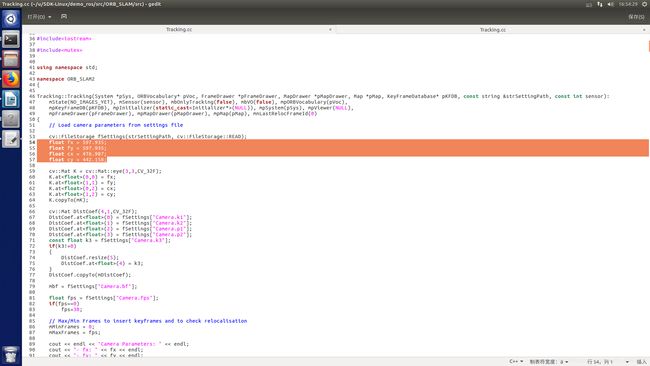

注意:此时,将获得去畸变之后的参数,把下列参数写入到SDK-Linux/demo_ros/src/ORB_SLAM/src/Tracking.cc文件中,如下图

float fx = 597.935;

float fy = 597.935;

float cx = 476.987;

float cy = 442.158;

最后,再次编译,并执行,即可得到实时ORB

Demo连接:腾讯视频