poll source code analysis: based on kernel 3.10.0-693.11.1

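poll and epoll are the building blocks of high-performance servers on Linux, and understanding them in depth pays off both when writing code and when tracking down bugs. Let's look at how poll is implemented.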

As usual, we start from the entry point, beginning with the implementation of the poll system call:

```c
SYSCALL_DEFINE3(poll, struct pollfd __user *, ufds, unsigned int, nfds,
                int, timeout_msecs)
{
    struct timespec end_time, *to = NULL;
    int ret;

    /* If a timeout was given, turn it into an absolute expiry time
       (current monotonic time + timeout). A zero timeout stays 0/0,
       which do_poll() treats as "do not wait". */
    if (timeout_msecs >= 0) {
        to = &end_time;
        poll_select_set_timeout(to, timeout_msecs / MSEC_PER_SEC,
                                NSEC_PER_MSEC * (timeout_msecs % MSEC_PER_SEC));
    }

    ret = do_sys_poll(ufds, nfds, to);  /* does the actual work */

    /* A signal arrived while we were waiting: fill in the current thread's
       restart_block so the system call can be restarted. Pending signals
       are handled on the way back to user space. */
    if (ret == -EINTR) {
        struct restart_block *restart_block;

        restart_block = &current_thread_info()->restart_block;
        restart_block->fn = do_restart_poll;
        restart_block->poll.ufds = ufds;
        restart_block->poll.nfds = nfds;

        if (timeout_msecs >= 0) {
            restart_block->poll.tv_sec = end_time.tv_sec;
            restart_block->poll.tv_nsec = end_time.tv_nsec;
            restart_block->poll.has_timeout = 1;
        } else
            restart_block->poll.has_timeout = 0;

        /* User space never sees this value: if a signal handler runs, it is
           converted to -EINTR; otherwise the call is restarted through
           restart_syscall(), which invokes restart_block->fn. */
        ret = -ERESTART_RESTARTBLOCK;
    }
    return ret;
}
```

Now look at do_sys_poll. Its structure is fairly simple:

```c
int do_sys_poll(struct pollfd __user *ufds, unsigned int nfds,
                struct timespec *end_time)
{
    struct poll_wqueues table;
    int err = -EFAULT, fdcount, len, size;
    /* Allocate small arguments on the stack to save memory and be
       faster - use long to make sure the buffer is aligned properly
       on 64 bit archs to avoid unaligned access */
    /* The first batch of fds lives on the stack, which saves an
       allocation when only a few fds are passed. */
    long stack_pps[POLL_STACK_ALLOC/sizeof(long)];
    struct poll_list *const head = (struct poll_list *)stack_pps;
    struct poll_list *walk = head;
    unsigned long todo = nfds;

    if (nfds > rlimit(RLIMIT_NOFILE))
        return -EINVAL;

    len = min_t(unsigned int, nfds, N_STACK_PPS);
    for (;;) {
        walk->next = NULL;
        walk->len = len;
        if (!len)
            break;

        /* first copy: user space -> kernel */
        if (copy_from_user(walk->entries, ufds + nfds-todo,
                           sizeof(struct pollfd) * walk->len))
            goto out_fds;

        todo -= walk->len;
        if (!todo)
            break;

        len = min(todo, POLLFD_PER_PAGE);
        size = sizeof(struct poll_list) + sizeof(struct pollfd) * len;
        walk = walk->next = kmalloc(size, GFP_KERNEL);  /* room for more fds */
        if (!walk) {
            err = -ENOMEM;
            goto out_fds;
        }
    }

    /* Initialize poll_wqueues; understanding it is key to understanding
       how both poll and epoll work. */
    poll_initwait(&table);
    fdcount = do_poll(nfds, head, &table, end_time);  /* the main function */
    poll_freewait(&table);

    for (walk = head; walk; walk = walk->next) {
        struct pollfd *fds = walk->entries;
        int j;

        for (j = 0; j < walk->len; j++, ufds++)
            /* second copy: kernel -> user space (only revents is written) */
            if (__put_user(fds[j].revents, &ufds->revents))
                goto out_fds;
    }

    err = fdcount;
out_fds:
    walk = head->next;
    while (walk) {
        struct poll_list *pos = walk;
        walk = walk->next;
        kfree(pos);  /* free the chunks allocated above */
    }

    return err;
}
```

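do_sys_poll has to copy every pollfd from user space into the kernel, allocate extra memory when there are many fds, and copy the results (the revents fields) back to user space before returning. This per-call copying is the widely criticized reason for poll's poor performance. The function to focus on here is do_poll, but poll_initwait is also important, so let's first see what it initializes: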

```c
void poll_initwait(struct poll_wqueues *pwq)
{
    init_poll_funcptr(&pwq->pt, __pollwait);  /* set pwq->pt._qproc to __pollwait */
    pwq->polling_task = current;
    pwq->triggered = 0;
    pwq->error = 0;
    pwq->table = NULL;
    pwq->inline_index = 0;
}

static inline void init_poll_funcptr(poll_table *pt, poll_queue_proc qproc)
{
    pt->_qproc = qproc;
    pt->_key   = ~0UL; /* all events enabled */
}

static void __pollwait(struct file *filp, wait_queue_head_t *wait_address,
                       poll_table *p)
{
    struct poll_wqueues *pwq = container_of(p, struct poll_wqueues, pt);
    struct poll_table_entry *entry = poll_get_entry(pwq);  /* get a poll_table_entry */

    if (!entry)
        return;
    entry->filp = get_file(filp);
    entry->wait_address = wait_address;
    entry->key = p->_key;
    /* Set the entry's wake function: it runs when this wait queue is woken. */
    init_waitqueue_func_entry(&entry->wait, pollwake);
    entry->wait.private = pwq;
    add_wait_queue(wait_address, &entry->wait);  /* add the entry to the given wait queue */
}
```

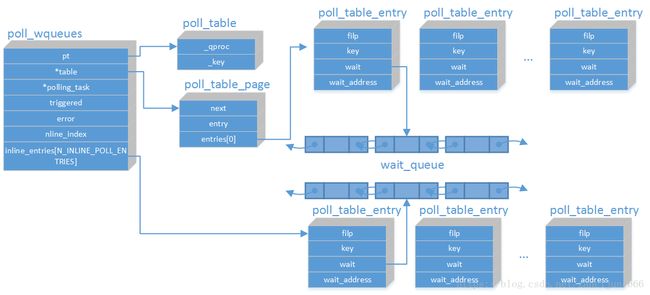

主要是初始化一些字段和设置一个函数指针__pollwait,__pollwait主要是获取一个poll_table_entry将poll_wqueues挂入一个等待队列,这个等待队列是参数传进来的,唤醒函数是pollwake。poll_wqueues中有很多的poll_table_entry,可见poll_wqueues是被挂入很多等待队列的。其数据结构如下图所示

Next, let's see what happens when the wait queue is woken up, by analyzing pollwake:

```c
static int pollwake(wait_queue_t *wait, unsigned mode, int sync, void *key)
{
    struct poll_table_entry *entry;

    entry = container_of(wait, struct poll_table_entry, wait);
    /* The wake-up carries a key that does not match the events this entry
       waits for: return without waking anyone. */
    if (key && !((unsigned long)key & entry->key))
        return 0;
    return __pollwake(wait, mode, sync, key);
}

static int __pollwake(wait_queue_t *wait, unsigned mode, int sync, void *key)
{
    struct poll_wqueues *pwq = wait->private;
    /* A dummy wait-queue entry for the task recorded in pwq. Its wake
       function is default_wake_function, which wakes that task. */
    DECLARE_WAITQUEUE(dummy_wait, pwq->polling_task);

    /*
     * Although this function is called under waitqueue lock, LOCK
     * doesn't imply write barrier and the users expect write
     * barrier semantics on wakeup functions.  The following
     * smp_wmb() is equivalent to smp_wmb() in try_to_wake_up()
     * and is paired with set_mb() in poll_schedule_timeout.
     */
    smp_wmb();            /* write barrier */
    pwq->triggered = 1;   /* an event this poll_wqueues waits for has occurred */

    /*
     * Perform the default wake up operation using a dummy
     * waitqueue.
     *
     * TODO: This is hacky but there currently is no interface to
     * pass in @sync.  @sync is scheduled to be removed and once
     * that happens, wake_up_process() can be used directly.
     */
    return default_wake_function(&dummy_wait, mode, sync, key);
}
```

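The poll_wqueues is hooked onto a wait queue, and when that queue is woken, pollwake runs. Once pollwake has confirmed that an awaited event occurred, it builds a temporary (dummy) wait-queue entry whose callback is default_wake_function, and that function performs the real wake-up, i.e., it wakes the process stored in poll_wqueues. Keep the two kinds of wake-up apart: waking a wait queue means running the callbacks of its entries, while waking a process means making a sleeping task runnable again.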

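We have seen that poll_initwait initializes pwq->triggered and __pollwait sets entry->key, while the wake-up function checks entry->key and sets pwq->triggered. What are these for? And which queue is poll_wqueues actually waiting on? Both questions are answered by do_poll, and fortunately its code is not very complicated.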

```c
static int do_poll(unsigned int nfds,  struct poll_list *list,
                   struct poll_wqueues *wait, struct timespec *end_time)
{
    poll_table* pt = &wait->pt;
    ktime_t expire, *to = NULL;
    int timed_out = 0, count = 0;
    unsigned long slack = 0;
    /* is busy polling enabled system-wide? */
    unsigned int busy_flag = net_busy_loop_on() ? POLL_BUSY_LOOP : 0;
    unsigned long busy_end = 0;

    /* Optimise the no-wait case */
    /* Zero timeout (return immediately): do not register on any fd's
       wait queue, so clear pt->_qproc. */
    if (end_time && !end_time->tv_sec && !end_time->tv_nsec) {
        pt->_qproc = NULL;
        timed_out = 1;
    }

    /* Sleep time cannot be exact: pick a slack so the task is woken
       somewhere between end_time and end_time + slack. */
    if (end_time && !timed_out)
        slack = select_estimate_accuracy(end_time);

    for (;;) {
        struct poll_list *walk;
        bool can_busy_loop = false;

        for (walk = list; walk != NULL; walk = walk->next) {  /* each chunk */
            struct pollfd * pfd, * pfd_end;

            pfd = walk->entries;
            pfd_end = pfd + walk->len;
            for (; pfd != pfd_end; pfd++) {  /* each pollfd */
                /*
                 * Fish for events. If we found one, record it
                 * and kill poll_table->_qproc, so we don't
                 * needlessly register any other waiters after
                 * this. They'll get immediately deregistered
                 * when we break out and return.
                 */
                /* do_pollfd() calls the poll method of the fd's file */
                if (do_pollfd(pfd, pt, &can_busy_loop,
                              busy_flag)) {
                    count++;            /* this fd already has an event */
                    pt->_qproc = NULL;  /* poll will return, so the remaining
                                           fds need not register waiters */
                    /* found something, stop busy polling */
                    busy_flag = 0;      /* and no busy polling is needed */
                    can_busy_loop = false;
                }
            }
        }
        /*
         * All waiters have already been registered, so don't provide
         * a poll_table->_qproc to them on the next loop iteration.
         */
        pt->_qproc = NULL;  /* registration happens only once, on the first pass */
        if (!count) {
            count = wait->error;  /* error while registering, e.g. -ENOMEM */
            /* Nothing ready: if a signal is pending, return -EINTR so that
               sys_poll can set up a restart. */
            if (signal_pending(current))
                count = -EINTR;
        }
        if (count || timed_out)
            break;

        /* only if found POLL_BUSY_LOOP sockets && not out of time */
        /* Keep busy polling? If so, go straight back to the top of the
           loop without scheduling. */
        if (can_busy_loop && !need_resched()) {
            if (!busy_end) {
                busy_end = busy_loop_end_time();
                continue;
            }
            if (!busy_loop_timeout(busy_end))
                continue;
        }
        busy_flag = 0;  /* once we stop busy polling, never do it again in this call */

        /*
         * If this is the first loop and we have a timeout
         * given, then we convert to ktime_t and set the to
         * pointer to the expiry value.
         */
        if (end_time && !to) {
            expire = timespec_to_ktime(*end_time);
            to = &expire;
        }

        /* Sleeps interruptibly only if pwq->triggered is 0, until an event
           or a signal wakes the task or the timeout expires; returns 0 on
           timeout. */
        if (!poll_schedule_timeout(wait, TASK_INTERRUPTIBLE, to, slack))
            timed_out = 1;  /* one more round of do_pollfd runs before exiting */
    }
    return count;
}
```

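do_poll first loops over all fds, calling do_pollfd on each to check whether an event has arrived. While do_pollfd runs, the file's poll method also calls the function pt->_qproc points to (through poll_wait) as long as pt->_qproc is not NULL. If no event has arrived, do_poll decides whether to busy-poll; if it does not, it calls poll_schedule_timeout, which decides whether to go to sleep.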

Let's first analyze poll_schedule_timeout:

```c
int poll_schedule_timeout(struct poll_wqueues *pwq, int state,
                          ktime_t *expires, unsigned long slack)
{
    int rc = -EINTR;

    set_current_state(state);
    /* Sleep only if pwq->triggered has not been set, i.e. no wake-up has
       arrived from any of the polled fds. */
    if (!pwq->triggered)
        rc = freezable_schedule_hrtimeout_range(expires, slack,
                                                HRTIMER_MODE_ABS);
    __set_current_state(TASK_RUNNING);

    /*
     * Prepare for the next iteration.
     *
     * The following set_mb() serves two purposes.  First, it's
     * the counterpart rmb of the wmb in pollwake() such that data
     * written before wake up is always visible after wake up.
     * Second, the full barrier guarantees that triggered clearing
     * doesn't pass event check of the next iteration.  Note that
     * this problem doesn't exist for the first iteration as
     * add_wait_queue() has full barrier semantics.
     */
    /* We get here either because an event woke us or for another reason
       (a signal, the timeout). Why reset pwq->triggered to 0 here? */
    set_mb(pwq->triggered, 0);

    return rc;
}
```

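poll_schedule_timeout resets pwq->triggered to 0, which at first sight looks like it throws away the event wake-up. It does not: after returning from here, the process always runs one more do_pollfd pass over the fds, so the event is not lost. The flag also has to be cleared after a wake-up so that a later call to freezable_schedule_hrtimeout_range can actually sleep. Clearing it here, rather than right before freezable_schedule_hrtimeout_range, matters: if an event arrives during the do_pollfd loop on an fd that has already been scanned, pollwake sets triggered, and poll_schedule_timeout then skips the sleep. Had the flag been cleared just before sleeping, that wake-up would be erased and the process would only notice the event after sleeping until the next wake-up or the timeout.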

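do_poll keeps calling do_pollfd on the fds, sleeping or busy-polling in between, until some fd has an event (or the timeout expires, or a signal arrives). Now let's analyze do_pollfd: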

```c
static inline unsigned int do_pollfd(struct pollfd *pollfd, poll_table *pwait,
                                     bool *can_busy_poll,
                                     unsigned int busy_flag)
{
    unsigned int mask;
    int fd;

    mask = 0;
    fd = pollfd->fd;
    if (fd >= 0) {
        struct fd f = fdget(fd);
        mask = POLLNVAL;
        if (f.file) {
            mask = DEFAULT_POLLMASK;
            /* only call ->poll if the file implements it */
            if (f.file->f_op && f.file->f_op->poll) {
                pwait->_key = pollfd->events|POLLERR|POLLHUP;
                pwait->_key |= busy_flag;  /* lets the file report busy-poll support */
                mask = f.file->f_op->poll(f.file, pwait);  /* the file's poll method */
                if (mask & busy_flag)
                    *can_busy_poll = true;  /* this fd supports busy polling */
            }
            /* Mask out unneeded events. */
            /* ->poll may report events the caller did not ask for; keep only
               the requested ones plus POLLERR/POLLHUP, which are always reported. */
            mask &= pollfd->events | POLLERR | POLLHUP;
            fdput(f);
        }
    }
    pollfd->revents = mask;  /* report the events that occurred */

    return mask;
}
```

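The main job of this function is to call the poll method implemented by the fd's file (filesystem, socket, or device), passing it the poll_table. That poll method registers the poll_table's wait callback on the relevant wait queue and returns the events currently pending on the fd.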

Let's use TCP to look at the last part of the poll implementation. TCP's poll method is tcp_poll; after a few layers of wrappers, the call ends up here:

```c
unsigned int tcp_poll(struct file *file, struct socket *sock, poll_table *wait)
{
    unsigned int mask;
    struct sock *sk = sock->sk;
    const struct tcp_sock *tp = tcp_sk(sk);

    sock_rps_record_flow(sk);

    /* Through this call the polling process ends up hooked onto the
       sk_sleep(sk) wait queue. */
    sock_poll_wait(file, sk_sleep(sk), wait);
    if (sk->sk_state == TCP_LISTEN)
        return inet_csk_listen_poll(sk);

    /* Socket is not locked. We are protected from async events
     * by poll logic and correct handling of state changes
     * made by other threads is impossible in any case.
     */

    mask = 0;

    /*
     * POLLHUP is certainly not done right. But poll() doesn't
     * have a notion of HUP in just one direction, and for a
     * socket the read side is more interesting.
     *
     * (Author's note: the point here is that POLLHUP is incompatible
     * with POLLOUT/POLLWR, because after TCP receives a FIN from the
     * peer, this end can still write.)
     * Some poll() documentation says that POLLHUP is incompatible
     * with the POLLOUT/POLLWR flags, so somebody should check this
     * all. But careful, it tends to be safer to return too many
     * bits than too few, and you can easily break real applications
     * if you don't tell them that something has hung up!
     *
     * Check-me.
     *
     * Check number 1. POLLHUP is _UNMASKABLE_ event (see UNIX98 and
     * our fs/select.c). It means that after we received EOF,
     * poll always returns immediately, making impossible poll() on write()
     * in state CLOSE_WAIT. One solution is evident --- to set POLLHUP
     * if and only if shutdown has been made in both directions.
     * Actually, it is interesting to look how Solaris and DUX
     * solve this dilemma. I would prefer, if POLLHUP were maskable,
     * then we could set it on SND_SHUTDOWN. BTW examples given
     * in Stevens' books assume exactly this behaviour, it explains
     * why POLLHUP is incompatible with POLLOUT.    --ANK
     *
     * NOTE. Check for TCP_CLOSE is added. The goal is to prevent
     * blocking on fresh not-connected or disconnected socket. --ANK
     */
    ... /* long sequence of checks deciding which events to return, omitted */

    return mask;
}
```

So poll hooks itself onto the sk_sleep(sk) wait queue. For when that queue is woken, see the article "Analysis of how the TCP stack handles various events".

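That completes the analysis of poll. The process can be summarized as follows:

- Copy the fds into the kernel.

- For each fd, register this process on its wait queue, so that the process is woken when an event occurs.

- Loop over the fds checking for events; if there are none, enter interruptible sleep.

- Once events have arrived (or the timeout expires), copy the results for all fds back to user space.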

epoll works on a similar principle but passes fds around far more elegantly; I will analyze it in a later article.

References:

- CentOS kernel 3.10.0-693.11.1

- Analysis of how the TCP stack handles various events