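Theano Study Guide: Deep Belief Networks (DBN) (Translation)

Feel free to fork my GitHub repository: https://github.com/zhaoyu611/DeepLearningTutorialForChinese

I have been learning Git recently, so this is a good chance to put it into practice. After reading about the principles of deep learning I had a general understanding, but for the Theano code, typing it out myself leaves a deeper impression. So I rewrote the code from deeplearning.net; the comments are translations of the original text plus my own understanding. Anyone interested is welcome to help with this work. If you have questions, feel free to contact me. Email: [email protected] QQ: 3062984605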

Deep Belief Networks

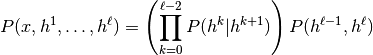

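[Hinton06] showed that RBMs can be stacked and trained in a greedy manner to form a Deep Belief Network (DBN). A DBN is a graphical model that learns to extract a deep hierarchical representation of the training data. It models the joint distribution between the observed vector x and the l hidden layers h^k as follows: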

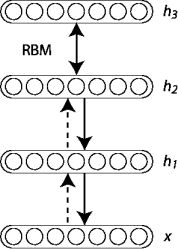

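where x = h^0 and P(h^{k-1} | h^k) is a conditional distribution: the probability of the visible units given the hidden units of the RBM at level k. This is illustrated in the accompanying figure.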

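When DBNs are trained with greedy layer-wise unsupervised training, the building block of each layer is an RBM. The procedure is as follows:

- Train the first layer as an RBM that models the raw input x = h^{(0)} as its visible layer.

- Use the first layer to obtain a representation of the input that serves as data for the second layer. This representation is either the mean activations p(h^{(1)} = 1 | h^{(0)}) or samples drawn from p(h^{(1)} | h^{(0)}).

- Train the second layer as an RBM, taking the transformed data (samples or mean activations) as training examples for the visible layer of that RBM.

- Repeat the previous two steps for the desired number of layers, each time propagating either samples or mean values upward.

- Fine-tune all the parameters of the deep network with respect to a proxy for the DBN log-likelihood, or with respect to a supervised training criterion (after adding extra learning machinery that converts the learned representation into supervised predictions, e.g. a linear classifier).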
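The unsupervised steps above can be sketched in plain NumPy. This is only an illustration under stated assumptions, not the tutorial's Theano code: the helper names `train_rbm_cd1` and `greedy_pretrain`, the toy data, and the use of CD-1 with mean-field reconstructions are all choices made here for brevity.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def train_rbm_cd1(data, n_hidden, rng, epochs=5, lr=0.1, batch_size=10):
    """Train a binary RBM with one step of contrastive divergence (CD-1)."""
    n_visible = data.shape[1]
    W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
    hbias = np.zeros(n_hidden)
    vbias = np.zeros(n_visible)
    for _ in range(epochs):
        for start in range(0, data.shape[0], batch_size):
            v0 = data[start:start + batch_size]
            # positive phase: hidden probabilities and a sample given the data
            ph0 = sigmoid(v0 @ W + hbias)
            h0 = (rng.random(ph0.shape) < ph0).astype(float)
            # negative phase: one Gibbs step down and up again
            # (probabilities are used instead of samples, a common shortcut)
            pv1 = sigmoid(h0 @ W.T + vbias)
            ph1 = sigmoid(pv1 @ W + hbias)
            # CD-1 approximation of the log-likelihood gradient
            n = v0.shape[0]
            W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
            hbias += lr * (ph0 - ph1).mean(axis=0)
            vbias += lr * (v0 - pv1).mean(axis=0)
    return W, hbias


def greedy_pretrain(x, hidden_sizes, rng):
    """Train one RBM per layer, feeding mean activations upward."""
    params, layer_input = [], x
    for n_hidden in hidden_sizes:
        W, hbias = train_rbm_cd1(layer_input, n_hidden, rng)
        params.append((W, hbias))
        # mean activations p(h = 1 | input) become the next layer's data
        layer_input = sigmoid(layer_input @ W + hbias)
    return params, layer_input


rng = np.random.default_rng(0)
x = (rng.random((40, 12)) < 0.5).astype(float)  # toy binary data, not MNIST
params, top = greedy_pretrain(x, [8, 6, 4], rng)
print([W.shape for W, _ in params], top.shape)
```

The returned weights and hidden biases are what a supervised fine-tuning stage would then use to initialize an MLP; the visible biases are discarded because they play no role in the feed-forward pass.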

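In this tutorial, we focus on fine-tuning via supervised gradient descent. Specifically, a logistic regression classifier classifies the input x based on the output of the last hidden layer h^{(l)} of the DBN. Fine-tuning is then performed by supervised gradient descent on the negative log-likelihood cost function. The supervised gradient is non-zero only for the weights and hidden-layer biases of each layer (it is zero for the visible biases of each RBM), so this procedure is equivalent to initializing the parameters of a deep MLP with the weights and hidden-layer biases obtained by unsupervised training.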

Greedy Layer-Wise Pre-Training

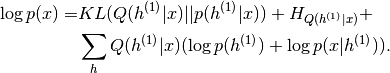

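Why does such an algorithm work? Take as an example a 2-layer DBN with hidden layers h^{(1)} and h^{(2)} (with weights W^{(1)} and W^{(2)} respectively). [Hinton06] established (see [Bengio09] for a derivation) that log p(x) can be written as:

$$\log p(x) = KL\big(Q(h^{(1)}|x)\,\|\,p(h^{(1)}|x)\big) + H_{Q(h^{(1)}|x)} + \sum_h Q(h^{(1)}|x)\big(\log p(h^{(1)}) + \log p(x|h^{(1)})\big) \qquad (2)$$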

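KL(Q(h^{(1)}|x) || p(h^{(1)}|x)) is the KL divergence between the posterior Q(h^{(1)}|x) of the first RBM if it were standalone, and the probability p(h^{(1)}|x) of the same layer as defined by the entire DBN (that is, taking into account the prior p(h^{(1)}, h^{(2)}) defined by the top-level RBM). H_{Q(h^{(1)}|x)} is the entropy of the distribution Q(h^{(1)}|x).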
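The decomposition log p(x) = KL(Q || p(h|x)) + H_Q + sum_h Q(h|x) (log p(h) + log p(x|h)) holds for any distribution Q, which can be checked numerically on a tiny discrete model. The probabilities below are made-up values chosen only for illustration; h stands in for h^{(1)}.

```python
import math

# Toy model (assumed values): a hidden variable h with 3 states, one observed x
p_h = [0.5, 0.3, 0.2]            # prior p(h)
p_x_given_h = [0.9, 0.4, 0.1]    # likelihood p(x | h) of the observed x
q = [0.6, 0.3, 0.1]              # an arbitrary approximate posterior Q(h | x)

p_x = sum(ph * px for ph, px in zip(p_h, p_x_given_h))
posterior = [ph * px / p_x for ph, px in zip(p_h, p_x_given_h)]  # p(h | x)

kl = sum(qi * math.log(qi / pi) for qi, pi in zip(q, posterior))
entropy = -sum(qi * math.log(qi) for qi in q)
expected_log_joint = sum(
    qi * (math.log(ph) + math.log(px))
    for qi, ph, px in zip(q, p_h, p_x_given_h)
)

lhs = math.log(p_x)
rhs = kl + entropy + expected_log_joint
bound = entropy + expected_log_joint  # lower bound on log p(x), since KL >= 0
print(lhs, rhs, bound)
```

Because the KL term is non-negative, the last two terms form a lower bound on log p(x); this is the quantity that training the upper layer can improve.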

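It can be shown that if we initialize both hidden layers such that W^{(2)} is the transpose of W^{(1)}, then Q(h^{(1)}|x) = p(h^{(1)}|x) and the KL divergence term is zero. If we learn the first-level RBM and then keep its parameters W^{(1)} fixed, optimizing Eq. (2) with respect to W^{(2)} can therefore only increase the likelihood p(x).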

Also notice that if we isolate the term of Eq. (2) that depends on W^{(2)}, we get:

$$\sum_h Q(h^{(1)}|x)\,\log p(h^{(1)})$$

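Optimizing this with respect to W^{(2)} amounts to training a second-stage RBM, using the output of Q(h^{(1)}|x) as the training distribution, where x is sampled from the training distribution of the first RBM.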

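Implementation

To implement DBNs in Theano, we use the class defined in the Restricted Boltzmann Machines (RBM) tutorial. The DBN code is very similar to the SdA code, because both involve unsupervised layer-wise pre-training followed by supervised fine-tuning as a deep MLP. The main difference is that the RBM class replaces the dA class.

We start by defining the DBN class, which stores the layers of the MLP along with their associated RBMs. Since we take the viewpoint of using the RBMs to initialize an MLP, the code keeps the two as separate as possible: the RBMs are used to initialize the network, and the MLP is used for classification.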

    import timeit

    import numpy
    import theano
    import theano.tensor as T
    from theano.sandbox.rng_mrg import MRG_RandomStreams

    # HiddenLayer, RBM and LogisticRegression come from the earlier tutorials'
    # modules (mlp.py, rbm.py, logistic_sgd.py) in the same code/ directory
    from logistic_sgd import LogisticRegression
    from mlp import HiddenLayer
    from rbm import RBM


    class DBN(object):
        """Deep Belief Network

        A deep belief network is obtained by stacking several RBMs on top of each
        other. The hidden layer of the RBM at layer `i` becomes the input of the
        RBM at layer `i+1`. The first layer RBM gets as input the input of the
        network, and the hidden layer of the last RBM represents the output. When
        used for classification, the DBN is treated as a MLP, by adding a logistic
        regression layer on top.
        """

        def __init__(self, numpy_rng, theano_rng=None, n_ins=784,
                     hidden_layers_sizes=[500, 500], n_outs=10):
            """This class is made to support a variable number of layers.

            :type numpy_rng: numpy.random.RandomState
            :param numpy_rng: numpy random number generator used to draw initial
                        weights

            :type theano_rng: theano.tensor.shared_randomstreams.RandomStreams
            :param theano_rng: Theano random generator; if None is given one is
                               generated based on a seed drawn from `numpy_rng`

            :type n_ins: int
            :param n_ins: dimension of the input to the DBN

            :type hidden_layers_sizes: list of ints
            :param hidden_layers_sizes: intermediate layers size, must contain
                                   at least one value

            :type n_outs: int
            :param n_outs: dimension of the output of the network
            """

            self.sigmoid_layers = []
            self.rbm_layers = []
            self.params = []
            self.n_layers = len(hidden_layers_sizes)

            assert self.n_layers > 0

            if not theano_rng:
                theano_rng = MRG_RandomStreams(numpy_rng.randint(2 ** 30))

            # allocate symbolic variables for the data
            # the data is presented as rasterized images
            self.x = T.matrix('x')
            # the labels are presented as 1D vector of [int] labels
            self.y = T.ivector('y')

self.sigmoid_layers stores the feed-forward graphs that together form the MLP, while self.rbm_layers stores the RBMs used to pre-train each layer of the MLP.

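Next, we construct n_layers sigmoid layers (using the HiddenLayer class introduced in the Multilayer Perceptron tutorial, with the only modification that the tanh non-linearity is replaced by the logistic function s(x) = 1 / (1 + e^{-x})) and n_layers RBMs, where n_layers is the depth of the model. The sigmoid layers are linked to form an MLP, and each RBM is constructed so that it shares its weight matrix and hidden bias with the corresponding sigmoid layer.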

            for i in range(self.n_layers):
                # construct the sigmoidal layer

                # the size of the input is either the number of hidden
                # units of the layer below or the input size if we are on
                # the first layer
                if i == 0:
                    input_size = n_ins
                else:
                    input_size = hidden_layers_sizes[i - 1]

                # the input to this layer is either the activation of the
                # hidden layer below or the input of the DBN if you are on
                # the first layer
                if i == 0:
                    layer_input = self.x
                else:
                    layer_input = self.sigmoid_layers[-1].output

                sigmoid_layer = HiddenLayer(rng=numpy_rng,
                                            input=layer_input,
                                            n_in=input_size,
                                            n_out=hidden_layers_sizes[i],
                                            activation=T.nnet.sigmoid)

                # add the layer to our list of layers
                self.sigmoid_layers.append(sigmoid_layer)

                # it's arguably a philosophical question... but we are
                # going to only declare that the parameters of the
                # sigmoid_layers are parameters of the DBN. The visible
                # biases in the RBM are parameters of those RBMs, but not
                # of the DBN.
                self.params.extend(sigmoid_layer.params)

                # Construct an RBM that shares weights with this layer
                rbm_layer = RBM(numpy_rng=numpy_rng,
                                theano_rng=theano_rng,
                                input=layer_input,
                                n_visible=input_size,
                                n_hidden=hidden_layers_sizes[i],
                                W=sigmoid_layer.W,
                                hbias=sigmoid_layer.b)
                self.rbm_layers.append(rbm_layer)

All that is left is to stack a final logistic regression layer on top to form an MLP. We use the LogisticRegression class introduced in Classifying MNIST digits using Logistic Regression.

            self.logLayer = LogisticRegression(
                input=self.sigmoid_layers[-1].output,
                n_in=hidden_layers_sizes[-1],
                n_out=n_outs)
            self.params.extend(self.logLayer.params)

            # compute the cost for second phase of training, defined as the
            # negative log likelihood of the logistic regression (output) layer
            self.finetune_cost = self.logLayer.negative_log_likelihood(self.y)

            # symbolic variable that points to the number of errors made on the
            # minibatch given by self.x and self.y
            self.errors = self.logLayer.errors(self.y)

The class also provides a method that generates a training function for each RBM. The functions are returned as a list, where element i implements one training step for the RBM at layer i.

        def pretraining_functions(self, train_set_x, batch_size, k):
            '''Generates a list of functions, for performing one step of
            gradient descent at a given layer. The function will require
            as input the minibatch index, and to train an RBM you just
            need to iterate, calling the corresponding function on all
            minibatch indexes.

            :type train_set_x: theano.tensor.TensorType
            :param train_set_x: Shared var. that contains all datapoints used
                                for training the RBM
            :type batch_size: int
            :param batch_size: size of a [mini]batch
            :param k: number of Gibbs steps to do in CD-k / PCD-k
            '''

            # index to a [mini]batch
            index = T.lscalar('index')

To be able to change the learning rate during training, we associate it with a Theano variable that has a default value.

            learning_rate = T.scalar('lr')  # learning rate to use

            # beginning of a batch, given `index`
            batch_begin = index * batch_size
            # ending of a batch given `index`
            batch_end = batch_begin + batch_size

            pretrain_fns = []
            for rbm in self.rbm_layers:

                # get the cost and the updates list
                # using CD-k here (persistent=None) for training each RBM.
                # TODO: change cost function to reconstruction error
                cost, updates = rbm.get_cost_updates(learning_rate,
                                                     persistent=None, k=k)

                # compile the theano function
                fn = theano.function(
                    inputs=[index, theano.In(learning_rate, value=0.1)],
                    outputs=cost,
                    updates=updates,
                    givens={
                        self.x: train_set_x[batch_begin:batch_end]
                    }
                )
                # append `fn` to the list of functions
                pretrain_fns.append(fn)

            return pretrain_fns

Each function pretrain_fns[i] takes index as an argument and, optionally, the learning rate lr. Note that the argument names are the names given to the Theano variables when they are constructed (e.g. lr), not the names of the Python variables (e.g. learning_rate). Keep this in mind when working with Theano. The number of Gibbs steps k (for CD or PCD) is also configurable; in the code above it is passed to pretraining_functions and fixed when the functions are compiled.

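In the same fashion, the DBN class includes a method that builds the functions required for fine-tuning (train_model, validate_model and test_model, returned in the code below as train_fn, valid_score and test_score).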

        def build_finetune_functions(self, datasets, batch_size, learning_rate):
            '''Generates a function `train` that implements one step of
            finetuning, a function `validate` that computes the error on a
            batch from the validation set, and a function `test` that
            computes the error on a batch from the testing set

            :type datasets: list of pairs of theano.tensor.TensorType
            :param datasets: It is a list that contain all the datasets;
                            it has to contain three pairs, `train`,
                            `valid`, `test` in this order, where each pair
                            is formed of two Theano variables, one for the
                            datapoints, the other for the labels
            :type batch_size: int
            :param batch_size: size of a minibatch
            :type learning_rate: float
            :param learning_rate: learning rate used during finetune stage
            '''

            (train_set_x, train_set_y) = datasets[0]
            (valid_set_x, valid_set_y) = datasets[1]
            (test_set_x, test_set_y) = datasets[2]

            # compute number of minibatches for validation and testing
            n_valid_batches = valid_set_x.get_value(borrow=True).shape[0]
            n_valid_batches //= batch_size
            n_test_batches = test_set_x.get_value(borrow=True).shape[0]
            n_test_batches //= batch_size

            index = T.lscalar('index')  # index to a [mini]batch

            # compute the gradients with respect to the model parameters
            gparams = T.grad(self.finetune_cost, self.params)

            # compute list of fine-tuning updates
            updates = []
            for param, gparam in zip(self.params, gparams):
                updates.append((param, param - gparam * learning_rate))

            train_fn = theano.function(
                inputs=[index],
                outputs=self.finetune_cost,
                updates=updates,
                givens={
                    self.x: train_set_x[
                        index * batch_size: (index + 1) * batch_size
                    ],
                    self.y: train_set_y[
                        index * batch_size: (index + 1) * batch_size
                    ]
                }
            )

            test_score_i = theano.function(
                [index],
                self.errors,
                givens={
                    self.x: test_set_x[
                        index * batch_size: (index + 1) * batch_size
                    ],
                    self.y: test_set_y[
                        index * batch_size: (index + 1) * batch_size
                    ]
                }
            )

            valid_score_i = theano.function(
                [index],
                self.errors,
                givens={
                    self.x: valid_set_x[
                        index * batch_size: (index + 1) * batch_size
                    ],
                    self.y: valid_set_y[
                        index * batch_size: (index + 1) * batch_size
                    ]
                }
            )

            # Create a function that scans the entire validation set
            def valid_score():
                return [valid_score_i(i) for i in range(n_valid_batches)]

            # Create a function that scans the entire test set
            def test_score():
                return [test_score_i(i) for i in range(n_test_batches)]

            return train_fn, valid_score, test_score

Putting It All Together

The following lines of code construct the deep belief network:

    numpy_rng = numpy.random.RandomState(123)
    print('... building the model')
    # construct the Deep Belief Network
    dbn = DBN(numpy_rng=numpy_rng, n_ins=28 * 28,
              hidden_layers_sizes=[1000, 1000, 1000],
              n_outs=10)

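Training this network has two stages: (1) layer-wise pre-training and (2) fine-tuning.

In the pre-training stage, we loop over all the layers of the network. For each layer, we use the compiled Theano function that determines the input to that layer's RBM and performs one step of CD-k within it. This function is applied to the training set for a fixed number of epochs, given by pretraining_epochs.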

    #########################
    # PRETRAINING THE MODEL #
    #########################
    # train_set_x, batch_size, k, pretraining_epochs, n_train_batches and
    # pretrain_lr are assumed to be defined earlier in the script
    print('... getting the pretraining functions')
    pretraining_fns = dbn.pretraining_functions(train_set_x=train_set_x,
                                                batch_size=batch_size,
                                                k=k)

    print('... pre-training the model')
    start_time = timeit.default_timer()
    # Pre-train layer-wise
    for i in range(dbn.n_layers):
        # go through pretraining epochs
        for epoch in range(pretraining_epochs):
            # go through the training set
            c = []
            for batch_index in range(n_train_batches):
                c.append(pretraining_fns[i](index=batch_index,
                                            lr=pretrain_lr))
            print('Pre-training layer %i, epoch %d, cost ' % (i, epoch), end=' ')
            print(numpy.mean(c))

    end_time = timeit.default_timer()

The fine-tuning loop is very similar to the one in the Multilayer Perceptron tutorial; the only difference is that it uses the functions returned by build_finetune_functions.
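Since the fine-tuning loop itself is not reproduced here, the following is a simplified sketch of a fine-tuning loop with early stopping. It is an assumption-laden stand-in, not the tutorial's exact stopping rule: `finetune_with_early_stopping`, `train_epoch` and `validate` are hypothetical names, and the validation errors are made-up numbers.

```python
def finetune_with_early_stopping(train_epoch, validate, max_epochs, patience):
    """Run supervised epochs until validation error stops improving.

    train_epoch() runs one pass over the training minibatches and
    validate() returns the mean validation error. Both are hypothetical
    callables standing in for the functions built by build_finetune_functions.
    """
    best_error, best_epoch = float('inf'), 0
    for epoch in range(1, max_epochs + 1):
        train_epoch()
        error = validate()
        if error < best_error:
            best_error, best_epoch = error, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs
    return best_error, best_epoch, epoch


# Stand-in validation curve (made-up numbers, not results from the tutorial)
errors = iter([0.10, 0.06, 0.05, 0.07, 0.06, 0.08, 0.04])
best_error, best_epoch, last_epoch = finetune_with_early_stopping(
    train_epoch=lambda: None,
    validate=lambda: next(errors),
    max_epochs=7,
    patience=3,
)
print(best_error, best_epoch, last_epoch)
```

With the real model, `train_epoch` would call train_fn on every minibatch index and `validate` would average the list returned by valid_score.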

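Running the Code

Run the code with the following command:

    python code/DBN.py

With the default parameters, the code runs 100 pre-training epochs with a minibatch size of 10, which corresponds to 500,000 unsupervised parameter updates. The unsupervised learning rate is 0.01 and the supervised learning rate is 0.1. The DBN has three hidden layers with 1000 units each. With early stopping, training ended after 46 supervised epochs, with a minimum validation error of 1.27% and a corresponding test error of 1.34%.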

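Experimental setup: on an Intel(R) Xeon(R) CPU X5560 at 2.80 GHz, using a multi-threaded MKL library (running on 4 cores), pre-training took 615 minutes, an average of 2.05 minutes per (layer × epoch). Fine-tuning took 101 minutes, or about 2.20 minutes per epoch.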
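The update count and per-epoch timings quoted above can be reproduced with a few lines of arithmetic. The training-set size of 50,000 examples is an assumption (the usual MNIST training split) and is not stated in this text; under it, the 500,000 updates are per RBM layer.

```python
# Assumption: 50,000 training examples (the usual MNIST training split);
# all other figures are the ones quoted in the text.
n_train_examples = 50000
batch_size = 10
pretraining_epochs = 100
n_layers = 3

batches_per_epoch = n_train_examples // batch_size
updates_per_layer = batches_per_epoch * pretraining_epochs

pretrain_minutes = 615
finetune_minutes = 101
finetune_epochs = 46
minutes_per_layer_epoch = pretrain_minutes / (n_layers * pretraining_epochs)
minutes_per_finetune_epoch = finetune_minutes / finetune_epochs

print(batches_per_epoch, updates_per_layer)
print(round(minutes_per_layer_epoch, 2), round(minutes_per_finetune_epoch, 2))
```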

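Hyper-parameters were selected by optimizing the validation error. We tested unsupervised learning rates in {10^{-1}, ..., 10^{-5}} and supervised learning rates in {10^{-1}, ..., 10^{-4}}. No technique other than early stopping was used, and the number of pre-training updates was not optimized.

Tips and Tricks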
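A search over these two learning-rate sets might look like the sketch below. Whether the two rates were searched jointly is not stated, so the exhaustive grid is an assumption, and `select_best` and the scoring function are hypothetical placeholders for a full train-and-validate run.

```python
from itertools import product

# Candidate learning rates: powers of ten, as listed in the text
pretrain_lrs = [10.0 ** -e for e in range(1, 6)]   # 1e-1 ... 1e-5
finetune_lrs = [10.0 ** -e for e in range(1, 5)]   # 1e-1 ... 1e-4


def select_best(validation_error):
    """Return the (pretrain_lr, finetune_lr) pair with the lowest error.

    validation_error is a hypothetical callable that would train a DBN with
    the given learning rates and return its best validation error.
    """
    return min(product(pretrain_lrs, finetune_lrs),
               key=lambda pair: validation_error(*pair))


# Stand-in scoring function (made up) so the sketch runs without training
best = select_best(lambda p, f: abs(p - 0.01) + abs(f - 0.1))
print(len(pretrain_lrs) * len(finetune_lrs), best)
```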

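One way to improve the running time of the code (provided enough memory is available) is to compute the representation of the entire dataset at layer i in a single pass, once the weights of layer i−1 have been fixed. In other words, start by training the first-layer RBM. Once it is trained, compute the hidden unit values for every example in the dataset and store them as a new dataset, which is used to train the second-layer RBM. Once the second-layer RBM is trained, compute the dataset for the third layer in the same way, and so on. This avoids recomputing the intermediate (hidden-layer) representations pretraining_epochs times, at the cost of increased memory usage.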
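A minimal NumPy sketch of this caching idea follows. The random weights stand in for a trained first layer, and `cache_representation` is a hypothetical helper name; the point is only that the transformation runs once and every later epoch slices minibatches from the stored array.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def cache_representation(dataset, W, hbias):
    """Transform the whole dataset once with a trained (now fixed) layer."""
    return sigmoid(dataset @ W + hbias)


rng = np.random.default_rng(0)
train_x = rng.random((100, 20))            # toy data standing in for the inputs
W1 = rng.normal(0.0, 0.01, size=(20, 8))   # stands in for trained layer-1 weights
b1 = np.zeros(8)

# Computed once after layer 1 is trained, then reused in every epoch of layer 2
layer2_data = cache_representation(train_x, W1, b1)

batch_size = 10
for epoch in range(3):
    for start in range(0, layer2_data.shape[0], batch_size):
        batch = layer2_data[start:start + batch_size]
        # ... one CD-k update of the layer-2 RBM on `batch` would go here ...

print(layer2_data.shape, layer2_data.nbytes)
```

The extra memory is one stored array per layer, sized by the number of examples times that layer's hidden units.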