python实现多元线性拟合、一元多项式拟合、多元多项式拟合

数据分析中经常会使用到数据拟合,本文中将阐述如何实现一元以及多元的线性拟合以及多项式拟合,本文中只涉及实现方式,不涉及理论知识。

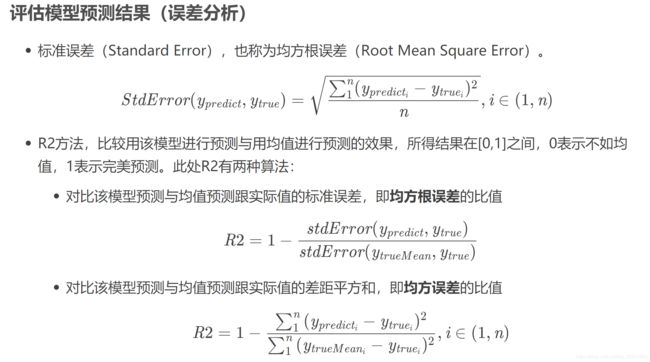

模型拟合中涉及的误差评估方法如下所示:

import numpy as np

def stdError_func(y_test, y):

return np.sqrt(np.mean((y_test - y) ** 2))

def R2_1_func(y_test, y):

return 1 - ((y_test - y) ** 2).sum() / ((y.mean() - y) ** 2).sum()

def R2_2_func(y_test, y):

y_mean = np.array(y)

y_mean[:] = y.mean()

return 1 - stdError_func(y_test, y) / stdError_func(y_mean, y)

一元线性拟合

import pandas as pd

import numpy as np

from sklearn.preprocessing import PolynomialFeatures

from sklearn import linear_model

filename = "E:/data.csv"

df= pd.read_csv(filename)

x = np.array(df.iloc[:,0].values)

y = np.array(df.iloc[:,5].values)

cft = linear_model.LinearRegression()

cft.fit(x[:,np.newaxis], y) #模型将x变成二维的形式, 输入的x的维度为[None, 1]

print("model coefficients", cft.coef_)

print("model intercept", cft.intercept_)

predict_y = cft.predict(x[:,np.newaxis])

strError = stdError_func(predict_y, y)

R2_1 = R2_1_func(predict_y, y)

R2_2 = R2_2_func(predict_y, y)

score = cft.score(x[:,np.newaxis], y) ##sklearn中自带的模型评估,与R2_1逻辑相同

print(' strError={:.2f}, R2_1={:.2f}, R2_2={:.2f}, clf.score={:.2f}'.format(

strError,R2_1,R2_2,score))

结果输出为:

model coefficients [-31.2375]

model intercept 7.415750000000001

strError=1.11, R2_1=0.28, R2_2=0.15, clf.score=0.28

模型拟合的表达式为:

y = 7.415750000000001 +(-31.2375) * x

从拟合的均方误差和得分来看效果不佳

多元线性拟合

import pandas as pd

import numpy as np

from sklearn.preprocessing import PolynomialFeatures

from sklearn import linear_model

filename = "E:/data.csv"

df= pd.read_csv(filename)

x = np.array(df.iloc[:,0:4].values)

y = np.array(df.iloc[:,5].values)

cft = linear_model.LinearRegression()

print(x.shape)

cft.fit(x, y) #

print("model coefficients", cft.coef_)

print("model intercept", cft.intercept_)

predict_y = cft.predict(x)

strError = stdError_func(predict_y, y)

R2_1 = R2_1_func(predict_y, y)

R2_2 = R2_2_func(predict_y, y)

score = cft.score(x, y) ##sklearn中自带的模型评估,与R2_1逻辑相同

print('strError={:.2f}, R2_1={:.2f}, R2_2={:.2f}, clf.score={:.2f}'.format(

strError,R2_1,R2_2,score))

结果输出为:

model coefficients [-31.2375 17.74375 44.325 5.7375 ]

model intercept 0.5051249999999978

strError=0.58, R2_1=0.80, R2_2=0.56, clf.score=0.80

模型拟合的表达式为:

y = 0.5051249999999978 +(-31.2375) * x11 + 17.74375 *x2 + 44.325 * x3 + 5.7375 * x4

从拟合的均方误差和得分来看在之前的基础上有所提升

一元多项式拟合

以三次多项式为例

import pandas as pd

import numpy as np

from sklearn.preprocessing import PolynomialFeatures

from sklearn import linear_model

filename = "E:/data.csv"

df= pd.read_csv(filename)

x = np.array(df.iloc[:,0].values)

y = np.array(df.iloc[:,5].values)

poly_reg =PolynomialFeatures(degree=3) #三次多项式

X_ploy =poly_reg.fit_transform(x[:, np.newaxis])

print(X_ploy.shape)

lin_reg_2=linear_model.LinearRegression()

lin_reg_2.fit(X_ploy,y)

predict_y = lin_reg_2.predict(X_ploy)

strError = stdError_func(predict_y, y)

R2_1 = R2_1_func(predict_y, y)

R2_2 = R2_2_func(predict_y, y)

score = lin_reg_2.score(X_ploy, y) ##sklearn中自带的模型评估,与R2_1逻辑相同

print("model coefficients", lin_reg_2.coef_)

print("model intercept", lin_reg_2.intercept_)

print('degree={}: strError={:.2f}, R2_1={:.2f}, R2_2={:.2f}, clf.score={:.2f}'.format(

3, strError,R2_1,R2_2,score))

输出结果

model coefficients [ 0. 990.64583333 -11906.25 44635.41666667]

model intercept -20.724999999999117

degree=3: strError=1.08, R2_1=0.32, R2_2=0.17, clf.score=0.32

对应的函数表达式为 -20.724999999999117 + [0, 990.64583333, -11906.25, 44635.41666667] *[1, x, x^2, x.^3].T = -20.724999999999117 + 990.64583333 * x + ( -11906.25) * x^2 + 44635.41666667 * x^3

多元多项式拟合

import pandas as pd

import numpy as np

from sklearn.preprocessing import PolynomialFeatures

from sklearn import linear_model

filename = "E:/data.csv"

df= pd.read_csv(filename)

x = np.array(df.iloc[:,0:4].values)

y = np.array(df.iloc[:,5].values)

poly_reg =PolynomialFeatures(degree=2) #三次多项式

X_ploy =poly_reg.fit_transform(x)

lin_reg_2=linear_model.LinearRegression()

lin_reg_2.fit(X_ploy,y)

predict_y = lin_reg_2.predict(X_ploy)

strError = stdError_func(predict_y, y)

R2_1 = R2_1_func(predict_y, y)

R2_2 = R2_2_func(predict_y, y)

score = lin_reg_2.score(X_ploy, y) ##sklearn中自带的模型评估,与R2_1逻辑相同

print("coefficients", lin_reg_2.coef_)

print("intercept", lin_reg_2.intercept_)

print('degree={}: strError={:.2f}, R2_1={:.2f}, R2_2={:.2f}, clf.score={:.2f}'.format(

3, strError,R2_1,R2_2,score))

函数输出结果为:

coefficients [ 0. 332.28129937 -19.9240981 -9.10607925

-191.05593023 -287.93919929 -912.11402936 -1230.21922184

-207.90033986 99.03441748 190.26204994 433.25169929

273.13674555 257.66550523 344.92652936]

intercept 4.35175537840722

degree=3: strError=0.23, R2_1=0.97, R2_2=0.82, clf.score=0.97

代码中输入的自变量是一个包含四个变量的输入, 对应coefficients输出的是长度为15的向量, 其中对应到的变量分别为 variable_X = [1, x1, x2, x3, x4, x 1 ∗ x 1 x1*x1 x1∗x1, x 1 ∗ x 2 x1*x2 x1∗x2, x 1 ∗ x 3 x1*x3 x1∗x3, x 1 ∗ x 4 x1*x4 x1∗x4, x 2 ∗ x 2 x2*x2 x2∗x2, x 2 ∗ x 3 x2*x3 x2∗x3, x 2 ∗ x 4 x2*x4 x2∗x4, x 3 ∗ x 3 x3*x3 x3∗x3, x 3 ∗ x 4 x3*x4 x3∗x4, x 4 ∗ x 4 x4*x4 x4∗x4]

对应的方程式为: i n t e r c e p t + c o e f f i c i e n t s ∗ v a r i a b l e X . T intercept + coefficients * variable_X.T intercept+coefficients∗variableX.T

代码中涉及到的数据集如下:

a,b,c,d,e

0.06,0.2,0.02,0.1,0.340

0.1,0.28,0.02,0.14,0.370

0.12,0.32,0.02,0.16,0.377

0.08,0.24,0.02,0.12,0.383

0.08,0.32,0.04,0.1,0.383

0.12,0.28,0.03,0.1,0.393

0.1,0.24,0.05,0.1,0.385

0.06,0.32,0.05,0.14,0.362

0.12,0.2,0.05,0.12,0.320

0.06,0.28,0.04,0.12,0.393

0.08,0.28,0.05,0.16,0.402

0.08,0.2,0.03,0.14,0.349

0.1,0.2,0.04,0.16,0.335

0.1,0.32,0.03,0.12,0.387

0.12,0.24,0.04,0.14,0.390

0.06,0.24,0.03,0.16,0.315

指数函数和幂函数拟合参照网址:

https://blog.csdn.net/kl28978113/article/details/88818885

参考链接:

https://blog.csdn.

net/weixin_44794704/article/details/89246032

https://blog.csdn.net/bxg1065283526/article/details/80043049

https://www.cnblogs.com/Lin-Yi/p/8975638.html