Kubernetes Single-Master Binary Deployment

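Three Ways to Deploy Kubernetes

Minikube

- Minikube is a tool that quickly runs a single-node Kubernetes cluster locally. It is intended only for trying out Kubernetes or for day-to-day development.

Kubeadm

- Kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

Binary packages

- Download the release binaries from the official site and deploy each component by hand to assemble a Kubernetes cluster.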

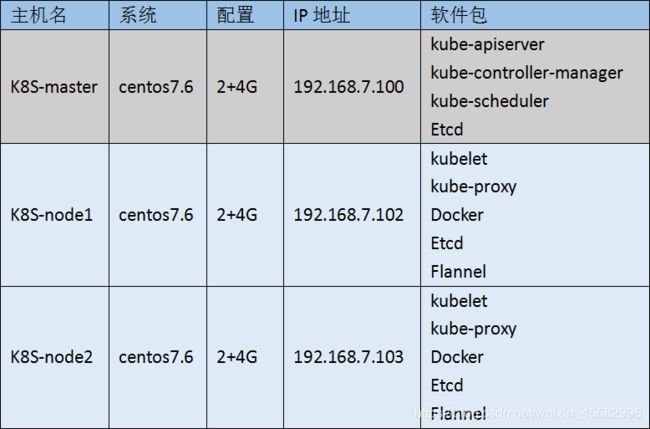

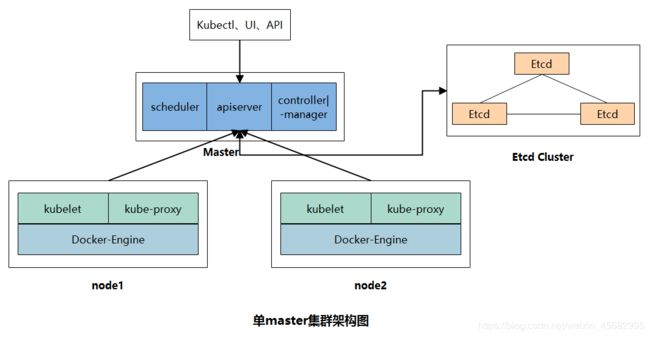

Architecture of the single-master binary deployment

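Master node:

The master node consists of four main modules: apiserver, scheduler, controller-manager, and etcd.

- apiserver

apiserver exposes the Kubernetes API as a RESTful service. It is the single entry point for management commands: every create, read, update, or delete on a resource is handled by apiserver, which then persists the result to etcd. As the diagram shows, kubectl (the client tool shipped with Kubernetes, which internally calls the Kubernetes API) talks to apiserver directly.

- scheduler

scheduler assigns Pods to suitable Nodes. Treated as a black box, its input is a Pod plus a list of Nodes, and its output is a binding between the Pod and one Node. Kubernetes ships with built-in scheduling algorithms and also keeps the interface open, so users can define their own scheduling algorithm to suit their needs.

- controller manager

If apiserver does the front-office work, controller manager handles the back office. Every resource type has a corresponding controller, and controller manager is responsible for running those controllers. For example, when a Pod is created through apiserver, apiserver's job is done once the Pod has been created successfully; keeping resources in their desired state afterwards is the controllers' job.

- etcd

etcd is a highly available key-value store. Kubernetes uses it to store the state of every resource, and this stored state is what the RESTful API serves.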

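Node:

Each Node consists of three main modules: kubelet, kube-proxy, and Docker Engine.

- kube-proxy

This module implements service discovery and reverse proxying in Kubernetes. kube-proxy forwards TCP and UDP connections and by default uses a round-robin algorithm to distribute client traffic across the group of backend Pods behind a Service. For service discovery, kube-proxy watches Service and Endpoints objects for changes (through apiserver, which in turn relies on etcd's watch mechanism) and maintains a Service-to-Endpoints mapping, so that changes in backend Pod IPs do not affect clients.

- kubelet

kubelet is the master's agent on each Node and the most important module on the Node. It maintains and manages all containers on that Node, except containers that were not created through Kubernetes, which it does not manage. In essence, it makes the running state of Pods match the desired state.

- Docker Engine

The container engine.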

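Kubernetes network types

- Overlay Network: a virtual networking technique layered on top of an underlying network; the hosts in it are connected by virtual links.

- VXLAN: encapsulates the original packet in UDP, uses the underlying network's IP/MAC as the outer header, and sends it over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the data to the target address.

- Flannel: a kind of overlay network. It likewise wraps the original packet inside another network packet for routing and forwarding, and currently supports UDP, VXLAN, AWS VPC, and GCE routes as forwarding backends.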
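
The cost of the VXLAN encapsulation described above can be worked out with simple arithmetic. The sketch below assumes an IPv4 underlay with a standard 1500-byte MTU; the function name vxlan_overlay_mtu is illustrative. The result matches the --mtu=1450 value that flannel writes for Docker later in this guide.

```shell
#!/bin/bash
# Bytes VXLAN places in front of the original frame, counted against the underlay IP MTU:
#   outer IPv4 header 20 + UDP header 8 + VXLAN header 8 + inner Ethernet header 14 = 50
vxlan_overlay_mtu() {
  local underlay_mtu=$1
  echo $(( underlay_mtu - 20 - 8 - 8 - 14 ))
}

vxlan_overlay_mtu 1500   # prints 1450
```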

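Lab deployment (the procedure is fairly involved; following along hands-on is recommended)

- Packages and scripts needed for the lab:

Link: https://pan.baidu.com/s/1vcVJSdpbl52nWzA1aKk4pQ

Extraction code: fnx7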

| Component | Certificates used |
|---|---|
| etcd | ca.pem, server.pem, server-key.pem |
| flannel | ca.pem, server.pem, server-key.pem |
| kube-apiserver | ca.pem, server.pem, server-key.pem |
| kubelet | ca.pem, ca-key.pem |
| kube-proxy | ca.pem, kube-proxy.pem, kube-proxy-key.pem |
| kubectl | ca.pem, admin.pem, admin-key.pem |

3. etcd cluster deployment (run on the master node)

(1) Create the working directory and the certificate directory

[root@localhost ~]# mkdir k8s

[root@localhost ~]# cd k8s/

[root@localhost k8s]# mkdir etcd-cert

(2) Create the download script for cfssl (the certificate tool)

[root@localhost k8s]# vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

# Run the script

[root@localhost k8s]# bash cfssl.sh

[root@localhost k8s]# ls /usr/local/bin/

cfssl cfssl-certinfo cfssljson

// cfssl: generates certificates

// cfssljson: takes the JSON produced by cfssl and writes the certificate files

// cfssl-certinfo: displays certificate information

(3) Create the certificates

# Define the CA certificate

[root@localhost k8s]# cd etcd-cert/
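
Certificate creation with cfssl generally needs three JSON inputs: a signing configuration (ca-config.json), a CA signing request (ca-csr.json), and a server signing request (server-csr.json). The sketch below is not the original script. The profile name www, the subject fields, the 10-year expiry, and the CERT_DIR variable are assumptions for illustration; only the three host IPs come from this guide.

```shell
#!/bin/bash
# Hypothetical sketch: values marked "assumed" are not taken from the original script.
CERT_DIR=${CERT_DIR:-$(mktemp -d)}   # the guide works in /root/k8s/etcd-cert

# Signing policy; the profile name "www" and the 10-year expiry are assumed.
cat > "${CERT_DIR}/ca-config.json" <<'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF

# CA certificate signing request; subject fields are assumed.
cat > "${CERT_DIR}/ca-csr.json" <<'EOF'
{
  "CN": "etcd CA",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "ST": "Beijing", "L": "Beijing" } ]
}
EOF

# Server certificate request; "hosts" lists every etcd member IP used in this guide.
cat > "${CERT_DIR}/server-csr.json" <<'EOF'
{
  "CN": "etcd",
  "hosts": ["192.168.7.100", "192.168.7.102", "192.168.7.103"],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "ST": "Beijing", "L": "Beijing" } ]
}
EOF

# Only attempt generation when the cfssl tools are installed.
if command -v cfssl >/dev/null 2>&1 && command -v cfssljson >/dev/null 2>&1; then
  (
    cd "${CERT_DIR}" || exit 1
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
      -profile=www server-csr.json | cfssljson -bare server
  )
fi
ls "${CERT_DIR}"
```

With the tools installed, this should leave ca.pem, ca-key.pem, server.pem, and server-key.pem in the directory, which are the four files copied to /opt/etcd/ssl in step (8).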

[root@localhost k8s]# cat > ca-config.json < ca-csr.json < server-csr.json <

(4) Download the etcd binary package

- Download page: https://github.com/etcd-io/etcd/releases

(5) Upload the downloaded package to the k8s directory and extract it

[root@localhost k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@localhost k8s]# ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

(6) Create the etcd working directory with subdirectories for configuration files, binaries, and certificates

[root@localhost k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

[root@localhost k8s]# ls /opt/etcd/

bin cfg ssl

(7) Add the etcd executables

[root@localhost k8s]# cd etcd-v3.3.10-linux-amd64/

[root@localhost etcd-v3.3.10-linux-amd64]# mv etcd etcdctl /opt/etcd/bin/

[root@localhost etcd-v3.3.10-linux-amd64]# ls /opt/etcd/bin/

etcd etcdctl

(8) Copy the certificates into the etcd working directory

[root@localhost k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

[root@localhost k8s]# ls /opt/etcd/ssl/

ca-key.pem ca.pem server-key.pem server.pem

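(9) Create and run the etcd.sh script to generate the configuration files. Because only one node exists so far and the other members cannot be reached, the script hangs while waiting for them.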

[root@localhost k8s]# vim etcd.sh

#!/bin/bash

# The line below is an example of how to invoke this script

#example: ./etcd.sh etcd01 192.168.1.10 etcd02=https://192.168.1.11:2380,etcd03=https://192.168.1.12:2380

ETCD_NAME=$1

ETCD_IP=$2

ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

cat <<EOF >$WORK_DIR/cfg/etcd

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

# Generate the etcd systemd unit file

cat <<EOF >/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

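# Make the script executable and start the service

[root@localhost k8s]# chmod +x etcd.sh

# Only the etcd01 node exists so far and it cannot reach etcd02 or etcd03, so the error is expected and can be ignored for now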

[root@localhost k8s]# ./etcd.sh etcd01 192.168.7.100 etcd02=https://192.168.7.102:2380,etcd03=https://192.168.7.103:2380

# Open a new terminal session and check that the etcd process is running

[root@localhost k8s]# ps -ef | grep etcd

root 12331 1 2 20:52 ? 00:00:01 /opt/etcd/bin/etcd --name=etcd01 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.7.100:2380 --listen-client-urls=https://192.168.7.100:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.7.100:2379 --initial-advertise-peer-urls=https://192.168.7.100:2380 --initial-cluster=etcd01=https://192.168.7.100:2380,etcd02=https://192.168.7.102:2380,etcd03=https://192.168.7.103:2380 --initial-cluster-token=etcd-cluster --initial-cluster-state=new --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem --trusted-ca-file=/opt/etcd/ssl/ca.pem --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

root 12342 10089 0 20:52 pts/0 00:00:00 grep --color=auto etcd
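
The third argument to etcd.sh, as invoked above, is a comma-separated list of the other members in name=https://ip:2380 form. Typing it by hand is easy to get wrong, so here is a minimal sketch of a helper that builds it from name and IP pairs. The function name build_initial_cluster is hypothetical and not part of the original scripts.

```shell
#!/bin/bash
# Join "name ip" pairs into the member list format that etcd.sh expects as $3.
build_initial_cluster() {
  local out="" name ip
  while [ $# -ge 2 ]; do
    name=$1; ip=$2; shift 2
    # Prepend a comma only when out already holds an earlier member.
    out="${out:+${out},}${name}=https://${ip}:2380"
  done
  echo "$out"
}

build_initial_cluster etcd02 192.168.7.102 etcd03 192.168.7.103
# prints etcd02=https://192.168.7.102:2380,etcd03=https://192.168.7.103:2380
```

The result could then be passed straight through, for example: ./etcd.sh etcd01 192.168.7.100 "$(build_initial_cluster etcd02 192.168.7.102 etcd03 192.168.7.103)".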

(9) Copy the etcd working directory and the systemd unit file to the other nodes, then adjust /opt/etcd/cfg/etcd on each of them

# On the master node

[root@localhost k8s]# scp -r /opt/etcd/ root@192.168.7.102:/opt

[root@localhost k8s]# scp -r /opt/etcd/ root@192.168.7.103:/opt

[root@localhost k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.7.102:/usr/lib/systemd/system/

[root@localhost k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.7.103:/usr/lib/systemd/system/

# On node1 and node2, edit the configuration file

[root@localhost ~]# vim /opt/etcd/cfg/etcd

#[Member]

# Change the node name; names must be unique

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# Change the IP address in the following four URLs to this node's own address

ETCD_LISTEN_PEER_URLS="https://192.168.7.102:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.7.102:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.7.102:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.7.102:2379"

# The lines below are fixed; do not change them

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.7.100:2380,etcd02=https://192.168.7.102:2380,etcd03=https://192.168.7.103:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

# Start etcd

[root@localhost ~]# systemctl start etcd

[root@localhost ~]# systemctl enable etcd

[root@localhost ~]# systemctl status etcd
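
Editing the copied file by hand on every node is repetitive. As a rough alternative, the same changes (node name plus the four listen and advertise URLs, leaving ETCD_INITIAL_CLUSTER untouched) can be scripted. This is a sketch assuming GNU sed and the file layout shown above; the function name retarget_etcd_cfg and the scratch file are hypothetical.

```shell
#!/bin/bash
# Rewrite the member name and this node's own URLs in a copied etcd config file.
retarget_etcd_cfg() {
  local cfg=$1 name=$2 ip=$3 key
  sed -i "s|^ETCD_NAME=.*|ETCD_NAME=\"${name}\"|" "$cfg"
  # Only these four keys carry the local address; the cluster list must stay as is.
  for key in ETCD_LISTEN_PEER_URLS ETCD_LISTEN_CLIENT_URLS \
             ETCD_INITIAL_ADVERTISE_PEER_URLS ETCD_ADVERTISE_CLIENT_URLS; do
    sed -i "/^${key}=/ s|https://[0-9.]*:|https://${ip}:|" "$cfg"
  done
}

# Demonstration on a scratch copy of the master's file.
DEMO_CFG=$(mktemp)
cat > "$DEMO_CFG" <<'EOF'
#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.7.100:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.7.100:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.7.100:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.7.100:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.7.100:2380,etcd02=https://192.168.7.102:2380,etcd03=https://192.168.7.103:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
retarget_etcd_cfg "$DEMO_CFG" etcd02 192.168.7.102
cat "$DEMO_CFG"
```

On a real node the call would target /opt/etcd/cfg/etcd instead of the scratch file.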

(10) Check the etcd cluster health

# From the certificate directory

[root@localhost ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379" cluster-health

member 57b92743cdbef0be is healthy: got healthy result from https://192.168.7.100:2379

member 823cb89d12a9ab55 is healthy: got healthy result from https://192.168.7.103:2379

member a99d699d2f1c604c is healthy: got healthy result from https://192.168.7.102:2379

cluster is healthy

3. Install Docker on both node machines

(1) Dependencies, the Docker package repository, and the Docker installation

[root@localhost ~]# yum -y install yum-utils device-mapper-persistent-data lvm2

[root@localhost ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@localhost ~]# yum -y install docker-ce

# Start Docker

[root@localhost ~]# systemctl restart docker

[root@localhost ~]# systemctl enable docker

(2) Registry mirror (an accelerator address can be requested from Alibaba Cloud)

[root@localhost ~]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://xxx.mirror.aliyuncs.com"]

}

EOF

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart docker

(3) Network tuning

[root@localhost ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward=1

[root@localhost ~]# sysctl -p

[root@localhost ~]# service network restart

[root@localhost ~]# systemctl restart docker
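
When this step is repeated on several nodes, appending the setting with an editor risks duplicate or conflicting lines. A minimal idempotent sketch, assuming GNU sed; the helper name ensure_sysctl is hypothetical and the demonstration writes to a scratch file rather than /etc/sysctl.conf.

```shell
#!/bin/bash
# Set key=value in a sysctl-style file: update the line if present, append otherwise.
ensure_sysctl() {
  local file=$1 key=$2 value=$3
  if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$file" 2>/dev/null; then
    sed -i -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key}=${value}|" "$file"
  else
    echo "${key}=${value}" >> "$file"
  fi
}

# Demonstration: calling it twice still leaves exactly one line.
DEMO_SYSCTL=$(mktemp)
echo "net.ipv4.ip_forward = 0" > "$DEMO_SYSCTL"
ensure_sysctl "$DEMO_SYSCTL" net.ipv4.ip_forward 1
ensure_sysctl "$DEMO_SYSCTL" net.ipv4.ip_forward 1
cat "$DEMO_SYSCTL"
```

On a node, the equivalent would be ensure_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1 followed by sysctl -p.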

4. Install the flannel network component (on the node machines)

(1) On the master, write the base network configuration into etcd

[root@localhost ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379" set /coreos.com/network/config '{"Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{"Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

# Read back what was written

[root@localhost ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379" get /coreos.com/network/config

{"Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
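
The Network value written above is a /16, and flannel hands each node a smaller block out of it (a /24 in this guide, as the bip value in subnet.env shows later). The arithmetic below sketches the upper bounds this implies; the variable names are illustrative, and the real number of allocatable blocks may be slightly lower because part of the range can be reserved.

```shell
#!/bin/bash
network_prefix=16   # from "Network": "172.17.0.0/16"
subnet_prefix=24    # per-node block size seen in this guide (--bip=172.17.57.1/24)

# Number of /24 blocks that fit in the /16, and addresses per block
# excluding the network and broadcast addresses.
subnets=$(( 1 << (subnet_prefix - network_prefix) ))
hosts_per_subnet=$(( (1 << (32 - subnet_prefix)) - 2 ))

echo "node subnets that fit: ${subnets}"                         # 256
echo "usable addresses per node subnet: ${hosts_per_subnet}"     # 254
```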

(2) Upload the flannel package to every node and extract it

[root@localhost ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

flanneld

mk-docker-opts.sh

README.md

(3) Create the Kubernetes working directory

[root@localhost ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

# Add the executables

[root@localhost ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

(4) Run the flannel.sh script

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

# Write the flannel configuration file

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

# Write the flannel systemd unit file

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

[root@localhost ~]# bash flannel.sh https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379
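
Each endpoint passed to flannel.sh needs a scheme, a host, and a port, and a missing port is easy to overlook in a long comma-separated list. A small pre-flight check can catch that before the service is started. This is a sketch only; the function name check_endpoints is hypothetical.

```shell
#!/bin/bash
# Return non-zero if any comma-separated endpoint is not of the form scheme://host:port.
check_endpoints() {
  local ep bad=0
  for ep in $(printf '%s' "$1" | tr ',' ' '); do
    if ! printf '%s\n' "$ep" | grep -Eq '^https?://[^:/]+:[0-9]+$'; then
      echo "malformed endpoint: ${ep}" >&2
      bad=1
    fi
  done
  return "$bad"
}

check_endpoints "https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379" \
  && echo "endpoints look well-formed"
```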

(5) Configure Docker to use the flannel network

[root@localhost ~]# vim /usr/lib/systemd/system/docker.service

# Change as follows

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

# Inspect the subnet file

[root@localhost ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.57.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

// Note: bip sets the docker0 bridge address and subnet used at startup

DOCKER_NETWORK_OPTIONS=" --bip=172.17.57.1/24 --ip-masq=false --mtu=1450"
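
Because subnet.env is plain KEY="value" text, it can also be read from a shell to check what Docker will be started with. The sketch below recreates the file shown above in a scratch location and extracts the expected docker0 address; the variable names DEMO_ENV and bridge_ip are illustrative.

```shell
#!/bin/bash
# Scratch copy of the subnet.env content shown above.
DEMO_ENV=$(mktemp)
cat > "$DEMO_ENV" <<'EOF'
DOCKER_OPT_BIP="--bip=172.17.57.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.17.57.1/24 --ip-masq=false --mtu=1450"
EOF

# Source the file so each key becomes a shell variable.
. "$DEMO_ENV"
echo "dockerd receives:${DOCKER_NETWORK_OPTIONS}"

# Strip the flag name and the prefix length to get the bridge address.
bridge_ip=${DOCKER_OPT_BIP#--bip=}
bridge_ip=${bridge_ip%/*}
echo "expected docker0 address: ${bridge_ip}"
```

On a node, the same two substitutions applied to /run/flannel/subnet.env give the address that docker0 should show after Docker is restarted.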

# Restart the Docker service

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart docker

# Check the network; the docker0 gateway IP has changed

[root@localhost ~]# ifconfig

docker0: flags=4163 mtu 1450

inet 172.17.57.1 netmask 255.255.255.0 broadcast 172.17.57.255

inet6 fe80::42:7cff:feff:d613 prefixlen 64 scopeid 0x20

ether 02:42:7c:ff:d6:13 txqueuelen 0 (Ethernet)

RX packets 4996 bytes 208971 (204.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9953 bytes 7571774 (7.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

(6) Create containers to test connectivity between the two nodes

# Start a container and check its network

[root@localhost ~]# docker run -it centos:7 /bin/bash

[root@433ee230aaeb /]# ifconfig

eth0: flags=4163 mtu 1450

inet 172.17.57.2 netmask 255.255.255.0 broadcast 172.17.57.255

ether 02:42:ac:11:39:02 txqueuelen 0 (Ethernet)

RX packets 14 bytes 1076 (1.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 14 bytes 1204 (1.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# Ping the IP of a container on the other node; if it responds, flannel is installed correctly

[root@433ee230aaeb /]# ping 172.17.24.2

PING 172.17.24.2 (172.17.24.2) 56(84) bytes of data.

64 bytes from 172.17.24.2: icmp_seq=1 ttl=62 time=0.509 ms

64 bytes from 172.17.24.2: icmp_seq=2 ttl=62 time=0.691 ms

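5. Deploy the master components

(1) Upload the master scripts and extract them

[root@localhost k8s]# unzip master.zip

(2) Create the apiserver certificate directory

[root@localhost k8s]# mkdir k8s-cert

(3) Create the certificate generation script and run it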

[root@localhost k8s]# cd k8s-cert/

[root@localhost k8s-cert]# vim k8s-cert.sh

cat > ca-config.json < ca-csr.json < server-csr.json < admin-csr.json < kube-proxy-csr.json <

(4) Create the Kubernetes working directory on the master and copy the apiserver certificates into it

[root@localhost k8s-cert]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@localhost k8s-cert]# cp *.pem /opt/kubernetes/ssl/

[root@localhost k8s-cert]# ls /opt/kubernetes/ssl/

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem

(5) Upload the Kubernetes package to /root/k8s and extract it

[root@localhost k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

(6) Copy the key binaries from the extracted package into the Kubernetes working directory

[root@localhost k8s]# cd /root/k8s/kubernetes/server/bin/

[root@localhost bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

(7) Create the management user and role

# First generate a random string to use as the token

[root@localhost bin]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

5fd75f08ee0f22c9d2ae64dcd402b298

# Create the token.csv file

[root@localhost bin]# vim /opt/kubernetes/cfg/token.csv

5fd75f08ee0f22c9d2ae64dcd402b298,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
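
The two manual steps above (generate a random string, then paste it into token.csv) can be combined so the token never has to be copied by hand. A minimal sketch: CFG_DIR defaults to a scratch directory here, whereas the guide writes to /opt/kubernetes/cfg, and the variable names are illustrative.

```shell
#!/bin/bash
CFG_DIR=${CFG_DIR:-$(mktemp -d)}   # the guide uses /opt/kubernetes/cfg

# 16 random bytes rendered as 32 hex characters, with spaces and newlines removed.
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')

# Same column layout as the token.csv line shown above: token, user, uid, group.
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$BOOTSTRAP_TOKEN" \
  > "${CFG_DIR}/token.csv"

cat "${CFG_DIR}/token.csv"
```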

(8) With the binaries, token, and certificates ready, start apiserver

[root@localhost k8s]# bash apiserver.sh 192.168.7.100 https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

# Check that the service is running and the ports are open

[root@localhost k8s]# ps aux | grep apiserver

root 15472 39.1 8.6 421080 333804 ? Ssl 19:37 0:10 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.7.100:2379,https://192.168.7.102:2379,https://192.168.7.103:2379 --bind-address=192.168.7.100 --secure-port=6443 --advertise-address=192.168.7.100 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem

root 15495 0.0 0.0 112724 988 pts/1 S+ 19:38 0:00 grep --color=auto apiserver

[root@localhost k8s]# netstat -natp | grep 6443

tcp 0 0 192.168.7.100:6443 0.0.0.0:* LISTEN 15472/kube-apiserve

tcp 0 0 192.168.7.100:44136 192.168.7.100:6443 ESTABLISHED 15472/kube-apiserve

tcp 0 0 192.168.7.100:6443 192.168.7.100:44136 ESTABLISHED 15472/kube-apiserve

[root@localhost k8s]# netstat -natp | grep 8080

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 15472/kube-apiserve

(9) Start the scheduler service

[root@localhost k8s]# ./scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

# Check the service

[root@localhost k8s]# ps aux | grep kube

(10) Start controller-manager

[root@localhost k8s]# ./controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

# Check the service

[root@localhost k8s]# ps aux | grep kube

(11) Check the master status; every component should report ok or true

[root@localhost k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

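6. Install the node components

(1) Upload the node scripts and extract them

[root@localhost ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh // script that installs and starts kube-proxy

inflating: kubelet.sh // script that installs and starts kubelet

(2) Copy kubelet and kube-proxy from the Kubernetes package extracted on the master to the node machines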

[root@localhost bin]# scp kubelet kube-proxy root@192.168.7.102:/opt/kubernetes/bin/

[root@localhost bin]# scp kubelet kube-proxy root@192.168.7.103:/opt/kubernetes/bin/

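(3) On the master, create the /root/k8s/kubeconfig working directory, add the script, run it, and copy the generated files to the node machines

- A kubeconfig file holds the configuration used to access the cluster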

[root@localhost k8s]# mkdir kubeconfig

[root@localhost k8s]# cd kubeconfig/

[root@localhost kubeconfig]# vim kubeconfig

APISERVER=$1

SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=5fd75f08ee0f22c9d2ae64dcd402b298 \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=$SSL_DIR/kube-proxy.pem \

--client-key=$SSL_DIR/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Set the environment variable

[root@localhost kubeconfig]# vim /etc/profile

// Append at the end of the file

export PATH=$PATH:/opt/kubernetes/bin/

[root@localhost kubeconfig]# source /etc/profile

# Generate the configuration files

[root@localhost kubeconfig]# bash kubeconfig 192.168.7.100 /root/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

[root@localhost kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig
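
The kubeconfig script passes the bootstrap token as a literal after --token=, and that value has to be identical to the first field of token.csv. One way to avoid a mismatch is to read it from the file. A minimal sketch under that assumption; the function name read_bootstrap_token and the scratch file are hypothetical.

```shell
#!/bin/bash
# Print the first comma-separated field of the first line of a token.csv file.
read_bootstrap_token() {
  head -n 1 "$1" | cut -d, -f1
}

# Demonstration with the token.csv line used in this guide.
DEMO_TOKEN_FILE=$(mktemp)
echo '5fd75f08ee0f22c9d2ae64dcd402b298,kubelet-bootstrap,10001,"system:kubelet-bootstrap"' > "$DEMO_TOKEN_FILE"

BOOTSTRAP_TOKEN=$(read_bootstrap_token "$DEMO_TOKEN_FILE")
echo "--token=${BOOTSTRAP_TOKEN}"
```

In the script itself, the literal could then be replaced by --token=${BOOTSTRAP_TOKEN} after reading /opt/kubernetes/cfg/token.csv.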

# Copy the configuration files to the node machines

[root@localhost kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.7.102:/opt/kubernetes/cfg/

[root@localhost kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.7.103:/opt/kubernetes/cfg/

# Create the bootstrap role binding so that kubelet-bootstrap may connect to apiserver and request certificate signing

[root@localhost kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

(4) Install and start kubelet on node1

# Install kubelet

[root@localhost ~]# bash kubelet.sh 192.168.7.102

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

# Check that the service has started

[root@localhost ~]# ps aux | grep kubelet

[root@localhost ~]# systemctl status kubelet.service

(5) The certificate request from node1 is now visible on the master

[root@localhost kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-bPGome_z3ZBCFpug_FyVVoOXCYFuID6MmCO5ymtDQpQ 2m1s kubelet-bootstrap Pending

// Pending: waiting for the cluster to issue a certificate to this node

# Approve the certificate on the master

[root@localhost kubeconfig]# kubectl certificate approve node-csr-bPGome_z3ZBCFpug_FyVVoOXCYFuID6MmCO5ymtDQpQ

[root@localhost kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-bPGome_z3ZBCFpug_FyVVoOXCYFuID6MmCO5ymtDQpQ 6m12s kubelet-bootstrap Approved,Issued

// Approved,Issued: the node has been admitted to the cluster

# Confirm that node1 has joined the cluster

[root@localhost kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.7.102 Ready 2m9s v1.12.3

(6) On node1, start the kube-proxy service

[root@localhost ~]# bash proxy.sh 192.168.7.102

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

# Check that the service has started

[root@localhost ~]# systemctl status kube-proxy.service

(7) Deploy node2

# Copy node1's /opt/kubernetes directory to node2

[root@localhost ~]# scp -r /opt/kubernetes/ root@192.168.7.103:/opt

# Copy the kubelet and kube-proxy service files from node1 to node2

[root@localhost ~]# scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.7.103:/usr/lib/systemd/system/

(8) On node2, edit the configuration files copied from node1

# First delete the copied certificates; each node must request its own

[root@localhost ~]# cd /opt/kubernetes/ssl/

[root@localhost ssl]# rm -rf *

# Edit the three configuration files: kubelet, kubelet.config, and kube-proxy

[root@localhost ssl]# cd /opt/kubernetes/cfg/

[root@localhost cfg]# vim kubelet

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.7.103 \ // change to this node's IP

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

[root@localhost cfg]# vim kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.7.103 // change to this node's IP

[root@localhost cfg]# vim kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.7.103 \ // change to this node's IP

# Start the services

[root@localhost cfg]# systemctl start kubelet.service

[root@localhost cfg]# systemctl enable kubelet.service

[root@localhost cfg]# systemctl start kube-proxy.service

[root@localhost cfg]# systemctl enable kube-proxy.service
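
The three edits in step (8) all replace node1's address with node2's, so they can be scripted instead of made in vim. This is a sketch assuming GNU sed and that the files contain the lines shown above; the function name retarget_node_cfg is hypothetical, and the demonstration files hold only the lines quoted in this guide.

```shell
#!/bin/bash
# Point the copied kubelet, kubelet.config and kube-proxy files at a new node IP.
retarget_node_cfg() {
  local cfg_dir=$1 ip=$2
  sed -i "s|--hostname-override=[0-9.]*|--hostname-override=${ip}|" \
    "${cfg_dir}/kubelet" "${cfg_dir}/kube-proxy"
  sed -i "s|^address:.*|address: ${ip}|" "${cfg_dir}/kubelet.config"
}

# Demonstration on scratch copies that still carry node1's address.
DEMO_DIR=$(mktemp -d)
cat > "${DEMO_DIR}/kubelet" <<'EOF'
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.7.102 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig"
EOF
cat > "${DEMO_DIR}/kubelet.config" <<'EOF'
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.7.102
EOF
cat > "${DEMO_DIR}/kube-proxy" <<'EOF'
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.7.102"
EOF

retarget_node_cfg "$DEMO_DIR" 192.168.7.103
grep -h -e 'hostname-override' -e '^address' "${DEMO_DIR}"/*
```

On node2 the call would be retarget_node_cfg /opt/kubernetes/cfg 192.168.7.103, run before the services are started.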

(9) On the master, approve node2's request and issue its certificate

# List the requests

[root@localhost kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-UFj47uNOLQwNmsXwAhVZPg4dtjPGIUL8FZwQaDhTYBI 2m56s kubelet-bootstrap Pending

node-csr-bPGome_z3ZBCFpug_FyVVoOXCYFuID6MmCO5ymtDQpQ 30m kubelet-bootstrap Approved,Issued

# Approve the Pending request

[root@localhost kubeconfig]# kubectl certificate approve node-csr-UFj47uNOLQwNmsXwAhVZPg4dtjPGIUL8FZwQaDhTYBI

[root@localhost kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-UFj47uNOLQwNmsXwAhVZPg4dtjPGIUL8FZwQaDhTYBI 5m23s kubelet-bootstrap Approved,Issued

node-csr-bPGome_z3ZBCFpug_FyVVoOXCYFuID6MmCO5ymtDQpQ 32m kubelet-bootstrap Approved,Issued
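
Copying the long request name by hand for each node gets tedious as nodes are added. The listing format above is regular enough to filter: the first column is the name and the last column is the condition. A minimal sketch, with the hypothetical helper pending_csrs demonstrated on the sample output from this step:

```shell
#!/bin/bash
# Read "kubectl get csr" output on stdin and print the names of Pending requests.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

sample_output='NAME AGE REQUESTOR CONDITION
node-csr-UFj47uNOLQwNmsXwAhVZPg4dtjPGIUL8FZwQaDhTYBI 2m56s kubelet-bootstrap Pending
node-csr-bPGome_z3ZBCFpug_FyVVoOXCYFuID6MmCO5ymtDQpQ 30m kubelet-bootstrap Approved,Issued'

printf '%s\n' "$sample_output" | pending_csrs

# On the master (requires a working kubectl and GNU xargs), the filter can feed the approval:
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```

Approving everything that is Pending is only reasonable in a lab like this one, where every request is known to come from a node that was just set up.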

(10) On the master, check the cluster; once every node reports Ready, the single-master Kubernetes deployment is complete

[root@localhost kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.7.102 Ready 26m v1.12.3

192.168.7.103 Ready 24s v1.12.3