Setting up CDH on Alibaba Cloud (Step 1: Starting HDFS)

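Preparation

- Request three machines (CentOS) on Alibaba Cloud

- Install the Oracle JDK

Request three machines (CentOS) on Alibaba Cloud

PS. Each machine needs more than 4 GB of memory, because the NameNode needs that memory at startup

Configure hostname resolution on every machine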

vim /etc/hosts

172.24.218.96 worker1

172.24.218.97 worker2

172.24.218.98 worker3
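Since the same three entries have to be added on every machine, a small helper can make the edit repeatable. The following is only a sketch: `add_host_entry` is a hypothetical helper name, not part of any tool, and it assumes simple hostnames like the ones above.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME is already mapped on a
# non-comment line, so running it twice does not duplicate entries.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # NAME must appear as a whole word (followed by whitespace or end of line).
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on every machine):
#   add_host_entry /etc/hosts 172.24.218.96 worker1
#   add_host_entry /etc/hosts 172.24.218.97 worker2
#   add_host_entry /etc/hosts 172.24.218.98 worker3
```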

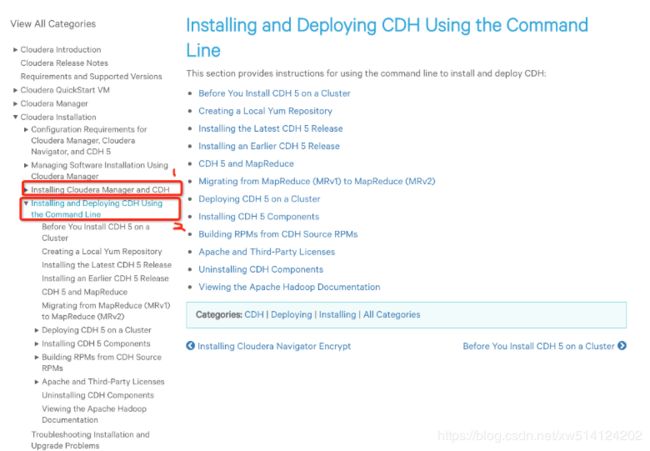

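Follow the official Cloudera documentation

There are two ways to install CDH:

- Cloudera Manager

- Command Line

Benefits of installing CDH

Install the Oracle JDK before installing CDH

Documentation link

JDK download link

PS. Download the x64 build if the system you chose is 64-bit

After downloading the JDK, extract it to /usr/java/default

Add the environment variables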

[root@izhp333sqrlsy3kr46m4wuz java]# vim /etc/profile

export JAVA_HOME=/usr/java/default

export PATH=$PATH:$JAVA_HOME/bin

[root@izhp333sqrlsy3kr46m4wuz java]# source /etc/profile

Verify that Java is installed correctly

[root@izhp333sqrlsy3kr46m4wuz java]# java -version

java version "1.8.0_162"

Java(TM) SE Runtime Environment (build 1.8.0_162-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.162-b12, mixed mode)

Install CDH

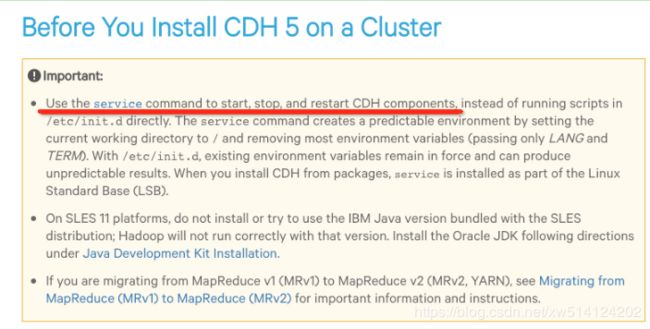

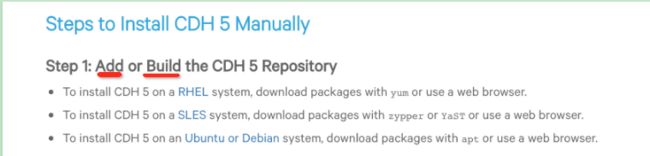

Step 1: Add the CDH yum repository

There are two ways to add the CDH yum repository (I used the first):

- Download cloudera-cdh5.repo into /etc/yum.repos.d/

cd /etc/yum.repos.d/

wget https://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/cloudera-cdh5.repo

sudo yum clean all

PS. Changing keepcache from 0 to 1 in /etc/yum.conf keeps the downloaded rpm files
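The keepcache change can also be scripted instead of edited by hand. This is a minimal sketch, assuming the file already contains a `keepcache=` line (as the stock CentOS 7 /etc/yum.conf does); `set_keepcache` is a hypothetical helper name.

```shell
# set_keepcache FILE
# Rewrites an existing "keepcache=..." line in FILE to "keepcache=1".
# Lines that do not start with "keepcache" are left untouched.
set_keepcache() {
    file=$1
    tmp=$(mktemp) || return 1
    if sed 's/^keepcache[[:space:]]*=.*/keepcache=1/' "$file" > "$tmp"; then
        # Write back through cat so the original file keeps its permissions.
        cat "$tmp" > "$file"
    fi
    rm -f "$tmp"
}

# Usage (as root):
#   set_keepcache /etc/yum.conf
```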

- Creating a Local Yum Repository

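Download all the rpm packages referenced by cloudera-cdh5.repo to a server and serve them over HTTP for yum installs

This lets yum fetch the CDH packages over the internal network

Documentation link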

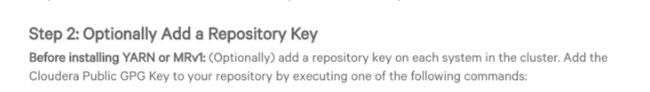

Step 2: Add the repository key

sudo rpm --import https://archive.cloudera.com/cdh5/redhat/7/x86_64/cdh/RPM-GPG-KEY-cloudera

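Step 3: Install ZooKeeper

NameNode HA will be deployed, so a ZooKeeper environment is required

Documentation link

Install on the machines that will run ZooKeeper (use an odd number of machines, at least 3)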

sudo yum install zookeeper -y

sudo yum install zookeeper-server -y

mkdir -p /var/lib/zookeeper

chown -R zookeeper /var/lib/zookeeper/

vim /etc/zookeeper/conf/zoo.cfg

# Add

server.1=worker1:2888:3888

server.2=worker2:2888:3888

server.3=worker3:2888:3888
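The `server.N` lines follow a fixed pattern, so they can be generated from the host list, with a check for the odd-size rule mentioned above. A sketch only: `zk_server_lines` is a hypothetical helper, and ports 2888/3888 are simply the ones used in this setup.

```shell
# zk_server_lines HOST...
# Prints one "server.N=HOST:2888:3888" line per host, with N counting from 1.
# Refuses ensembles that are even-sized or smaller than 3.
zk_server_lines() {
    if [ "$#" -lt 3 ] || [ $(( $# % 2 )) -eq 0 ]; then
        echo "need an odd number of hosts, at least 3" >&2
        return 1
    fi
    n=1
    for host in "$@"; do
        printf 'server.%d=%s:2888:3888\n' "$n" "$host"
        n=$((n + 1))
    done
}

# Usage (as root):
#   zk_server_lines worker1 worker2 worker3 >> /etc/zookeeper/conf/zoo.cfg
```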

# worker1

sudo service zookeeper-server init --myid=1

# worker2

sudo service zookeeper-server init --myid=2

… and so on for the remaining hosts
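Each host's `--myid` value has to match its `server.N` line in zoo.cfg. To avoid typing the number by hand, it can be looked up from the file. This is a sketch with a hypothetical helper `zk_myid`; the host name is passed explicitly because the machine's own hostname may differ from the names in /etc/hosts.

```shell
# zk_myid CFG HOST
# Prints the N from the "server.N=HOST:..." line in CFG.
# Returns non-zero (and prints nothing) if HOST is not listed.
zk_myid() {
    cfg=$1 host=$2
    id=$(sed -n "s/^server\.\([0-9][0-9]*\)=${host}:.*/\1/p" "$cfg" | head -n 1)
    [ -n "$id" ] && printf '%s\n' "$id"
}

# Usage on worker2:
#   sudo service zookeeper-server init --myid="$(zk_myid /etc/zookeeper/conf/zoo.cfg worker2)"
```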

# Start ZooKeeper

sudo service zookeeper-server start

# Check that it started successfully

zookeeper-client -server worker1:2181

Step 4: Install the CDH services

Install ResourceManager + NameNode

Install on the two machines chosen as masters (HA)

sudo yum install hadoop-yarn-resourcemanager -y

sudo yum install hadoop-hdfs-namenode -y

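Install NodeManager on every host except the ResourceManager hosts

PS. (With only 3 machines, I also installed NodeManager on the ResourceManager hosts)

Normally two hosts are masters and all the others are workers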

sudo yum install hadoop-yarn-nodemanager hadoop-hdfs-datanode hadoop-mapreduce -y

Pick one worker node and install

sudo yum install hadoop-mapreduce-historyserver hadoop-yarn-proxyserver -y

Install on the client

sudo yum install hadoop-client

Step 5: Edit the configuration files

vim hdfs-site.xml

<property>

<name>dfs.nameservices</name>

<value>xiwu-cluster</value>

</property>

<property>

<name>dfs.ha.namenodes.xiwu-cluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.xiwu-cluster.nn1</name>

<value>worker1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.xiwu-cluster.nn2</name>

<value>worker2:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.xiwu-cluster.nn1</name>

<value>worker1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.xiwu-cluster.nn2</name>

<value>worker2:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://worker1:8485;worker2:8485;worker3:8485/xiwu-cluster</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data/1/dfs/jn</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.xiwu-cluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence(hdfs)</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/var/lib/hadoop-hdfs/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions.superusergroup</name>

<value>hadoop</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///data/1/dfs/nn,file:///nfsmount/dfs/nn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///data/1/dfs/dn,file:///data/2/dfs/dn,file:///data/3/dfs/dn,file:///data/4/dfs/dn</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>
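The `dfs.namenode.shared.edits.dir` value is a plain URI of the form `qjournal://host1:8485;host2:8485;.../nameservice`. When the JournalNode list changes, it is easy to get the separators wrong, so the value can be assembled from the host list. A sketch with a hypothetical helper `qjournal_uri`; port 8485 is the one used in this configuration.

```shell
# qjournal_uri NAMESERVICE HOST...
# Joins the JournalNode hosts as "host:8485" separated by ';'
# and appends "/NAMESERVICE" as the journal ID.
qjournal_uri() {
    ns=$1; shift
    list=
    for host in "$@"; do
        list="${list:+$list;}$host:8485"
    done
    printf 'qjournal://%s/%s\n' "$list" "$ns"
}

# Usage:
#   qjournal_uri xiwu-cluster worker1 worker2 worker3
```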

vim core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://xiwu-cluster</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>worker1:2181,worker2:2181,worker3:2181</value>

</property>

Sync the two configuration files above to every machine in the cluster
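One way to do the sync is a loop over the other hosts with scp. The sketch below only prints the commands so they can be reviewed first. It assumes the files live in /etc/hadoop/conf and that the current user can SSH to the other machines; neither is stated above, and `print_sync_cmds` is a hypothetical helper.

```shell
# print_sync_cmds CONF_DIR HOST...
# Prints (does not run) one scp command per host that copies
# hdfs-site.xml and core-site.xml into the same directory there.
print_sync_cmds() {
    dir=$1; shift
    for host in "$@"; do
        printf 'scp %s/hdfs-site.xml %s/core-site.xml %s:%s/\n' \
            "$dir" "$dir" "$host" "$dir"
    done
}

# Usage on worker1: review the output, then pipe it to sh to execute.
#   print_sync_cmds /etc/hadoop/conf worker2 worker3
#   print_sync_cmds /etc/hadoop/conf worker2 worker3 | sh
```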

Configure passwordless SSH login for the hdfs user between the two HA machines

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence(hdfs)</value>

</property>

Start on one of the masters

su hdfs

ssh-keygen -t rsa

cd ~/.ssh

# pwd: /var/lib/hadoop-hdfs/.ssh

cp id_rsa.pub authorized_keys

# Sync the ~/.ssh directory to the other master

PS. Mind the directory ownership: chown -R hdfs:hdfs .ssh
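Besides ownership, SSH is also strict about modes: the private key must not be readable by other users, and sshd by default rejects keys when the directory is too permissive. The following sketch tightens them after copying; `fix_ssh_perms` is a hypothetical helper name.

```shell
# fix_ssh_perms SSH_DIR
# Sets SSH_DIR to 700 and, if present, id_rsa and authorized_keys to 600.
fix_ssh_perms() {
    dir=$1
    chmod 700 "$dir" || return 1
    for f in id_rsa authorized_keys; do
        if [ -f "$dir/$f" ]; then
            chmod 600 "$dir/$f" || return 1
        fi
    done
}

# Usage on both masters (as root), followed by a non-interactive login test:
#   fix_ssh_perms /var/lib/hadoop-hdfs/.ssh
#   sudo -u hdfs ssh -o BatchMode=yes worker2 true && echo "passwordless login works"
```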

journalnode (pick 3 machines, usually workers)

sudo mkdir -p /data/1/dfs/jn

sudo chown -R hdfs:hdfs /data/1/dfs/jn

sudo yum install hadoop-hdfs-journalnode -y

sudo service hadoop-hdfs-journalnode start

namenode

sudo mkdir -p /data/1/dfs/nn /nfsmount/dfs/nn

sudo chmod 700 /data/1/dfs/nn /nfsmount/dfs/nn

sudo chown -R hdfs:hdfs /data/1/dfs/nn /nfsmount/dfs/nn

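# Format (run once, on the primary NameNode only; the standby is initialized later with -bootstrapStandby)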

sudo -u hdfs hdfs namenode -format

datanode

sudo mkdir -p /data/1/dfs/dn /data/2/dfs/dn /data/3/dfs/dn /data/4/dfs/dn

sudo chown -R hdfs:hdfs /data/1/dfs/dn /data/2/dfs/dn /data/3/dfs/dn /data/4/dfs/dn
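The directories created here must match the `dfs.datanode.data.dir` value in hdfs-site.xml exactly. If the number of data disks differs per setup, the value can be generated rather than typed. A sketch only, assuming the /data/N/dfs/dn layout used above; `dn_data_dirs` is a hypothetical helper.

```shell
# dn_data_dirs COUNT
# Prints file:///data/1/dfs/dn,...,file:///data/COUNT/dfs/dn
# as a single comma-separated line.
dn_data_dirs() {
    count=$1 i=1 out=
    while [ "$i" -le "$count" ]; do
        out="${out:+$out,}file:///data/$i/dfs/dn"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}

# Usage:
#   dn_data_dirs 4
```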

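zkfc (runs on the HA NameNode hosts and controls failover)

sudo yum install hadoop-hdfs-zkfc -y

# Initialize the HA state in ZooKeeper (run once, from one NameNode host, before starting zkfc)

hdfs zkfc -formatZK

sudo service hadoop-hdfs-zkfc start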

Step 6: Start the services

Primary NameNode

sudo service hadoop-hdfs-namenode start

Standby NameNode

sudo -u hdfs hdfs namenode -bootstrapStandby

sudo service hadoop-hdfs-namenode start

DataNode

sudo service hadoop-hdfs-datanode start

ResourceManager

sudo service hadoop-yarn-resourcemanager start

NodeManager

sudo service hadoop-yarn-nodemanager start

MapReduce JobHistory

sudo service hadoop-mapreduce-historyserver start

Check that HDFS started successfully

sudo -u hdfs hadoop fs -mkdir /tmp

sudo -u hdfs hadoop fs -chmod -R 1777 /tmp
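With HA enabled, it is also worth confirming that exactly one NameNode is active and the other is standby. `hdfs haadmin -getServiceState` reports the state for a NameNode ID; the sketch below just evaluates the two answers. `ha_pair_ok` is a hypothetical helper, and nn1/nn2 are the IDs from `dfs.ha.namenodes.xiwu-cluster`.

```shell
# ha_pair_ok STATE1 STATE2
# Succeeds only when one NameNode is "active" and the other "standby".
ha_pair_ok() {
    case "$1,$2" in
        active,standby|standby,active) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage on a cluster host:
#   s1=$(sudo -u hdfs hdfs haadmin -getServiceState nn1)
#   s2=$(sudo -u hdfs hdfs haadmin -getServiceState nn2)
#   ha_pair_ok "$s1" "$s2" && echo "HA looks healthy" || echo "check NameNodes: $s1 / $s2"
```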

PS. Disable automatic start at boot

chkconfig --list

chkconfig hadoop-yarn-nodemanager off

chkconfig hadoop-yarn-resourcemanager off
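The same command can be applied to the other services installed in this walkthrough. The sketch below prints one `chkconfig ... off` line per service name so the list can be reviewed before running it; `print_disable_cmds` is a hypothetical helper, and which services to include is left to the reader.

```shell
# print_disable_cmds SERVICE...
# Prints (does not run) one "chkconfig SERVICE off" line per argument.
print_disable_cmds() {
    for svc in "$@"; do
        printf 'chkconfig %s off\n' "$svc"
    done
}

# Usage: review the output, then pipe it to a root shell.
#   print_disable_cmds hadoop-hdfs-namenode hadoop-hdfs-datanode zookeeper-server
#   print_disable_cmds hadoop-hdfs-namenode hadoop-hdfs-datanode zookeeper-server | sudo sh
```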