Docker (11): Network Namespaces

I. Network namespaces in practice: a Docker example

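1. Create a busybox container named test1 and keep it running (the endless sleep loop keeps the container's main process alive)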

sudo docker run -d --name test1 busybox /bin/sh -c "while true; do sleep 3600; done"

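sudo docker ps

2. Enter the container (bdff6fc0e901 is test1's container ID in this session; docker exec also accepts the name test1)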

sudo docker exec -it bdff6fc0e901 /bin/sh

3. Show the container's network interfaces

[vagrant@docker-node1 ~]$ sudo docker exec -it bdff6fc0e901 /bin/sh

/ # ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

5: eth0@if6: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

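valid_lft forever preferred_lft forever

4. Exit the container and list the host's interfaces. The host and the container are in separate network namespaces, isolated from each other.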
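The isolation can also be checked without comparing ip a listings. Linux exposes every process's network namespace as the symbolic link /proc/PID/ns/net, whose target has the form net:[INODE]; two processes share a network namespace exactly when those inode numbers match. A minimal Python sketch follows (the helper names are made up for illustration, and the inode number in the docstring is only an example value). Reading the link of another user's process, such as a container's main process, usually requires root.

```python
import os
import re


def netns_id(pid="self"):
    """Return the network-namespace link of a process, e.g. 'net:[4026531992]'.

    For processes owned by other users (such as a container's main process)
    this usually has to run as root.
    """
    return os.readlink(f"/proc/{pid}/ns/net")


def netns_inode(link):
    """Extract the inode number from a 'net:[NNN]' link string."""
    match = re.fullmatch(r"net:\[(\d+)\]", link)
    if match is None:
        raise ValueError(f"not a network-namespace link: {link!r}")
    return int(match.group(1))


def same_netns(pid_a, pid_b):
    """True if two processes share one network namespace."""
    return netns_inode(netns_id(pid_a)) == netns_inode(netns_id(pid_b))


if __name__ == "__main__":
    # A container's PID can be found with: docker inspect -f '{{.State.Pid}}' test1
    print("this process:", netns_id())
```

Run as root with the host shell's PID and test1's PID, same_netns should return False, which is the same isolation the two ip a listings show.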

(1) Leave the container:

exit

(2) List the host's interfaces:

ip a

[vagrant@docker-node1 ~]$ ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:26:10:60 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 64507sec preferred_lft 64507sec

inet6 fe80::5054:ff:fe26:1060/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:06:5f:b4 brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe06:5fb4/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ca:23:dc:f4 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:caff:fe23:dcf4/64 scope link

valid_lft forever preferred_lft forever

6: vethec4757e@if5: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 72:73:38:a1:ee:15 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::7073:38ff:fea1:ee15/64 scope link

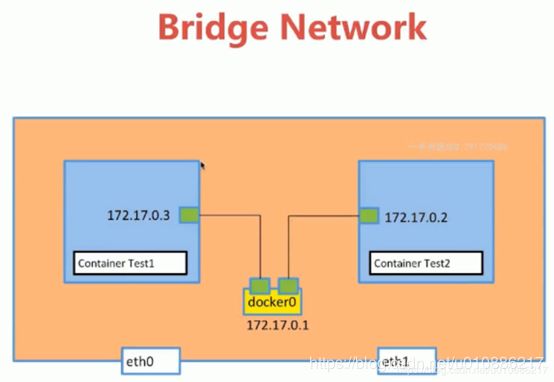

valid_lft forever preferred_lft forever 5.新创建容器test2

sudo docker run -d --name test2 busybox /bin/sh -c "while true; do sleep 3600; done"

sudo docker ps

Check that both containers are running:

[vagrant@docker-node1 ~]$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9b2130b3eedb busybox "/bin/sh -c 'while t…" 6 seconds ago Up 6 seconds test2

bdff6fc0e901 busybox "/bin/sh -c 'while t…" 7 minutes ago Up 7 minutes test1

6. Inspect the network namespaces of the two containers

sudo docker exec bdff6fc0e901 ip a

sudo docker exec 9b2130b3eedb ip a

Result:

[vagrant@docker-node1 ~]$ sudo docker exec bdff6fc0e901 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

5: eth0@if6: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[vagrant@docker-node1 ~]$ sudo docker exec 9b2130b3eedb ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

7: eth0@if8: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

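valid_lft forever preferred_lft forever

Each container has its own lo and eth0 with a different address (172.17.0.2 and 172.17.0.3), so the two containers live in two separate network namespaces.

7. The two containers can still reach each other with ping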
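Why does the ping work across two namespaces? Both eth0 addresses lie in 172.17.0.0/16, the subnet of the host's docker0 bridge (172.17.0.1/16), and the host ends of both veth pairs are attached to docker0 (shown as master docker0 in the host's ip link output), so the bridge forwards frames between them directly. A small Python sketch using the standard ipaddress module checks the subnet part; the addresses are copied from the output above, and same_subnet is a hypothetical helper name.

```python
import ipaddress


def same_subnet(iface_a, iface_b):
    """True if two interface addresses in CIDR form belong to the same network."""
    net_a = ipaddress.ip_interface(iface_a).network
    net_b = ipaddress.ip_interface(iface_b).network
    return net_a == net_b


# Addresses copied from the ip a output above.
docker0 = "172.17.0.1/16"  # host side, the bridge's gateway address
test1 = "172.17.0.2/16"
test2 = "172.17.0.3/16"

print(ipaddress.ip_interface(docker0).network)  # 172.17.0.0/16
print(same_subnet(test1, test2))                # True
print(same_subnet(test1, docker0))              # True
```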

(1) Enter test1

sudo docker exec -it bdff6fc0e901 /bin/sh

(2) Ping test2

/ # ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.073 ms

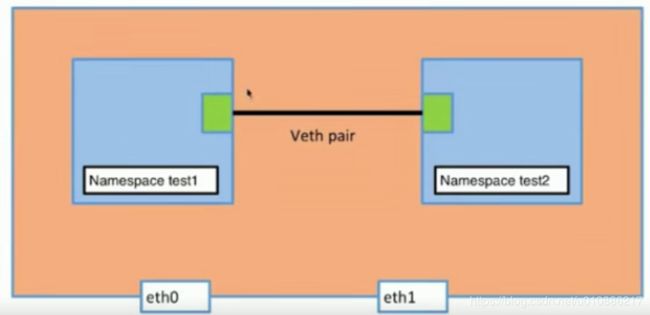

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.084 ms二、手动创建命名空间并且配置二者可以互通

1. Add a namespace named test1

(1) List the existing namespaces

sudo ip netns list

(2) Delete test1 (only needed if it already exists)

sudo ip netns delete test1

(3) Add test1

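sudo ip netns add test1

2. List the interfaces inside the namespace. A freshly created namespace contains only the loopback interface lo, and it is DOWN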

sudo ip netns exec test1 ip a

or

sudo ip netns exec test1 ip link

Result:

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3. Bring lo up

sudo ip netns exec test1 ip link set dev lo up

Check:

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip link set dev lo up

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

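link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

The loopback interface is now up (its state shows as UNKNOWN, which is normal for lo).

4. Create test2 and bring its lo up as well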

sudo ip netns add test2

sudo ip netns exec test2 ip link set dev lo up

Check:

[vagrant@docker-node1 ~]$ sudo ip netns exec test2 ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

5. Create a veth pair: two virtual interfaces connected to each other like the two ends of a cable

sudo ip link add veth-test1 type veth peer name veth-test2
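In ip link output each end of a veth pair is printed as INDEX: NAME@PEER:. While both ends are in the same namespace, PEER is the other end's name (veth-test2@veth-test1); once one end is moved into another namespace, ip can only show the peer's interface index, as ifN (veth-test1@if9). A small Python parser for these header lines, written as a sketch against the sample lines in this article; the regular expression is an assumption about the output format, not an official grammar, and parse_header is a hypothetical name.

```python
import re

# Header line of an interface in `ip link` / `ip a` output, e.g.
#   "9: veth-test2@veth-test1: mtu 1500 ..."  (peer in the same namespace)
#   "10: veth-test1@if9: mtu 1500 ..."        (peer in another namespace)
HEADER = re.compile(r"^(\d+):\s+([^@:\s]+)(?:@([^:\s]+))?:")


def parse_header(line):
    """Return (index, name, peer) for an interface header line, or None.

    peer is None for interfaces without '@', an int when the peer is shown
    as ifN (it lives in another namespace), or the peer's name otherwise.
    """
    match = HEADER.match(line.strip())
    if match is None:
        return None
    index, name, peer = int(match.group(1)), match.group(2), match.group(3)
    if peer is not None and re.fullmatch(r"if\d+", peer):
        peer = int(peer[2:])
    return index, name, peer


for line in [
    "9: veth-test2@veth-test1: mtu 1500 qdisc noop state DOWN",
    "10: veth-test1@if9: mtu 1500 qdisc noop state DOWN",
    "2: eth0: mtu 1500 qdisc pfifo_fast state UP",
    "link/ether 1e:7f:00:b7:50:9e brd ff:ff:ff:ff:ff:ff",
]:
    print(parse_header(line))
```

Lines that are not interface headers, such as the link/ether detail lines, return None, so the function can be fed a whole listing line by line.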

Check:

[vagrant@docker-node1 ~]$ ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:26:10:60 brd ff:ff:ff:ff:ff:ff

3: eth1: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:06:5f:b4 brd ff:ff:ff:ff:ff:ff

4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ca:23:dc:f4 brd ff:ff:ff:ff:ff:ff

6: vethec4757e@if5: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 72:73:38:a1:ee:15 brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: vethc892055@if7: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 5a:f9:bb:0d:6a:f3 brd ff:ff:ff:ff:ff:ff link-netnsid 1

9: veth-test2@veth-test1: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 2a:ef:08:2a:bb:9a brd ff:ff:ff:ff:ff:ff

10: veth-test1@veth-test2: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

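link/ether 1e:7f:00:b7:50:9e brd ff:ff:ff:ff:ff:ff

The pair appears on the host as interfaces 9 (veth-test2) and 10 (veth-test1), both DOWN.

6. Move veth-test1 into namespace test1; listing test1's links afterwards shows the new interface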

sudo ip netns exec test1 ip link

sudo ip link set veth-test1 netns test1

sudo ip netns exec test1 ip link

For example:

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

[vagrant@docker-node1 ~]$ sudo ip link set veth-test1 netns test1

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

10: veth-test1@if9: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 1e:7f:00:b7:50:9e brd ff:ff:ff:ff:ff:ff link-netnsid 0

On the host, ip link no longer lists interface 10:

[vagrant@docker-node1 ~]$ ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:26:10:60 brd ff:ff:ff:ff:ff:ff

3: eth1: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:06:5f:b4 brd ff:ff:ff:ff:ff:ff

4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ca:23:dc:f4 brd ff:ff:ff:ff:ff:ff

6: vethec4757e@if5: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 72:73:38:a1:ee:15 brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: vethc892055@if7: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 5a:f9:bb:0d:6a:f3 brd ff:ff:ff:ff:ff:ff link-netnsid 1

9: veth-test2@if10: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 2a:ef:08:2a:bb:9a brd ff:ff:ff:ff:ff:ff link-netnsid 2

7. Move veth-test2 into test2

sudo ip link set veth-test2 netns test2

Result:

[vagrant@docker-node1 ~]$ sudo ip link set veth-test2 netns test2

[vagrant@docker-node1 ~]$ ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:26:10:60 brd ff:ff:ff:ff:ff:ff

3: eth1: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:06:5f:b4 brd ff:ff:ff:ff:ff:ff

4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ca:23:dc:f4 brd ff:ff:ff:ff:ff:ff

6: vethec4757e@if5: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 72:73:38:a1:ee:15 brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: vethc892055@if7: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 5a:f9:bb:0d:6a:f3 brd ff:ff:ff:ff:ff:ff link-netnsid 1

The host no longer lists interface 9 either. Check the new interface inside test2:

sudo ip netns exec test2 ip link

8. Assign IP addresses to the two interfaces

(1) Before assigning

sudo ip netns exec test1 ip link

sudo ip netns exec test2 ip link

(2) Assign the addresses

sudo ip netns exec test1 ip addr add 192.168.1.1/24 dev veth-test1

sudo ip netns exec test2 ip addr add 192.168.1.2/24 dev veth-test2

(3) After assigning

sudo ip netns exec test1 ip link

sudo ip netns exec test2 ip link

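The output looks the same: ip link only shows link-layer details (the addresses show up with ip a), and both veth interfaces are still DOWN: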

[vagrant@docker-node1 ~]$ sudo ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

10: veth-test1@if9: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 1e:7f:00:b7:50:9e brd ff:ff:ff:ff:ff:ff link-netnsid 1

[vagrant@docker-node1 ~]$ sudo ip netns exec test2 ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

9: veth-test2@if10: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 2a:ef:08:2a:bb:9a brd ff:ff:ff:ff:ff:ff link-netnsid 0

(4) Bring the interfaces up

sudo ip netns exec test1 ip link set dev veth-test1 up

sudo ip netns exec test2 ip link set dev veth-test2 up

(5) Check again: both interfaces are now UP, and ip a shows the assigned addresses

sudo ip netns exec test1 ip link

sudo ip netns exec test2 ip link

and

sudo ip netns exec test1 ip a

sudo ip netns exec test2 ip a

(6) From each namespace, ping the other one; both pings succeed

sudo ip netns exec test1 ping 192.168.1.2

sudo ip netns exec test2 ping 192.168.1.1
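The whole procedure of this section can be collected in one place. The sketch below rebuilds the same command sequence in Python, with the namespace names and addresses as parameters; build_netns_commands is a hypothetical name, and the veth names follow this section's veth-NAME convention. It only prints the commands, because applying them needs root.

```python
import shlex


def build_netns_commands(ns1="test1", ns2="test2",
                         ip1="192.168.1.1/24", ip2="192.168.1.2/24"):
    """Return the commands of this section, in order, as argument lists."""
    veth1, veth2 = f"veth-{ns1}", f"veth-{ns2}"
    return [
        ["ip", "netns", "add", ns1],
        ["ip", "netns", "add", ns2],
        ["ip", "netns", "exec", ns1, "ip", "link", "set", "dev", "lo", "up"],
        ["ip", "netns", "exec", ns2, "ip", "link", "set", "dev", "lo", "up"],
        # One veth pair: one end per namespace.
        ["ip", "link", "add", veth1, "type", "veth", "peer", "name", veth2],
        ["ip", "link", "set", veth1, "netns", ns1],
        ["ip", "link", "set", veth2, "netns", ns2],
        ["ip", "netns", "exec", ns1, "ip", "addr", "add", ip1, "dev", veth1],
        ["ip", "netns", "exec", ns2, "ip", "addr", "add", ip2, "dev", veth2],
        ["ip", "netns", "exec", ns1, "ip", "link", "set", "dev", veth1, "up"],
        ["ip", "netns", "exec", ns2, "ip", "link", "set", "dev", veth2, "up"],
    ]


if __name__ == "__main__":
    # Print the commands; pipe them to `sudo sh`, or run each one as root
    # with subprocess.run(cmd, check=True), to actually apply them.
    for cmd in build_netns_commands():
        print(shlex.join(cmd))
```

To clean up afterwards, sudo ip netns delete test1 and sudo ip netns delete test2 remove both namespaces. Deleting a namespace destroys the veth end inside it, and destroying one end of a veth pair also removes its peer.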

III. Common docker network commands

1. List networks

[root@docker-node1 mysql-data]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1cc1a5a89d65 bridge bridge local

d183199b9d78 host host local

327dec5bc1f2 none null local

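d788797e4c2a wordpress_my-bridge bridge local

The networks named bridge, host and none are created by Docker by default; wordpress_my-bridge is a user-defined bridge network.

2. Check the number and types of networks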

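(1) Start test1 and test2 (remove any earlier containers with these names first, for example with docker rm -f test1 test2)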

sudo docker run -d --name test1 busybox /bin/sh -c "while true; do sleep 3600; done"

sudo docker run -d --name test2 busybox /bin/sh -c "while true; do sleep 3600; done"

(2) List the networks again (starting containers on the default bridge does not add networks)

[root@docker-node1 mysql-data]# docker network ls

NETWORK ID NAME DRIVER SCOPE

1cc1a5a89d65 bridge bridge local

d183199b9d78 host host local

327dec5bc1f2 none null local

d788797e4c2a wordpress_my-bridge bridge local

3. Inspect one network to see which containers are attached to it

[root@docker-node1 mysql-data]# docker network inspect 1cc1a5a89d65

[

{

"Name": "bridge",

"Id": "1cc1a5a89d65c33929eb8c7519284e088337dd65f4a5e81c637af1048316438f",

"Created": "2019-06-28T13:48:49.515376322Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"3344aaca149fd03d016a54c98dce4c0c59ceadf62ae1c6485efc7b6fea3d912b": {

"Name": "test1",

"EndpointID": "382952278eb261c4b690a90d4cd8427a0cd12c356dbc40685a61fe27e3f56f79",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"5a854e1dc83dfe4a571dd85843d58cfc2a8f598175b160398104b3b82ffd50bb": {

"Name": "test2",

"EndpointID": "456e648cd8b1518a78874b37cf965e4db0aab1a6b8ea7b9d456a803f101eb7fd",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

4. Look at the network configuration inside a container

[root@docker-node1 mysql-data]# docker exec test1 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

40: eth0@if41: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

5. Check whether the containers are attached to the docker0 bridge

(1) Install the tool (brctl comes with the bridge-utils package)

yum install bridge-utils

(2) Show the interfaces attached to each bridge, including docker0

[root@docker-node1 mysql-data]# brctl show

bridge name bridge id STP enabled interfaces

br-d788797e4c2a 8000.0242fc85b166 no

docker0 8000.024201b44e11 no veth8f3d540

(3) List the host's interfaces

[root@docker-node1 mysql-data]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:26:10:60 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 56750sec preferred_lft 56750sec

inet6 fe80::5054:ff:fe26:1060/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:06:5f:b4 brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe06:5fb4/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:01:b4:4e:11 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:1ff:feb4:4e11/64 scope link

valid_lft forever preferred_lft forever

39: br-d788797e4c2a: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:fc:85:b1:66 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-d788797e4c2a

valid_lft forever preferred_lft forever

41: veth8f3d540@if40: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 3a:5c:56:62:3b:2c brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::385c:56ff:fe62:3b2c/64 scope link

valid_lft forever preferred_lft forever

43: veth8d0548b@if42: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 5a:85:ec:85:f0:f6 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::5885:ecff:fe85:f0f6/64 scope link

valid_lft forever preferred_lft forever

(4) Confirm which containers are connected to docker0, the Linux bridge behind Docker's default bridge network

[root@docker-node1 mysql-data]# docker network inspect 1cc1a5a89d65

[

{

"Name": "bridge",

"Id": "1cc1a5a89d65c33929eb8c7519284e088337dd65f4a5e81c637af1048316438f",

"Created": "2019-06-28T13:48:49.515376322Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"3344aaca149fd03d016a54c98dce4c0c59ceadf62ae1c6485efc7b6fea3d912b": {

"Name": "test1",

"EndpointID": "382952278eb261c4b690a90d4cd8427a0cd12c356dbc40685a61fe27e3f56f79",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"5a854e1dc83dfe4a571dd85843d58cfc2a8f598175b160398104b3b82ffd50bb": {

"Name": "test2",

"EndpointID": "456e648cd8b1518a78874b37cf965e4db0aab1a6b8ea7b9d456a803f101eb7fd",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

6. Enter test1 and ping test2
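test2's address, 172.17.0.3, is the one listed for it under Containers in the docker network inspect output above. When scripting, it can be pulled out of that JSON instead of read off by eye. A minimal Python sketch; the function name is hypothetical, and the sample is a trimmed copy of the output above with the container IDs shortened.

```python
import json


def container_ips(inspect_output):
    """Map container name -> IPv4 address from `docker network inspect` JSON."""
    networks = json.loads(inspect_output)  # the command prints a JSON list
    result = {}
    for network in networks:
        for container in (network.get("Containers") or {}).values():
            ip = container.get("IPv4Address", "")
            result[container["Name"]] = ip.split("/")[0]  # drop the /16 prefix
    return result


# Trimmed copy of the bridge network's inspect output (IDs shortened).
sample = """
[{"Name": "bridge",
  "Containers": {
    "3344aaca149f": {"Name": "test1", "IPv4Address": "172.17.0.2/16"},
    "5a854e1dc83d": {"Name": "test2", "IPv4Address": "172.17.0.3/16"}}}]
"""

print(container_ips(sample))  # {'test1': '172.17.0.2', 'test2': '172.17.0.3'}
```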

(1) Enter test1

docker exec -it test1 /bin/sh

(2) Ping test2

/ # ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.090 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.071 ms

64 bytes from 172.17.0.3: seq=2 ttl=64 time=0.070 ms
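The veth pairs tie the listings of this section together. Inside test1, eth0@if41 is interface 40 and points at host interface 41; on the host, veth8f3d540@if40 is interface 41 and points back at 40, and brctl show lists veth8f3d540 under docker0. A Python sketch that pairs the two ends by these indexes follows (the function names are hypothetical). Interface indexes are only unique within one namespace, so requiring both ends to point at each other is a heuristic, not a guarantee.

```python
import re

# Interface header whose peer lives in another namespace: "N: name@ifM:"
HEADER = re.compile(r"^(\d+):\s+([^@:\s]+)@if(\d+):")


def veth_links(lines):
    """Map interface index -> (name, peer index) for 'N: name@ifM:' lines."""
    links = {}
    for line in lines:
        match = HEADER.match(line.strip())
        if match:
            index, name, peer = match.groups()
            links[int(index)] = (name, int(peer))
    return links


def host_end(container_lines, host_lines):
    """Return {container interface: host-side veth name} for matching pairs."""
    host = veth_links(host_lines)
    pairs = {}
    for index, (name, peer) in veth_links(container_lines).items():
        host_name, back = host.get(peer, (None, None))
        if back == index:  # both ends must point at each other
            pairs[name] = host_name
    return pairs


# Header lines copied from `docker exec test1 ip a` and the host's `ip a`.
test1_lines = ["40: eth0@if41: mtu 1500 qdisc noqueue"]
host_lines = [
    "41: veth8f3d540@if40: mtu 1500 qdisc noqueue master docker0",
    "43: veth8d0548b@if42: mtu 1500 qdisc noqueue master docker0",
]
print(host_end(test1_lines, host_lines))  # {'eth0': 'veth8f3d540'}
```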