I. Discovery types

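The previous article left a loose end:

When monitoring a StatefulSet service, I defined an Endpoints (EP) object in the service manifest and hard-coded the pod IPs in the configuration file. As soon as a pod restarts and its IP address changes, no data can be scraped any more, which is clearly unacceptable.

And if a Kubernetes cluster contains many Services and Pods, do we really have to create a matching ServiceMonitor object for each of them, one by one? That would be tedious as well.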

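To solve these problems, Prometheus Operator lets us supply additional scrape configuration, through which Kubernetes resources (pods, services, nodes, and so on) can be discovered and monitored.

Prometheus supports many service discovery mechanisms.

Among them, kubernetes_sd_configs does what we need. Kubernetes SD configurations allow retrieving scrape targets from the Kubernetes REST API and always stay synchronized with the cluster state. Any of the following role types can be configured to discover the objects we want. The descriptions below are taken from the official documentation.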
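As a rough orientation, the skeleton of a job that uses kubernetes_sd_configs might look like the sketch below. The job name and the namespace filter are illustrative assumptions, not part of the original setup. When Prometheus runs inside the cluster, api_server can be omitted and the pod's service account is used to reach the API.

```yaml
scrape_configs:
- job_name: 'kubernetes-discovery-example'   # hypothetical job name
  kubernetes_sd_configs:
  - role: pod            # one of: node, service, pod, endpoints, ingress
    namespaces:          # optional; omit to discover in all namespaces
      names:
      - monitoring       # assumption: restrict discovery to this namespace
```

With Prometheus Operator, only the list items under scrape_configs go into the additional configuration file, as the later sections show.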

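1. Node

The node role discovers one target per cluster node, with the address defaulting to the Kubelet's HTTP port. The target address defaults to the first existing address of the Kubernetes node object, in the address type order NodeInternalIP, NodeExternalIP, NodeLegacyHostIP, and NodeHostName.

Available meta labels: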

__meta_kubernetes_node_name: The name of the node object.

__meta_kubernetes_node_label_<labelname>: Each label from the node object.

__meta_kubernetes_node_labelpresent_<labelname>: true for each label from the node object.

__meta_kubernetes_node_annotation_<annotationname>: Each annotation from the node object.

__meta_kubernetes_node_annotationpresent_<annotationname>: true for each annotation from the node object.

__meta_kubernetes_node_address_<address_type>: The first address for each node address type, if it exists.

In addition, the instance label for the node is set to the node name as retrieved from the API server.
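A node-role job could be sketched as follows, modeled on the commonly used Kubernetes example configuration. It assumes Prometheus runs in-cluster and authenticates to the kubelet with its mounted service account token; the job name is arbitrary, and newer Prometheus releases prefer an authorization block over bearer_token_file.

```yaml
- job_name: 'kubernetes-nodes'             # hypothetical job name
  scheme: https                            # the kubelet serves metrics over TLS
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  # Copy every node label onto the scraped series, dropping the meta prefix.
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
```

Depending on how the kubelet certificates are issued, certificate verification may need adjusting in tls_config.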

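2. Service

The service role discovers a target for each service port of each service. This is generally useful for blackbox monitoring of a service. The address is set to the Kubernetes DNS name of the service and the respective service port.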

Available meta labels:

__meta_kubernetes_namespace: The namespace of the service object.

__meta_kubernetes_service_annotation_<annotationname>: Each annotation from the service object.

__meta_kubernetes_service_annotationpresent_<annotationname>: "true" for each annotation of the service object.

__meta_kubernetes_service_cluster_ip: The cluster IP address of the service. (Does not apply to services of type ExternalName)

__meta_kubernetes_service_external_name: The DNS name of the service. (Applies to services of type ExternalName)

__meta_kubernetes_service_label_<labelname>: Each label from the service object.

__meta_kubernetes_service_labelpresent_<labelname>: true for each label of the service object.

__meta_kubernetes_service_name: The name of the service object.

__meta_kubernetes_service_port_name: Name of the service port for the target.

__meta_kubernetes_service_port_protocol: Protocol of the service port for the target.
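For the blackbox use case mentioned above, a sketch along the lines of the widely used example configuration might look like this. It assumes a blackbox exporter is reachable at blackbox-exporter.example.com:9115 and that services opt in through a prometheus.io/probe annotation; both are assumptions, not part of the original setup.

```yaml
- job_name: 'kubernetes-services'          # hypothetical job name
  metrics_path: /probe
  params:
    module: [http_2xx]                     # blackbox exporter module to use
  kubernetes_sd_configs:
  - role: service
  relabel_configs:
  # Keep only services annotated with prometheus.io/probe: "true".
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
    action: keep
    regex: "true"
  # Pass the discovered service address to the exporter as ?target=...
  - source_labels: [__address__]
    target_label: __param_target
  # Scrape the blackbox exporter instead of the service itself.
  - target_label: __address__
    replacement: blackbox-exporter.example.com:9115   # assumed exporter address
  - source_labels: [__param_target]
    target_label: instance
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_service_name]
    target_label: service
```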

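3. Pod

The pod role discovers all pods and exposes their containers as targets. For each declared port of a container, a single target is generated. If a container has no specified ports, a port-free target is created per container, so that a port can be added manually via relabeling.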

Available meta labels:

__meta_kubernetes_namespace: The namespace of the pod object.

__meta_kubernetes_pod_name: The name of the pod object.

__meta_kubernetes_pod_ip: The pod IP of the pod object.

__meta_kubernetes_pod_label_<labelname>: Each label from the pod object.

__meta_kubernetes_pod_labelpresent_<labelname>: true for each label from the pod object.

__meta_kubernetes_pod_annotation_<annotationname>: Each annotation from the pod object.

__meta_kubernetes_pod_annotationpresent_<annotationname>: true for each annotation from the pod object.

__meta_kubernetes_pod_container_init: true if the container is an InitContainer

__meta_kubernetes_pod_container_name: Name of the container the target address points to.

__meta_kubernetes_pod_container_port_name: Name of the container port.

__meta_kubernetes_pod_container_port_number: Number of the container port.

__meta_kubernetes_pod_container_port_protocol: Protocol of the container port.

__meta_kubernetes_pod_ready: Set to true or false for the pod's ready state.

__meta_kubernetes_pod_phase: Set to Pending, Running, Succeeded, Failed or Unknown in the lifecycle.

__meta_kubernetes_pod_node_name: The name of the node the pod is scheduled onto.

__meta_kubernetes_pod_host_ip: The current host IP of the pod object.

__meta_kubernetes_pod_uid: The UID of the pod object.

__meta_kubernetes_pod_controller_kind: Object kind of the pod controller.

__meta_kubernetes_pod_controller_name: Name of the pod controller.
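The relabeling of a port onto a target can be sketched with the annotation-driven pattern from the commonly used example configuration. The prometheus.io/scrape, prometheus.io/path and prometheus.io/port annotations are a convention rather than something Prometheus interprets itself, and they would have to be set on the pod (in a workload, on the pod template) for these rules to see them.

```yaml
- job_name: 'kubernetes-pods'              # hypothetical job name
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  # Keep only pods annotated with prometheus.io/scrape: "true".
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: "true"
  # Optional override of the metrics path via prometheus.io/path.
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  # Replace (or add) the port using prometheus.io/port.
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: '([^:]+)(?::\d+)?;(\d+)'
    replacement: '$1:$2'
    target_label: __address__
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
```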

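4. endpoints

The endpoints role discovers targets from the listed endpoints of a service. For each endpoint address, one target is discovered per port. If the endpoint is backed by a pod, all additional container ports of the pod that are not bound to an endpoint port are discovered as targets as well.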

Available meta labels:

__meta_kubernetes_namespace: The namespace of the endpoints object.

__meta_kubernetes_endpoints_name: The names of the endpoints object.

For all targets discovered directly from the endpoints list (those not additionally inferred from underlying pods), the following labels are attached:

__meta_kubernetes_endpoint_hostname: Hostname of the endpoint.

__meta_kubernetes_endpoint_node_name: Name of the node hosting the endpoint.

__meta_kubernetes_endpoint_ready: Set to true or false for the endpoint's ready state.

__meta_kubernetes_endpoint_port_name: Name of the endpoint port.

__meta_kubernetes_endpoint_port_protocol: Protocol of the endpoint port.

__meta_kubernetes_endpoint_address_target_kind: Kind of the endpoint address target.

__meta_kubernetes_endpoint_address_target_name: Name of the endpoint address target.

If the endpoints belong to a service, all labels of the role: service discovery are attached.

For all targets backed by a pod, all labels of the role: pod discovery are attached.
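Since endpoints targets carry the service and pod labels as well, a single job can filter on service annotations and still label series by pod. A minimal sketch, assuming services opt in with a prometheus.io/scrape annotation (a convention, not part of the original setup):

```yaml
- job_name: 'kubernetes-service-endpoints' # hypothetical job name
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  # Keep only endpoints whose service is annotated prometheus.io/scrape: "true".
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: "true"
  # Service labels are attached because the endpoints belong to a service.
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_service_name]
    target_label: service
  # Pod labels are attached for targets backed by a pod.
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
```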

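5. ingress

The ingress role discovers a target for each path of each ingress. This is generally useful for blackbox monitoring of an ingress. The address is set to the host specified in the ingress spec.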

Available meta labels:

__meta_kubernetes_namespace: The namespace of the ingress object.

__meta_kubernetes_ingress_name: The name of the ingress object.

__meta_kubernetes_ingress_label_<labelname>: Each label from the ingress object.

__meta_kubernetes_ingress_labelpresent_<labelname>: true for each label from the ingress object.

__meta_kubernetes_ingress_annotation_<annotationname>: Each annotation from the ingress object.

__meta_kubernetes_ingress_annotationpresent_<annotationname>: true for each annotation from the ingress object.

__meta_kubernetes_ingress_scheme: Protocol scheme of ingress, https if TLS config is set. Defaults to http.

__meta_kubernetes_ingress_path: Path from ingress spec. Defaults to /.
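The scheme, host and path labels can be combined into a probe URL for a blackbox exporter. The sketch below follows the commonly used example configuration and assumes an exporter at blackbox-exporter.example.com:9115, which is not part of the original setup.

```yaml
- job_name: 'kubernetes-ingresses'         # hypothetical job name
  metrics_path: /probe
  params:
    module: [http_2xx]
  kubernetes_sd_configs:
  - role: ingress
  relabel_configs:
  # Build scheme://host/path and hand it to the exporter as ?target=...
  - source_labels: [__meta_kubernetes_ingress_scheme, __address__, __meta_kubernetes_ingress_path]
    regex: (.+);(.+);(.+)
    replacement: '${1}://${2}${3}'
    target_label: __param_target
  # Scrape the blackbox exporter rather than the ingress host directly.
  - target_label: __address__
    replacement: blackbox-exporter.example.com:9115   # assumed exporter address
  - source_labels: [__param_target]
    target_label: instance
  - source_labels: [__meta_kubernetes_ingress_name]
    target_label: ingress
```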

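II. Configuring automatic pod discovery

Suppose the business runs a microservice deployed as a StatefulSet with 2 pod replicas, and each pod exposes its metrics at http://pod_ip:7000/metrics. Because the pod IP changes on every restart, the data can only be collected through automatic discovery.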

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        run: jx3recipe
      name: jx3recipe
      annotations:
        prometheus.io/scrape: "true"
    spec:
      selector:
        matchLabels:
          app: jx3recipe
      serviceName: jx3recipe-service
      replicas: 2
      template:
        metadata:
          labels:
            app: jx3recipe
            appCluster: jx3recipe-cluster
        spec:
          terminationGracePeriodSeconds: 20
          containers:
          - image: hub.kce.ooo.com/jx3pvp/jx3recipe:qa-latest
            imagePullPolicy: Always
            securityContext:
              runAsUser: 1000
            name: jx3recipe
            lifecycle:
              preStop:
                exec:
                  command: ["kill","-s","SIGINT","1"]
            volumeMounts:
            - name: config-volume
              mountPath: /data/conf.yml
              subPath: conf.yml
            resources:
              requests:
                cpu: "100m"
                memory: "500Mi"
            env:
            - name: JX3PVP_ENV
              value: "qa"
            - name: JX3PVP_RUN_MODE
              value: "k8s"
            - name: JX3PVP_SERVICE_ID
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: JX3PVP_LOCAL_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: JX3PVP_CONSUL_IP
              value: $(CONSUL_AGENT_SERVICE_HOST)
            ports:
            - name: biz
              containerPort: 8000
              protocol: "TCP"
            - name: admin
              containerPort: 7000
              protocol: "TCP"
          volumes:
          - name: config-volume
            configMap:
              name: app-configure-file-jx3recipe
              items:
              - key: jx3recipe.yml
                path: conf.yml

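1. Create the discovery rules

Define the pod discovery rules in a file named prometheus-additional.yaml:

- the pod's container name is copied into a target label named jx3recipe

- only pods whose appCluster label matches jx3recipe-cluster are kept

- only targets whose address ends in port 7000 are kept, since the metrics are served at http://<pod_ip>:7000/metrics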

    - job_name: 'kubernetes-service-pod'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_container_name]
        action: replace
        target_label: jx3recipe
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: ["__meta_kubernetes_pod_label_appCluster"]
        regex: "jx3recipe-cluster"
        action: keep
      - source_labels: [__address__]
        action: keep
        regex: '(.*):7000'
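Because the container declares two ports (biz on 8000 and admin on 7000), the pod role produces two targets per pod, and the address regex is what drops the one on port 8000. A variant sketch that selects by port name instead and limits discovery to one namespace is shown below. The namespace default is an assumption, since the StatefulSet manifest does not state one, and the namespace and pod target labels are illustrative additions.

```yaml
- job_name: 'kubernetes-service-pod'
  kubernetes_sd_configs:
  - role: pod
    namespaces:
      names:
      - default                  # assumption: namespace where the StatefulSet runs
  relabel_configs:
  # Keep only pods carrying the label appCluster=jx3recipe-cluster.
  - source_labels: [__meta_kubernetes_pod_label_appCluster]
    regex: jx3recipe-cluster
    action: keep
  # Keep only the target generated for the container port named "admin" (7000).
  - source_labels: [__meta_kubernetes_pod_container_port_name]
    regex: admin
    action: keep
  # Record where each series came from.
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
```

Matching on the port name keeps working if the port number changes, at the cost of depending on the name staying stable.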

2. Create the corresponding Secret object

kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

Once created, the configuration above is base64-encoded and stored as the value of the key prometheus-additional.yaml:

    apiVersion: v1
    data:
      prometheus-additional.yaml: LSBqb2JfbmFtZTogJ2t1YmVybmV0ZXMtc2VydmljZS1wb2QnCiAga3ViZXJuZXRlc19zZF9jb25maWdzOgogIC0gcm9sZTogcG9kCiAgcmVsYWJlbF9jb25maWdzOgogIC0gc291cmNlX2xhYmVsczogW19fbWV0YV9rdWJlcm5ldGVzX3BvZF9jb250YWluZXJfbmFtZV0KICAgIGFjdGlvbjogcmVwbGFjZQogICAgdGFyZ2V0X2xhYmVsOiBqeDNyZWNpcGUKICAtIGFjdGlvbjogbGFiZWxtYXAKICAgIHJlZ2V4OiBfX21ldGFfa3ViZXJuZXRlc19wb2RfbGFiZWxfKC4rKQogIC0gc291cmNlX2xhYmVsczogIFsiX19tZXRhX2t1YmVybmV0ZXNfcG9kX2xhYmVsX2FwcENsdXN0ZXIiXQogICAgcmVnZXg6ICJqeDNyZWNpcGUtY2x1c3RlciIKICAgIGFjdGlvbjoga2VlcAogIC0gc291cmNlX2xhYmVsczogW19fYWRkcmVzc19fXQogICAgYWN0aW9uOiBrZWVwCiAgICByZWdleDogJyguKik6NzAwMCcK
    kind: Secret
    metadata:
      creationTimestamp: "2019-09-10T09:32:22Z"
      name: additional-configs
      namespace: monitoring
      resourceVersion: "1004681"
      selfLink: /api/v1/namespaces/monitoring/secrets/additional-configs
      uid: e455d657-d3ad-11e9-95b4-fa163e3c10ff
    type: Opaque
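If the Secret is to be kept under version control rather than created imperatively, a declarative equivalent could be sketched with stringData, which lets the API server do the base64 encoding. This is an alternative to the kubectl create secret command, not what the original setup used.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: additional-configs
  namespace: monitoring
type: Opaque
stringData:
  # Plain-text value; stored base64-encoded under data on the server side.
  prometheus-additional.yaml: |
    - job_name: 'kubernetes-service-pod'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_container_name]
        action: replace
        target_label: jx3recipe
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: ["__meta_kubernetes_pod_label_appCluster"]
        regex: "jx3recipe-cluster"
        action: keep
      - source_labels: [__address__]
        action: keep
        regex: '(.*):7000'
```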

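Then we only need to reference this additional configuration in the file that declares the Prometheus resource object (prometheus-prometheus.yaml):

3. Add the configuration to the Prometheus resource object

Edit prometheus-prometheus.yaml: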

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      baseImage: quay.io/prometheus/prometheus
      nodeSelector:
        beta.kubernetes.io/os: linux
      replicas: 2
      secrets:
      - etcd-certs
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      additionalScrapeConfigs:
        name: additional-configs
        key: prometheus-additional.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.5.0

The following section, placed under spec, is what was added:

    additionalScrapeConfigs:
      name: additional-configs
      key: prometheus-additional.yaml

4. Apply the configuration

kubectl apply -f prometheus-prometheus.yaml

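After a while, refresh the configuration page in Prometheus and the new configuration appears, as marked by the red box in the screenshot.

5. Add permissions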

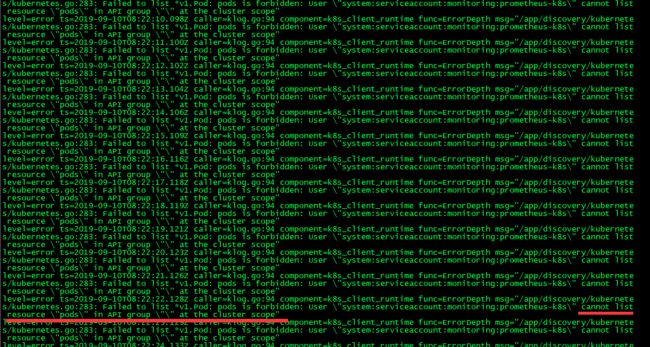

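The configuration page of the Prometheus dashboard now shows the new settings, but the targets page does not list the corresponding job. Check the logs of the Prometheus pods:

The logs contain many errors of the form xxx is forbidden, which points to an RBAC permission problem. The Prometheus resource object shows that Prometheus runs under a ServiceAccount named prometheus-k8s, and that ServiceAccount is bound to a ClusterRole also named prometheus-k8s (prometheus-clusterRole.yaml).

Change it to: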

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus-k8s
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - services
      - endpoints
      - pods
      - nodes/proxy
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      - nodes/metrics
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
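The ClusterRole only takes effect for Prometheus through a binding to its ServiceAccount. For reference, such a binding would look roughly like the sketch below. It assumes the names prometheus-k8s and the monitoring namespace used throughout this article; an existing installation normally ships this object already, so it should not need changes.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus-k8s
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus-k8s          # the ClusterRole edited above
subjects:
- kind: ServiceAccount
  name: prometheus-k8s          # ServiceAccount referenced by the Prometheus object
  namespace: monitoring
```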

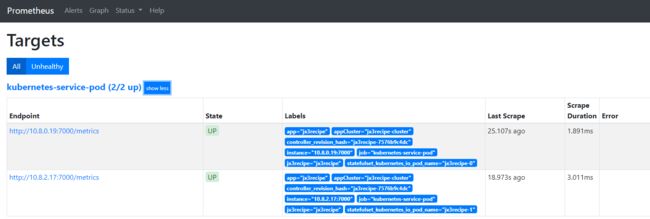

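Update the ClusterRole above, then recreate all Prometheus pods. The kubernetes-service-pod job should now appear on the targets page.

That completes the configuration for automatically discovering pods. Other resources (service, endpoints, ingress, node) can be monitored through automatic discovery in the same way.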
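One caveat when extending this to the ingress role: the ClusterRole shown earlier covers nodes, services, endpoints and pods, but not ingresses. A rule along these lines would probably have to be appended to its rules list. Which API group applies depends on the cluster and Prometheus versions, so listing both is an assumption made here to stay on the safe side.

```yaml
# Additional entry for the rules list of the prometheus-k8s ClusterRole.
- apiGroups:
  - extensions            # older clusters and Prometheus versions
  - networking.k8s.io     # newer clusters
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
```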