【kubernetes/k8s concepts】rook

https://github.com/rook/rook

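An incubating project of the Cloud Native Computing Foundation (CNCF), Rook is an open-source cloud-native storage orchestrator for Kubernetes. It provides the platform, framework, and support for a diverse set of storage solutions to integrate natively with cloud-native environments.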

Ceph is a highly scalable distributed storage solution for block storage, object storage, and shared file systems with years of production deployments.

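Rook turns storage software into a self-managing, self-scaling, self-healing distributed storage service. Rook supports Ceph, and running Ceph on Kubernetes through Rook greatly simplifies building and using a Ceph storage cluster.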

Design

Reference: https://github.com/rook/rook/blob/master/Documentation/ceph-storage.md

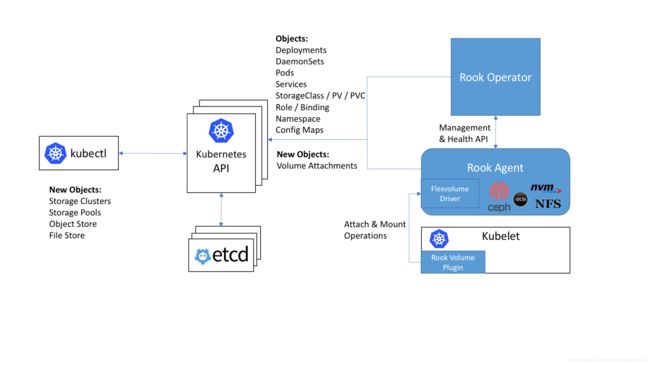

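Operator: starts and monitors the storage cluster, including the mon, osd, and mgr daemons, and supports block storage, file system storage, and object storage (S3/Swift). It also starts the Ceph agent and discover services. The agent contains the Flexvolume plugin, which performs three operations: attaching network storage devices, mounting volumes, and formatting the filesystem.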

Rook architecture

Block storage

A Ceph block device is also called an RBD, or RADOS Block Device.

To provision Ceph block devices with Rook, first create a CephBlockPool and then a StorageClass. CephBlockPool is a Rook CRD that creates a block pool; the StorageClass enables dynamic provisioning of volumes from that pool.
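A minimal sketch of the two objects, modeled on the storageclass.yaml example that shipped with Rook 1.0 for the Flexvolume driver. The pool name `replicapool`, the StorageClass name `rook-ceph-block`, the replica count, and the PVC at the end are illustrative assumptions; parameter names changed between Rook releases, so compare with the example files of the version you deploy.

```yaml
# CephBlockPool (Rook CRD): a replicated RADOS pool that will hold RBD images.
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool            # assumed pool name
  namespace: rook-ceph         # namespace of the Rook cluster
spec:
  failureDomain: host          # place each replica on a different host
  replicated:
    size: 3                    # 3 copies; needs OSDs on at least 3 hosts
---
# StorageClass: lets PVCs dynamically provision RBD images from the pool above.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block        # assumed StorageClass name
provisioner: ceph.rook.io/block  # Flexvolume-era Rook provisioner (assumption for Rook 1.0)
parameters:
  blockPool: replicapool
  clusterNamespace: rook-ceph
  fstype: xfs
reclaimPolicy: Retain
---
# Hypothetical PVC requesting a 1 GiB RBD volume from the class above.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-demo
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

ReadWriteOnce is used because an RBD image is normally mounted by one node at a time; shared read/write access is what the file system below is for.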

File System

A shared file system can be mounted with read/write permission from multiple pods. This may be useful for applications which can be clustered using a shared filesystem.

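The operator environment variable ROOK_ALLOW_MULTIPLE_FILESYSTEMS controls whether more than one shared file system may be created in the cluster.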
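A sketch of a CephFilesystem resource, following the shape of Rook 1.0's filesystem.yaml example; the name `myfs` and the pool sizes are assumptions. As far as we understand, while the operator's multiple-filesystems flag (ROOK_ALLOW_MULTIPLE_FILESYSTEMS) is left at its default of false, only one such resource should be created per cluster.

```yaml
apiVersion: ceph.rook.io/v1
kind: CephFilesystem
metadata:
  name: myfs                   # assumed file system name
  namespace: rook-ceph
spec:
  metadataPool:                # pool holding CephFS metadata
    replicated:
      size: 3
  dataPools:                   # one or more pools holding file data
    - replicated:
        size: 3
  metadataServer:
    activeCount: 1             # number of active MDS daemons
    activeStandby: true        # extra MDS keeps a warm metadata cache for faster failover
```

Pods then mount the file system with ReadWriteMany semantics, which is what lets several clustered replicas share the same data.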

Object storage

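Ceph RGW is built on librados and provides applications with a RESTful object storage interface. It supports two API types: S3 (compatible with the Amazon S3 RESTful API) and Swift (compatible with the OpenStack Swift API).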
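A sketch of a CephObjectStore resource, which makes Rook deploy RGW pods and their pools. The field layout follows Rook 1.0's object.yaml example as we recall it; the store name `my-store`, the pool sizes, and the port are assumptions to check against your Rook version.

```yaml
apiVersion: ceph.rook.io/v1
kind: CephObjectStore
metadata:
  name: my-store               # assumed store name
  namespace: rook-ceph
spec:
  metadataPool:                # pools for bucket indexes and other RGW metadata
    failureDomain: host
    replicated:
      size: 3
  dataPool:                    # pool for object data
    failureDomain: host
    replicated:
      size: 3
  gateway:
    port: 80                   # HTTP port the RGW pods listen on (S3 and Swift APIs)
    instances: 1               # number of RGW pods
```

Clients reach the gateway through the Service Rook creates for the store, using credentials created separately (for example with a CephObjectStoreUser resource).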

Component: Mon

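The Mon monitors, manages, and coordinates the work of the other roles in the distributed system (OSDs/PGs, clients, MDS) and ensures data consistency across the entire distributed environment.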

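The MONs use a leader/follower model: even when the cluster runs several MONs, only one of them acts as the leader at any time, while the others act as followers (peons in Ceph terminology) that take part in voting.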

When Ceph loses the leader MON, the remaining MONs vote, based on the Paxos algorithm, to elect a new leader.
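Because the MONs decide by majority vote, a cluster with n MONs keeps quorum only while at least ⌊n/2⌋+1 of them are up. An odd count is therefore usual: 3 MONs tolerate one failure and 5 tolerate two, while 4 still tolerate only one. In Rook the MON count lives in the CephCluster spec; the fragment below shows only that section, with assumed values, and is not a complete cluster definition.

```yaml
# Fragment of a CephCluster (cluster.yaml); other required fields omitted.
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  mon:
    count: 3                     # quorum needs 2 of 3, so one MON may fail
    allowMultiplePerNode: false  # spread MONs so one node failure loses one MON
```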

Component: OSD

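The component that actually writes data to storage is the OSD (Object Storage Device); modules such as RADOS and placement groups (PGs) work on top of the OSDs.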

1. Rook quick deployment:

Path: rook/cluster/examples/kubernetes/ceph

1. kubectl apply -f common.yaml

2. kubectl apply -f operator.yaml

3. kubectl apply -f cluster.yaml

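- dataDirHostPath: a directory on the host where Ceph's configuration files are kept. When a cluster is recreated, this directory must be empty, otherwise the mons will fail to start.

- useAllDevices: use every device on the nodes. Setting it to false is recommended, otherwise Rook will claim every available disk on the hosts.

- useAllNodes: use every node. Setting it to false is recommended, since you will rarely want every node of the k8s cluster to run Ceph.

- databaseSizeMB and journalSizeMB: if the disks are larger than 100 GB, simply comment out these two settings.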
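A sketch of the matching CephCluster settings with the recommendations above applied, so that OSDs are created only on explicitly listed nodes and devices. The node names `node-a`/`node-b`, the device name `sdb`, and the Ceph image tag are assumptions: node names must match each node's `kubernetes.io/hostname` label, and the image must be one your Rook release supports.

```yaml
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v14.2.1-20190430  # assumed Nautilus image; match your Rook release
  dataDirHostPath: /var/lib/rook       # must be empty when the cluster is recreated
  mon:
    count: 3
  storage:
    useAllNodes: false                 # only the nodes listed below run OSDs
    useAllDevices: false               # only the listed devices are consumed
    # databaseSizeMB / journalSizeMB left out, as suggested for disks > 100 GB
    nodes:
      - name: "node-a"                 # hypothetical node
        devices:
          - name: "sdb"                # hypothetical raw disk without partitions
      - name: "node-b"                 # hypothetical node
        devices:
          - name: "sdb"
```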

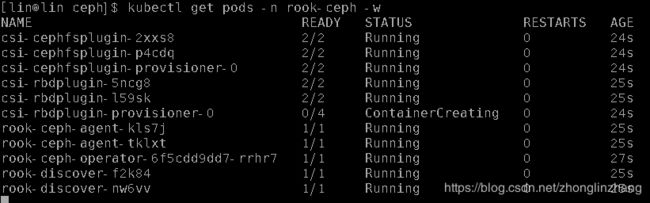

2. Rook CSI deployment

2.1 Requirements

- Kubernetes v1.13+ is needed in order to support CSI Spec 1.0.

- The --allow-privileged flag must be set to true in the kubelet and the API server.

Required images:

quay.io/k8scsi/csi-provisioner:v1.0.1

quay.io/cephcsi/rbdplugin:v1.0.0

quay.io/k8scsi/csi-attacher:v1.0.1

quay.io/k8scsi/csi-snapshotter:v1.0.1

quay.io/k8scsi/csi-node-driver-registrar:v1.0.2

quay.io/cephcsi/cephfsplugin:v1.0.0

2.2 Create RBAC for the CSI drivers

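At first glance the ClusterRole that gets bound has no rules (rules: []), which makes all this YAML look pointless. It is not: that role carries an aggregationRule, so the Kubernetes RBAC controller automatically fills in its rules from every ClusterRole labeled rbac.ceph.rook.io/aggregate-to-rbd-csi-nodeplugin: "true", which here is rbd-csi-nodeplugin-rules.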

kubectl apply -f cluster/examples/kubernetes/ceph/csi/rbac/rbd/csi-nodeplugin-rbac.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rook-csi-rbd-plugin-sa
  namespace: rook-ceph
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
aggregationRule:
  clusterRoleSelectors:
  - matchLabels:
      rbac.ceph.rook.io/aggregate-to-rbd-csi-nodeplugin: "true"
rules: [] # filled in by the RBAC controller from ClusterRoles matching the selector above
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin-rules
  labels:
    rbac.ceph.rook.io/aggregate-to-rbd-csi-nodeplugin: "true"
rules:
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get", "list", "update"]
- apiGroups: [""]
  resources: ["namespaces"]
  verbs: ["get", "list"]
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["volumeattachments"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
subjects:
- kind: ServiceAccount
  name: rook-csi-rbd-plugin-sa
  namespace: rook-ceph
roleRef:
  kind: ClusterRole
  name: rbd-csi-nodeplugin
  apiGroup: rbac.authorization.k8s.io
```

kubectl apply -f cluster/examples/kubernetes/ceph/csi/rbac/rbd/csi-provisioner-rbac.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rook-csi-rbd-provisioner-sa
  namespace: rook-ceph
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-external-provisioner-runner
aggregationRule:
  clusterRoleSelectors:
  - matchLabels:
      rbac.ceph.rook.io/aggregate-to-rbd-external-provisioner-runner: "true"
rules: [] # filled in by the RBAC controller from ClusterRoles matching the selector above
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-external-provisioner-runner-rules
  labels:
    rbac.ceph.rook.io/aggregate-to-rbd-external-provisioner-runner: "true"
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get", "list"]
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete", "update"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["volumeattachments"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list", "watch", "create", "update", "patch"]
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "create", "update"]
- apiGroups: ["snapshot.storage.k8s.io"]
  resources: ["volumesnapshots"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "list", "create", "delete"]
- apiGroups: ["snapshot.storage.k8s.io"]
  resources: ["volumesnapshotcontents"]
  verbs: ["create", "get", "list", "watch", "update", "delete"]
- apiGroups: ["snapshot.storage.k8s.io"]
  resources: ["volumesnapshotclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["apiextensions.k8s.io"]
  resources: ["customresourcedefinitions"]
  verbs: ["create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role
subjects:
- kind: ServiceAccount
  name: rook-csi-rbd-provisioner-sa
  namespace: rook-ceph
roleRef:
  kind: ClusterRole
  name: rbd-external-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
```

Create the CephFS RBAC the same way; it is not shown here.

kubectl apply -f cluster/examples/kubernetes/ceph/csi/rbac/cephfs

2.3 Create operator-with-csi.yaml

kubectl apply -f operator-with-csi.yaml

```yaml
#################################################################################################################
# The deployment for the rook operator that enables the ceph-csi driver for beta testing.
# For example, to create the rook-ceph cluster:
#   kubectl create -f common.yaml
#   kubectl create -f operator-with-csi.yaml
#   kubectl create -f cluster.yaml
#################################################################################################################
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rook-ceph-operator
  namespace: rook-ceph
  labels:
    operator: rook
    storage-backend: ceph
spec:
  selector:
    matchLabels:
      app: rook-ceph-operator
  replicas: 1
  template:
    metadata:
      labels:
        app: rook-ceph-operator
    spec:
      serviceAccountName: rook-ceph-system
      containers:
      - name: rook-ceph-operator
        image: rook/ceph:master
        args: ["ceph", "operator"]
        volumeMounts:
        - mountPath: /var/lib/rook
          name: rook-config
        - mountPath: /etc/ceph
          name: default-config-dir
        env:
        - name: ROOK_CURRENT_NAMESPACE_ONLY
          value: "true"
        # CSI enablement
        - name: ROOK_CSI_ENABLE_CEPHFS
          value: "true"
        - name: ROOK_CSI_CEPHFS_IMAGE
          value: "quay.io/cephcsi/cephfsplugin:v1.0.0"
        - name: ROOK_CSI_ENABLE_RBD
          value: "true"
        - name: ROOK_CSI_RBD_IMAGE
          value: "quay.io/cephcsi/rbdplugin:v1.0.0"
        - name: ROOK_CSI_REGISTRAR_IMAGE
          value: "quay.io/k8scsi/csi-node-driver-registrar:v1.0.2"
        - name: ROOK_CSI_PROVISIONER_IMAGE
          value: "quay.io/k8scsi/csi-provisioner:v1.0.1"
        - name: ROOK_CSI_SNAPSHOTTER_IMAGE
          value: "quay.io/k8scsi/csi-snapshotter:v1.0.1"
        - name: ROOK_CSI_ATTACHER_IMAGE
          value: "quay.io/k8scsi/csi-attacher:v1.0.1"
        # The name of the node to pass with the downward API
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # The pod name to pass with the downward API
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # The pod namespace to pass with the downward API
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: rook-config
        emptyDir: {}
      - name: default-config-dir
        emptyDir: {}
```
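Once the operator has started the CSI driver pods, RBD volumes are requested through a StorageClass that names the CSI driver rather than the Flexvolume provisioner. The sketch below assumes the ceph-csi v1.0 RBD driver name `rbd.csi.ceph.com`, a CephBlockPool named `replicapool`, and a Secret named `rook-csi-rbd-secret` in `rook-ceph` holding Ceph credentials. All three names are assumptions, so compare with the RBD StorageClass example shipped under csi/ in your Rook checkout before use.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd                      # hypothetical class name
provisioner: rbd.csi.ceph.com        # assumed ceph-csi v1.0 RBD driver name
parameters:
  clusterID: rook-ceph               # assumed: namespace of the Rook cluster
  pool: replicapool                  # assumed CephBlockPool name
  imageFormat: "2"
  imageFeatures: layering
  # Secret with Ceph credentials (hypothetical name), used to create and to mount images
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-publish-secret-name: rook-csi-rbd-secret
  csi.storage.k8s.io/node-publish-secret-namespace: rook-ceph
reclaimPolicy: Delete
```

A PVC then selects this class through storageClassName exactly as with the Flexvolume class; only the provisioner behind it changes.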

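At the core of Ceph is RADOS (Reliable Autonomic Distributed Object Store), a highly available, self-managing distributed object store. It runs the OSDs automatically to keep the whole storage system available, and it exposes its storage services through a shared library called librados, on which both block storage and object storage depend.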

References

- https://github.com/rook/rook/blob/master/Documentation/ceph-block.md
- https://github.com/rook/rook/blob/master/Documentation/ceph-filesystem.md
- Rook Ceph Object Storage
- https://github.com/rook/rook/blob/master/Documentation/ceph-storage.md
- https://github.com/rook/rook
- https://rook.io/
- https://edgefs.io/