SparkCore: RDD Persistence Strategies, the persist and cache Operators


Official documentation: RDD Persistence

http://spark.apache.org/docs/latest/rdd-programming-guide.html#rdd-persistence

1、Introduction to RDD Persistence

One of the most important capabilities in Spark is persisting (or caching) a dataset in memory across operations. When you persist an RDD, each node stores any partitions of it that it computes in memory and reuses them in other actions on that dataset (or datasets derived from it). This allows future actions to be much faster (often by more than 10x). Caching is a key tool for iterative algorithms and fast interactive use.


You can mark an RDD to be persisted using the persist() or cache() methods on it. The first time it is computed in an action, it will be kept in memory on the nodes. Spark’s cache is fault-tolerant – if any partition of an RDD is lost, it will automatically be recomputed using the transformations that originally created it.

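The following minimal sketch illustrates the reuse described above. It assumes spark-core 2.x or 3.x on the classpath and a local master, and the object and method names are hypothetical. A LongAccumulator counts how often the map function runs. This is fine for a demonstration, but accumulator updates inside transformations are not reliable bookkeeping in general, because Spark may re-run tasks. Without cache(), the second action recomputes every partition. With cache(), the second action should read the partitions back from memory.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical demo: count how often the map function runs across two actions.
object CacheReuseDemo {
  // Runs count() twice on the same mapped RDD and returns the total number of map calls.
  def mapCalls(sc: SparkContext, useCache: Boolean): Long = {
    val calls = sc.longAccumulator("mapCalls")
    val squares = sc.parallelize(1 to 100, 4).map { x =>
      calls.add(1) // side effect used only to observe recomputation
      x * x
    }
    if (useCache) squares.cache()
    squares.count() // first action: computes the partitions (and stores them if cached)
    squares.count() // second action: recomputes unless the partitions are cached
    if (useCache) squares.unpersist()
    calls.sum
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(new SparkConf().setAppName("CacheReuseDemo").setMaster("local[2]"))
    try {
      println(s"without cache: ${mapCalls(sc, useCache = false)} map calls")
      println(s"with cache:    ${mapCalls(sc, useCache = true)} map calls")
    } finally sc.stop()
  }
}
```

With this little data, all four partitions easily fit in memory. The cached run should therefore report half as many map calls as the uncached one.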

```scala
scala> val lines = sc.textFile("hdfs://192.168.137.130:9000/test.txt")

scala> lines.cache
```

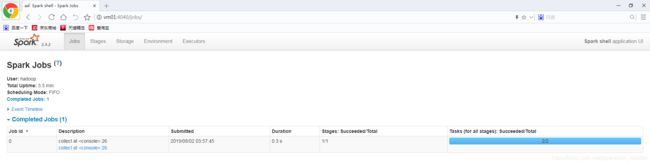

If you open the Spark UI at this point, no job has been launched yet, and the Storage tab shows no persisted data.

```scala
scala> lines.collect
res1: Array[String] = Array(hello spark, hello mr, hello yarn, hello hive, hello spark)
```

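A job is launched only when an action runs. After that, the Storage tab shows that the file's data has been persisted, 100% of it in memory. This shows that cache() is lazy: it only marks the RDD for persistence, even though it is not itself a transformation.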
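The same behaviour can be checked without the UI. This sketch makes the same assumptions (spark-core on the classpath, a local master, hypothetical names) and replaces the HDFS file with sc.parallelize over similar lines. After cache(), the RDD is already registered in sc.getPersistentRDDs. Even so, the map function does not run a single time until collect() launches a job.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical demo: cache() only marks the RDD; the first action does the work.
object CacheIsLazyDemo {
  // Returns (registered after cache(), map calls before the action, map calls after it).
  def run(sc: SparkContext): (Boolean, Long, Long) = {
    val calls = sc.longAccumulator("mapCalls")
    val lines = sc.parallelize(Seq("hello spark", "hello mr", "hello yarn"), 2)
      .map { s => calls.add(1); s.toUpperCase }

    lines.cache()                                            // marks the RDD, launches no job
    val registered = sc.getPersistentRDDs.contains(lines.id) // bookkeeping happens immediately
    val before = calls.sum                                   // still 0: nothing computed yet

    lines.collect()                                          // action: job runs, partitions get cached
    val after = calls.sum
    lines.unpersist()
    (registered, before, after)
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(new SparkConf().setAppName("CacheIsLazyDemo").setMaster("local[2]"))
    try println(run(sc))
    finally sc.stop()
  }
}
```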

2、The persist() and cache() Operators

2.1 cache() Source Code

The source of cache() shows that it simply calls persist(), which uses the default storage level (MEMORY_ONLY):

```scala
/**
 * Persist this RDD with the default storage level (`MEMORY_ONLY`).
 */
def cache(): this.type = persist()

/**
 * Persist this RDD with the default storage level (`MEMORY_ONLY`).
 */
def persist(): this.type = persist(StorageLevel.MEMORY_ONLY)
```
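Here is a quick way to confirm this equivalence from code, assuming spark-core on the classpath and a local master (names hypothetical). All three calls leave the RDD with the same StorageLevel, while an RDD that was never persisted reports StorageLevel.NONE. The level is only a marker, so no action is needed for this check.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

// Hypothetical demo: cache(), persist() and persist(MEMORY_ONLY) set the same level.
object CacheVsPersistDemo {
  def levels(sc: SparkContext): Seq[StorageLevel] = {
    val untouched = sc.parallelize(1 to 10)                                  // never persisted
    val viaCache = sc.parallelize(1 to 10).cache()                           // cache()
    val viaPersist = sc.parallelize(1 to 10).persist()                       // persist()
    val viaExplicit = sc.parallelize(1 to 10).persist(StorageLevel.MEMORY_ONLY)
    Seq(untouched, viaCache, viaPersist, viaExplicit).map(_.getStorageLevel)
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(new SparkConf().setAppName("CacheVsPersistDemo").setMaster("local[2]"))
    try levels(sc).foreach(level => println(level.description))
    finally sc.stop()
  }
}
```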

2.2 StorageLevel

```scala
/**
 * Various [[org.apache.spark.storage.StorageLevel]] defined and utility functions for creating
 * new storage levels.
 */
object StorageLevel {
  val NONE = new StorageLevel(false, false, false, false)
  val DISK_ONLY = new StorageLevel(true, false, false, false)
  val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2)
  val MEMORY_ONLY = new StorageLevel(false, true, false, true)
  val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)
  val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false)
  val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)
  val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)
  val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)
  val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)
  val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)
  val OFF_HEAP = new StorageLevel(true, true, true, false, 1)

  // ... remaining members omitted
}
```

Opening the source of the StorageLevel class used by new above shows what the true, false and 2 arguments mean:

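```scala
class StorageLevel private(
    private var _useDisk: Boolean,      // store on disk?
    private var _useMemory: Boolean,    // store in memory?
    private var _useOffHeap: Boolean,   // use off-heap memory?
    private var _deserialized: Boolean, // keep deserialized objects (false = serialized bytes)?
    private var _replication: Int = 1)  // number of replicas
  extends Externalizable {
  // ... class body omitted
}
```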
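Because the constructor is private, user code cannot call new StorageLevel(...) directly. The sketch below assumes spark-core on the classpath; no SparkContext is needed. It reads the five fields back through the public accessors useDisk, useMemory, useOffHeap, deserialized and replication. It then rebuilds MEMORY_AND_DISK_SER_2 both with the StorageLevel.apply factory and with StorageLevel.fromString. The object name is hypothetical.

```scala
import org.apache.spark.storage.StorageLevel

// Hypothetical helper: shows the five constructor fields of a StorageLevel.
object StorageLevelFlags {
  def flags(level: StorageLevel): String =
    s"useDisk=${level.useDisk}, useMemory=${level.useMemory}, useOffHeap=${level.useOffHeap}, " +
      s"deserialized=${level.deserialized}, replication=${level.replication}"

  def main(args: Array[String]): Unit = {
    Seq(
      "MEMORY_ONLY" -> StorageLevel.MEMORY_ONLY,
      "MEMORY_ONLY_SER" -> StorageLevel.MEMORY_ONLY_SER,
      "MEMORY_AND_DISK_SER_2" -> StorageLevel.MEMORY_AND_DISK_SER_2
    ).foreach { case (name, level) => println(s"$name: ${flags(level)}") }

    // The constructor is private; the companion object's factory methods build instances.
    val built = StorageLevel(true, true, false, false, 2) // disk, memory, on-heap, serialized, 2 replicas
    val parsed = StorageLevel.fromString("MEMORY_AND_DISK_SER_2")
    println(built == StorageLevel.MEMORY_AND_DISK_SER_2 && parsed == StorageLevel.MEMORY_AND_DISK_SER_2)
  }
}
```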

2.2.1 Using StorageLevel

```scala
scala> lines.unpersist(true)  // remove the cache; the earlier entry disappears from the UI

scala> import org.apache.spark.storage.StorageLevel  // import the StorageLevel class
```

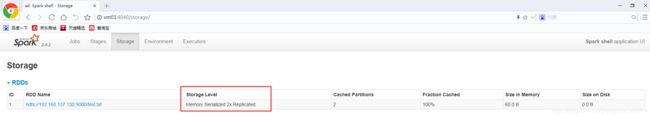

```scala
scala> lines.persist(StorageLevel.MEMORY_AND_DISK_SER_2)

scala> lines.count
19/08/02 04:26:21 WARN RandomBlockReplicationPolicy: Expecting 1 replicas with only 0 peer/s.
19/08/02 04:26:21 WARN BlockManager: Block rdd_1_1 replicated to only 0 peer(s) instead of 1 peers
19/08/02 04:26:21 WARN RandomBlockReplicationPolicy: Expecting 1 replicas with only 0 peer/s.
19/08/02 04:26:21 WARN BlockManager: Block rdd_1_0 replicated to only 0 peer(s) instead of 1 peers
res4: Long = 5
```

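The Storage tab in the UI now shows the new level: memory and disk, serialized, 2 replicas. The WARN lines appear because no other block manager (peer) is available to hold the second replica, so each block ends up stored only once.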
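The unpersist(true) call before persist(...) in the session above is required. Once an RDD has a storage level, Spark throws an UnsupportedOperationException if you ask for a different one. The following minimal sketch demonstrates that rule, assuming spark-core on the classpath and a local master (names hypothetical). In local mode, count() prints the same replication warnings as above.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel
import scala.util.Try

// Hypothetical demo: changing the level of an already-persisted RDD needs unpersist() first.
object ChangeStorageLevelDemo {
  // Returns (did the direct switch fail?, level after unpersist + persist).
  def run(sc: SparkContext): (Boolean, StorageLevel) = {
    val lines = sc.parallelize(Seq("hello spark", "hello mr"), 2)
    lines.cache() // level is now MEMORY_ONLY

    // Asking for a different level while one is assigned throws UnsupportedOperationException.
    val switchFailed = Try(lines.persist(StorageLevel.MEMORY_AND_DISK_SER_2)).isFailure

    lines.unpersist(blocking = true) // resets the level to NONE
    lines.persist(StorageLevel.MEMORY_AND_DISK_SER_2)
    lines.count() // materializes the blocks at the new level
    val finalLevel = lines.getStorageLevel
    lines.unpersist(blocking = true)
    (switchFailed, finalLevel)
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(new SparkConf().setAppName("ChangeStorageLevelDemo").setMaster("local[2]"))
    try println(run(sc))
    finally sc.stop()
  }
}
```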

2.3 Choosing a StorageLevel

Spark’s storage levels are meant to provide different trade-offs between memory usage and CPU efficiency. We recommend going through the following process to select one:


- If your RDDs fit comfortably with the default storage level (MEMORY_ONLY), leave them that way. This is the most CPU-efficient option, allowing operations on the RDDs to run as fast as possible.


  The default is there for a good reason: in practice, MEMORY_ONLY or MEMORY_ONLY_SER is usually the one to pick.

- If not, try using MEMORY_ONLY_SER and selecting a fast serialization library to make the objects much more space-efficient, but still reasonably fast to access. (Java and Scala)


  Serialization reduces the size of the cached data, but costs CPU time.

- Don’t spill to disk unless the functions that computed your datasets are expensive, or they filter a large amount of the data. Otherwise, recomputing a partition may be as fast as reading it from disk.


  In that case, caching to disk buys you nothing. In Spark Core, the storage levels that involve disk are generally best avoided.

- Use the replicated storage levels if you want fast fault recovery (e.g. if using Spark to serve requests from a web application). All the storage levels provide full fault tolerance by recomputing lost data, but the replicated ones let you continue running tasks on the RDD without waiting to recompute a lost partition.

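One way to read the four guidelines above is as a small decision function. The helper below is hypothetical and deliberately simplistic: its boolean inputs stand for judgements you make about your own data. It assumes only spark-core on the classpath, for the StorageLevel and SparkConf classes. It also shows the spark.serializer setting that switches Spark to Kryo, the serializer the Spark tuning guide recommends over Java serialization.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.storage.StorageLevel

// Hypothetical helper that encodes the four guidelines as a decision function.
object ChooseStorageLevel {
  def choose(
      fitsDeserialized: Boolean,     // guideline 1: data fits comfortably with MEMORY_ONLY
      fitsSerialized: Boolean,       // guideline 2: data fits once serialized
      expensiveToRecompute: Boolean, // guideline 3: only then is spilling to disk worth it
      needFastRecovery: Boolean      // guideline 4: keep a second replica
  ): StorageLevel = {
    val base =
      if (fitsDeserialized) StorageLevel.MEMORY_ONLY
      else if (fitsSerialized) StorageLevel.MEMORY_ONLY_SER
      else if (expensiveToRecompute) StorageLevel.MEMORY_AND_DISK_SER
      else StorageLevel.MEMORY_ONLY_SER // partitions that do not fit are recomputed when needed
    if (needFastRecovery)
      StorageLevel(base.useDisk, base.useMemory, base.useOffHeap, base.deserialized, 2)
    else base
  }

  // Switches the serializer to Kryo, typically faster and more compact than Java serialization.
  def kryoConf(): SparkConf =
    new SparkConf().set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

  def main(args: Array[String]): Unit = {
    val level = choose(fitsDeserialized = false, fitsSerialized = true,
      expensiveToRecompute = false, needFastRecovery = true)
    println(level.description)
    println(kryoConf().get("spark.serializer"))
  }
}
```

The ordering of the checks mirrors the order of the guidelines. Replication is applied last because it is independent of where the data lives.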

2.4 Removing Cached Data with RDD.unpersist()

Spark automatically monitors cache usage on each node and drops out old data partitions in a least-recently-used (LRU) fashion. If you would like to manually remove an RDD instead of waiting for it to fall out of the cache, use the RDD.unpersist() method.

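To make the LRU policy concrete, here is a toy model in plain Scala (no Spark needed) built on java.util.LinkedHashMap in access order. It is only an illustration. Spark's MemoryStore keeps its blocks in a similar access-ordered map, but its eviction applies extra rules; for example, it will not evict blocks of the RDD it is currently trying to store. The class name is hypothetical. The keys follow the rdd_<rddId>_<partition> block-name format seen in the log above.

```scala
// Toy model of LRU eviction; NOT Spark's MemoryStore.
class ToyLruCache[K, V](capacity: Int)
    extends java.util.LinkedHashMap[K, V](16, 0.75f, true) { // accessOrder = true: get() refreshes recency
  // Called by put(): returning true evicts the least-recently-used entry.
  override protected def removeEldestEntry(eldest: java.util.Map.Entry[K, V]): Boolean =
    size() > capacity
}

object ToyLruDemo {
  def main(args: Array[String]): Unit = {
    val blocks = new ToyLruCache[String, String](2)
    blocks.put("rdd_1_0", "partition 0 of RDD 1")
    blocks.put("rdd_1_1", "partition 1 of RDD 1")
    blocks.get("rdd_1_0")                         // touch rdd_1_0, so rdd_1_1 becomes the eldest
    blocks.put("rdd_2_0", "partition 0 of RDD 2") // over capacity: rdd_1_1 is evicted
    println(blocks.keySet())                      // prints [rdd_1_0, rdd_2_0]
  }
}
```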

At the end of your program, before sc.stop(), it is good practice to call RDD.unpersist() to release the cached data.
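The following minimal sketch shows that clean-up order in a standalone application, assuming spark-core on the classpath and a local master (names hypothetical). Two actions use the cached RDD. It is then released with unpersist(blocking = true), and only after that is the context stopped, in a finally block. After unpersist(), the RDD is no longer listed in sc.getPersistentRDDs.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical demo: reuse a cached RDD, release it, then stop the context.
object UnpersistBeforeStop {
  // Returns (word count, distinct word count, still registered as persistent?).
  def run(sc: SparkContext): (Long, Long, Boolean) = {
    val words = sc.parallelize(Seq("hello spark", "hello mr", "hello yarn"), 2)
      .flatMap(_.split(" "))
      .cache()
    val total = words.count()               // first action computes and caches the words
    val distinct = words.distinct().count() // second action reads the cached partitions
    words.unpersist(blocking = true)        // drop the cached blocks before shutting down
    (total, distinct, sc.getPersistentRDDs.contains(words.id))
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(new SparkConf().setAppName("UnpersistBeforeStop").setMaster("local[2]"))
    try println(run(sc))
    finally sc.stop() // stop only after the cache has been released
  }
}
```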