PostgreSQL中的等待事件wait_event

PostgreSQL9.6版本开始,加入了wait_event特性。通过查阅pg_stat_activity中的wait_event_type和wait_event我们可以了解到每个sql进程“当前”更详细的执行状态,无论是对于异常定位排查,还是系统优化来说都更加方便了。

这篇博文简单讨论下wait_event相关的原理。

一、等待事件分类

I.事件类型 :

共有9个分类,每一类都由不同事件组成

/* ----------

* Wait Classes

* ----------

*/

#define PG_WAIT_LWLOCK 0x01000000U /* 等待LWLock */

#define PG_WAIT_LOCK 0x03000000U /* 等待Lock */

#define PG_WAIT_BUFFER_PIN 0x04000000U /* 等待访问数据缓冲区 */

#define PG_WAIT_ACTIVITY 0x05000000U /* 服务器进程处于空闲状态 */

#define PG_WAIT_CLIENT 0x06000000U /* 等待应用客户端程序在套接字中进行操作 */

#define PG_WAIT_EXTENSION 0x07000000U /* 等待扩展模块中的操作 */

#define PG_WAIT_IPC 0x08000000U /* 等待进程间通信 */

#define PG_WAIT_TIMEOUT 0x09000000U /* 等待达到超时时间 */

#define PG_WAIT_IO 0x0A000000U /* 等待IO操作完成 */

II. 具体事件:

每种类型和具体事件含义请参考:https://www.postgresql.org/docs/12/monitoring-stats.html

1.PG_WAIT_LWLOCK类

/* 等待LWLock */

定义共有65种事件

const char *const MainLWLockNames[] = {

"" ,

"ShmemIndexLock",

"OidGenLock",

"XidGenLock",

"ProcArrayLock",

"SInvalReadLock",

"SInvalWriteLock",

"WALBufMappingLock",

"WALWriteLock",

"ControlFileLock",

"CheckpointLock",

"CLogControlLock",

"SubtransControlLock",

"MultiXactGenLock",

"MultiXactOffsetControlLock",

"MultiXactMemberControlLock",

"RelCacheInitLock",

"CheckpointerCommLock",

"TwoPhaseStateLock",

"TablespaceCreateLock",

"BtreeVacuumLock",

"AddinShmemInitLock",

"AutovacuumLock",

"AutovacuumScheduleLock",

"SyncScanLock",

"RelationMappingLock",

"AsyncCtlLock",

"AsyncQueueLock",

"SerializableXactHashLock",

"SerializableFinishedListLock",

"SerializablePredicateLockListLock",

"OldSerXidLock",

"SyncRepLock",

"BackgroundWorkerLock",

"DynamicSharedMemoryControlLock",

"AutoFileLock",

"ReplicationSlotAllocationLock",

"ReplicationSlotControlLock",

"CommitTsControlLock",

"CommitTsLock",

"ReplicationOriginLock",

"MultiXactTruncationLock",

"OldSnapshotTimeMapLock",

"LogicalRepWorkerLock",

"CLogTruncationLock"

};

typedef enum BuiltinTrancheIds

{

LWTRANCHE_CLOG_BUFFERS = NUM_INDIVIDUAL_LWLOCKS,

LWTRANCHE_COMMITTS_BUFFERS,

LWTRANCHE_SUBTRANS_BUFFERS,

LWTRANCHE_MXACTOFFSET_BUFFERS,

LWTRANCHE_MXACTMEMBER_BUFFERS,

LWTRANCHE_ASYNC_BUFFERS,

LWTRANCHE_OLDSERXID_BUFFERS,

LWTRANCHE_WAL_INSERT,

LWTRANCHE_BUFFER_CONTENT,

LWTRANCHE_BUFFER_IO_IN_PROGRESS,

LWTRANCHE_REPLICATION_ORIGIN,

LWTRANCHE_REPLICATION_SLOT_IO_IN_PROGRESS,

LWTRANCHE_PROC,

LWTRANCHE_BUFFER_MAPPING,

LWTRANCHE_LOCK_MANAGER,

LWTRANCHE_PREDICATE_LOCK_MANAGER,

LWTRANCHE_PARALLEL_HASH_JOIN,

LWTRANCHE_PARALLEL_QUERY_DSA,

LWTRANCHE_SESSION_DSA,

LWTRANCHE_SESSION_RECORD_TABLE,

LWTRANCHE_SESSION_TYPMOD_TABLE,

LWTRANCHE_SHARED_TUPLESTORE,

LWTRANCHE_TBM,

LWTRANCHE_PARALLEL_APPEND,

LWTRANCHE_FIRST_USER_DEFINED

} BuiltinTrancheIds;

2. PG_WAIT_LOCK类型

/* 等待Lock */

共有10种事件

/*

* LOCKTAG is the key information needed to look up a LOCK item in the

* lock hashtable. A LOCKTAG value uniquely identifies a lockable object.

*

* The LockTagType enum defines the different kinds of objects we can lock.

* We can handle up to 256 different LockTagTypes.

*/

typedef enum LockTagType

{

LOCKTAG_RELATION, /* whole relation */

LOCKTAG_RELATION_EXTEND, /* the right to extend a relation */

LOCKTAG_PAGE, /* one page of a relation */

LOCKTAG_TUPLE, /* one physical tuple */

LOCKTAG_TRANSACTION, /* transaction (for waiting for xact done) */

LOCKTAG_VIRTUALTRANSACTION, /* virtual transaction (ditto) */

LOCKTAG_SPECULATIVE_TOKEN, /* speculative insertion Xid and token */

LOCKTAG_OBJECT, /* non-relation database object */

LOCKTAG_USERLOCK, /* reserved for old contrib/userlock code */

LOCKTAG_ADVISORY /* advisory user locks */

} LockTagType;

3. PG_WAIT_BUFFER_PIN类型

/* 等待访问数据缓冲区 */

共有1种事件

/* ----------

* pgstat_get_wait_event() -

*

* Return a string representing the current wait event, backend is

* waiting on.

*/

const char *

pgstat_get_wait_event(uint32 wait_event_info)

{

/* 省略部分代码行 */

switch (classId)

{

/* 省略其他分支 */

case PG_WAIT_BUFFER_PIN:

event_name = "BufferPin";

break;

/* 省略其他分支 */

}

return event_name;

}

4. PG_WAIT_ACTIVITY类型

/* 当前服务器进程处于空闲状态 */

共有14种事件,分别对应14个进程主函数

typedef enum

{

WAIT_EVENT_ARCHIVER_MAIN = PG_WAIT_ACTIVITY,

WAIT_EVENT_AUTOVACUUM_MAIN,

WAIT_EVENT_BGWRITER_HIBERNATE,

WAIT_EVENT_BGWRITER_MAIN,

WAIT_EVENT_CHECKPOINTER_MAIN,

WAIT_EVENT_LOGICAL_APPLY_MAIN,

WAIT_EVENT_LOGICAL_LAUNCHER_MAIN,

WAIT_EVENT_PGSTAT_MAIN,

WAIT_EVENT_RECOVERY_WAL_ALL,

WAIT_EVENT_RECOVERY_WAL_STREAM,

WAIT_EVENT_SYSLOGGER_MAIN,

WAIT_EVENT_WAL_RECEIVER_MAIN,

WAIT_EVENT_WAL_SENDER_MAIN,

WAIT_EVENT_WAL_WRITER_MAIN

} WaitEventActivity;

5. PG_WAIT_CLIENT类型

/* 等待应用客户端程序在套接字中进行操作 */

共有9种事件

/* ----------

* Wait Events - Client

*

* Use this category when a process is waiting to send data to or receive data

* from the frontend process to which it is connected. This is never used for

* a background process, which has no client connection.

* ----------

*/

typedef enum

{

WAIT_EVENT_CLIENT_READ = PG_WAIT_CLIENT,

WAIT_EVENT_CLIENT_WRITE,

WAIT_EVENT_LIBPQWALRECEIVER_CONNECT,

WAIT_EVENT_LIBPQWALRECEIVER_RECEIVE,

WAIT_EVENT_SSL_OPEN_SERVER,

WAIT_EVENT_WAL_RECEIVER_WAIT_START,

WAIT_EVENT_WAL_SENDER_WAIT_WAL,

WAIT_EVENT_WAL_SENDER_WRITE_DATA,

WAIT_EVENT_GSS_OPEN_SERVER,

} WaitEventClient;

6. PG_WAIT_EXTENSION类型

/* 等待扩展模块中的操作 */

共有1种事件

/* ----------

* pgstat_get_wait_event() -

*

* Return a string representing the current wait event, backend is

* waiting on.

*/

const char *

pgstat_get_wait_event(uint32 wait_event_info)

{

/* 省略部分代码行 */

switch (classId)

{

/* 省略其他分支 */

case PG_WAIT_EXTENSION:

event_name = "Extension";

break;

/* 省略其他分支 */

}

return event_name;

}

7. PG_WAIT_IPC类型

/* 等待进程间通信 */

共有37种事件

typedef enum

{

WAIT_EVENT_BGWORKER_SHUTDOWN = PG_WAIT_IPC,

WAIT_EVENT_BGWORKER_STARTUP,

WAIT_EVENT_BTREE_PAGE,

WAIT_EVENT_CLOG_GROUP_UPDATE,

WAIT_EVENT_CHECKPOINT_DONE,

WAIT_EVENT_CHECKPOINT_START,

WAIT_EVENT_EXECUTE_GATHER,

WAIT_EVENT_HASH_BATCH_ALLOCATING,

WAIT_EVENT_HASH_BATCH_ELECTING,

WAIT_EVENT_HASH_BATCH_LOADING,

WAIT_EVENT_HASH_BUILD_ALLOCATING,

WAIT_EVENT_HASH_BUILD_ELECTING,

WAIT_EVENT_HASH_BUILD_HASHING_INNER,

WAIT_EVENT_HASH_BUILD_HASHING_OUTER,

WAIT_EVENT_HASH_GROW_BATCHES_ALLOCATING,

WAIT_EVENT_HASH_GROW_BATCHES_DECIDING,

WAIT_EVENT_HASH_GROW_BATCHES_ELECTING,

WAIT_EVENT_HASH_GROW_BATCHES_FINISHING,

WAIT_EVENT_HASH_GROW_BATCHES_REPARTITIONING,

WAIT_EVENT_HASH_GROW_BUCKETS_ALLOCATING,

WAIT_EVENT_HASH_GROW_BUCKETS_ELECTING,

WAIT_EVENT_HASH_GROW_BUCKETS_REINSERTING,

WAIT_EVENT_LOGICAL_SYNC_DATA,

WAIT_EVENT_LOGICAL_SYNC_STATE_CHANGE,

WAIT_EVENT_MQ_INTERNAL,

WAIT_EVENT_MQ_PUT_MESSAGE,

WAIT_EVENT_MQ_RECEIVE,

WAIT_EVENT_MQ_SEND,

WAIT_EVENT_PARALLEL_BITMAP_SCAN,

WAIT_EVENT_PARALLEL_CREATE_INDEX_SCAN,

WAIT_EVENT_PARALLEL_FINISH,

WAIT_EVENT_PROCARRAY_GROUP_UPDATE,

WAIT_EVENT_PROMOTE,

WAIT_EVENT_REPLICATION_ORIGIN_DROP,

WAIT_EVENT_REPLICATION_SLOT_DROP,

WAIT_EVENT_SAFE_SNAPSHOT,

WAIT_EVENT_SYNC_REP

} WaitEventIPC;

8. PG_WAIT_TIMEOUT类型

/* 等待达到超时时间 */

共有3种事件

/* ----------

* Wait Events - Timeout

*

* Use this category when a process is waiting for a timeout to expire.

* ----------

*/

typedef enum

{

WAIT_EVENT_BASE_BACKUP_THROTTLE = PG_WAIT_TIMEOUT,

WAIT_EVENT_PG_SLEEP,

WAIT_EVENT_RECOVERY_APPLY_DELAY

} WaitEventTimeout;

9. PG_WAIT_IO类型

/* 等待IO操作完成 */

共68种事件

/* ----------

* Wait Events - IO

*

* Use this category when a process is waiting for a IO.

* ----------

*/

typedef enum

{

WAIT_EVENT_BUFFILE_READ = PG_WAIT_IO,

WAIT_EVENT_BUFFILE_WRITE,

WAIT_EVENT_CONTROL_FILE_READ,

WAIT_EVENT_CONTROL_FILE_SYNC,

WAIT_EVENT_CONTROL_FILE_SYNC_UPDATE,

WAIT_EVENT_CONTROL_FILE_WRITE,

WAIT_EVENT_CONTROL_FILE_WRITE_UPDATE,

WAIT_EVENT_COPY_FILE_READ,

WAIT_EVENT_COPY_FILE_WRITE,

WAIT_EVENT_DATA_FILE_EXTEND,

WAIT_EVENT_DATA_FILE_FLUSH,

WAIT_EVENT_DATA_FILE_IMMEDIATE_SYNC,

WAIT_EVENT_DATA_FILE_PREFETCH,

WAIT_EVENT_DATA_FILE_READ,

WAIT_EVENT_DATA_FILE_SYNC,

WAIT_EVENT_DATA_FILE_TRUNCATE,

WAIT_EVENT_DATA_FILE_WRITE,

WAIT_EVENT_DSM_FILL_ZERO_WRITE,

WAIT_EVENT_LOCK_FILE_ADDTODATADIR_READ,

WAIT_EVENT_LOCK_FILE_ADDTODATADIR_SYNC,

WAIT_EVENT_LOCK_FILE_ADDTODATADIR_WRITE,

WAIT_EVENT_LOCK_FILE_CREATE_READ,

WAIT_EVENT_LOCK_FILE_CREATE_SYNC,

WAIT_EVENT_LOCK_FILE_CREATE_WRITE,

WAIT_EVENT_LOCK_FILE_RECHECKDATADIR_READ,

WAIT_EVENT_LOGICAL_REWRITE_CHECKPOINT_SYNC,

WAIT_EVENT_LOGICAL_REWRITE_MAPPING_SYNC,

WAIT_EVENT_LOGICAL_REWRITE_MAPPING_WRITE,

WAIT_EVENT_LOGICAL_REWRITE_SYNC,

WAIT_EVENT_LOGICAL_REWRITE_TRUNCATE,

WAIT_EVENT_LOGICAL_REWRITE_WRITE,

WAIT_EVENT_RELATION_MAP_READ,

WAIT_EVENT_RELATION_MAP_SYNC,

WAIT_EVENT_RELATION_MAP_WRITE,

WAIT_EVENT_REORDER_BUFFER_READ,

WAIT_EVENT_REORDER_BUFFER_WRITE,

WAIT_EVENT_REORDER_LOGICAL_MAPPING_READ,

WAIT_EVENT_REPLICATION_SLOT_READ,

WAIT_EVENT_REPLICATION_SLOT_RESTORE_SYNC,

WAIT_EVENT_REPLICATION_SLOT_SYNC,

WAIT_EVENT_REPLICATION_SLOT_WRITE,

WAIT_EVENT_SLRU_FLUSH_SYNC,

WAIT_EVENT_SLRU_READ,

WAIT_EVENT_SLRU_SYNC,

WAIT_EVENT_SLRU_WRITE,

WAIT_EVENT_SNAPBUILD_READ,

WAIT_EVENT_SNAPBUILD_SYNC,

WAIT_EVENT_SNAPBUILD_WRITE,

WAIT_EVENT_TIMELINE_HISTORY_FILE_SYNC,

WAIT_EVENT_TIMELINE_HISTORY_FILE_WRITE,

WAIT_EVENT_TIMELINE_HISTORY_READ,

WAIT_EVENT_TIMELINE_HISTORY_SYNC,

WAIT_EVENT_TIMELINE_HISTORY_WRITE,

WAIT_EVENT_TWOPHASE_FILE_READ,

WAIT_EVENT_TWOPHASE_FILE_SYNC,

WAIT_EVENT_TWOPHASE_FILE_WRITE,

WAIT_EVENT_WALSENDER_TIMELINE_HISTORY_READ,

WAIT_EVENT_WAL_BOOTSTRAP_SYNC,

WAIT_EVENT_WAL_BOOTSTRAP_WRITE,

WAIT_EVENT_WAL_COPY_READ,

WAIT_EVENT_WAL_COPY_SYNC,

WAIT_EVENT_WAL_COPY_WRITE,

WAIT_EVENT_WAL_INIT_SYNC,

WAIT_EVENT_WAL_INIT_WRITE,

WAIT_EVENT_WAL_READ,

WAIT_EVENT_WAL_SYNC,

WAIT_EVENT_WAL_SYNC_METHOD_ASSIGN,

WAIT_EVENT_WAL_WRITE

} WaitEventIO;

二、等待事件原理

等待事件较多,挑几种出来分析一下实现原理

从9.6版本开始,加入了该特性,体现在backend共享内存结构体PGPROC中新增了uint32 wait_event_info; /* proc’s wait information */如下#82行

struct PGPROC

{

/* proc->links MUST BE FIRST IN STRUCT (see ProcSleep,ProcWakeup,etc) */

SHM_QUEUE links; /* list link if process is in a list */

PGPROC **procgloballist; /* procglobal list that owns this PGPROC */

PGSemaphore sem; /* ONE semaphore to sleep on */

int waitStatus; /* STATUS_WAITING, STATUS_OK or STATUS_ERROR */

Latch procLatch; /* generic latch for process */

LocalTransactionId lxid; /* local id of top-level transaction currently

* being executed by this proc, if running;

* else InvalidLocalTransactionId */

int pid; /* Backend's process ID; 0 if prepared xact */

int pgprocno;

/* These fields are zero while a backend is still starting up: */

BackendId backendId; /* This backend's backend ID (if assigned) */

Oid databaseId; /* OID of database this backend is using */

Oid roleId; /* OID of role using this backend */

Oid tempNamespaceId; /* OID of temp schema this backend is

* using */

bool isBackgroundWorker; /* true if background worker. */

/*

* While in hot standby mode, shows that a conflict signal has been sent

* for the current transaction. Set/cleared while holding ProcArrayLock,

* though not required. Accessed without lock, if needed.

*/

bool recoveryConflictPending;

/* Info about LWLock the process is currently waiting for, if any. */

bool lwWaiting; /* true if waiting for an LW lock */

uint8 lwWaitMode; /* lwlock mode being waited for */

proclist_node lwWaitLink; /* position in LW lock wait list */

/* Support for condition variables. */

proclist_node cvWaitLink; /* position in CV wait list */

/* Info about lock the process is currently waiting for, if any. */

/* waitLock and waitProcLock are NULL if not currently waiting. */

LOCK *waitLock; /* Lock object we're sleeping on ... */

PROCLOCK *waitProcLock; /* Per-holder info for awaited lock */

LOCKMODE waitLockMode; /* type of lock we're waiting for */

LOCKMASK heldLocks; /* bitmask for lock types already held on this

* lock object by this backend */

/*

* Info to allow us to wait for synchronous replication, if needed.

* waitLSN is InvalidXLogRecPtr if not waiting; set only by user backend.

* syncRepState must not be touched except by owning process or WALSender.

* syncRepLinks used only while holding SyncRepLock.

*/

XLogRecPtr waitLSN; /* waiting for this LSN or higher */

int syncRepState; /* wait state for sync rep */

SHM_QUEUE syncRepLinks; /* list link if process is in syncrep queue */

/*

* All PROCLOCK objects for locks held or awaited by this backend are

* linked into one of these lists, according to the partition number of

* their lock.

*/

SHM_QUEUE myProcLocks[NUM_LOCK_PARTITIONS];

struct XidCache subxids; /* cache for subtransaction XIDs */

/* Support for group XID clearing. */

/* true, if member of ProcArray group waiting for XID clear */

bool procArrayGroupMember;

/* next ProcArray group member waiting for XID clear */

pg_atomic_uint32 procArrayGroupNext;

/*

* latest transaction id among the transaction's main XID and

* subtransactions

*/

TransactionId procArrayGroupMemberXid;

uint32 wait_event_info; /* proc's wait information */

/* Support for group transaction status update. */

bool clogGroupMember; /* true, if member of clog group */

pg_atomic_uint32 clogGroupNext; /* next clog group member */

TransactionId clogGroupMemberXid; /* transaction id of clog group member */

XidStatus clogGroupMemberXidStatus; /* transaction status of clog

* group member */

int clogGroupMemberPage; /* clog page corresponding to

* transaction id of clog group member */

XLogRecPtr clogGroupMemberLsn; /* WAL location of commit record for clog

* group member */

/* Per-backend LWLock. Protects fields below (but not group fields). */

LWLock backendLock;

/* Lock manager data, recording fast-path locks taken by this backend. */

uint64 fpLockBits; /* lock modes held for each fast-path slot */

Oid fpRelId[FP_LOCK_SLOTS_PER_BACKEND]; /* slots for rel oids */

bool fpVXIDLock; /* are we holding a fast-path VXID lock? */

LocalTransactionId fpLocalTransactionId; /* lxid for fast-path VXID

* lock */

/*

* Support for lock groups. Use LockHashPartitionLockByProc on the group

* leader to get the LWLock protecting these fields.

*/

PGPROC *lockGroupLeader; /* lock group leader, if I'm a member */

dlist_head lockGroupMembers; /* list of members, if I'm a leader */

dlist_node lockGroupLink; /* my member link, if I'm a member */

};

I.详细分析一个Lock类型的事件,了解等待事件的具体实现

如下sql进行update,等待事件类型为Lock,具体事件为transactionid

postgres=# select pid,wait_event_type,wait_event,query from pg_stat_activity where pid=21318;

-[ RECORD 1 ]---+-------------------------------------

pid | 21318

wait_event_type | Lock

wait_event | transactionid

query | update test_tbl set id=4 where id=3;

打印stack信息:

[postgres@postgres_zabbix ~]$ pstack 21318

#0 0x00007f1706ee95e3 in __epoll_wait_nocancel () from /lib64/libc.so.6

#1 0x0000000000853571 in WaitEventSetWaitBlock (set=0x2164778, cur_timeout=-1, occurred_events=0x7ffcac6f15b0, nevents=1) at latch.c:1080

#2 0x000000000085344c in WaitEventSetWait (set=0x2164778, timeout=-1, occurred_events=0x7ffcac6f15b0, nevents=1, wait_event_info=50331652) at latch.c:1032

#3 0x0000000000852d38 in WaitLatchOrSocket (latch=0x7f17001cf5c4, wakeEvents=33, sock=-1, timeout=-1, wait_event_info=50331652) at latch.c:407

#4 0x0000000000852c03 in WaitLatch (latch=0x7f17001cf5c4, wakeEvents=33, timeout=0, wait_event_info=50331652) at latch.c:347

#5 0x0000000000867ccb in ProcSleep (locallock=0x2072938, lockMethodTable=0xb8f5a0 <default_lockmethod>) at proc.c:1289

#6 0x0000000000861f98 in WaitOnLock (locallock=0x2072938, owner=0x2081d40) at lock.c:1768

#7 0x00000000008610be in LockAcquireExtended (locktag=0x7ffcac6f1a90, lockmode=5, sessionLock=false, dontWait=false, reportMemoryError=true, locallockp=0x0) at lock.c:1050

#8 0x0000000000860713 in LockAcquire (locktag=0x7ffcac6f1a90, lockmode=5, sessionLock=false, dontWait=false) at lock.c:713

#9 0x000000000085f592 in XactLockTableWait (xid=501, rel=0x7f1707c8fb10, ctid=0x7ffcac6f1b44, oper=XLTW_Update) at lmgr.c:658

#10 0x00000000004c99c9 in heap_update (relation=0x7f1707c8fb10, otid=0x7ffcac6f1e60, newtup=0x2164708, cid=1, crosscheck=0x0, wait=true, tmfd=0x7ffcac6f1d60, lockmode=0x7ffcac6f1d5c) at heapam.c:3228

#11 0x00000000004d411c in heapam_tuple_update (relation=0x7f1707c8fb10, otid=0x7ffcac6f1e60, slot=0x2162108, cid=1, snapshot=0x2074500, crosscheck=0x0, wait=true, tmfd=0x7ffcac6f1d60, lockmode=0x7ffcac6f1d5c, update_indexes=0x7ffcac6f1d5b) at heapam_handler.c:332

#12 0x00000000006db007 in table_tuple_update (rel=0x7f1707c8fb10, otid=0x7ffcac6f1e60, slot=0x2162108, cid=1, snapshot=0x2074500, crosscheck=0x0, wait=true, tmfd=0x7ffcac6f1d60, lockmode=0x7ffcac6f1d5c, update_indexes=0x7ffcac6f1d5b) at ../../../src/include/access/tableam.h:1275

#13 0x00000000006dce83 in ExecUpdate (mtstate=0x2160b40, tupleid=0x7ffcac6f1e60, oldtuple=0x0, slot=0x2162108, planSlot=0x21613a0, epqstate=0x2160c38, estate=0x21607c0, canSetTag=true) at nodeModifyTable.c:1311

#14 0x00000000006de36c in ExecModifyTable (pstate=0x2160b40) at nodeModifyTable.c:2222

#15 0x00000000006b2b07 in ExecProcNodeFirst (node=0x2160b40) at execProcnode.c:445

#16 0x00000000006a8ce7 in ExecProcNode (node=0x2160b40) at ../../../src/include/executor/executor.h:239

#17 0x00000000006ab063 in ExecutePlan (estate=0x21607c0, planstate=0x2160b40, use_parallel_mode=false, operation=CMD_UPDATE, sendTuples=false, numberTuples=0, direction=ForwardScanDirection, dest=0x2146860, execute_once=true) at execMain.c:1646

#18 0x00000000006a91c4 in standard_ExecutorRun (queryDesc=0x2152ab0, direction=ForwardScanDirection, count=0, execute_once=true) at execMain.c:364

#19 0x00000000006a9069 in ExecutorRun (queryDesc=0x2152ab0, direction=ForwardScanDirection, count=0, execute_once=true) at execMain.c:308

#20 0x000000000088017a in ProcessQuery (plan=0x2146780, sourceText=0x204b040 "update test_tbl set id=4 where id=3;", params=0x0, queryEnv=0x0, dest=0x2146860, completionTag=0x7ffcac6f2270 "") at pquery.c:161

#21 0x00000000008818c1 in PortalRunMulti (portal=0x20b6570, isTopLevel=true, setHoldSnapshot=false, dest=0x2146860, altdest=0x2146860, completionTag=0x7ffcac6f2270 "") at pquery.c:1283

#22 0x0000000000880efb in PortalRun (portal=0x20b6570, count=9223372036854775807, isTopLevel=true, run_once=true, dest=0x2146860, altdest=0x2146860, completionTag=0x7ffcac6f2270 "") at pquery.c:796

#23 0x000000000087b28f in exec_simple_query (query_string=0x204b040 "update test_tbl set id=4 where id=3;") at postgres.c:1215

#24 0x000000000087f30f in PostgresMain (argc=1, argv=0x207a6e0, dbname=0x207a578 "postgres", username=0x207a558 "postgres") at postgres.c:4247

#25 0x00000000007e6a9e in BackendRun (port=0x20702a0) at postmaster.c:4437

#26 0x00000000007e629d in BackendStartup (port=0x20702a0) at postmaster.c:4128

#27 0x00000000007e293d in ServerLoop () at postmaster.c:1704

#28 0x00000000007e21fd in PostmasterMain (argc=1, argv=0x2045c00) at postmaster.c:1377

#29 0x000000000070f76d in main (argc=1, argv=0x2045c00) at main.c:228

[postgres@postgres_zabbix ~]$

主要关注以下几行

#8行申请锁,lockmode为5即ShareLock

#4行设置等待事件为wait_event_info=50331652

#0行进入epoll_wait中观察list链表,并sleep

#0 0x00007f1706ee95e3 in __epoll_wait_nocancel () from /lib64/libc.so.6

#4 0x0000000000852c03 in WaitLatch (latch=0x7f17001cf5c4, wakeEvents=33, timeout=0, wait_event_info=50331652) at latch.c:347

#8 0x0000000000860713 in LockAcquire (locktag=0x7ffcac6f1a90, lockmode=5, sessionLock=false, dontWait=false) at lock.c:713

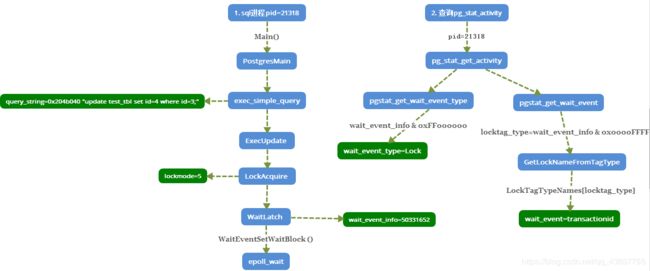

使用框图简单描述下wait_event的设置,以及wait_event的查询显示的代码调用逻辑。

这里蓝色框表示函数入口,绿色框表示变量

1)左半边框图表示event的设置,可以看到该update申请Lock时,具体设置的等待事件为wait_event_info=50331652

2)右半边框图表示event的查询显示,可以看到在pg_stat_activity中查询到该update语句对应的等待事件类型为Lock,具体事件为wait_event=transactionid

主要分析下如何从wait_event_info=50331652得到wait_event=transactionid

50331652是一个十进制数,之前有提到postgresql中等待事件类型的宏定义对应的实际值都是使用无符号16进制数来表示。

将50331652转化为无符号16进制为0x03000004U,

关注框图右侧部分的逻辑

a. wait_event_type 的运算取值:

wait_event_type = wait_event_info & 0xFF000000

= 0x03000004U & 0xFF000000

意为保留wait_event_info的高两位,运算值为0x03000000U,这个值比较眼熟吧,正是lock类型的定义

#define PG_WAIT_LOCK 0x03000000U

b. wait_event的运算取值:

step1 求locktag_type

locktag_type=wait_event_info & 0x0000FFFF

= 0x03000004U & 0x0000FFFF

意为保留wait_event_info的的低四位,运算值为0x00000004U,对应十进制值为4

step2 求wait_event

wait_event=LockTagTypeNames[locktag_type]

=LockTagTypeNames[4]

值为LockTagTypeNames下标为4的成员,从LockTagTypeNames数组定义可以找到下标为4对应的正是transactionid

/* This must match enum LockTagType! */

const char *const LockTagTypeNames[] = {

"relation",

"extend",

"page",

"tuple",

"transactionid",

"virtualxid",

"speculative token",

"object",

"userlock",

"advisory"

};

II.简单列举下其他几种类型

1.PG_WAIT_LWLOCK 类的buffer_content事件

如下一个表进行autovacuum ,可以看到当前的等待事件类型为LWLock,具体事件为buffer_content

-[ RECORD 1 ]---+----------------------------------------------------------------

pid 202623

wait_event_type | LWLock

wait_event | buffer_content

query | autovacuum: VACUUM public.apply_info_search (to prevent wraparound

backend_type | autovacuum worker

来看下stack信息:

[postgres@postgres_zabbix ~]$ pstack 202623

#0 0x002aae05c12aob in do_futex_wait.constprop.1 () from/lib64/libpthread.so

#1 0x002aae05c12a9f in new_sem_wait_slow.constprop.0 () from /lib64/libpthrd.so

#2 0x002aae05c12b3b in sem_wait@@GLIBC 2.2.5 ()from /lib64/libpthread.so.0

#3 0x0000000068a8e2 in PGSemaphoreLock()

#4 0x000000006f2c34 in LWLockAcquire ()

#5 0x00000000960se1 in LockBufferForcleanup ()

#6 0x00000000964930 in btvacuumpage()

#7 0x000000004c423f in btvacuumscan()

#8 0x0000000066438e in btbulkdelete()

#9 0x000009005d3ecl in lazy_vacuum_index ()

#10 0x00000060654da5 in Lazy_vacuum_rel ()

#11 0x0009000095d29bs in vacuum_rel ()

#12 0x0009090005d3852 in vacuum()

#13 0x09000009068d690 in do_autovacuum ()

#14 0x00006606668daac in AutoVacworkerMain.isra.6 ()

#15 0x000099000068349 in StartAutoVacworker ()

#16 0x0000990000059aa in sigusr1_handler ()

#17 <signal handler called>

#18 0x0002aae7e5d783 in seLect_nocancel () from /Lib64/libc.so.6

#19 0x000000000478ba inserverLoop ()

#20 0x00000006669559 in PostmasterMain ()

#21 0x0096006047972b in main ()

可以看到当前进程通过LWLockAcquire 函数申请LWlock,尝试访问临界数据。目前在等锁,pg中LWlock是通过PGsemaphore实现(底层调用sem_wait等接口),最终调入futex接口进行sleep,等待临界资源可操作。

进程strace信息:

strace -p 202623

strace: Process 202623 attached

futex(0x2aae0f901df8, FUTEX_WAIT, 0, NULL^Cstrace: Process 202623 detached

<detached ...>

使用futex是为了解决传统semaphore不必要的系统调用造成大量的性能损耗,具体可参考:Futex设计与实现

2.PG_WAIT_IPC 类的Bgworkershutdown事件

一个select查询,当前的等待事件类型为IPC,等待事件为Bgworkershutdown

-[ RECORD 1 ]---+----------------------------------------------------------------

pid | 20262

wait_event_type | IPC

wait_event | Bgworkershutdown

query | select sum(mem_used) from qsump_pacloud_oscginfo_activity_detail_info_day

stack信息:

[postgres@postgres_zabbix ~]$ pstack 20262

#0 0x00002aae97066903 in _epoll_wait nocancel () from /Lib64/libc.so.6

#1 0x00000000006e156e in WaitEventsetWait ()

#2 0x00000000006e1b97 in WaitLatchOrSocket ()

#3 0x000000000068f89a in WaitForBackgroundworkerShutdown ()

#4 0x00000000004ddbde in WaitForParallelworkersToExit.isra.1 ()

#5 0x00000000004de76d in DestroyParallelContext ()

#6 0x00000000004dec6b in AtEoxact_Parallel ()

#7 0x00000000004e8997 in AbortTransaction()

#8 0x00000000004e8fc5 in AbortCurrentTransaction()

#9 0x0000000000701501 in PostgresMain()

#10 0x0000000000478cdf in ServerLoop ()

#11 0x000000000069c559 in PostmasterMain ()

#12 0x000000000047972b in Main ()

[postgres@postgres_zabbix ~]$

从stack可以看到,

#8行 该select 进程当前在进行事务回滚

#5行 准备销毁ParallelContext

#3行 等待后台并行进程shutdown

#0行 进入epoll_wait中观察list链表,并sleep

ps可以看到目前20262有两个并行进程20273 ,202734

[postgres@postgres_zabbix ~]$ ps -ef|grep 20262| grep -v grep

postgres 20262 20260 0 May18? 00:00:00 postgres: pg12: pguser postgres 127.0.0.1(35442) SELECT

postgres 20273 20260 0 May18? 00:00:00 postgres: pg12: bgworker: parat 00:00:00 postgres: pg12: bgworker: parallel worker for PID 20262

postgres 20274 20260 0 May18? 00:00:00 postgres: pg12: bgworker: parat 00:00:00 postgres: pg12: bgworker: parallel worker for PID 20262

[postgres@postgres_zabbix ~]$

不进行详细的代码分析了,大致场景为查询进程事务回滚,在等待并行进程退出并返回结果。也就是目前是进程间通信状态,因此等待事件的类型为IPC,事件就是Bgworkershutdown

3.PG_WAIT_ACTIVITY 类型的CheckpointerMain事件

4.PG_WAIT_CLIENT 类型的ClientRead 事件

示例3和4请参考文章最后提到的另一篇博文

三、总结

通过以上的例子可以发现,PostgreSQL的等待事件,其实就是根据各种使用场景,自定义事件和类型,最终的“等待”基本是通过封装epoll、 futex等系统接口实现的。

特别是epoll的使用场景最多,了解epoll相关可以参考之前记录的一篇博文:《PostgreSQL中的io多路复用–select和epoll实现》