MTCNN人脸检测及人脸关键点提取(学习记录)

我看了很多关于MTCNN框架的文章,但基本上都是一概而过,本文章记录MTCNN每一步的流程及附上注释的代码。

MTCNN框架主要由三大子网络组成,即P-Net,R-Net,O-Net。

三大子网络的区别:P-Net最后没有全连接层,而是卷积层代替全连接层,类似于FCN(全连接神经网络),目的是最后一层的卷积层是由 带有人脸概率的小方格组成,而每一小方格都对应原始图像中的某一区域,属于像素级别的识别。而R-Net,O-Net最后由全连接层组成,为图像级别的识别,即对整幅图像识别。

具体流程:

原始图像:

第一阶段:

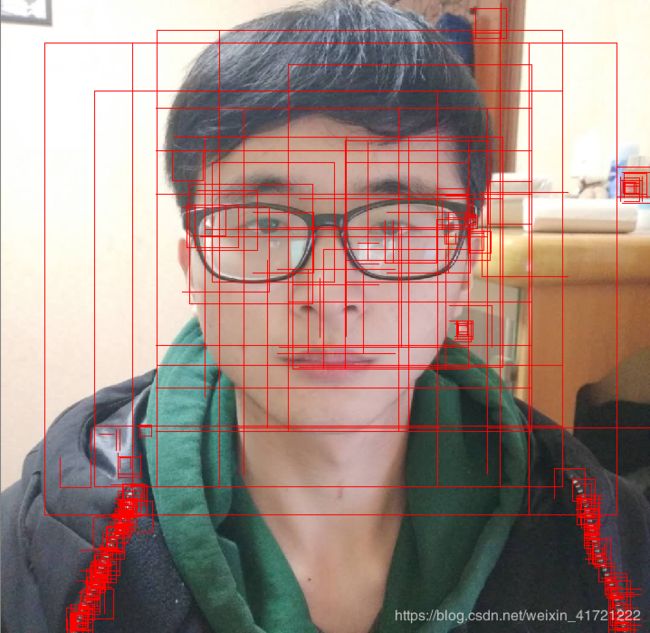

1:首先图像金字塔将原始图像放缩到不同尺度,然后将不同尺度的图像输入给Pnet网络,目的是为了可以检测到不同大小的人脸,从而实现多尺度目标检测。

2:P-Net输出最后一层的卷积层的每个小方格预测到的人脸概率以及预测到的边框坐标偏移量。注意:此时是所有小方格的人脸概率,可能存在为0,也可能存在为1。

3:将第二步得到数据作为输入,首先提取出人脸概率大于设定阈值的小方格,作一个初步过滤。因为有不同尺度下的小方格,而每个小方格在原始图像都代表一个区域,所以找到不同尺度下的小方格在原始图像中的区域。(会得到很多的人脸区域)

4:把初步得到可能是人脸的图片进行NMS非极大抑制。NMS就是待测图片中人脸的区域与实际上人脸框的面积重合比例(IOU)

5:NMS得到的图片再经行边框回归,边框回归是待测图片的人脸框与实际人脸框的位置相比较,对待测图片人脸框位置作调整,使它跟接近真是人脸框的位置。

以上为Pnet网络检测的基本流程,Pnet最后层网络是卷积层,Pnet输出结果是经过筛选和位置调整的人脸图片,注意输出还是图片,其文档保存图片路径,人脸得分,边框坐标。

第二阶段:

1:把Pnet网络输出的图片作为Rnet网络的输入,Rnet网络输出每张图片的人脸得分和边框坐标偏移量(Pnet输出的是图片某个区域的得分,Rnet输出的是整张图片的得分)

2:排除得分小于阈值的图片

3:NMS非极大抑制

4:边框回归

以上为Rnet网络的流程。Rnet是对人脸框的进一步过滤和调整边框位置

第三阶段:

Onet与Rnet基本流程大致一样,只是增加了5个人脸关键点的预测及位置调整

该文章是直接检测图片,也就是直接加载的别人训练好的模型,只需要把训练好的模型det1.npy,det2.npy,det3.npy加载即可

关于如何训练出这三个模型,会在之后的文章中写到。

1:detect_face.py

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

from six import string_types, iteritems

import numpy as np

import tensorflow as tf

#from math import floor

import cv2

import os

def layer(op):

#定义可组合网络层的装饰器

def layer_decorated(self, *args, **kwargs):

#以.conv(3, 3, 10, 1, 1, padding='VALID', relu=False, name='conv1')为例

#args为(3, 3, 10, 1, 1)

#kwargs为(padding='VALID', relu=False, name='conv1')

# 如果没有提供name,则自动设置名称。

name = kwargs.setdefault('name', self.get_unique_name(op.__name__))#上面为例,此时name=conv1

#.setdefault字典插入

# 计算出层的输入

if len(self.terminals) == 0:

raise RuntimeError('No input variables found for layer %s.' % name)

elif len(self.terminals) == 1:

layer_input = self.terminals[0]

else:

layer_input = list(self.terminals)

# 执行操作并得到输出。

layer_output = op(self, layer_input, *args, **kwargs)

# 添加到层lut。

self.layers[name] = layer_output

# 这个输出现在是下一层的输入。

self.feed(layer_output)

return self

return layer_decorated

class Network(object):

def __init__(self, inputs, trainable=True):

#此网络的输入节点

self.inputs = inputs

print("self.inputs",self.inputs)

# 当前的终端节点列表

self.terminals = []

# 从层名称到层的映射

self.layers = dict(inputs)

# 如果为真,则将结果变量设置为可训练

self.trainable = trainable

self.setup()

def setup(self):

"""构建网络. """

raise NotImplementedError('必须由子类实现.')

def load(self, data_path, session, ignore_missing=False):

"""加载网络重量。数据_路径:数字序列化网络权值会话的路径:当前的张力流会话忽略不计:如果为真,则忽略缺少层的序列化权值.

"""

data_dict = np.load(data_path, encoding='latin1').item() #内核:禁用=无成员

for op_name in data_dict:

with tf.variable_scope(op_name, reuse=True):

for param_name, data in iteritems(data_dict[op_name]):

try:

var = tf.get_variable(param_name)

session.run(var.assign(data))

except ValueError:

if not ignore_missing:

raise

def feed(self, *args):

"""通过替换终端节点来设置下一个操作的输入。参数可以是层名称,也可以是实际的层。

"""

print("args=",args)

assert len(args) != 0

#assert A,如果A为false,则说明程序已经处于不正确的状态下,系统将给出警告并且退出。而这里args=data字节为1,应该永不为0

self.terminals = []

for fed_layer in args:#args内容为元组,fed_layer内容不变,格式为字符串

if isinstance(fed_layer, string_types):#isinstance(a,int),检测a是否属于int类

try:

fed_layer = self.layers[fed_layer]#把内容转换成字典类型

print("1111fed_layer=",fed_layer)

except KeyError:

raise KeyError('Unknown layer name fed: %s' % fed_layer)

self.terminals.append(fed_layer)#集合一块

return self

def get_output(self):#该代码没有用到

"""输出当前神经网络层."""

return self.terminals[-1]#self.terminals为字典,[-1]代表最后一个

def get_unique_name(self, prefix):

"""

返回给定前缀的索引后缀唯一名称。这用于基于类型前缀的自动生成层名称

"""

ident = sum(t.startswith(prefix) for t, _ in self.layers.items()) + 1

return '%s_%d' % (prefix, ident)

def make_var(self, name, shape):

"""创建变量"""

return tf.get_variable(name, shape, trainable=self.trainable)

def validate_padding(self, padding):

"""填充类型"""

assert padding in ('SAME', 'VALID')

@layer

def conv(self,

inp,#每层网络的模型数据

k_h,

k_w,

c_o,

s_h,

s_w,

name,

relu=True,

padding='SAME',

group=1,

biased=True):

print("c_o=",c_o)

self.validate_padding(padding)#填充类型为SAME

#c_i输入该卷积的通道数,c_o输出该卷积的通道数

c_i = int(inp.get_shape()[-1])

print("每层神经网络通道数=",c_i)

assert c_i % group == 0#当输入或者输出通道数为0时,发出警报

assert c_o % group == 0

#卷积。convolve=lambda i, k相当于convolve(i,k)需要被调用

convolve = lambda i, k: tf.nn.conv2d(i, k, [1, s_h, s_w, 1], padding=padding)

with tf.variable_scope(name) as scope:#空间命名,用于可视化

kernel = self.make_var('weights', shape=[k_h, k_w, c_i // group, c_o])#创建权重变量,模板大小为shape

output = convolve(inp, kernel)#调用前几句的convolve开始卷积

# 加入偏差

if biased:

biases = self.make_var('biases', [c_o])#创建偏差变量,模板大小为[c_o]

output = tf.nn.bias_add(output, biases)

#激活函数

if relu:

output = tf.nn.relu(output, name=scope.name)

return output

@layer

def prelu(self, inp, name):#relu中的学习率α仅仅是一个预先设定好的超参数。PReLU 中的参数α是一个需要学习的参数

with tf.variable_scope(name):

i = int(inp.get_shape()[-1])

alpha = self.make_var('alpha', shape=(i,))

output = tf.nn.relu(inp) + tf.multiply(alpha, -tf.nn.relu(-inp))

return output#输出PRelu值

@layer

def max_pool(self, inp, k_h, k_w, s_h, s_w, name, padding='SAME'):

self.validate_padding(padding)#填充方式

return tf.nn.max_pool(inp,

ksize=[1, k_h, k_w, 1],#模板尺寸k_h*k_w

strides=[1, s_h, s_w, 1],#步长s_h*s_w

padding=padding,

name=name)

@layer

def fc(self, inp, num_out, name, relu=True):

with tf.variable_scope(name):

input_shape = inp.get_shape()#得到模型数据的维度。

#Rnet初始为(7,3,3,64)

#onet初始为(7,3,3,128)

#7代表全连接输出7个值。分别f1 c1 f2 dx1 dy1 dx2 dy2,f1标记该样本是否参与分类,f2标记该样本是否参与回归

#c1是样本类别,dx dy为人脸框左上角坐标和右下角坐标

if input_shape.ndims == 4:

dim = 1

for d in input_shape[1:].as_list():

#input_shape[1:]得到除了第一个外,剩下的所有。第一次input_shape为(7,3,3,64),则现在为(3,3,64)

#.as_list()把元组变成列表,即()变成[]

#d就为一个数字

dim *= int(d)#计算出序列化的长度

feed_in = tf.reshape(inp, [-1, dim])#修改图像数据尺寸,即序列化,为全连接做准备

else:

feed_in, dim = (inp, input_shape[-1].value)

weights = self.make_var('weights', shape=[dim, num_out])#定义全连接的权重和尺寸

biases = self.make_var('biases', [num_out])#定义全连接的偏差和尺寸

op = tf.nn.relu_layer if relu else tf.nn.xw_plus_b

#tf.nn.xw_plus_b(x, weights, biases, name=None)相当于matmul(x, weights) + biases

#tf.nn.relu_layer(x, weights, biases, name=None)相当于tf.nn.relu(tf.matmul(x, weights) + biases, name=None)

fc = op(feed_in, weights, biases, name=name)#全连接计算

return fc

@layer

def softmax(self, target, axis, name=None):

#target为softmax网络层中保存好的模型数据

max_axis = tf.reduce_max(target, axis, keepdims=True)

target_exp = tf.exp(target-max_axis)

normalize = tf.reduce_sum(target_exp, axis, keepdims=True)

softmax = tf.div(target_exp, normalize, name)

return softmax

class PNet(Network):#PNet为子类,Network为父类

def setup(self):

(self.feed('data') #子类调用父类的.feed(),传入参数为字符串data

.conv(3, 3, 10, 1, 1, padding='VALID', relu=False, name='conv1')

#3,3:卷积模板大小 10:该网络层输出的通道数 1,1:移动的步长

.prelu(name='PReLU1')

.max_pool(2, 2, 2, 2, name='pool1')

.conv(3, 3, 16, 1, 1, padding='VALID', relu=False, name='conv2')

.prelu(name='PReLU2')

.conv(3, 3, 32, 1, 1, padding='VALID', relu=False, name='conv3')

.prelu(name='PReLU3')

.conv(1, 1, 2, 1, 1, relu=False, name='conv4-1')

.softmax(3,name='prob1'))

(self.feed('PReLU3') #pylint: disable=no-value-for-parameter

.conv(1, 1, 4, 1, 1, relu=False, name='conv4-2'))

class RNet(Network):

def setup(self):

(self.feed('data') #pylint: disable=no-value-for-parameter, no-member

.conv(3, 3, 28, 1, 1, padding='VALID', relu=False, name='conv1')

.prelu(name='prelu1')

.max_pool(3, 3, 2, 2, name='pool1')

.conv(3, 3, 48, 1, 1, padding='VALID', relu=False, name='conv2')

.prelu(name='prelu2')

.max_pool(3, 3, 2, 2, padding='VALID', name='pool2')

.conv(2, 2, 64, 1, 1, padding='VALID', relu=False, name='conv3')

.prelu(name='prelu3')

.fc(128, relu=False, name='conv4')#全连接层

.prelu(name='prelu4')

.fc(2, relu=False, name='conv5-1')

.softmax(1,name='prob1'))

(self.feed('prelu4') #pylint: disable=no-value-for-parameter

.fc(4, relu=False, name='conv5-2'))

class ONet(Network):

def setup(self):

(self.feed('data') #pylint: disable=no-value-for-parameter, no-member

.conv(3, 3, 32, 1, 1, padding='VALID', relu=False, name='conv1')

.prelu(name='prelu1')

.max_pool(3, 3, 2, 2, name='pool1')

.conv(3, 3, 64, 1, 1, padding='VALID', relu=False, name='conv2')

.prelu(name='prelu2')

.max_pool(3, 3, 2, 2, padding='VALID', name='pool2')

.conv(3, 3, 64, 1, 1, padding='VALID', relu=False, name='conv3')

.prelu(name='prelu3')

.max_pool(2, 2, 2, 2, name='pool3')

.conv(2, 2, 128, 1, 1, padding='VALID', relu=False, name='conv4')

.prelu(name='prelu4')

.fc(256, relu=False, name='conv5')

.prelu(name='prelu5')

.fc(2, relu=False, name='conv6-1')

.softmax(1, name='prob1'))

(self.feed('prelu5') #pylint: disable=no-value-for-parameter

.fc(4, relu=False, name='conv6-2'))

(self.feed('prelu5') #pylint: disable=no-value-for-parameter

.fc(10, relu=False, name='conv6-3'))

def create_mtcnn(sess, model_path):

if not model_path:#if not None 代表执行

model_path,_ = os.path.split(os.path.realpath(__file__))

#os.path.realpath(__file__)为脚本的绝对路径

#os.path.split()分割路径

print("model_path=",model_path)

with tf.variable_scope('pnet'):

data = tf.placeholder(tf.float32, (None,None,None,3), 'input')#PNet在训练阶段的输入尺寸为12*12

pnet = PNet({'data':data})#类

print("pnet",pnet)

pnet.load(os.path.join(model_path, 'det1.npy'), sess)#通过加载存储的.npy到对应的网络模型中恢复网络中的参数。

print("pnet.load",pnet)

# pnet.load为子类调用父类中的load定义

with tf.variable_scope('rnet'):

data = tf.placeholder(tf.float32, (None,24,24,3), 'input')#RNet的输入尺寸为24*24

rnet = RNet({'data':data})

#print("rnet",rnet)

rnet.load(os.path.join(model_path, 'det2.npy'), sess)

#print("rnet.load",rnet)

with tf.variable_scope('onet'):

data = tf.placeholder(tf.float32, (None,48,48,3), 'input')#ONet的输入尺寸为48*48

onet = ONet({'data':data})

#print("onet",onet)

onet.load(os.path.join(model_path, 'det3.npy'), sess)

#print("onet.load",onet)

pnet_fun = lambda img : sess.run(('pnet/conv4-2/BiasAdd:0', 'pnet/prob1:0'), feed_dict={'pnet/input:0':img})

rnet_fun = lambda img : sess.run(('rnet/conv5-2/conv5-2:0', 'rnet/prob1:0'), feed_dict={'rnet/input:0':img})

onet_fun = lambda img : sess.run(('onet/conv6-2/conv6-2:0', 'onet/conv6-3/conv6-3:0', 'onet/prob1:0'), feed_dict={'onet/input:0':img})

#lambda函数是匿名的:所谓匿名函数,通俗地说就是没有名字的函数

#例如:lambda x, y: x*y;函数输入是x和y,输出是它们的积x*y

tensor_variables = tf.global_variables()

for variable in tensor_variables:

print(str(variable))

return pnet_fun, rnet_fun, onet_fun

def detect_face(img, minsize, pnet, rnet, onet, threshold, factor):

""" 检测图像中的人脸,并返回包围框和它们的点。.

img: input image

minsize: 最小人脸大小

pnet, rnet, onet: caffemodel

threshold: threshold=[th1, th2, th3], 阈值

factor: 用于创建一个用于检测图像中人脸大小的缩放金字塔的因子

"""

points=np.empty(0)#

#创建图像金字塔

#初始部分除了一些判断语句外,最重要的是生成一个scales列表,这个列表中放的就是一系列的scale值,

#表示依次将原图缩小成原图的scale倍,从而组成图像金字塔。首先minl是输入图像的长或宽的最小值,

#12表示最小的检测尺寸;minsize表示最小的人脸尺寸

factor_count=0#初始化缩放因子的个数

h=img.shape[0]#图像行

w=img.shape[1]#图像列

minl=np.amin([h, w])#指定轴,则返回指定轴中的相应的值,不指定,则返回整个数组中某一个值

#A = np.array([[1,2,3,4,5],[6,7,8,9,0]])

#print('0轴上最小的值:',np.amin(A,axis=0)) aixs=0代表列轴

#输出 [1,2,3,4,0]

m=12.0/minsize

minl=minl*m

scales=[]

while minl>=12:

scales += [m*np.power(factor, factor_count)]#np.power()将第一个输入数组中的元素作为底数,计算它与第二个输入数组相应元素的幂

minl = minl*factor

factor_count += 1

print("一共%d个缩放因子"%factor_count)

#第一阶段。把图像金字塔一次放入Pnet网络。得到包含9个数的total_boxes

#total_boxes[0],[1],[2],[3]分别为边框的左上角和右下角坐标

#total_boxes[4]为人脸得分

#total_boxes[5][6][7][8]分别为边框回归的对应total_boxes[0],[1],[2],[3]的偏移量

#第一阶段部分是根据输入的scales列表,生成total_boxes这个二维的numpy ndarray

#注意:Pnet是FCN类型的全连接网络

total_boxes=np.empty((0,9))#建立0行9列的二维数组

p_boxes=[]

number=0

for scale in scales:

number=number+1

hs=int(np.ceil(h*scale))#变换后的高。np.ceil取整

ws=int(np.ceil(w*scale))#变换后的宽

print("放缩后图像的长宽:",hs,ws)

im_data = imresample(img, (hs, ws))#对图片进行缩小放大

im_data = (im_data-127.5)*0.0078125

img_x = np.expand_dims(im_data, 0)#np.expand_dims增加维度

#np.expand_dims(3) 输出[3]

#np.expand_dims([1,2], axis=0) 输出[[1,2]]

img_y = np.transpose(img_x, (0,2,1,3))#转置

out = pnet(img_y)#输入pnet网络中,获得输出

out0 = np.transpose(out[0], (0,2,1,3))#预测边框坐标偏置

out1 = np.transpose(out[1], (0,2,1,3))#二分类,即为人脸的概率

#输入的是最后卷积层的所有小方格的人脸概率和预测的边框坐标偏置

#输出:找到最后卷积层中大于人脸概率的小方格在原图像对应的边框范围。以及此时对应的预测边框偏移

boxes, _ = generateBoundingBox(out1[0,:,:,1].copy(), out0[0,:,:,:].copy(), scale, threshold[0])

#把所有得到的二维数据按列合并

if(number==1):

p_boxes=boxes

else:

p_boxes = np.vstack((p_boxes,boxes))#快速将两个矩阵或数组合并成一个数组,数组纵向合并

# 对预测出的预测框进行nms,筛选预测框

pick = nms(boxes.copy(), 0.5, 'Union')#pick为每层金字塔经过nms后需要保留边框的标签值

print("每层金字塔得到的nms人脸框=",pick.size)

if boxes.size>0 and pick.size>0:

boxes = boxes[pick,:]#保留pick行及该行所有列。为nms后边框数据

total_boxes = np.append(total_boxes, boxes, axis=0)#合并二维数组

pnet_nms_total_boxes=total_boxes

#边框回归

numbox = total_boxes.shape[0]

if numbox>0:

pick = nms(total_boxes.copy(), 0.7, 'Union')###提高阈值,进一步进行nms

total_boxes = total_boxes[pick,:]

regw = total_boxes[:,2]-total_boxes[:,0]

regh = total_boxes[:,3]-total_boxes[:,1]

qq1 = total_boxes[:,0]+total_boxes[:,5]*regw#修改左上角坐标X值

qq2 = total_boxes[:,1]+total_boxes[:,6]*regh

qq3 = total_boxes[:,2]+total_boxes[:,7]*regw

qq4 = total_boxes[:,3]+total_boxes[:,8]*regh

total_boxes = np.transpose(np.vstack([qq1, qq2, qq3, qq4, total_boxes[:,4]]))#依次为修正后的左上角,右下角坐标及该部分得分

total_boxes = rerec(total_boxes.copy())#使预测框变为正方形

total_boxes[:,0:4] = np.fix(total_boxes[:,0:4]).astype(np.int32)#取整

Pnet_end_total_boxes=total_boxes

dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph = pad(total_boxes.copy(), w, h)#对坐标进行修剪,使其不超出图片大小

#y,ey 为原图像中每个边框的上y值与下y值

#x, ex 为原图像中每个边框的左x值与右x值

#dy,edy 为从原图像中抠出边框,使边框成为新的输入图像。为新输入图像的上y值与下y值。上y为0,下y为原边框的高度

#dx,edx 为从原图像中抠出边框,使边框成为新的输入图像。为新输入图像的左x值与右x值。左x为0,右x为原边框的宽度

#第二阶段

#此时的输入必须为24*24大小,输入的图像为stage1产生的目标区域抠出来,输入到stage2(Rnet)中

#预测网络输出同样只有得分与预测框坐标修正,无关键点信息,与第一步很相似。

#注意rnet最后是全连接层,是对整幅图像进行分类,而不像pnet是对最后卷积层的每个小方格进行分类

numbox = total_boxes.shape[0]#输入图像的个数

if numbox>0:

tempimg = np.zeros((24,24,3,numbox))#初始化24*24的输入图像

for k in range(0,numbox):

tmp = np.zeros((int(tmph[k]),int(tmpw[k]),3))#初始化每个待检测的人脸边框图像

tmp[dy[k]-1:edy[k],dx[k]-1:edx[k],:] = img[y[k]-1:ey[k],x[k]-1:ex[k],:]#从原图像抠出待输出图像

if tmp.shape[0]>0 and tmp.shape[1]>0 or tmp.shape[0]==0 and tmp.shape[1]==0:

tempimg[:,:,:,k] = imresample(tmp, (24, 24))#把图像放缩到24*24

else:

return np.empty()

tempimg = (tempimg-127.5)*0.0078125

tempimg1 = np.transpose(tempimg, (3,1,0,2))

out = rnet(tempimg1)#输入rnet网络中,获得输出

out0 = np.transpose(out[0])#预测边框坐标偏移

out1 = np.transpose(out[1])#预测得分

score = out1[1,:]

ipass = np.where(score>threshold[1])#人脸得分大于阈值的坐标

##第一步中筛选出的预测框坐标。此时的坐标为原图中的坐标偏移,并非resize之后的坐标偏置。即直接将偏移加到原图中坐标即可

#因为Rnet输入图像为Pnet边框人脸图像,又因为Rnet第一步仅仅是判断这些人脸框图像得分是否大于阈值

total_boxes = np.hstack([total_boxes[ipass[0],0:4].copy(), np.expand_dims(score[ipass].copy(),1)])

rnet_thresh_boxes=total_boxes

mv = out0[:,ipass[0]]#第二步得出的偏移值

if total_boxes.shape[0]>0:

pick = nms(total_boxes, 0.7, 'Union')#非极大抑制

total_boxes = total_boxes[pick,:]

rnet_nms_boxes=total_boxes

total_boxes = bbreg(total_boxes.copy(), np.transpose(mv[:,pick]))#加偏移后的坐标

total_boxes = rerec(total_boxes.copy())#使矩形框变成正方形

r_net=total_boxes

numbox = total_boxes.shape[0]

#第三阶段Onet网络

if numbox>0:

total_boxes = np.fix(total_boxes).astype(np.int32)

dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph = pad(total_boxes.copy(), w, h)

tempimg = np.zeros((48,48,3,numbox))

for k in range(0,numbox):

tmp = np.zeros((int(tmph[k]),int(tmpw[k]),3))

tmp[dy[k]-1:edy[k],dx[k]-1:edx[k],:] = img[y[k]-1:ey[k],x[k]-1:ex[k],:]

if tmp.shape[0]>0 and tmp.shape[1]>0 or tmp.shape[0]==0 and tmp.shape[1]==0:

tempimg[:,:,:,k] = imresample(tmp, (48, 48))

else:

return np.empty()

tempimg = (tempimg-127.5)*0.0078125

tempimg1 = np.transpose(tempimg, (3,1,0,2))

out = onet(tempimg1)

out0 = np.transpose(out[0])

out1 = np.transpose(out[1])

out2 = np.transpose(out[2])

score = out2[1,:]

points = out1

ipass = np.where(score>threshold[2])

points = points[:,ipass[0]]

total_boxes = np.hstack([total_boxes[ipass[0],0:4].copy(), np.expand_dims(score[ipass].copy(),1)])

mv = out0[:,ipass[0]]

w = total_boxes[:,2]-total_boxes[:,0]+1

h = total_boxes[:,3]-total_boxes[:,1]+1

points[0:5,:] = np.tile(w,(5, 1))*points[0:5,:] + np.tile(total_boxes[:,0],(5, 1))-1

points[5:10,:] = np.tile(h,(5, 1))*points[5:10,:] + np.tile(total_boxes[:,1],(5, 1))-1

if total_boxes.shape[0]>0:

total_boxes = bbreg(total_boxes.copy(), np.transpose(mv))

pick = nms(total_boxes.copy(), 0.7, 'Min')

total_boxes = total_boxes[pick,:]

points = points[:,pick]

return total_boxes, points,p_boxes,pnet_nms_total_boxes,Pnet_end_total_boxes,rnet_thresh_boxes,rnet_nms_boxes,r_net

#没有用到

def bulk_detect_face(images, detection_window_size_ratio, pnet, rnet, onet, threshold, factor):

"""Detects faces in a list of images

images: list containing input images

detection_window_size_ratio: ratio of minimum face size to smallest image dimension

pnet, rnet, onet: caffemodel

threshold: threshold=[th1 th2 th3], th1-3 are three steps's threshold [0-1]

factor: the factor used to create a scaling pyramid of face sizes to detect in the image.

"""

all_scales = [None] * len(images)

images_with_boxes = [None] * len(images)

for i in range(len(images)):

images_with_boxes[i] = {'total_boxes': np.empty((0, 9))}

# create scale pyramid

for index, img in enumerate(images):

all_scales[index] = []

h = img.shape[0]

w = img.shape[1]

minsize = int(detection_window_size_ratio * np.minimum(w, h))

factor_count = 0

minl = np.amin([h, w])

if minsize <= 12:

minsize = 12

m = 12.0 / minsize

minl = minl * m

while minl >= 12:

all_scales[index].append(m * np.power(factor, factor_count))

minl = minl * factor

factor_count += 1

# # # # # # # # # # # # #

# first stage - fast proposal network (pnet) to obtain face candidates

# # # # # # # # # # # # #

images_obj_per_resolution = {}

# TODO: use some type of rounding to number module 8 to increase probability that pyramid images will have the same resolution across input images

for index, scales in enumerate(all_scales):

h = images[index].shape[0]

w = images[index].shape[1]

for scale in scales:

hs = int(np.ceil(h * scale))

ws = int(np.ceil(w * scale))

if (ws, hs) not in images_obj_per_resolution:

images_obj_per_resolution[(ws, hs)] = []

im_data = imresample(images[index], (hs, ws))

im_data = (im_data - 127.5) * 0.0078125

img_y = np.transpose(im_data, (1, 0, 2)) # caffe uses different dimensions ordering

images_obj_per_resolution[(ws, hs)].append({'scale': scale, 'image': img_y, 'index': index})

for resolution in images_obj_per_resolution:

images_per_resolution = [i['image'] for i in images_obj_per_resolution[resolution]]

outs = pnet(images_per_resolution)

for index in range(len(outs[0])):

scale = images_obj_per_resolution[resolution][index]['scale']

image_index = images_obj_per_resolution[resolution][index]['index']

out0 = np.transpose(outs[0][index], (1, 0, 2))

out1 = np.transpose(outs[1][index], (1, 0, 2))

boxes, _ = generateBoundingBox(out1[:, :, 1].copy(), out0[:, :, :].copy(), scale, threshold[0])

# inter-scale nms

pick = nms(boxes.copy(), 0.5, 'Union')

if boxes.size > 0 and pick.size > 0:

boxes = boxes[pick, :]

images_with_boxes[image_index]['total_boxes'] = np.append(images_with_boxes[image_index]['total_boxes'],

boxes,

axis=0)

for index, image_obj in enumerate(images_with_boxes):

numbox = image_obj['total_boxes'].shape[0]

if numbox > 0:

h = images[index].shape[0]

w = images[index].shape[1]

pick = nms(image_obj['total_boxes'].copy(), 0.7, 'Union')

image_obj['total_boxes'] = image_obj['total_boxes'][pick, :]

regw = image_obj['total_boxes'][:, 2] - image_obj['total_boxes'][:, 0]

regh = image_obj['total_boxes'][:, 3] - image_obj['total_boxes'][:, 1]

qq1 = image_obj['total_boxes'][:, 0] + image_obj['total_boxes'][:, 5] * regw

qq2 = image_obj['total_boxes'][:, 1] + image_obj['total_boxes'][:, 6] * regh

qq3 = image_obj['total_boxes'][:, 2] + image_obj['total_boxes'][:, 7] * regw

qq4 = image_obj['total_boxes'][:, 3] + image_obj['total_boxes'][:, 8] * regh

image_obj['total_boxes'] = np.transpose(np.vstack([qq1, qq2, qq3, qq4, image_obj['total_boxes'][:, 4]]))

image_obj['total_boxes'] = rerec(image_obj['total_boxes'].copy())

image_obj['total_boxes'][:, 0:4] = np.fix(image_obj['total_boxes'][:, 0:4]).astype(np.int32)

dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph = pad(image_obj['total_boxes'].copy(), w, h)

numbox = image_obj['total_boxes'].shape[0]

tempimg = np.zeros((24, 24, 3, numbox))

if numbox > 0:

for k in range(0, numbox):

tmp = np.zeros((int(tmph[k]), int(tmpw[k]), 3))

tmp[dy[k] - 1:edy[k], dx[k] - 1:edx[k], :] = images[index][y[k] - 1:ey[k], x[k] - 1:ex[k], :]

if tmp.shape[0] > 0 and tmp.shape[1] > 0 or tmp.shape[0] == 0 and tmp.shape[1] == 0:

tempimg[:, :, :, k] = imresample(tmp, (24, 24))

else:

return np.empty()

tempimg = (tempimg - 127.5) * 0.0078125

image_obj['rnet_input'] = np.transpose(tempimg, (3, 1, 0, 2))

# # # # # # # # # # # # #

# second stage - refinement of face candidates with rnet

# # # # # # # # # # # # #

bulk_rnet_input = np.empty((0, 24, 24, 3))

for index, image_obj in enumerate(images_with_boxes):

if 'rnet_input' in image_obj:

bulk_rnet_input = np.append(bulk_rnet_input, image_obj['rnet_input'], axis=0)

out = rnet(bulk_rnet_input)

out0 = np.transpose(out[0])

out1 = np.transpose(out[1])

score = out1[1, :]

i = 0

for index, image_obj in enumerate(images_with_boxes):

if 'rnet_input' not in image_obj:

continue

rnet_input_count = image_obj['rnet_input'].shape[0]

score_per_image = score[i:i + rnet_input_count]

out0_per_image = out0[:, i:i + rnet_input_count]

ipass = np.where(score_per_image > threshold[1])

image_obj['total_boxes'] = np.hstack([image_obj['total_boxes'][ipass[0], 0:4].copy(),

np.expand_dims(score_per_image[ipass].copy(), 1)])

mv = out0_per_image[:, ipass[0]]

if image_obj['total_boxes'].shape[0] > 0:

h = images[index].shape[0]

w = images[index].shape[1]

pick = nms(image_obj['total_boxes'], 0.7, 'Union')

image_obj['total_boxes'] = image_obj['total_boxes'][pick, :]

image_obj['total_boxes'] = bbreg(image_obj['total_boxes'].copy(), np.transpose(mv[:, pick]))

image_obj['total_boxes'] = rerec(image_obj['total_boxes'].copy())

numbox = image_obj['total_boxes'].shape[0]

if numbox > 0:

tempimg = np.zeros((48, 48, 3, numbox))

image_obj['total_boxes'] = np.fix(image_obj['total_boxes']).astype(np.int32)

dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph = pad(image_obj['total_boxes'].copy(), w, h)

for k in range(0, numbox):

tmp = np.zeros((int(tmph[k]), int(tmpw[k]), 3))

tmp[dy[k] - 1:edy[k], dx[k] - 1:edx[k], :] = images[index][y[k] - 1:ey[k], x[k] - 1:ex[k], :]

if tmp.shape[0] > 0 and tmp.shape[1] > 0 or tmp.shape[0] == 0 and tmp.shape[1] == 0:

tempimg[:, :, :, k] = imresample(tmp, (48, 48))

else:

return np.empty()

tempimg = (tempimg - 127.5) * 0.0078125

image_obj['onet_input'] = np.transpose(tempimg, (3, 1, 0, 2))

i += rnet_input_count

# # # # # # # # # # # # #

# third stage - further refinement and facial landmarks positions with onet

# # # # # # # # # # # # #

bulk_onet_input = np.empty((0, 48, 48, 3))

for index, image_obj in enumerate(images_with_boxes):

if 'onet_input' in image_obj:

bulk_onet_input = np.append(bulk_onet_input, image_obj['onet_input'], axis=0)

out = onet(bulk_onet_input)

out0 = np.transpose(out[0])

out1 = np.transpose(out[1])

out2 = np.transpose(out[2])

score = out2[1, :]

points = out1

i = 0

ret = []

for index, image_obj in enumerate(images_with_boxes):

if 'onet_input' not in image_obj:

ret.append(None)

continue

onet_input_count = image_obj['onet_input'].shape[0]

out0_per_image = out0[:, i:i + onet_input_count]

score_per_image = score[i:i + onet_input_count]

points_per_image = points[:, i:i + onet_input_count]

ipass = np.where(score_per_image > threshold[2])

points_per_image = points_per_image[:, ipass[0]]

image_obj['total_boxes'] = np.hstack([image_obj['total_boxes'][ipass[0], 0:4].copy(),

np.expand_dims(score_per_image[ipass].copy(), 1)])

mv = out0_per_image[:, ipass[0]]

w = image_obj['total_boxes'][:, 2] - image_obj['total_boxes'][:, 0] + 1

h = image_obj['total_boxes'][:, 3] - image_obj['total_boxes'][:, 1] + 1

points_per_image[0:5, :] = np.tile(w, (5, 1)) * points_per_image[0:5, :] + np.tile(

image_obj['total_boxes'][:, 0], (5, 1)) - 1

points_per_image[5:10, :] = np.tile(h, (5, 1)) * points_per_image[5:10, :] + np.tile(

image_obj['total_boxes'][:, 1], (5, 1)) - 1

if image_obj['total_boxes'].shape[0] > 0:

image_obj['total_boxes'] = bbreg(image_obj['total_boxes'].copy(), np.transpose(mv))

pick = nms(image_obj['total_boxes'].copy(), 0.7, 'Min')

image_obj['total_boxes'] = image_obj['total_boxes'][pick, :]

points_per_image = points_per_image[:, pick]

ret.append((image_obj['total_boxes'], points_per_image))

else:

ret.append(None)

i += onet_input_count

return ret

# function [boundingbox] = bbreg(boundingbox,reg)

def bbreg(boundingbox,reg):

"""Calibrate bounding boxes"""

if reg.shape[1]==1:

reg = np.reshape(reg, (reg.shape[2], reg.shape[3]))

w = boundingbox[:,2]-boundingbox[:,0]+1

h = boundingbox[:,3]-boundingbox[:,1]+1

b1 = boundingbox[:,0]+reg[:,0]*w

b2 = boundingbox[:,1]+reg[:,1]*h

b3 = boundingbox[:,2]+reg[:,2]*w

b4 = boundingbox[:,3]+reg[:,3]*h

boundingbox[:,0:4] = np.transpose(np.vstack([b1, b2, b3, b4 ]))

return boundingbox

#产生边框

#功能:pnet网络最后卷积层中若某个小方格的概率大于人脸阈值,则需要找到这个小方格在原图像对应的边框区域坐标

def generateBoundingBox(imap, reg, scale, t):

stride=2

cellsize=12

print("reg1",reg)

imap = np.transpose(imap)

dx1 = np.transpose(reg[:,:,0])

dy1 = np.transpose(reg[:,:,1])

dx2 = np.transpose(reg[:,:,2])

dy2 = np.transpose(reg[:,:,3])

y, x = np.where(imap >= t)#筛选出大于阈值的坐标。因为每个小单元格有一个预测概率值,四个坐标偏移值 H/12,W/12,y,x可看成index

if y.shape[0]==1:

dx1 = np.flipud(dx1)#np.flipud()对数组进行上下翻转

dy1 = np.flipud(dy1)

dx2 = np.flipud(dx2)

dy2 = np.flipud(dy2)

score = imap[(y,x)]#对于阈值的人脸得分

reg = np.transpose(np.vstack([ dx1[(y,x)], dy1[(y,x)], dx2[(y,x)], dy2[(y,x)] ]))#满足条件的人脸image预测框的坐标偏移

if reg.size==0:

reg = np.empty((0,3))

print("reg2",reg)

bb = np.transpose(np.vstack([y,x]))#np.vstack除了在纵向叠加矩阵外,还有将列表转换成矩阵的功能

#q1,q2值应为在原图中每一个预测框的左上角,右下角坐标

q1 = np.fix((stride*bb+1)/scale)

q2 = np.fix((stride*bb+cellsize-1+1)/scale)

boundingbox = np.hstack([q1, q2, np.expand_dims(score,1), reg])#np.hstack除了在横向叠加矩阵外,还有将列表转换成矩阵的功能

return boundingbox, reg

# 对预测出的预测框进行nms,筛选预测框

def nms(boxes, threshold, method):

if boxes.size==0:

return np.empty((0,3))

x1 = boxes[:,0]#该图像金字塔层得到预测框的左上角X点坐标

y1 = boxes[:,1]

x2 = boxes[:,2]

y2 = boxes[:,3]

s = boxes[:,4]#该图像金字塔层得到是人脸的得分

area = (x2-x1+1) * (y2-y1+1)#预测框面积

I = np.argsort(s)#每层人脸得分为一个列表,np.argsort()从小到大为标签值

pick = np.zeros_like(s, dtype=np.int16)#np.zeros_like创造维度与s一样的全0列表,并为int类型

counter = 0

while I.size>0:#如果该图像金字塔层存在可能存在不人脸。如果不存在待检测人脸,那么I为空数据

i = I[-1]#列表中最后一个,即为最大概率值

pick[counter] = i

counter += 1

idx = I[0:-1]#除最大概率外,其他所有概率

#计算当前概率最大矩形框与其他矩形框的相交框的坐标,

xx1 = np.maximum(x1[i], x1[idx])#np.max([-2, -1, 0, 1, 2]) 输出2 np.maximum([-2, -1, 0, 1, 2], 0) 输出array([0, 0, 0, 1, 2])

yy1 = np.maximum(y1[i], y1[idx])

xx2 = np.minimum(x2[i], x2[idx])

yy2 = np.minimum(y2[i], y2[idx])

#计算相交框的面积,注意矩形框不相交时w或h算出来会是负数,用0代替

w = np.maximum(0.0, xx2-xx1+1)

h = np.maximum(0.0, yy2-yy1+1)

inter = w * h

if method is 'Min':#计算重叠度IOU:重叠面积/(面积1+面积2-重叠面积)

o = inter / np.minimum(area[i], area[idx])

else:

o = inter / (area[i] + area[idx] - inter)

I = I[np.where(o<=threshold)]#np.where()二维数组中满足条件的坐标位置

pick = pick[0:counter]

return pick

##对坐标进行修剪,使其不超出图片大小

def pad(total_boxes, w, h):

tmpw = (total_boxes[:,2]-total_boxes[:,0]+1).astype(np.int32)

tmph = (total_boxes[:,3]-total_boxes[:,1]+1).astype(np.int32)

numbox = total_boxes.shape[0]

dx = np.ones((numbox), dtype=np.int32)

dy = np.ones((numbox), dtype=np.int32)

edx = tmpw.copy().astype(np.int32)

edy = tmph.copy().astype(np.int32)

x = total_boxes[:,0].copy().astype(np.int32)

y = total_boxes[:,1].copy().astype(np.int32)

ex = total_boxes[:,2].copy().astype(np.int32)

ey = total_boxes[:,3].copy().astype(np.int32)

tmp = np.where(ex>w)

edx.flat[tmp] = np.expand_dims(-ex[tmp]+w+tmpw[tmp],1)

ex[tmp] = w

tmp = np.where(ey>h)

edy.flat[tmp] = np.expand_dims(-ey[tmp]+h+tmph[tmp],1)

ey[tmp] = h

tmp = np.where(x<1)

dx.flat[tmp] = np.expand_dims(2-x[tmp],1)

x[tmp] = 1

tmp = np.where(y<1)

dy.flat[tmp] = np.expand_dims(2-y[tmp],1)

y[tmp] = 1

return dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph

# 使预测框变为正方形

def rerec(bboxA):

"""Convert bboxA to square."""

h = bboxA[:,3]-bboxA[:,1]

w = bboxA[:,2]-bboxA[:,0]

l = np.maximum(w, h)

bboxA[:,0] = bboxA[:,0]+w*0.5-l*0.5

bboxA[:,1] = bboxA[:,1]+h*0.5-l*0.5

bboxA[:,2:4] = bboxA[:,0:2] + np.transpose(np.tile(l,(2,1)))#重复某个数组,重复l,变成2行一列

return bboxA

#放缩图像

def imresample(img, sz):

im_data = cv2.resize(img, (sz[1], sz[0]), interpolation=cv2.INTER_AREA) #@UndefinedVariable

return im_data

2:检测2.py(修改成自己的输入图像地址就行)

#已经训练好的模型,模型的数据存储在.npy文件中。

#Pnet最终的输出的结果是1x1x32的特征图,再分成三条支路,用于人脸分类、边框回归、人脸特征点定位。

#这三条支路的损失函数分别是交叉熵(二分类问题常用)、平方和损失函数、5个特征点与标定好的数据的平方和损失。

#最后的总损失是三个损失乘上各自的权重比之和,在PNet里面三种损失的权重是1:0.5:0.5

import sys

import os

import argparse

import tensorflow as tf

import numpy as np

import detect_face

import cv2

def main():

sess = tf.Session()#开启会议

#create_mtcnn.py展示了如何使用模型,即在使用时必须先调用detect_facec的creat_mtcnn方法导入网络结构,

#此时在创建时又需要写出对应的网络结构然后通过.npy进行数据恢复然后再使用。

pnet, rnet, onet = detect_face.create_mtcnn(sess, None)#通过加载存储的.npy到对应的网络模型中恢复网络中的参数。

minsize = 20 #表示最小的人脸尺寸

threshold = [ 0.6, 0.7, 0.9 ] #分别为3个网络中人脸得分的阈值

factor = 0.709 # 金字塔缩放因子

draw = cv2.imread("C:/Users/Administrator/Desktop/1.jpg")

img=cv2.cvtColor(draw,cv2.COLOR_BGR2RGB)

bounding_boxes, points,pbox ,p_nms_box,p_bounding_box,r_net,rnet_thresh_boxes,rnet_nms_boxes= detect_face.detect_face(img, minsize, pnet, rnet, onet, threshold, factor)#人脸检测

#最终生成的bounding_boxes是一个n行5列的数据,n表示识别出的人脸个数,第5列表示人脸的可能性。1——4列分别表示人脸框的左上角和右下角坐标

#points是一个10行n列的坐标。其中n表示人脸的个数。point的前5行分别表示眼睛鼻子嘴的横坐标,后5行表示其对应的纵坐标。

#p_net网络未过滤的人脸框达上百个

p_faces = pbox.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('pnet找到人脸数目为%d个'%p_faces)

p_draw = draw.copy()#原图像复制

for i in pbox:

cv2.rectangle(p_draw, (int(i[0]), int(i[1])), (int(i[2]), int(i[3])), (0, 0, 255))#画框

cv2.namedWindow("pnet",cv2.WINDOW_NORMAL)

cv2.imshow("pnet",p_draw)

#p_net网络nms非极大抑制

p_nms_faces = p_nms_box.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('p_nms_net找到人脸数目为%d个'%p_nms_faces)

p_nms_draw = draw.copy()#原图像复制

for i in p_nms_box:

cv2.rectangle(p_nms_draw, (int(i[0]), int(i[1])), (int(i[2]), int(i[3])), (0, 0, 255))#画框

cv2.namedWindow("p_nms_net",cv2.WINDOW_NORMAL)

cv2.imshow("p_nms_net",p_nms_draw)

#p_net网络边框回归

p_bounding_box_faces = p_bounding_box.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('p_bounding_box_net找到人脸数目为%d个'%p_bounding_box_faces)

p_bounding_box_draw = draw.copy()#原图像复制

for i in p_bounding_box:

cv2.rectangle(p_bounding_box_draw, (int(i[0]), int(i[1])), (int(i[2]), int(i[3])), (0, 0, 255))#画框

cv2.namedWindow("p_bounding_box_net",cv2.WINDOW_NORMAL)

cv2.imshow("p_bounding_box_net",p_bounding_box_draw)

#rnet_thresh_boxes网络

rnet_thresh_boxes_faces = rnet_thresh_boxes.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('rnet_thresh_boxes找到人脸数目为%d个'%rnet_thresh_boxes_faces)

rnet_thresh_boxes_draw = draw.copy()#原图像复制

for i in rnet_thresh_boxes:

cv2.rectangle(rnet_thresh_boxes_draw, (int(i[0]), int(i[1])), (int(i[2]), int(i[3])), (0, 0, 255))#画框

cv2.namedWindow("rnet_thresh_boxes",cv2.WINDOW_NORMAL)

cv2.imshow("rnet_thresh_boxes",rnet_thresh_boxes_draw)

rnet_nms_boxes

#rnet_nms_boxes网络

rnet_nms_boxes_faces = rnet_nms_boxes.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('rnet_nms_boxes找到人脸数目为%d个'%rnet_nms_boxes_faces)

rnet_nms_boxes_draw = draw.copy()#原图像复制

for i in rnet_nms_boxes:

cv2.rectangle(rnet_nms_boxes_draw, (int(i[0]), int(i[1])), (int(i[2]), int(i[3])), (0, 0, 255))#画框

cv2.namedWindow("rnet_nms_boxes",cv2.WINDOW_NORMAL)

cv2.imshow("rnet_nms_boxes",rnet_nms_boxes_draw)

#r_net网络

r_faces = r_net.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('r_net找到人脸数目为%d个'%r_faces)

r_net_draw = draw.copy()#原图像复制

for i in r_net:

cv2.rectangle(r_net_draw, (int(i[0]), int(i[1])), (int(i[2]), int(i[3])), (0, 0, 255))#画框

cv2.namedWindow("r_net",cv2.WINDOW_NORMAL)

cv2.imshow("r_net",r_net_draw)

nrof_faces = bounding_boxes.shape[0]#.shape[0]求行,.shape[1]求列,.shape[2]求通道数

print('onet找到人脸数目为%d个'%nrof_faces)

face_number=1;

for b in bounding_boxes:#把找到的所有人脸框按行赋给b

cv2.rectangle(draw, (int(b[0]), int(b[1])), (int(b[2]), int(b[3])), (0, 0, 255))#画框

print("第%d个人脸框左上角坐标:(%d,%d)"%(face_number,int(b[0]),int(b[1])))

print("第%d个人脸框右下角坐标:(%d,%d)"%(face_number,int(b[2]),int(b[3])))

print("第%d个人脸的可能性为:%f"%(face_number,float(b[4])))

face_number+=1

for p in points.T:#把找到的所有人脸特征点按行赋给p。 .T为转置,把10行n列变成n行10列

for i in range(5):

cv2.circle(draw, (p[i], p[i + 5]), 1, (0, 0, 255), 2)

cv2.namedWindow("face_detect",cv2.WINDOW_NORMAL)

cv2.imshow("face_detect",draw)

cv2.waitKey(0)

if __name__ == '__main__':

main()