Android 8.0 Audio System: AudioTrack

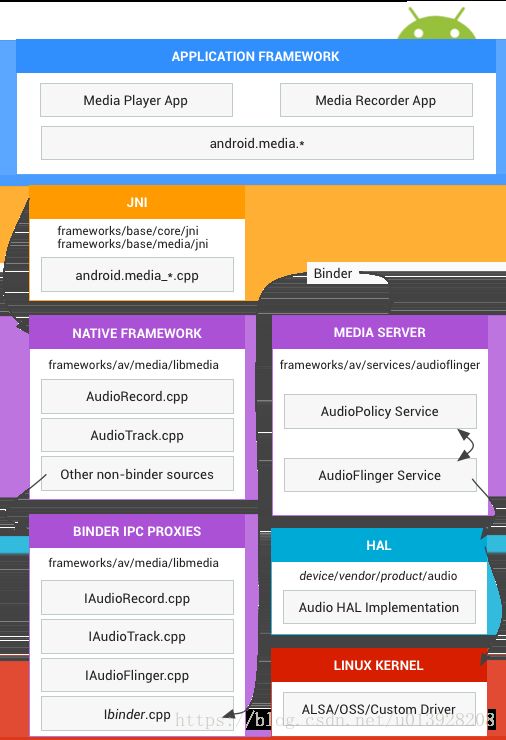

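Following the previous post on the Android hardware abstraction layer and the HAL Binder framework, this time we study the Audio system. We chose it not because it is simple but precisely because it is complex. The Audio system interacts with the Media system, the Surface system, telephony, Bluetooth, and more, and working through that complexity lets us appreciate its design. We will dissect the Audio system at four levels: 1. AudioTrack, 2. AudioFlinger, 3. AudioPolicy, 4. the Audio hardware abstraction layer.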

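Looking at the Audio system as a whole, implementing audio routing, mixing, decoding, and the interplay between the AP (application processor) and BP (baseband processor) inevitably raises the complexity. We therefore need an entry point for the analysis that keeps us on the main line while simplifying the process. In this post we focus on AudioTrack.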

1. AudioTrack in the Java Layer

We use the API as the entry point for analyzing AudioTrack, and then gradually move on to AudioPolicy and AudioFlinger.

```java
// 1. Query the minimum buffer size the hardware can allocate
int bufferSize = AudioTrack.getMinBufferSize(16000,
        AudioFormat.CHANNEL_OUT_STEREO,
        AudioFormat.ENCODING_PCM_16BIT);

// 2. Create the track
AudioTrack trackPlayer = new AudioTrack(AudioManager.STREAM_MUSIC, 16000,
        AudioFormat.CHANNEL_OUT_STEREO,
        AudioFormat.ENCODING_PCM_16BIT, bufferSize,
        AudioTrack.MODE_STREAM);

// 3. Start playback of the PCM stream
trackPlayer.play();

// 4. Write PCM data (bytes: a byte[] of 16-bit stereo PCM prepared by the caller)
trackPlayer.write(bytes, 0, bytes.length);

// 5. Stop and release resources
trackPlayer.stop();
trackPlayer.release();
```

1.1 getMinBufferSize()

sampleRateInHz: sample rate; channelConfig: channel configuration (determines the channel count); audioFormat: sample encoding (bit depth)

```java
static public int getMinBufferSize(int sampleRateInHz, int channelConfig, int audioFormat) {
    int channelCount = 0;
    switch(channelConfig) {
    case AudioFormat.CHANNEL_OUT_MONO:
    case AudioFormat.CHANNEL_CONFIGURATION_MONO:
        channelCount = 1;
        break;
    case AudioFormat.CHANNEL_OUT_STEREO:
    case AudioFormat.CHANNEL_CONFIGURATION_STEREO:
        channelCount = 2;
        break;
    default:
        if (!isMultichannelConfigSupported(channelConfig)) {
            loge("getMinBufferSize(): Invalid channel configuration.");
            return ERROR_BAD_VALUE;
        } else {
            channelCount = AudioFormat.channelCountFromOutChannelMask(channelConfig);
        }
    }

    if (!AudioFormat.isPublicEncoding(audioFormat)) { // validate the sample encoding
        loge("getMinBufferSize(): Invalid audio format.");
        return ERROR_BAD_VALUE;
    }

    // sample rate, note these values are subject to change
    // Note: AudioFormat.SAMPLE_RATE_UNSPECIFIED is not allowed
    if ( (sampleRateInHz < AudioFormat.SAMPLE_RATE_HZ_MIN) || // validate the sample rate
            (sampleRateInHz > AudioFormat.SAMPLE_RATE_HZ_MAX) ) {
        loge("getMinBufferSize(): " + sampleRateInHz + " Hz is not a supported sample rate.");
        return ERROR_BAD_VALUE;
    }

    // call into JNI
    int size = native_get_min_buff_size(sampleRateInHz, channelCount, audioFormat);
    if (size <= 0) {
        loge("getMinBufferSize(): error querying hardware");
        return ERROR;
    }
    else {
        return size;
    }
}
```
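The channel-count logic of the switch above can be mimicked in a few lines of C++. This is a sketch under stated assumptions: the constant values are copied from android.media.AudioFormat, `channelCountForConfig` is a hypothetical name, and the default branch simply counts the set bits of the mask (assumed to match AudioFormat.channelCountFromOutChannelMask()) while skipping the extra validation done by isMultichannelConfigSupported().

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>

// Values copied from android.media.AudioFormat for illustration.
constexpr int32_t CHANNEL_CONFIGURATION_MONO = 2;    // deprecated constant
constexpr int32_t CHANNEL_CONFIGURATION_STEREO = 3;  // deprecated constant
constexpr int32_t CHANNEL_OUT_MONO = 0x4;            // CHANNEL_OUT_FRONT_LEFT
constexpr int32_t CHANNEL_OUT_STEREO = 0xC;          // FRONT_LEFT | FRONT_RIGHT

// Hypothetical helper: channel count for a channel config, or -1 if invalid.
int channelCountForConfig(int32_t channelConfig) {
    switch (channelConfig) {
    case CHANNEL_OUT_MONO:
    case CHANNEL_CONFIGURATION_MONO:
        return 1;
    case CHANNEL_OUT_STEREO:
    case CHANNEL_CONFIGURATION_STEREO:
        return 2;
    default:
        // Simplification: no isMultichannelConfigSupported() checks, just count
        // the set bits of a positional output mask.
        if (channelConfig <= 0) {
            return -1;
        }
        return static_cast<int>(std::bitset<32>(static_cast<uint32_t>(channelConfig)).count());
    }
}
```

For example, AudioFormat.CHANNEL_OUT_5POINT1 (0xFC: front left/right, center, LFE, back left/right) has six bits set and yields 6.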

frameworks\base\core\jni\android_media_AudioTrack.cpp

```cpp
static jint android_media_AudioTrack_get_min_buff_size(JNIEnv *env, jobject thiz,
        jint sampleRateInHertz, jint channelCount, jint audioFormat) {

    size_t frameCount;
    const status_t status = AudioTrack::getMinFrameCount(&frameCount, AUDIO_STREAM_DEFAULT,
            sampleRateInHertz); // get the minimum frame count
    const audio_format_t format = audioFormatToNative(audioFormat);
    if (audio_has_proportional_frames(format)) {
        const size_t bytesPerSample = audio_bytes_per_sample(format);
        return frameCount * channelCount * bytesPerSample; // minimum buffer size in bytes
    } else {
        return frameCount;
    }
}
```
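The JNI function turns a frame count into bytes only when the format has a fixed size per frame. A small sketch of that branch, assuming a hypothetical `Encoding` enum in place of `audio_format_t` and simplifying "proportional frames" to "linear PCM":

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-ins for a few audio_format_t values.
enum class Encoding { Pcm8Bit, Pcm16Bit, PcmFloat, Compressed };

// Bytes per sample for linear PCM; compressed data has no fixed size per frame.
static size_t bytesPerSample(Encoding e) {
    switch (e) {
    case Encoding::Pcm8Bit:  return 1;
    case Encoding::Pcm16Bit: return 2;
    case Encoding::PcmFloat: return 4;
    default:                 return 0;
    }
}

// Mirrors the branch in android_media_AudioTrack_get_min_buff_size(): formats with
// proportional frames convert frames to bytes, anything else returns the frame count.
static size_t minBufferSize(size_t frameCount, uint32_t channelCount, Encoding e) {
    assert(channelCount > 0);
    if (e != Encoding::Compressed) {
        return frameCount * channelCount * bytesPerSample(e);
    }
    return frameCount;
}
```

With a hypothetical frame count of 1296, 16-bit stereo PCM gives 1296 × 2 × 2 = 5184 bytes.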

frameworks\av\media\libaudioclient\AudioTrack.cpp

AudioSystem::getOutputSamplingRate() calls into AudioFlinger to query these parameters.

```cpp
status_t AudioTrack::getMinFrameCount(
        size_t* frameCount,
        audio_stream_type_t streamType,
        uint32_t sampleRate)
{
    if (frameCount == NULL) {
        return BAD_VALUE;
    }
    ......
    uint32_t afSampleRate;
    status_t status;
    status = AudioSystem::getOutputSamplingRate(&afSampleRate, streamType); // output sample rate
    size_t afFrameCount;
    status = AudioSystem::getOutputFrameCount(&afFrameCount, streamType); // output buffer size in frames
    uint32_t afLatency;
    status = AudioSystem::getOutputLatency(&afLatency, streamType); // output latency in ms

    // When called from createTrack, speed is 1.0f (normal speed).
    // This is rechecked again on setting playback rate (TODO: on setting sample rate, too).
    *frameCount = calculateMinFrameCount(afLatency, afFrameCount, afSampleRate, sampleRate, 1.0f
            /*, 0 notificationsPerBufferReq*/);
    ......
    return NO_ERROR;
}
```

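calculateMinFrameCount() computes the minimum buffer size in frames: enough hardware buffers to cover the output latency (at least two), each converted to the track's sample rate.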

```cpp
static size_t calculateMinFrameCount(
        uint32_t afLatencyMs, uint32_t afFrameCount, uint32_t afSampleRate,
        uint32_t sampleRate, float speed /*, uint32_t notificationsPerBufferReq*/)
{
    // Ensure that buffer depth covers at least audio hardware latency
    uint32_t minBufCount = afLatencyMs / ((1000 * afFrameCount) / afSampleRate);
    if (minBufCount < 2) {
        minBufCount = 2; // at least two buffers (double buffering)
    }
#if 0
    if (minBufCount < notificationsPerBufferReq) {
        minBufCount = notificationsPerBufferReq;
    }
#endif
    return minBufCount * sourceFramesNeededWithTimestretch(
            sampleRate, afFrameCount, afSampleRate, speed);
}
```
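To see the numbers this produces, here is a self-contained sketch of the calculation. The two helper functions are modeled on the resampler helpers that AOSP calls (the "+ 1 + 1" rounding and interpolation margins are an assumption of this sketch), and the division-by-zero guard for very small HAL buffers is an addition that the excerpt above does not have.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Source frames needed to produce dstFramesRequired frames at dstSampleRate.
// The "+ 1 + 1" (rounding and interpolation margin) is an assumption modeled on AOSP.
static size_t sourceFramesNeeded(uint32_t srcSampleRate, size_t dstFramesRequired,
                                 uint32_t dstSampleRate) {
    if (srcSampleRate == dstSampleRate) {
        return dstFramesRequired;
    }
    return static_cast<size_t>(
            static_cast<uint64_t>(dstFramesRequired) * srcSampleRate / dstSampleRate + 1 + 1);
}

// Adds a margin for the time stretcher when playback speed != 1.0 (also an assumption).
static size_t sourceFramesNeededWithTimestretch(uint32_t srcSampleRate, size_t dstFramesRequired,
                                                uint32_t dstSampleRate, float speed) {
    size_t required = sourceFramesNeeded(srcSampleRate, dstFramesRequired, dstSampleRate);
    return static_cast<size_t>(required * static_cast<double>(speed) + 1 + 1);
}

static size_t calculateMinFrameCount(uint32_t afLatencyMs, uint32_t afFrameCount,
                                     uint32_t afSampleRate, uint32_t sampleRate, float speed) {
    assert(afSampleRate > 0 && afFrameCount > 0);
    // Duration of one HAL buffer in ms; clamping to 1 ms avoids a division by zero
    // for tiny buffers (a guard added in this sketch).
    uint32_t bufferMs = (1000 * afFrameCount) / afSampleRate;
    if (bufferMs == 0) {
        bufferMs = 1;
    }
    // Enough HAL buffers to cover the hardware latency, and never fewer than two.
    uint32_t minBufCount = afLatencyMs / bufferMs;
    if (minBufCount < 2) {
        minBufCount = 2;
    }
    return minBufCount * sourceFramesNeededWithTimestretch(sampleRate, afFrameCount,
                                                           afSampleRate, speed);
}
```

With hypothetical HAL values afLatencyMs = 80, afFrameCount = 960 and afSampleRate = 48000 (20 ms per HAL buffer), a 16 kHz track needs 4 buffers of 324 source frames, i.e. 1296 frames; for 16-bit stereo PCM the JNI layer would then report 1296 × 2 × 2 = 5184 bytes.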

1.2 Creating an AudioTrack

```java
public AudioTrack(AudioAttributes attributes, AudioFormat format, int bufferSizeInBytes,
        int mode, int sessionId)
                throws IllegalArgumentException {
    super(attributes, AudioPlaybackConfiguration.PLAYER_TYPE_JAM_AUDIOTRACK);
    ......
    // native initialization
    int initResult = native_setup(new WeakReference<AudioTrack>(this), mAttributes,
            sampleRate, mChannelMask, mChannelIndexMask, mAudioFormat,
            mNativeBufferSizeInBytes, mDataLoadMode, session, 0 /*nativeTrackInJavaObj*/);
    ......
}
```

Data is passed through shared memory using a producer-consumer model:

```cpp
static jint android_media_AudioTrack_setup(JNIEnv *env, jobject thiz, jobject weak_this, jobject jaa,
        jintArray jSampleRate, jint channelPositionMask, jint channelIndexMask,
        jint audioFormat, jint buffSizeInBytes, jint memoryMode, jintArray jSession,
        jlong nativeAudioTrack) {
    sp<AudioTrack> lpTrack = 0;
    jint* nSession = (jint *) env->GetPrimitiveArrayCritical(jSession, NULL);
    audio_session_t sessionId = (audio_session_t) nSession[0];
    env->ReleasePrimitiveArrayCritical(jSession, nSession, 0);
    nSession = NULL;

    // Shared ring buffer: message queues and pipes basically need 4 copies,
    // whereas shared memory (mmap, shmget) needs only 2
    AudioTrackJniStorage* lpJniStorage = NULL;
    audio_attributes_t *paa = NULL;
    jclass clazz = env->GetObjectClass(thiz);

    // if we pass in an existing *Native* AudioTrack, we don't need to create/initialize one.
    if (nativeAudioTrack == 0) {
        ......
        // create the native AudioTrack object
        lpTrack = new AudioTrack();

        // this data will be passed with every AudioTrack callback
        lpJniStorage = new AudioTrackJniStorage();
        lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);
        // we use a weak reference so the AudioTrack object can be garbage collected.
        lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);
        lpJniStorage->mCallbackData.busy = false;

        // initialize the native AudioTrack object
        status_t status = NO_ERROR;
        switch (memoryMode) {
        case MODE_STREAM:
            status = lpTrack->set( // set() creates the track
                    AUDIO_STREAM_DEFAULT,
                    sampleRateInHertz,
                    format,// word length, PCM
                    nativeChannelMask,
                    frameCount,
                    AUDIO_OUTPUT_FLAG_NONE,
                    audioCallback, &(lpJniStorage->mCallbackData),//callback, callback data (user)
                    0,// notificationFrames
                    0,// shared mem
                    true,// thread can call Java
                    sessionId,// audio session ID
                    AudioTrack::TRANSFER_SYNC,
                    NULL, // default offloadInfo
                    -1, -1, // default uid, pid values
                    paa);
            break;
        ......
        }
    } else { // end if (nativeAudioTrack == 0)
        lpTrack = (AudioTrack*)nativeAudioTrack; // reuse the existing native AudioTrack
        // initialize the callback information:
        // this data will be passed with every AudioTrack callback
        lpJniStorage = new AudioTrackJniStorage();
        lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);
        // we use a weak reference so the AudioTrack object can be garbage collected.
        lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);
        lpJniStorage->mCallbackData.busy = false;
    }
    ......
    return (jint) AUDIO_JAVA_SUCCESS;
}
```

1.3 play()

```cpp
static void
android_media_AudioTrack_start(JNIEnv *env, jobject thiz)
{
    sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
    if (lpTrack == NULL) {
        jniThrowException(env, "java/lang/IllegalStateException",
            "Unable to retrieve AudioTrack pointer for start()");
        return;
    }

    lpTrack->start(); // call start() on the native AudioTrack
}
```

getAudioTrack() retrieves the native AudioTrack object saved in the Java object:

```cpp
static sp<AudioTrack> getAudioTrack(JNIEnv* env, jobject thiz)
{
    Mutex::Autolock l(sLock);
    AudioTrack* const at =
            (AudioTrack*)env->GetLongField(thiz, javaAudioTrackFields.nativeTrackInJavaObj);
    return sp<AudioTrack>(at);
}
```
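Why is it safe for getAudioTrack() to build a fresh `sp<AudioTrack>` from a raw pointer on every call? Because android::RefBase keeps the reference count inside the object, every smart pointer created from the same raw pointer shares one count. The sketch below models this with simplified, hypothetical stand-ins (`RefCounted` for RefBase, `StrongPtr` for `sp<T>`, `JavaTrackObject` for the Java object's long field); it is not the real libutils implementation. With std::shared_ptr, by contrast, wrapping the same raw pointer twice would create two independent owners and a double delete.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <mutex>

// Minimal intrusive reference count, standing in for android::RefBase (simplified).
class RefCounted {
public:
    void incStrong() { mCount.fetch_add(1, std::memory_order_relaxed); }
    void decStrong() {
        int32_t prev = mCount.fetch_sub(1, std::memory_order_acq_rel);
        assert(prev > 0);
        if (prev == 1) delete this;  // last strong reference gone
    }
    int32_t strongCount() const { return mCount.load(); }
protected:
    virtual ~RefCounted() = default;
private:
    std::atomic<int32_t> mCount{0};
};

// Minimal strong pointer, standing in for android::sp<T> (simplified).
template <typename T>
class StrongPtr {
public:
    explicit StrongPtr(T* p = nullptr) : mPtr(p) { if (mPtr) mPtr->incStrong(); }
    StrongPtr(const StrongPtr& o) : mPtr(o.mPtr) { if (mPtr) mPtr->incStrong(); }
    StrongPtr& operator=(const StrongPtr&) = delete;  // omitted to keep the sketch short
    ~StrongPtr() { if (mPtr) mPtr->decStrong(); }
    T* get() const { return mPtr; }
private:
    T* mPtr;
};

class NativeTrack : public RefCounted {};

// Hypothetical stand-in for the Java object's long field nativeTrackInJavaObj.
struct JavaTrackObject { int64_t nativeTrackInJavaObj = 0; };

static std::mutex sLock;

// Analogous to getAudioTrack(): read the raw pointer under a lock and wrap it.
// The count lives inside the object, so this adds a reference to the shared count.
StrongPtr<NativeTrack> getTrack(const JavaTrackObject& obj) {
    std::lock_guard<std::mutex> l(sLock);
    return StrongPtr<NativeTrack>(reinterpret_cast<NativeTrack*>(obj.nativeTrackInJavaObj));
}
```

A caller keeps one `StrongPtr` alive for as long as the raw pointer sits in `nativeTrackInJavaObj`; each getTrack() call then raises the count temporarily and drops it again when the returned pointer goes out of scope.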

1.4 write()

```cpp
template <typename T>
static jint android_media_AudioTrack_writeArray(JNIEnv *env, jobject thiz,
                                                T javaAudioData,
                                                jint offsetInSamples, jint sizeInSamples,
                                                jint javaAudioFormat,
                                                jboolean isWriteBlocking) {
    //ALOGV("android_media_AudioTrack_writeArray(offset=%d, sizeInSamples=%d) called",
    //        offsetInSamples, sizeInSamples);
    sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
    ......
    jint samplesWritten = writeToTrack(lpTrack, javaAudioFormat, cAudioData,
            offsetInSamples, sizeInSamples, isWriteBlocking == JNI_TRUE /* blocking */);
    return samplesWritten;
}
```

The call eventually ends up in writeToTrack():

```cpp
template <typename T>
static jint writeToTrack(const sp<AudioTrack>& track, jint audioFormat, const T *data,
                         jint offsetInSamples, jint sizeInSamples, bool blocking) {
    // give the data to the native AudioTrack object (the data starts at the offset)
    ssize_t written = 0;
    // regular write() or copy the data to the AudioTrack's shared memory?
    size_t sizeInBytes = sizeInSamples * sizeof(T);
    if (track->sharedBuffer() == 0) { // MODE_STREAM
        written = track->write(data + offsetInSamples, sizeInBytes, blocking); // write the data
        // for compatibility with earlier behavior of write(), return 0 in this case
        if (written == (ssize_t) WOULD_BLOCK) {
            written = 0;
        }
    } else { // MODE_STATIC
        // writing to shared memory, check for capacity
        if ((size_t)sizeInBytes > track->sharedBuffer()->size()) {
            sizeInBytes = track->sharedBuffer()->size();
        }
        // in static mode, copy the data straight into the shared memory
        memcpy(track->sharedBuffer()->pointer(), data + offsetInSamples, sizeInBytes);
        written = sizeInBytes;
    }
    if (written >= 0) {
        return written / sizeof(T);
    }
    return interpretWriteSizeError(written);
}
```
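The MODE_STATIC branch boils down to "clamp, copy, convert bytes back to samples". A minimal sketch, assuming a `std::vector<uint8_t>` stands in for the IMemory-backed shared buffer; `writeStatic` is a hypothetical name:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Sketch of the MODE_STATIC branch of writeToTrack(). The vector models the shared
// buffer (an assumption); the return value counts samples (elements of T) copied.
template <typename T>
size_t writeStatic(std::vector<uint8_t>& sharedBuffer, const T* data,
                   size_t offsetInSamples, size_t sizeInSamples) {
    assert(data != nullptr);
    size_t sizeInBytes = sizeInSamples * sizeof(T);
    // Writing to shared memory: clamp to its capacity instead of overflowing it.
    sizeInBytes = std::min(sizeInBytes, sharedBuffer.size());
    if (sizeInBytes > 0) {
        std::memcpy(sharedBuffer.data(), data + offsetInSamples, sizeInBytes);
    }
    // Like writeToTrack(), report the result in samples rather than bytes.
    return sizeInBytes / sizeof(T);
}
```

Every call copies to the start of the shared buffer, which is why, in static mode, the whole clip is normally written once before play().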

This concludes the Java-layer analysis of AudioTrack. The parts of these four steps that involve AudioFlinger and AudioPolicyService are set aside for now.

2. AudioTrack in the Native Layer

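Continuing with set(), which is called when the AudioTrack object is created above: if a callback (cbf) is supplied, it creates the AudioTrackThread, and then it creates the track.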

```cpp
    if (cbf != NULL) {
        mAudioTrackThread = new AudioTrackThread(*this, threadCanCallJava);
        mAudioTrackThread->run("AudioTrack", ANDROID_PRIORITY_AUDIO, 0 /*stack*/);
        // thread begins in paused state, and will not reference us until start()
    }

    // create the IAudioTrack
    status_t status = createTrack_l(); // create the track through AudioFlinger
    if (status != NO_ERROR) {
        if (mAudioTrackThread != 0) {
            mAudioTrackThread->requestExit();   // see comment in AudioTrack.h
            mAudioTrackThread->requestExitAndWait();
            mAudioTrackThread.clear();
        }
        return status;
    }
```
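The comment "thread begins in paused state, and will not reference us until start()" describes a common pattern. Here is a minimal sketch of it with std::thread and std::condition_variable; `CallbackThread` and its methods are hypothetical names, not the real AudioTrackThread (which derives from android::Thread):

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical callback thread that starts paused and only loops after resume().
class CallbackThread {
public:
    explicit CallbackThread(std::function<void()> body)
        : mBody(std::move(body)), mThread([this] { loop(); }) {}
    ~CallbackThread() { requestExitAndWait(); }

    void resume() {
        std::lock_guard<std::mutex> l(mLock);
        mPaused = false;
        mCond.notify_all();
    }
    void pause() {
        std::lock_guard<std::mutex> l(mLock);
        mPaused = true;  // takes effect before the next iteration
    }
    void requestExitAndWait() {
        {
            std::lock_guard<std::mutex> l(mLock);
            mExit = true;
            mCond.notify_all();
        }
        if (mThread.joinable()) mThread.join();
    }

private:
    void loop() {
        for (;;) {
            {
                std::unique_lock<std::mutex> l(mLock);
                mCond.wait(l, [this] { return !mPaused || mExit; });
                if (mExit) return;
            }
            mBody();  // e.g. processAudioBuffer(), run without holding the lock
        }
    }

    std::function<void()> mBody;
    std::mutex mLock;
    std::condition_variable mCond;
    bool mPaused = true;  // starts paused, like AudioTrackThread
    bool mExit = false;
    std::thread mThread;  // declared last so it starts after the other members exist
};
```

The failure path in set() corresponds to calling requestExitAndWait() on a thread that was never resumed: the wait predicate sees the exit flag and the thread returns without ever running the body.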

createTrack_l() asks AudioFlinger to create the Track:

```cpp
    const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();
    sp<IAudioTrack> track = audioFlinger->createTrack(......); // analyzed in a later post
    ......
    // control block of the linear ring buffer, located in shared memory
    audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);
```
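audio_track_cblk_t is the control block of a ring buffer shared between the client (AudioTrack, the producer) and the server (AudioFlinger, the consumer). The sketch below models only the core idea under simplifying assumptions: `FrameRing` is a hypothetical class, a frame is a single `int16_t` sample, both sides live in one process, and the capacity must be a power of two so that free-running 32-bit counters can be masked into indices. The real control block keeps comparable front/rear counters in shared memory and is accessed through proxy classes.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-producer/single-consumer ring of int16_t frames.
// rear is advanced only by the producer, front only by the consumer; both are
// free-running counters, so the fill level is rear - front (modulo 2^32).
class FrameRing {
public:
    explicit FrameRing(size_t capacityFrames)
        : mBuf(capacityFrames), mMask(static_cast<uint32_t>(capacityFrames) - 1) {
        assert(capacityFrames > 0 && (capacityFrames & (capacityFrames - 1)) == 0);
    }

    // Producer: contiguous writable frames starting at *out (like obtainBuffer()).
    size_t obtainWritable(int16_t** out) {
        uint32_t rear = mRear.load(std::memory_order_relaxed);
        uint32_t front = mFront.load(std::memory_order_acquire);  // see consumer progress
        size_t available = mBuf.size() - static_cast<size_t>(rear - front);
        size_t index = rear & mMask;
        *out = &mBuf[index];
        return std::min(available, mBuf.size() - index);
    }
    // Publish written frames to the consumer (like releaseBuffer()).
    void releaseWritten(size_t frames) {
        mRear.fetch_add(static_cast<uint32_t>(frames), std::memory_order_release);
    }

    // Consumer: contiguous readable frames starting at *out.
    size_t obtainReadable(const int16_t** out) {
        uint32_t front = mFront.load(std::memory_order_relaxed);
        uint32_t rear = mRear.load(std::memory_order_acquire);  // see producer's data
        size_t filled = static_cast<size_t>(rear - front);
        size_t index = front & mMask;
        *out = &mBuf[index];
        return std::min(filled, mBuf.size() - index);
    }
    // Hand consumed frames back to the producer.
    void releaseRead(size_t frames) {
        mFront.fetch_add(static_cast<uint32_t>(frames), std::memory_order_release);
    }

private:
    std::vector<int16_t> mBuf;
    uint32_t mMask;
    std::atomic<uint32_t> mFront{0};
    std::atomic<uint32_t> mRear{0};
};
```

Because `rear - front` is computed in unsigned 32-bit arithmetic, the fill level stays correct after the counters wrap; the power-of-two capacity keeps the masked index continuous across that wrap, which a plain modulo would not.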

Once AudioTrackThread has been started, it processes data in threadLoop():

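```cpp
bool AudioTrack::AudioTrackThread::threadLoop()
{
    ......  // pause handling omitted
    nsecs_t ns = mReceiver.processAudioBuffer();
    ......  // ns decides whether to run again immediately, sleep, or pause
}
```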

processAudioBuffer() delivers pending event callbacks and then fills the ring buffer:

```cpp
nsecs_t AudioTrack::processAudioBuffer()
{
    ......  // snapshot of the track state taken under mLock
    // perform callbacks while unlocked
    if (newUnderrun) {
        mCbf(EVENT_UNDERRUN, mUserData, NULL);
    }
    while (loopCountNotifications > 0) {
        mCbf(EVENT_LOOP_END, mUserData, NULL);
        --loopCountNotifications;
    }
    if (flags & CBLK_BUFFER_END) {
        mCbf(EVENT_BUFFER_END, mUserData, NULL);
    }
    if (markerReached) {
        mCbf(EVENT_MARKER, mUserData, &markerPosition);
    }
    // periodic position-update notifications
    while (newPosCount > 0) {
        size_t temp = newPosition.value(); // FIXME size_t != uint32_t
        mCbf(EVENT_NEW_POS, mUserData, &temp);
        newPosition += updatePeriod;
        newPosCount--;
    }

    if (mObservedSequence != sequence) {
        mObservedSequence = sequence;
        mCbf(EVENT_NEW_IAUDIOTRACK, mUserData, NULL);
        // for offloaded tracks, just wait for the upper layers to recreate the track
        if (isOffloadedOrDirect()) {
            return NS_INACTIVE;
        }
    }

    // if inactive, then don't run me again until re-started
    if (!active) {
        return NS_INACTIVE;
    }

    // Compute the estimated time until the next timed event (position, markers, loops)
    // FIXME only for non-compressed audio
    // frames remaining until the marker position
    if (!markerReached && position < markerPosition) {
        minFrames = (markerPosition - position).value();
    }
    if (loopPeriod > 0 && loopPeriod < minFrames) {
        // loopPeriod is already adjusted for actual position.
        minFrames = loopPeriod;
    }
    if (updatePeriod > 0) { // frames until the next position update
        minFrames = min(minFrames, (newPosition - position).value());
    }

    // If > 0, poll periodically to recover from a stuck server.  A good value is 2.
    static const uint32_t kPoll = 0;
    if (kPoll > 0 && mTransfer == TRANSFER_CALLBACK && kPoll * notificationFrames < minFrames) {
        minFrames = kPoll * notificationFrames;
    }
    ......
    size_t writtenFrames = 0;
    while (mRemainingFrames > 0) {
        Buffer audioBuffer;
        audioBuffer.frameCount = mRemainingFrames;
        size_t nonContig;
        // obtain a writable buffer
        status_t err = obtainBuffer(&audioBuffer, requested, NULL, &nonContig);
        LOG_ALWAYS_FATAL_IF((err != NO_ERROR) != (audioBuffer.frameCount == 0),
                "obtainBuffer() err=%d frameCount=%zu", err, audioBuffer.frameCount);
        ......
        size_t reqSize = audioBuffer.size;
        mCbf(EVENT_MORE_DATA, mUserData, &audioBuffer); // pull data from the user
        size_t writtenSize = audioBuffer.size;
        ......
        releaseBuffer(&audioBuffer); // done writing, release the buffer
        writtenFrames += releasedFrames;
        ......
    }
    ......
    return 0;
}
```
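The block starting at "Compute the estimated time until the next timed event" picks the nearest of three events; that frame distance is later turned into a sleep time for the thread. A simplified sketch, assuming plain `uint32_t` positions instead of the wrapper type whose `.value()` appears in the excerpt and a straightforward frames-to-nanoseconds conversion (the exact conversion in AOSP may differ); `framesUntilNextEvent`, `framesToNs` and `kNone` are hypothetical names:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <limits>

// "No timed event pending" marker for this sketch.
constexpr uint32_t kNone = std::numeric_limits<uint32_t>::max();

// Frames until the nearest of: marker, loop end, next position update.
uint32_t framesUntilNextEvent(bool markerReached, uint32_t position, uint32_t markerPosition,
                              uint32_t loopPeriod, uint32_t updatePeriod, uint32_t newPosition) {
    uint32_t minFrames = kNone;
    if (!markerReached && position < markerPosition) {
        minFrames = markerPosition - position;  // marker still ahead
    }
    if (loopPeriod > 0 && loopPeriod < minFrames) {
        minFrames = loopPeriod;                 // loop end comes first
    }
    if (updatePeriod > 0) {
        minFrames = std::min(minFrames, newPosition - position);  // next EVENT_NEW_POS
    }
    return minFrames;
}

// Convert a frame distance to nanoseconds at the given sample rate and playback speed.
int64_t framesToNs(uint32_t frames, uint32_t sampleRate, float speed) {
    assert(sampleRate > 0 && speed > 0.0f);
    return static_cast<int64_t>(frames * 1e9 / (sampleRate * static_cast<double>(speed)));
}
```

For a 16 kHz track, a position update every 1600 frames corresponds to a wake-up every 100 ms.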

AudioTrack::write() writes PCM data: it obtains a free region of the buffer and copies the data into it.

```cpp
ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
{
    ......
    size_t written = 0;
    Buffer audioBuffer;

    while (userSize >= mFrameSize) {
        audioBuffer.frameCount = userSize / mFrameSize;

        // obtain a free region of the buffer
        status_t err = obtainBuffer(&audioBuffer,
                blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);
        if (err < 0) {
            if (written > 0) {
                break;
            }
            if (err == TIMED_OUT || err == -EINTR) {
                err = WOULD_BLOCK;
            }
            return ssize_t(err);
        }

        size_t toWrite = audioBuffer.size;
        memcpy(audioBuffer.i8, buffer, toWrite); // copy the data into the buffer
        buffer = ((const char *) buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;

        // release the region so the server side can consume it
        releaseBuffer(&audioBuffer);
    }

    if (written > 0) {
        mFramesWritten += written / mFrameSize;
    }
    return written;
}
```
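The loop in write() copies whole frames, and when no space is left it returns either the partial byte count or WOULD_BLOCK. A non-blocking sketch of that policy, assuming a hypothetical `ByteRing` whose consumer side is simulated by advancing `front` by hand, and an illustrative `kWouldBlock` value (not the real status code):

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Illustrative stand-in for the WOULD_BLOCK status (value chosen for this sketch).
constexpr long kWouldBlock = -1;

// Hypothetical byte ring: obtain() hands out a contiguous writable region of at most
// maxBytes, release() commits it. front is advanced by a consumer not shown here.
struct ByteRing {
    std::vector<uint8_t> buf;
    size_t front = 0;  // bytes consumed so far (free-running)
    size_t rear = 0;   // bytes produced so far (free-running)

    explicit ByteRing(size_t capacity) : buf(capacity) {}

    size_t obtain(uint8_t** region, size_t maxBytes) {
        size_t available = buf.size() - (rear - front);
        size_t index = rear % buf.size();
        *region = buf.data() + index;
        return std::min({available, buf.size() - index, maxBytes});
    }
    void release(size_t bytes) { rear += bytes; }
};

// Non-blocking sketch of the loop in AudioTrack::write(): copy whole frames until
// either the caller's data or the free space runs out.
long writeFrames(ByteRing& ring, const void* buffer, size_t userSize, size_t frameSize) {
    assert(frameSize > 0 && !ring.buf.empty() && ring.buf.size() % frameSize == 0);
    size_t written = 0;
    while (userSize >= frameSize) {
        uint8_t* region = nullptr;
        size_t toWrite = ring.obtain(&region, userSize / frameSize * frameSize);
        toWrite -= toWrite % frameSize;  // never hand out a partial frame
        if (toWrite == 0) {
            // Same policy as write(): partial progress wins, otherwise WOULD_BLOCK.
            return written > 0 ? static_cast<long>(written) : kWouldBlock;
        }
        std::memcpy(region, buffer, toWrite);
        buffer = static_cast<const uint8_t*>(buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;
        ring.release(toWrite);
    }
    return static_cast<long>(written);
}
```

With an 8-byte ring and 4-byte frames, writing 12 bytes stores only 8 and returns 8; a second write to the full ring returns kWouldBlock, which writeToTrack() in the JNI layer maps to 0 for compatibility.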

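Finally, stop(). It does not free the buffer; it updates the state, interrupts the client proxy, stops the server-side track, and pauses the callback thread: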

```cpp
void AudioTrack::stop()
{
    AutoMutex lock(mLock);
    if (mState != STATE_ACTIVE && mState != STATE_PAUSED) {
        return;
    }

    if (isOffloaded_l()) {
        mState = STATE_STOPPING;
    } else {
        mState = STATE_STOPPED;
        ALOGD_IF(mSharedBuffer == nullptr,
                "stop() called with %u frames delivered", mReleased.value());
        mReleased = 0;
    }

    mProxy->interrupt();
    mAudioTrack->stop();

    // Note: legacy handling - stop does not clear playback marker
    // and periodic update counter, but flush does for streaming tracks.

    if (mSharedBuffer != 0) {
        // clear buffer position and loop count.
        mStaticProxy->setBufferPositionAndLoop(0 /* position */,
                0 /* loopStart */, 0 /* loopEnd */, 0 /* loopCount */); // reset the loop settings
    }

    sp<AudioTrackThread> t = mAudioTrackThread;
    if (t != 0) {
        if (!isOffloaded_l()) {
            t->pause(); // pause the callback thread
        }
    } else {
        setpriority(PRIO_PROCESS, 0, mPreviousPriority);
        set_sched_policy(0, mPreviousSchedulingGroup);
    }
}
```
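The state change at the top of stop() can be isolated as a pure function. A sketch using a subset of AudioTrack's states (the real enum also has, for example, STATE_PAUSED_STOPPING); `State` and `stopTransition` are hypothetical names, and the side effects (proxy interrupt, server-side stop, thread pause) are omitted:

```cpp
#include <cassert>

// A subset of AudioTrack's mState values, for illustration.
enum class State { Active, Paused, Stopped, Stopping, Flushed };

// Sketch of the state change made by AudioTrack::stop().
State stopTransition(State current, bool offloaded) {
    if (current != State::Active && current != State::Paused) {
        return current;  // stop() returns early and leaves the state untouched
    }
    // Offloaded tracks pass through STOPPING; all others stop immediately.
    return offloaded ? State::Stopping : State::Stopped;
}
```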

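OK, we will stop the AudioTrack analysis here. So far we have covered only how an AudioTrack is created and how it processes data. Because the rest involves interaction with AudioFlinger and AudioPolicy, we will come back to AudioTrack after analyzing those two. That's all for this post.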