Computing mAP in Mask R-CNN (Steel DWTT Fracture Surfaces as an Example)

I. Concepts

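This post covers Average Precision (AP) and mean Average Precision (mAP). mAP is a standard evaluation metric in both information retrieval and object detection, but the two fields compute it differently; this post covers the object-detection version.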

AP: the area under the precision-recall (PR) curve. In general, the better the classifier, the higher its AP.
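To make "area under the PR curve" concrete, here is a minimal plain-Python sketch of all-point interpolated AP (the PASCAL VOC 2010+ style). It first replaces each precision with the maximum precision at any equal or higher recall, then sums rectangle areas wherever recall increases. The function name average_precision is hypothetical; to my understanding Matterport's utils.compute_ap integrates the curve in essentially the same way, but treat that as an assumption.

```python
def average_precision(recalls, precisions):
    """Area under a PR curve using all-point interpolation.

    recalls must be non-decreasing; recalls[i] and precisions[i]
    describe the curve after the i-th ranked detection.
    """
    # Pad so the curve starts at recall 0 and ends at recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: each point takes the max precision to its right,
    # so precision becomes monotonically non-increasing in recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas at every point where recall changes.
    ap = 0.0
    for i in range(1, len(r)):
        if r[i] != r[i - 1]:
            ap += (r[i] - r[i - 1]) * p[i]
    return ap


# PR points after ranking: TP, FP, TP with 2 ground-truth objects.
print(average_precision([0.5, 0.5, 1.0], [1.0, 0.5, 2 / 3]))  # about 0.8333
```

The envelope step is what makes the result insensitive to small "zig-zags" in precision caused by individual false positives.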

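mAP is the mean of the per-class AP values: compute AP for each class, then average them. mAP always lies in [0, 1], and higher is better. It is the headline metric for comparing object detection algorithms.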
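The averaging step is a plain, unweighted mean over classes. In this post there is only one foreground class (fragility), so mAP reduces to that class's AP. The sketch below uses a hypothetical helper and made-up AP values purely for illustration.

```python
def mean_average_precision(ap_per_class):
    """mAP: unweighted mean of per-class AP values (background excluded)."""
    if not ap_per_class:
        raise ValueError("need at least one class")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical AP values, for illustration only.
aps = {"fragility": 0.75, "other_class": 0.5}
print(mean_average_precision(aps))  # 0.625

# With a single class, mAP equals that class's AP.
print(mean_average_precision({"fragility": 0.75}))  # 0.75
```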

II. Formulas

Computing recall and precision

The formulas for recall and precision use the following counts:

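TP (True Positive): the number of positive samples that are predicted as positive.

FP (False Positive): the number of negative samples that are predicted as positive.

FN (False Negative): the number of positive samples that are predicted as negative.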

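1. Precision: among all samples predicted as positive, the fraction that are actually positive.

Precision = TP / (TP + FP)

2. Recall: among all positive samples, the fraction that are correctly predicted as positive.

Recall = TP / (TP + FN)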
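A quick worked example of the two formulas, with hypothetical counts. Returning 0.0 when a denominator is zero is a convention chosen for this sketch, not part of the definitions.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from raw counts.

    Precision = TP / (TP + FP); Recall = TP / (TP + FN).
    A zero denominator is mapped to 0.0 (a convention, not a standard).
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical: 8 correct detections, 2 false alarms, 4 missed objects.
p, r = precision_recall(tp=8, fp=2, fn=4)
print(p, r)  # 0.8 0.6666666666666666
```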

AP (Average Precision) is the area under the PR curve; averaging AP over all classes gives mAP (mean Average Precision).
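The PR curve itself comes from ranking detections by confidence score and recomputing precision and recall after each one. The sketch below assumes every detection has already been labeled TP or FP by IoU matching, and that detections of one class are pooled across all evaluation images before ranking (the usual dataset-level definition; the source code later in this post instead computes AP per image with utils.compute_ap and averages those values). pr_curve is a hypothetical helper.

```python
def pr_curve(detections, num_gt):
    """Build the PR points for one class.

    detections: (score, is_tp) pairs pooled over all images, where is_tp
    says whether the detection matched a not-yet-matched ground-truth
    object at the chosen IoU threshold.
    num_gt: total number of ground-truth objects of this class.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Highest confidence first.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    recalls, precisions = [], []
    tp = fp = 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    return recalls, precisions


# Two ground-truth objects; three detections as (score, matched?).
recalls, precisions = pr_curve([(0.9, True), (0.8, False), (0.6, True)], num_gt=2)
print(recalls)     # [0.5, 0.5, 1.0]
print(precisions)  # [1.0, 0.5, 0.6666666666666666]
```

Integrating these points (for example with all-point interpolation) gives the class AP.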

3. Criteria for computing recall and precision

Two quantities decide how detections are counted when computing recall and precision: IoU (intersection over union) and the confidence score.

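IoU measures how much a ground-truth box and a predicted box overlap: the area of their intersection divided by the area of their union. A threshold is set on it: a prediction whose IoU with a ground-truth object reaches the threshold (0.5 for VOC-style mAP) counts as a correct detection (TP), and each ground-truth object can be matched only once; otherwise the prediction is an FP.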
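A minimal IoU sketch for axis-aligned boxes. It assumes the (y1, x1, y2, x2) corner order that Matterport Mask R-CNN uses for gt_bbox and r['rois']; the helper name box_iou is hypothetical, and no "+1 pixel" correction is applied.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (y1, x1, y2, x2)."""
    # Corners of the intersection rectangle.
    y1 = max(box_a[0], box_b[0])
    x1 = max(box_a[1], box_b[1])
    y2 = min(box_a[2], box_b[2])
    x2 = min(box_a[3], box_b[3])
    # Clamp at 0 so disjoint boxes have zero intersection.
    inter = max(0, y2 - y1) * max(0, x2 - x1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


gt = (0, 0, 10, 10)
pred = (0, 5, 10, 15)   # shifted right by half a box
iou = box_iou(gt, pred)
print(iou)         # 0.3333333333333333
print(iou >= 0.5)  # False: this prediction is an FP at IoU = 0.5
```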

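The confidence score is the score the detector outputs for each predicted box (YOLOv3 outputs one, and Mask R-CNN returns it as r['scores']). Algorithms such as NMS (non-maximum suppression) use it to decide which overlapping boxes to keep, and when computing AP the detections are ranked by this score so that precision and recall can be traced out from the most to the least confident detection.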
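To show how the confidence score and IoU work together in NMS, here is a greedy plain-Python sketch: keep the highest-scoring box, drop the remaining boxes whose IoU with it exceeds a threshold, and repeat. It is an illustration only, not the code Mask R-CNN or YOLOv3 actually runs; the names nms and _iou and the default iou_threshold=0.5 are assumptions.

```python
def _iou(a, b):
    """IoU of two (y1, x1, y2, x2) boxes."""
    inter = max(0, min(a[2], b[2]) - max(a[0], b[0])) * \
        max(0, min(a[3], b[3]) - max(a[1], b[1]))
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of kept boxes."""
    # Candidate indices, most confident first.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Suppress boxes that overlap the kept box too much.
        order = [i for i in order if _iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU 0.81
```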

III. Source code

```python
# -*- coding: utf-8 -*-
import os
import sys
import random
import math
import re
import time
import numpy as np
import cv2
import matplotlib
import matplotlib.pyplot as plt
import tensorflow as tf
from mrcnn.config import Config
# import utils
from mrcnn import model as modellib, utils
# visualize1 is a custom module; the stock repo provides mrcnn.visualize
# with the same display_instances() function.
from mrcnn import visualize1
import yaml
from mrcnn.model import log
from PIL import Image

# Root directory of the project
ROOT_DIR = os.getcwd()
MODEL_DIR = os.path.join(ROOT_DIR, "logs")
iter_num = 0

# Local path to trained weights file
COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")
# Download COCO trained weights from Releases if needed
if not os.path.exists(COCO_MODEL_PATH):
    utils.download_trained_weights(COCO_MODEL_PATH)


class ShapesConfig(Config):
    """Configuration for the DWTT fracture (fragility) dataset.

    Derives from the base Config class; adapted from the toy shapes example.
    """
    # Give the configuration a recognizable name
    NAME = "shapes"

    # Train on 1 GPU with 1 image per GPU (batch size = GPUs * images/GPU = 1).
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

    # Number of classes (including background)
    NUM_CLASSES = 1 + 1  # background + fragility

    # Limits for the short side and the long side; together they
    # determine the image shape.
    IMAGE_MIN_DIM = 256
    IMAGE_MAX_DIM = 512

    # Anchor side lengths in pixels: 48, 96, 192, 384, 768
    # (the toy example's scales multiplied by 6).
    RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)

    # Reduce training ROIs per image because the images are small and have
    # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
    TRAIN_ROIS_PER_IMAGE = 50

    # Use a small epoch since the data is simple
    STEPS_PER_EPOCH = 50

    # Use small validation steps since the epoch is small
    VALIDATION_STEPS = 20


config = ShapesConfig()
config.display()


class DrugDataset(utils.Dataset):
    # Number of instances (objects) in the image: the largest label value in the mask
    def get_obj_index(self, image):
        n = np.max(image)
        return n

    # Parse the info.yaml written by labelme to get the instance label of each mask layer
    def from_yaml_get_class(self, image_id):
        info = self.image_info[image_id]
        with open(info['yaml_path']) as f:
            # safe_load: recent PyYAML versions require an explicit Loader for yaml.load()
            temp = yaml.safe_load(f)
        labels = temp['label_names']
        del labels[0]  # drop the background label (first entry)
        return labels

    # Custom draw_mask: one binary layer per instance
    def draw_mask(self, num_obj, mask, image, image_id):
        info = self.image_info[image_id]
        for index in range(num_obj):
            for i in range(info['width']):
                for j in range(info['height']):
                    at_pixel = image.getpixel((i, j))
                    if at_pixel == index + 1:
                        mask[j, i, index] = 1
        return mask

    def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):
        """Register the first `count` images of imglist with their mask and yaml paths.

        count: number of images to load.
        img_floder, mask_floder: folders holding the images and the label masks.
        """
        # Add classes
        self.add_class("shapes", 1, "fragility")  # brittle (fragile) region
        for i in range(count):
            # Read the labelme image to get its width and height
            filestr = imglist[i].split(".")[0]
            mask_path = mask_floder + "/" + filestr + ".png"
            yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"
            print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
            cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
            self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],
                           width=cv_img.shape[1], height=cv_img.shape[0],
                           mask_path=mask_path, yaml_path=yaml_path)

    # Custom load_mask
    def load_mask(self, image_id):
        """Load the instance masks and class IDs for the given image ID."""
        global iter_num
        print("image_id", image_id)
        info = self.image_info[image_id]
        count = 1  # number of objects
        img = Image.open(info['mask_path'])
        num_obj = self.get_obj_index(img)
        mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
        mask = self.draw_mask(num_obj, mask, img, image_id)
        # Occlusion handling kept from the shapes example. With count = 1,
        # range(count - 2, -1, -1) is empty, so this loop never runs.
        occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
        for i in range(count - 2, -1, -1):
            mask[:, :, i] = mask[:, :, i] * occlusion
            occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
        labels = self.from_yaml_get_class(image_id)
        labels_form = []
        for i in range(len(labels)):
            if labels[i].find("fragility") != -1:
                labels_form.append("fragility")
        class_ids = np.array([self.class_names.index(s) for s in labels_form])
        return mask, class_ids.astype(np.int32)


def get_ax(rows=1, cols=1, size=8):
    """Return a Matplotlib Axes array to be used in all visualizations.

    Provides a central point to control graph sizes; change the default
    size attribute to control the size of rendered images.
    """
    _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
    return ax


# Basic settings
dataset_root_path = "dataset_fragility256512/"
img_floder = dataset_root_path + "pic"
mask_floder = dataset_root_path + "cv2_mask"
# yaml_floder = dataset_root_path
imglist = os.listdir(img_floder)
count = len(imglist)

# Prepare the train and val datasets
dataset_train = DrugDataset()
dataset_train.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
dataset_train.prepare()

# NOTE: the val set is the first 7 images of the same folder. Make sure they
# were not used to train the loaded weights, or the mAP will be optimistic.
dataset_val = DrugDataset()
dataset_val.load_shapes(7, img_floder, mask_floder, imglist, dataset_root_path)
dataset_val.prepare()

# mAP
# Compute VOC-style mAP @ IoU=0.5
class InferenceConfig(ShapesConfig):
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1
    # Config defaults to BACKBONE = "resnet101". If the weights were trained
    # with ResNet-50 (the folder name below suggests so), set BACKBONE = "resnet50".

inference_config = InferenceConfig()

# Recreate the model in inference mode
model = modellib.MaskRCNN(mode="inference",
                          config=inference_config,
                          model_dir=MODEL_DIR)

# Get path to saved weights
# Either set a specific path or find last trained weights
# model_path = os.path.join(ROOT_DIR, ".h5 file name here")
# model_path = model.find_last()
model_path = os.path.join("E:/graduation/Mask_RCNN-master/Mask_RCNN-master/maskrcnn_fragility/maskrcnn_fragility/linux导出训练模型/20191206(resnet50)", "mask_rcnn_shapes_0026.h5")  # change to your own trained model
# model_path = os.path.join("E:/graduation/Mask_RCNN-master/Mask_RCNN-master/maskrcnn_fragility/maskrcnn_fragility/logs", "mask_rcnn_shapes_0023.h5")

# Load trained weights
print("Loading weights from ", model_path)
model.load_weights(model_path, by_name=True)

# Test on a random image
image_id = random.choice(dataset_val.image_ids)
original_image, image_meta, gt_class_id, gt_bbox, gt_mask = \
    modellib.load_image_gt(dataset_val, inference_config,
                           image_id, use_mini_mask=False)
log("original_image", original_image)
log("image_meta", image_meta)
log("gt_class_id", gt_class_id)
log("gt_bbox", gt_bbox)
log("gt_mask", gt_mask)
visualize1.display_instances(original_image, gt_bbox, gt_mask, gt_class_id,
                             dataset_train.class_names, figsize=(8, 8))

results = model.detect([original_image], verbose=1)
r = results[0]
visualize1.display_instances(original_image, r['rois'], r['masks'], r['class_ids'],
                             dataset_val.class_names, r['scores'], ax=get_ax())

# Draw 51 image IDs. np.random.choice samples WITH replacement by default,
# so with only 7 validation images many IDs repeat; to score each image
# exactly once, iterate over dataset_val.image_ids instead.
image_ids = np.random.choice(dataset_val.image_ids, 51)
APs = []
for image_id in image_ids:
    # Load image and ground truth data
    image, image_meta, gt_class_id, gt_bbox, gt_mask = \
        modellib.load_image_gt(dataset_val, inference_config,
                               image_id, use_mini_mask=False)
    molded_images = np.expand_dims(modellib.mold_image(image, inference_config), 0)  # not used below
    # Run object detection
    results = model.detect([image], verbose=0)
    r = results[0]
    # Compute AP (iou_threshold defaults to 0.5)
    AP, precisions, recalls, overlaps = \
        utils.compute_ap(gt_bbox, gt_class_id, gt_mask,
                         r["rois"], r["class_ids"], r["scores"], r['masks'])
    APs.append(AP)

print("AP: ", APs)
print("mAP: ", np.mean(APs))
```
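One detail worth knowing when reading the printed mAP: to my understanding, Matterport's utils.compute_ap matches predictions to ground truth by mask overlap (via compute_overlaps_masks) rather than by box IoU, so the number is a mask AP at IoU ≥ 0.5, averaged over the sampled images. The hypothetical helper below shows mask IoU on tiny 0/1 grids; real masks would be the H×W×N arrays gt_mask and r['masks'].

```python
def mask_iou(mask_a, mask_b):
    """IoU of two equal-sized binary masks given as 2-D lists of 0/1."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if (a and b) else 0   # pixel in both masks
            union += 1 if (a or b) else 0    # pixel in either mask
    return inter / union if union else 0.0


gt_mask = [[1, 1, 0],
           [1, 1, 0],
           [0, 0, 0]]
pred_mask = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
print(mask_iou(gt_mask, pred_mask))  # 0.3333333333333333
```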

I built this by modifying my existing training script, train_test.py; you can adapt it by comparing against the train_shapes.ipynb notebook.

Download link: https://download.csdn.net/download/hopegrace/12033535

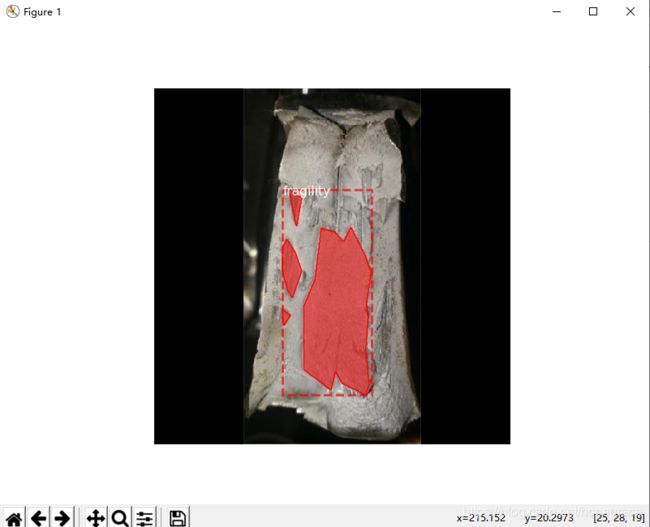

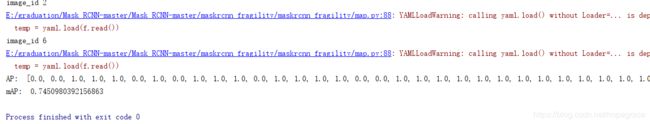

IV. Detection results

Predictions were computed on 51 steel fracture images.

mAP result:

Download link for the trained model that identifies brittle regions on steel drop-weight tear test (DWTT) fracture surfaces:

https://download.csdn.net/download/hopegrace/12033591