SVM: Nonlinear Kernels

Nonlinear SVM kernels:

- Polynomial (poly)

- Radial basis function (rbf)

- Sigmoid (sigmoid)

- Custom kernels
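
To make the kernel names above concrete, here is a minimal sketch of the three built-in nonlinear kernels written in plain Python. It assumes the parameterization that scikit-learn documents for SVC (`gamma`, `coef0`, `degree`); the helper names `dot`, `poly_kernel`, `rbf_kernel` and `sigmoid_kernel` are made up for this illustration and are not part of any library.

```python
import math

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def poly_kernel(a, b, gamma=1.0, coef0=0.0, degree=3):
    """Polynomial kernel: (gamma * <a, b> + coef0) ** degree."""
    return (gamma * dot(a, b) + coef0) ** degree

def rbf_kernel(a, b, gamma=1.0):
    """RBF kernel: exp(-gamma * ||a - b||^2)."""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * squared_distance)

def sigmoid_kernel(a, b, gamma=1.0, coef0=0.0):
    """Sigmoid kernel: tanh(gamma * <a, b> + coef0)."""
    return math.tanh(gamma * dot(a, b) + coef0)

a = [1.0, 2.0]
b = [2.0, 0.0]
print('poly   :', poly_kernel(a, b, gamma=1.0, coef0=1.0, degree=2))
print('rbf    :', rbf_kernel(a, b, gamma=0.5))
print('sigmoid:', sigmoid_kernel(a, b))
```

Note that only the polynomial kernel uses `degree`, and that the rbf kernel of a point with itself is always 1.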

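I. Comparing the performance of the linear and rbf kernels

# Compare several kernels and parameter settings, then pick the best-performing SVM model

# Load the data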

from sklearn import datasets

digits = datasets.load_digits()

X,y = digits.data, digits.target

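# Split the data into training and test sets, then scale the features

# sklearn.cross_validation was removed; these helpers now live in sklearn.model_selection
from sklearn.model_selection import train_test_split, cross_val_score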

from sklearn.preprocessing import MinMaxScaler

# We keep 30% random examples for test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

# We scale the data in the range [-1,1]

scaling = MinMaxScaler(feature_range=(-1, 1)).fit(X_train)

X_train = scaling.transform(X_train)

X_test = scaling.transform(X_test)

from sklearn.svm import SVC

from sklearn.model_selection import GridSearchCV

import numpy as np

learning_algo = SVC(class_weight='balanced', random_state=101)

search_space = [{'kernel': ['linear'], 'C': np.logspace(-3, 3, 7)},

{'kernel': ['rbf'], 'C': np.logspace(-3, 3, 7), 'gamma': np.logspace(-3, 2, 6)}]  # 'degree' only affects the poly kernel, so it is omitted here

gridsearch = GridSearchCV(learning_algo, param_grid=search_space, scoring='accuracy', refit=True, cv=10, n_jobs=-1)

gridsearch.fit(X_train,y_train)

cv_performance = gridsearch.best_score_

test_performance = gridsearch.score(X_test, y_test)

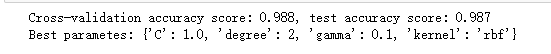

print ('Cross-validation accuracy score: %0.3f, test accuracy score: %0.3f' % (cv_performance,test_performance))

print ('Best parameters: %s' % gridsearch.best_params_)

Result: the rbf kernel wins.
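
To see how far apart the two kernels actually are, one option is to read the best mean cross-validation score per kernel out of `cv_results_`. The sketch below is a scaled-down version of the search above: the smaller grid, `cv=3`, and the names `small_grid` and `best_by_kernel` are assumptions chosen so that it runs quickly, so its numbers will not match the full search.

```python
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC

X, y = datasets.load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=101)
scaler = MinMaxScaler(feature_range=(-1, 1)).fit(X_train)
X_train = scaler.transform(X_train)

# Deliberately small grid (an assumption for speed, not the grid used above)
small_grid = [
    {'kernel': ['linear'], 'C': [0.1, 1, 10]},
    {'kernel': ['rbf'], 'C': [1, 10], 'gamma': [0.001, 0.01]},
]
search = GridSearchCV(SVC(class_weight='balanced'), param_grid=small_grid,
                      scoring='accuracy', cv=3)
search.fit(X_train, y_train)

# Keep the best mean CV accuracy seen for each kernel
best_by_kernel = {}
for params, score in zip(search.cv_results_['params'],
                         search.cv_results_['mean_test_score']):
    kernel = params['kernel']
    best_by_kernel[kernel] = max(best_by_kernel.get(kernel, 0.0), score)

for kernel, score in sorted(best_by_kernel.items()):
    print('%s: best mean CV accuracy %0.3f' % (kernel, score))
```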

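II. Regression with SVR

Note: the epsilon parameter sets how much error is tolerated without penalty. A large epsilon implies fewer support vectors, while a small epsilon requires many.

In effect, epsilon trades off overfitting against underfitting. As a rule of thumb, the search space [0, 0.01, 0.1, 0.5, 1, 2, 4] works well.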
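
The link between epsilon and the number of support vectors can be checked on a toy problem. The sketch below fits SVR to a noisy sine curve with three epsilon values and counts the support vectors each time. The synthetic data and the fixed `C=1.0`, `gamma=1.0` are assumptions made only for this illustration.

```python
import numpy as np
from sklearn.svm import SVR

# Synthetic 1-D regression problem: a sine curve plus Gaussian noise
rng = np.random.RandomState(101)
X = np.sort(rng.uniform(0, 6, size=200)).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=200)

n_support = {}
for eps in [0.01, 0.1, 0.5]:
    model = SVR(kernel='rbf', C=1.0, gamma=1.0, epsilon=eps).fit(X, y)
    # support_ holds the indices of the training points used as support vectors
    n_support[eps] = len(model.support_)
    print('epsilon=%0.2f -> %d support vectors' % (eps, n_support[eps]))
```

Points that fall strictly inside the epsilon tube do not become support vectors, which is why a wider tube leaves fewer of them.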

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.preprocessing import MinMaxScaler

from sklearn.model_selection import GridSearchCV

from sklearn import datasets

# Note: load_boston was removed in scikit-learn 1.2, so this line needs an earlier release
boston = datasets.load_boston()

X,y = boston.data, boston.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

scaling = MinMaxScaler(feature_range=(-1, 1)).fit(X_train)

X_train = scaling.transform(X_train)

X_test = scaling.transform(X_test)

from sklearn.svm import SVR

learning_algo = SVR()

search_space = [{'kernel': ['linear'], 'C': np.logspace(-3, 2, 6), 'epsilon': [0, 0.01, 0.1, 0.5, 1, 2, 4]},

{'kernel': ['rbf'], 'C': np.logspace(-3, 3, 7), 'gamma': np.logspace(-3, 2, 6), 'epsilon': [0, 0.01, 0.1, 0.5, 1, 2, 4]}]  # 'degree' only affects the poly kernel, so it is omitted here

gridsearch = GridSearchCV(learning_algo, param_grid=search_space, refit=True, scoring= 'r2', cv=10, n_jobs=-1)

gridsearch.fit(X_train,y_train)

cv_performance = gridsearch.best_score_

test_performance = gridsearch.score(X_test, y_test)

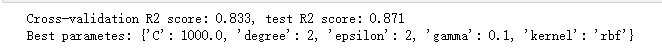

print ('Cross-validation R2 score: %0.3f, test R2 score: %0.3f' % (cv_performance,test_performance))

print ('Best parameters: %s' % gridsearch.best_params_)

The results are as follows:

The best epsilon is 2, and performance is measured with R squared (R2).
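
For reference, the R2 score used above is 1 minus the ratio of the residual sum of squares to the total sum of squares. The following is a minimal plain-Python sketch of that formula. The function name `r2_score_manual` and the sample values are made up for illustration and are not results from the grid search.

```python
def r2_score_manual(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

y_true = [3.0, 5.0, 7.0, 9.0]

perfect = r2_score_manual(y_true, y_true)                 # exact predictions
baseline = r2_score_manual(y_true, [6.0, 6.0, 6.0, 6.0])  # always predict the mean
close = r2_score_manual(y_true, [2.0, 5.0, 7.0, 10.0])    # two predictions off by 1

print('perfect: %0.3f, mean baseline: %0.3f, close: %0.3f' % (perfect, baseline, close))
```

A model that always predicts the mean scores 0, so R2 can be read as the improvement over that baseline.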

III. Building a stochastic solution with SVM

SVC versus LinearSVC

# Compare LinearSVC and SVC

from sklearn.datasets import make_classification

import numpy as np

X,y = make_classification(n_samples=10**4, n_features=15, n_informative=10, random_state=101)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

from sklearn.svm import SVC, LinearSVC

slow_SVM = SVC(kernel="linear", random_state=101)

fast_SVM = LinearSVC(random_state=101, loss='hinge')

slow_SVM.fit(X_train, y_train)

fast_SVM.fit(X_train, y_train)

print ('SVC test accuracy score: %0.3f' % slow_SVM.score(X_test, y_test))

print ('LinearSVC test accuracy score: %0.3f' % fast_SVM.score(X_test, y_test))

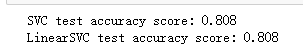

The results show:

The two algorithms produce the same results.

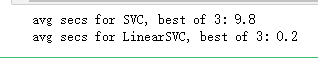

Next, compare the speed of the two algorithms:

import timeit

X,y = make_classification(n_samples=10**4, n_features=15, n_informative=10, random_state=101)

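# timeit.repeat runs the fit 3 times (once per repeat); min() keeps the best run, matching the "best of 3" label
print ('avg secs for SVC, best of 3: %0.1f' % min(timeit.repeat("slow_SVM.fit(X, y)", "from __main__ import slow_SVM, X, y", repeat=3, number=1)))

print ('avg secs for LinearSVC, best of 3: %0.1f' % min(timeit.repeat("fast_SVM.fit(X, y)", "from __main__ import fast_SVM, X, y", repeat=3, number=1)))

LinearSVC is much faster: 9.8 s versus 0.2 s, a speedup of 9.8 / 0.2 = 49x.

Now see what happens when the sample size keeps growing (here it is doubled to 20,000):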

import timeit

X,y = make_classification(n_samples=20000, n_features=15, n_informative=10, random_state=101)

# timeit.repeat runs the fit 3 times (once per repeat); min() keeps the best run, matching the "best of 3" label
print ('avg secs for SVC, best of 3: %0.1f' % min(timeit.repeat("slow_SVM.fit(X, y)", "from __main__ import slow_SVM, X, y", repeat=3, number=1)))

print ('avg secs for LinearSVC, best of 3: %0.1f' % min(timeit.repeat("fast_SVM.fit(X, y)", "from __main__ import fast_SVM, X, y", repeat=3, number=1)))

The gap keeps widening.
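
The same comparison can be run over several sample sizes in one loop. The sketch below is a scaled-down variant that assumes much smaller sizes (1000 to 4000) so it finishes quickly, and it uses `time.perf_counter` instead of `timeit`. The helper `fit_seconds` is a made-up name for this illustration, and the measured times will vary by machine.

```python
import time
from sklearn.datasets import make_classification
from sklearn.svm import SVC, LinearSVC

def fit_seconds(model, X, y):
    """Return the wall-clock seconds needed for a single fit."""
    start = time.perf_counter()
    model.fit(X, y)
    return time.perf_counter() - start

timings = []
for n in [1000, 2000, 4000]:
    X, y = make_classification(n_samples=n, n_features=15,
                               n_informative=10, random_state=101)
    slow = fit_seconds(SVC(kernel='linear', random_state=101), X, y)
    # dual=True is set explicitly because hinge loss is only supported in the dual form
    fast = fit_seconds(LinearSVC(random_state=101, loss='hinge', dual=True), X, y)
    timings.append((n, slow, fast))
    print('n=%d  SVC: %0.2fs  LinearSVC: %0.2fs' % (n, slow, fast))
```

LinearSVC may print a convergence warning on unscaled data. That does not affect the timing comparison, but the features should be scaled before the accuracy is trusted.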

IV. Comparing SGD with SVM

1. Classification with LinearSVC

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.svm import LinearSVC

import timeit

from sklearn.linear_model import SGDClassifier

X,y = make_classification(n_samples=10**6, n_features=15, n_informative=10, random_state=101)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

# loss='l1' is the old, removed name for the hinge loss; current releases expect loss='hinge'
fast_SVM = LinearSVC(random_state=101, penalty='l2', loss='hinge', dual=True)

fast_SVM.fit(X_train, y_train)

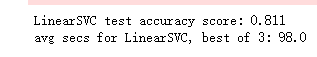

print ('LinearSVC test accuracy score: %0.3f' % fast_SVM.score(X_test, y_test))

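# timeit.repeat runs the fit 3 times (once per repeat); min() keeps the best run, matching the "best of 3" label
print ('avg secs for LinearSVC, best of 3: %0.1f' % min(timeit.repeat("fast_SVM.fit(X_train, y_train)", "from __main__ import fast_SVM, X_train, y_train", repeat=3, number=1)))

The results are as follows:

Test-set accuracy is 0.811, and training takes 98 seconds.

2. Now try SGDClassifier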

stochastic_SVM = SGDClassifier(loss='hinge',max_iter=5, shuffle=True, random_state=101)

stochastic_SVM.fit(X_train, y_train)

print ('SGDClassifier test accuracy score: %0.3f' % stochastic_SVM.score(X_test, y_test))

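# timeit.repeat runs the fit 3 times (once per repeat); min() keeps the best run, matching the "best of 3" label
print ('avg secs for SGDClassifier, best of 3: %0.1f' % min(timeit.repeat("stochastic_SVM.fit(X_train, y_train)", "from __main__ import stochastic_SVM, X_train, y_train", repeat=3, number=1)))

SGDClassifier test accuracy score: 0.799

avg secs for SGDClassifier, best of 3: 0.7

Compared with LinearSVC, SGDClassifier loses little accuracy (0.799 versus 0.811) but trains more than 100 times faster (0.7 s versus 98 s).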
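
The speed comes from the way stochastic gradient descent works: it updates the weights one sample at a time instead of solving the whole optimization problem at once. The following plain-Python sketch shows that idea for the hinge loss with an L2 penalty. It is a simplified illustration with a constant learning rate, not scikit-learn's actual implementation, and the names `sgd_hinge_fit` and `predict` as well as the toy data are made up here.

```python
import random

def sgd_hinge_fit(X, y, alpha=0.001, lr=0.1, epochs=20, seed=101):
    """Fit a linear classifier by SGD on hinge loss + L2 penalty.

    Labels must be -1 or +1. alpha is the L2 strength, lr a constant step size.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the samples in a new random order each epoch
        for i in order:
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            # Gradient step for the L2 penalty: shrink the weights slightly
            w = [wj * (1.0 - lr * alpha) for wj in w]
            # The hinge loss only contributes a gradient when the margin is below 1
            if margin < 1.0:
                w = [wj + lr * y[i] * xj for wj, xj in zip(w, X[i])]
                b += lr * y[i]
    return w, b

def predict(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Tiny linearly separable toy set
X = [[2.0, 2.0], [3.0, 3.0], [2.0, 3.0], [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]]
y = [1, 1, 1, -1, -1, -1]

w, b = sgd_hinge_fit(X, y)
predictions = [predict(w, b, x) for x in X]
print('predictions:', predictions)
```

Each update touches a single sample, so the cost of one pass grows linearly with the number of samples.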

In summary, ranking these classifiers by training speed:

SGDClassifier > LinearSVC > SVC