Scraping East Money (eastmoney.com) with Python

Goal

Scrape all annual-report earnings records for one year.

The tricky part

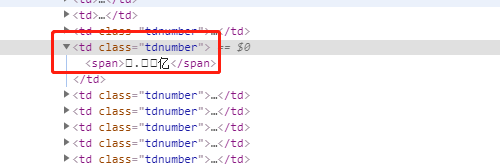

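The numbers are protected by font encryption: the page draws them with a custom web font, so the characters in the response are not the real digits.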

Fortunately, the font's character mapping is returned in the JSON as well.

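Here is part of a sample JSON response (the obfuscated digit characters are not visible in this copy, so fields such as basiceps show only the decimal point):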

{
    "scode": "601991",
    "sname": "大唐发电",
    "securitytype": "A股",
    "trademarket": "上交所主板",
    "latestnoticedate": "2020-04-30T00:00:00",
    "reportdate": "2020-03-31T00:00:00",
    "publishname": "电力行业",
    "securitytypecode": "058001001",
    "trademarketcode": "069001001001",
    "firstnoticedate": "2020-04-30T00:00:00",
    "basiceps": ".",
    "cutbasiceps": "-",
    "totaloperatereve": "",
    "ystz": "-.",
    "yshz": "-.",
    "parentnetprofit": "",
    "sjltz": ".",
    "sjlhz": ".",
    "roeweighted": ".",
    "bps": ".",
    "mgjyxjje": ".",
    "xsmll": ".",
    "assigndscrpt": "-",
    "gxl": "-"
}],
font: {
    "WoffUrl": "http://data.eastmoney.com/font/0db/0db56b02cc9745e287dace49e18e3d09.woff",
    "EotUrl": "http://data.eastmoney.com/font/0db/0db56b02cc9745e287dace49e18e3d09.eot",
    "FontMapping": [{
        "code": "",
        "value": 1
    }, {
        "code": "",
        "value": 2
    }, {
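Note that the full response is a JavaScript assignment rather than pure JSON: the js= parameter in the request URL asks for the shape `var DlYxWtOD={pages:(tp),data: (x),font:(font)}`, and keys such as `pages`, `data` and `font` are unquoted, so `json.loads` cannot parse the payload as a whole. A minimal sketch of cutting it apart with regular expressions, run on a made-up sample that only assumes this wrapper shape; `\ue001` and `\ue002` are hypothetical stand-ins for the obfuscated characters, and `split_response` is an illustrative name:

```python
import json
import re

# Made-up payload in the shape requested by js=var DlYxWtOD={pages:(tp),data: (x),font:(font)}.
# \ue001 and \ue002 are hypothetical stand-ins for the obfuscated digit characters.
SAMPLE = (
    'var DlYxWtOD={pages:3,data: [{"scode": "601991", "bps": "\ue001.\ue002"}],'
    'font:{"WoffUrl": "x.woff", "FontMapping": [{"code": "\ue001", "value": 1}, '
    '{"code": "\ue002", "value": 2}]}}'
)


def split_response(text):
    """Cut the JS wrapper apart: total page count, data records, font mapping."""
    pages = int(re.search(r"pages:(\d+)", text).group(1))
    # Anchor on ",font:" (assumed to appear once) so the match ends at the data array
    records = json.loads(re.search(r"data: (\[.*\]),font:", text).group(1))
    # FontMapping entries contain no "]", so a non-greedy match stops at its end
    mapping = json.loads(re.search(r'"FontMapping":\s*(\[.*?\])', text).group(1))
    return pages, records, mapping


if __name__ == "__main__":
    pages, records, mapping = split_response(SAMPLE)
    print(pages, records[0]["scode"], len(mapping))  # 3 601991 2
```

The real response may differ in spacing or field order, so the patterns should be checked against an actual reply before relying on them.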

So the approach is clear: extract the data JSON and the font-mapping JSON with regular expressions.

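json_str1 = ct.getRegex(r'data: (\[.*\]),', page_buf)
data_dict = json.loads(json_str1)  # a list of records
font_json = ct.getRegex(r'"FontMapping":\s*(\[.*\])', page_buf)
font_list = json.loads(font_json)
font_dict = {font['code']: font['value'] for font in font_list}  # obfuscated character -> real digit

for data in data_dict:
    mgjyxjje = data['mgjyxjje']  # operating cash flow per share
    mgjyxjje = parser_num(mgjyxjje, font_dict)
    print(mgjyxjje)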

Then replace each obfuscated character with its real digit, one mapping at a time, using str.replace:

def parser_num(num_str, code_map):
    # Swap every obfuscated character for its real digit
    for code in code_map:
        num_str = num_str.replace(code, str(code_map[code]))
    try:
        result = float(num_str)
    except ValueError:
        result = num_str  # not a number, e.g. '-' or descriptive text
    return result

Complete reference code:

# -*- coding: utf-8 -*-
"""
File Name: eastmoney
Description :
Author : meng_zhihao
mail : 312141830@qq.com
date: 2020/5/2
"""
import json
import time

from crawl_tool_for_py3 import crawlerTool as ct

def parser_num(num_str, code_map):
    # Swap every obfuscated character for its real digit
    for code in code_map:
        num_str = num_str.replace(code, str(code_map[code]))
    try:
        result = float(num_str)
    except ValueError:
        result = num_str  # not a number, e.g. '-' or descriptive text
    return result

def main():
    # Header row; the column order matches the data rows yielded below
    yield ['Stock code', 'Stock name', 'Operating revenue', 'Revenue YoY growth', 'Revenue QoQ growth',
           'Net profit', 'Net profit YoY growth', 'Net profit QoQ growth', 'Net assets per share (CNY)',
           'ROE (%)', 'Operating cash flow per share (CNY)', 'Gross margin', 'Industry',
           'Latest announcement date', 'Profit distribution']
    for i in range(1, 80):  # pages 1..79, 50 records per page (ps=50)
        page_buf = ct.get('http://dcfm.eastmoney.com/em_mutisvcexpandinterface/api/js/get?type=YJBB21_YJBB&token=70f12f2f4f091e459a279469fe49eca5&st=latestnoticedate&sr=-1&p='+str(i)+'&ps=50&js=var%20DlYxWtOD={pages:(tp),data:%20(x),font:(font)}&filter=(securitytypecode%20in%20(%27058001001%27,%27058001002%27))(reportdate=^2019-12-31^)&rt=52947736')
        # The response is a JS assignment, not pure JSON, so cut out the parts with regexes
        json_str1 = ct.getRegex(r'data: (\[.*\]),', page_buf)
        if not json_str1:
            break  # unexpected response: stop instead of crashing in json.loads
        data_dict = json.loads(json_str1)  # a list of records
        font_json = ct.getRegex(r'"FontMapping":\s*(\[.*\])', page_buf)
        font_list = json.loads(font_json)
        # Each response carries its own font mapping, so rebuild it for every page
        font_dict = {}
        for font in font_list:
            font_dict[font['code']] = font['value']  # obfuscated character -> real digit

        for data in data_dict:
            sname = data['sname']  # stock name
            print(sname)
            scode = data['scode']  # stock code
            latestnoticedate = data['latestnoticedate']  # latest announcement date
            basiceps = parser_num(data['basiceps'], font_dict)  # basic EPS (parsed, not written to the CSV)
            bps = parser_num(data['bps'], font_dict)  # net assets per share
            mgjyxjje = parser_num(data['mgjyxjje'], font_dict)  # operating cash flow per share
            roeweighted = parser_num(data['roeweighted'], font_dict)  # weighted ROE
            sjlhz = parser_num(data['sjlhz'], font_dict)  # net profit QoQ growth
            sjltz = parser_num(data['sjltz'], font_dict)  # net profit YoY growth
            xsmll = parser_num(data['xsmll'], font_dict)  # gross margin
            yshz = parser_num(data['yshz'], font_dict)  # revenue QoQ growth
            ystz = parser_num(data['ystz'], font_dict)  # revenue YoY growth
            totaloperatereve = parser_num(data['totaloperatereve'], font_dict)  # operating revenue
            parentnetprofit = parser_num(data['parentnetprofit'], font_dict)  # net profit attributable to the parent
            publishname = data['publishname']  # industry
            # Profit-distribution text; its digits may be obfuscated too, so decode it
            # (parser_num returns the decoded string when it is not a number)
            assigndscrpt = parser_num(data.get('assigndscrpt', ''), font_dict)
            yield [scode, sname, totaloperatereve, ystz, yshz, parentnetprofit, sjltz, sjlhz,
                   bps, roeweighted, mgjyxjje, xsmll, publishname, latestnoticedate, assigndscrpt]
        time.sleep(1)  # wait one second between page requests

if __name__ == '__main__':
    datas = main()  # a generator: rows are written to the CSV as they are scraped
    ct.writer_to_csv(datas, "2019.csv")
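The script above hard-codes both the report date (2019-12-31) and the page range `range(1, 80)`. A hedged sketch of parameterizing them: rebuild the same query for any year with `urllib.parse.urlencode`, and stop at the `pages` count reported in the first reply. The parameter values are copied from the original URL; whether the endpoint and its `token` still work is not verified here. `urlencode` percent-encodes more characters than the hand-written URL (for example `(` and `^`), which a standard server should decode to the same values, but that is also an assumption. `build_url`, `iter_pages` and the `fetch` callable (standing in for `ct.get`) are hypothetical names.

```python
import re
from urllib.parse import quote, urlencode

BASE = "http://dcfm.eastmoney.com/em_mutisvcexpandinterface/api/js/get"


def build_url(year, page, page_size=50):
    """Rebuild the original query for the annual report dated <year>-12-31."""
    params = {
        "type": "YJBB21_YJBB",
        "token": "70f12f2f4f091e459a279469fe49eca5",
        "st": "latestnoticedate",
        "sr": -1,
        "p": page,
        "ps": page_size,
        "js": "var DlYxWtOD={pages:(tp),data: (x),font:(font)}",
        "filter": "(securitytypecode in ('058001001','058001002'))"
                  f"(reportdate=^{year}-12-31^)",
        "rt": 52947736,
    }
    # quote (not the default quote_plus) encodes spaces as %20, like the original URL
    return BASE + "?" + urlencode(params, quote_via=quote)


def iter_pages(fetch, year):
    """Yield decoded page bodies, stopping at the 'pages' count in the first reply."""
    page, total = 1, 1
    while page <= total:
        body = fetch(build_url(year, page))
        text = body.decode("utf8") if isinstance(body, bytes) else body
        match = re.search(r"pages:(\d+)", text)
        if page == 1 and match:
            total = int(match.group(1))
        yield text
        page += 1
```

A caller would loop with something like `for page_buf in iter_pages(ct.get, 2019):`, parse each body as in `main()`, and keep the `time.sleep(1)` between requests.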

My helper module, crawl_tool_for_py3.py:

# -*- coding: utf-8 -*-
"""
File Name: crawl_tool_for_py3
Description :
Author : meng_zhihao
date: 2018/11/20
"""
import re

import requests
from lxml import etree

HEADERS = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3112.101 Safari/537.36'}

# General-purpose helpers
class crawlerTool:

    def __init__(self):
        self.session = requests.Session()

    def __del__(self):
        self.session.close()

    @staticmethod
    def get(url, proxies=None, cookies=None, referer=''):
        headers = dict(HEADERS)  # copy, so the shared HEADERS dict is never modified
        if referer:
            headers['Referer'] = referer
        rsp = requests.get(url, timeout=10, headers=headers, cookies=cookies, proxies=proxies)
        return rsp.content  # raw bytes

    @staticmethod
    def post(url, data):
        rsp = requests.post(url, data, timeout=10)
        return rsp.content

    def sget(self, url, cookies=None):
        # Like get(), but reuses this instance's session (cookies persist between calls)
        rsp = self.session.get(url, timeout=10, headers=HEADERS, cookies=cookies)
        return rsp.content  # raw bytes

    def spost(self, url, data):
        rsp = self.session.post(url, data, timeout=10, headers=HEADERS)
        return rsp.content

    # Run an XPath query. TODO: check the input type, or add exception handling
    @staticmethod
    def getXpath(xpath, content):  # XPath output seems to turn Chinese into &#xxxx; escapes; /text() yields unicode
        """
        :param xpath: XPath expression
        :param content: HTML document (str or bytes)
        :return: list of strings for text/attribute results, serialized HTML bytes for elements
        """
        tree = etree.HTML(content)
        out = []
        results = tree.xpath(xpath)
        for result in results:
            if 'ElementStringResult' in str(type(result)) or 'ElementUnicodeResult' in str(type(result)):
                out.append(result)
            else:
                out.append(etree.tostring(result, encoding="utf8", method="html"))
        return out

    @staticmethod
    def getXpath1(xpath, content):  # XPath output seems to turn Chinese into &#xxxx; escapes; /text() yields unicode
        # Like getXpath, but returns only the first result ('' if there is none)
        tree = etree.HTML(content)
        out = []
        results = tree.xpath(xpath)
        for result in results:
            if 'ElementStringResult' in str(type(result)) or 'ElementUnicodeResult' in str(type(result)):
                out.append(result)
            else:
                out.append(etree.tostring(result, encoding="utf8", method="html"))
        if out:
            return out[0]
        else:
            return ''

    @staticmethod
    def getRegex(regex, content):
        # Return group 1 of the first match, or '' when nothing matches
        if isinstance(content, bytes):
            content = content.decode('utf8')
        rs = re.search(regex, content)
        if rs:
            return rs.group(1)
        else:
            return ''

    @staticmethod
    def writer_to_csv(datas, file_path):
        import csv
        # utf_8_sig: UTF-8 with a byte-order mark; newline='' as the csv module requires
        with open(file_path, 'w', encoding='utf_8_sig', newline='') as fout:
            writer = csv.writer(fout)
            for data in datas:
                writer.writerow(data)
                fout.flush()

if __name__ == '__main__':
    # Quick manual test
    content = crawlerTool.get('https://www.amazon.ca/Adrianna-Papell-Womens-Sleeve-Illusion/dp/B00MHFQWUI/ref=sr_1_1?dchild=1&keywords=041877530&qid=1569500740&s=apparel&sr=1-1&th=1')
    content = content.decode('utf8')
    print(content)
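The utf_8_sig encoding in writer_to_csv prepends a UTF-8 byte-order mark, which Excel typically uses to recognize a CSV as UTF-8, so Chinese company names open correctly instead of as garbled text. Because `main()` is a generator, rows are written as they are scraped rather than collected in memory first. A small self-contained check of both points on made-up rows (`write_rows` and `demo_rows` are hypothetical names; the sketch flushes after each row so the file can be inspected during a long crawl):

```python
import csv
import os
import tempfile


def write_rows(rows, path):
    """BOM-prefixed UTF-8 CSV, written row by row from any iterable."""
    with open(path, "w", encoding="utf_8_sig", newline="") as fout:
        writer = csv.writer(fout)
        for row in rows:
            writer.writerow(row)
            fout.flush()  # make each row visible in the file right away


def demo_rows():
    """Stand-in for main(): a generator producing a header and one data row."""
    yield ["scode", "sname", "bps"]
    yield ["601991", "大唐发电", 2.5]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "demo.csv")
    write_rows(demo_rows(), path)
    with open(path, "rb") as f:
        print(f.read(3))  # b'\xef\xbb\xbf' (the UTF-8 BOM)
```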