【Active Learning - 04】Generative Adversarial Active Learning

Active learning blog series:

【Active Learning - 00】Key active learning resources, summarized and shared (papers with source code, some AL researchers): https://blog.csdn.net/Houchaoqun_XMU/article/details/85245714

【Active Learning - 01】A deeper look at "active learning": how to significantly reduce labeling cost: https://blog.csdn.net/Houchaoqun_XMU/article/details/80146710

【Active Learning - 02】Fine-tuning Convolutional Neural Networks for Biomedical Image Analysis: Actively and Incrementally: https://blog.csdn.net/Houchaoqun_XMU/article/details/78874834

【Active Learning - 03】Adaptive Active Learning for Image Classification: https://blog.csdn.net/Houchaoqun_XMU/article/details/89553144

【Active Learning - 04】Generative Adversarial Active Learning: https://blog.csdn.net/Houchaoqun_XMU/article/details/89631986

【Active Learning - 05】Adversarial Sampling for Active Learning: https://blog.csdn.net/Houchaoqun_XMU/article/details/89736607

【Active Learning - 06】An active learning system for image classification (theory): https://blog.csdn.net/Houchaoqun_XMU/article/details/89717028

【Active Learning - 07】An active learning system for image classification (practice: demo): https://blog.csdn.net/Houchaoqun_XMU/article/details/89955561

【Active Learning - 08】Active learning resources, collected and shared: https://blog.csdn.net/Houchaoqun_XMU/article/details/96210160

【Active Learning - 09】Active learning strategies and their application to image classification: research background and significance: https://blog.csdn.net/Houchaoqun_XMU/article/details/100177750

【Active Learning - 10】Overview of image classification techniques and active learning methods: https://blog.csdn.net/Houchaoqun_XMU/article/details/101126055

【Active Learning - 11】A noise-robust semi-supervised active learning framework: https://blog.csdn.net/Houchaoqun_XMU/article/details/102417465

【Active Learning - 12】A two-stage active learning method based on generative adversarial networks: https://blog.csdn.net/Houchaoqun_XMU/article/details/103093810

【Active Learning - 13】Summary and outlook & compiled reference list (The End…): https://blog.csdn.net/Houchaoqun_XMU/article/details/103094113

【2017.11.15】Generative Adversarial Active Learning

Related documents:

【2017】Generative Adversarial Active Learning.pdf

【2017】Generative Adversarial Active Learning_PPT.pdf

Study notes: GAN + active learning

Main steps:

- GANs synthesize informative training instances that are adapted to the current learner.

- Human oracles label these instances.

- The labeled data is added back to the training set to update the learner.

- This protocol is executed iteratively until the label budget is reached.
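
The steps above can be sketched as a loop. This is a minimal toy sketch, not the authors' implementation: `generator`, `oracle`, `train_linear`, and `synthesize_query` are hypothetical stand-ins (a fixed 2-D map instead of a trained GAN, a rule instead of a human annotator, a perceptron instead of the paper's SVM, and random search over latent codes instead of a proper optimizer).

```python
import random

def train_linear(data, epochs=50, lr=0.1):
    """Perceptron-style learner: returns (w, b) for 2-D points labeled +1/-1."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            if y * (w[0] * x[0] + w[1] * x[1] + b) <= 0:
                w = [w[0] + lr * y * x[0], w[1] + lr * y * x[1]]
                b += lr * y
    return w, b

def generator(z):
    """Hypothetical stand-in for a trained GAN generator G(z)."""
    return [2.0 * z[0], 2.0 * z[1]]

def oracle(x):
    """Hypothetical stand-in for the human annotator."""
    return 1 if x[0] + x[1] > 0 else -1

def synthesize_query(w, b, rng, n_candidates=200):
    """Random search: keep the generated sample closest to the current boundary."""
    best_score, best_x = None, None
    for _ in range(n_candidates):
        z = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        x = generator(z)
        score = abs(w[0] * x[0] + w[1] * x[1] + b)
        if best_score is None or score < best_score:
            best_score, best_x = score, x
    return best_x

def gaal_loop(initial, budget, rng):
    """Synthesize, label, retrain; stop when the label budget is spent."""
    labeled = list(initial)
    for _ in range(budget):
        w, b = train_linear(labeled)          # update the learner
        x = synthesize_query(w, b, rng)       # instance adapted to the learner
        labeled.append((x, oracle(x)))        # oracle labels it
    return train_linear(labeled), labeled

rng = random.Random(0)
seed = [([1.0, 1.0], 1), ([-1.0, -1.0], -1)]
(w, b), labeled = gaal_loop(seed, budget=10, rng=rng)
```

With a budget of 10 and 2 seed points, `labeled` ends up holding 12 labeled instances.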

Main contributions:

- This is the first active learning framework using deep generative models.

- With enough capacity from the trained generator, our method allows us to have control over the generated instances, which may not be available to previous active learners.

- The results are promising compared with self-taught learning.

- This is the first work to report numerical results in active learning synthesis for image classification.

- We show that our approach can perform competitively when compared against pool-based methods.

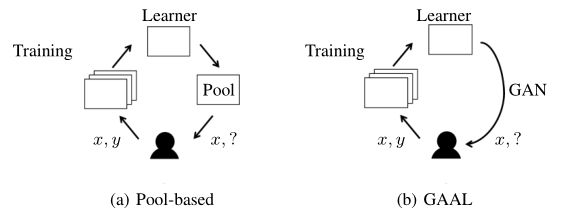

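Active learning scenarios:

- Stream-based (I do not fully understand this one yet): stream-based active learning

- Based on a pool of unlabeled samples: pool-based active learning

- Sample generation (for example with a generative model such as a GAN): learning by query synthesis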
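
As a rough illustration of how the three scenarios differ, the sketch below applies each one to the same linear learner. All function names are made up for this example. In the stream-based case instances arrive one at a time and the learner decides on the spot whether to query each one. In the pool-based case the whole pool is ranked first. In query synthesis a new instance is constructed rather than selected (here by a plain projection onto the boundary, which is an assumption for illustration; GAAL uses a GAN so that the synthesized queries look like real data).

```python
def margin(w, b, x):
    """Uncertainty score: small means close to the boundary w.x + b = 0."""
    return abs(sum(wi * xi for wi, xi in zip(w, x)) + b)

def stream_based(stream, w, b, threshold):
    """Look at instances one by one; query only those that are uncertain enough."""
    return [x for x in stream if margin(w, b, x) < threshold]

def pool_based(pool, w, b, k):
    """Rank the whole unlabeled pool and query the k most uncertain instances."""
    return sorted(pool, key=lambda x: margin(w, b, x))[:k]

def query_synthesis(anchor, w, b):
    """Construct a new instance by projecting an anchor onto w.x + b = 0."""
    norm_sq = sum(wi * wi for wi in w)
    shift = (sum(wi * xi for wi, xi in zip(w, anchor)) + b) / norm_sq
    return [xi - shift * wi for xi, wi in zip(anchor, w)]

w, b = [1.0, 0.0], 0.0
data = [[0.1, 2.0], [-3.0, 1.0], [0.5, 0.0], [2.0, 2.0]]

streamed = stream_based(data, w, b, threshold=0.6)   # [[0.1, 2.0], [0.5, 0.0]]
pooled = pool_based(data, w, b, k=1)                 # [[0.1, 2.0]]
synthetic = query_synthesis([2.0, 2.0], w, b)        # [0.0, 2.0]
```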

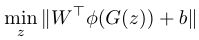

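Core parts:

- GAN: DCGAN is used (TensorFlow, https://github.com/carpedm20/DCGAN-tensorflow)

- Generative models: used to directly synthesize training data. The generative model is trained on the unlabeled sample set, so the generated samples also have unknown labels (if the training set were labeled, the generated samples would be labeled too; see hy's GAN). Note that when training the generative model, constraints can be added to the loss function to control the generated samples (similar to the strategies used in active selection, e.g. increasing the diversity among generated samples or the entropy of each sample).

- We adaptively generate new queries by solving an optimization problem.

- The authors repeatedly stress that this paper is the first to combine GAN and active learning and that it provides numerical results. Its main goal is to propose a framework based on GAN and active learning, not to improve model performance (e.g. classification accuracy).

- Training data initialization: 50 randomly selected samples are used for initialization; each batch corresponds to 10 queries.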
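
To make "generate new queries by solving an optimization problem" concrete: one natural reading is to search for a latent code z whose generated sample G(z) lies close to the current learner's decision boundary. The sketch below does this by gradient descent on the squared margin. It rests on several assumptions: the generator is a toy `tanh` map with hypothetical weights `A`, the learner is a fixed hypothetical linear function `W.x + B`, and the gradient is written out by hand. The paper's DCGAN and SVM are not reproduced here.

```python
import math

A = [[1.0, 0.5], [-0.5, 1.0]]    # hypothetical generator weights
W, B = [1.0, -1.0], 0.2          # hypothetical linear learner f(x) = W.x + B

def generator(z):
    """Toy differentiable stand-in for G(z): x_i = tanh(sum_j A[i][j] * z[j])."""
    return [math.tanh(sum(a * zj for a, zj in zip(row, z))) for row in A]

def margin(z):
    """Signed value of the learner on the generated sample."""
    x = generator(z)
    return sum(wi * xi for wi, xi in zip(W, x)) + B

def grad_sq_margin(z):
    """Gradient of margin(z)**2 w.r.t. z (chain rule through tanh)."""
    x = generator(z)
    m = sum(wi * xi for wi, xi in zip(W, x)) + B
    return [
        2.0 * m * sum(W[i] * (1.0 - x[i] ** 2) * A[i][j] for i in range(len(A)))
        for j in range(len(z))
    ]

def synthesize(z0, steps=200, lr=0.05):
    """Plain gradient descent on the squared margin, starting from z0."""
    z = list(z0)
    for _ in range(steps):
        g = grad_sq_margin(z)
        z = [zj - lr * gj for zj, gj in zip(z, g)]
    return z

z_start = [0.8, -0.6]
z_query = synthesize(z_start)
x_query = generator(z_query)     # the instance that would be sent to the oracle
```

In practice the starting code `z_start` would be drawn at random for every query, so that repeated runs land at different points along the boundary.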

Future work noted by the authors:

- Alternatively, we can incorporate diversity into our active learning principle. That is, some active selection strategies could be added to the objective function.

- Improving the GAN: We also plan to investigate the possibility of using Wasserstein GAN in our framework.
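
The first item, adding diversity to the objective, could look like the sketch below. This is only one possible formulation and is not taken from the paper: the score is the distance-to-boundary term minus `lam` times the distance to the nearest already-queried sample, so a larger `lam` pushes new queries away from old ones. The function names and the weight `lam` are assumptions.

```python
import math

def boundary_score(x, w, b):
    """Small when x is close to the boundary w.x + b = 0."""
    return abs(sum(wi * xi for wi, xi in zip(w, x)) + b)

def min_distance(x, queried):
    """Euclidean distance from x to its nearest already-queried sample."""
    if not queried:
        return 0.0
    return min(
        math.sqrt(sum((xi - qi) ** 2 for xi, qi in zip(x, q))) for q in queried
    )

def pick_query(candidates, queried, w, b, lam):
    """Choose the candidate minimizing uncertainty score minus lam * diversity."""
    return min(
        candidates,
        key=lambda x: boundary_score(x, w, b) - lam * min_distance(x, queried),
    )

w, b = [1.0, 0.0], 0.0
queried = [[0.0, 0.0]]
candidates = [[0.0, 0.1], [0.05, 3.0]]

no_diversity = pick_query(candidates, queried, w, b, lam=0.0)    # [0.0, 0.1]
with_diversity = pick_query(candidates, queried, w, b, lam=1.0)  # [0.05, 3.0]
```

Without the diversity term the query nearly duplicates the earlier one at the origin; with it, a slightly less uncertain but far more distant candidate wins.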

Papers worth reading further: the related work section

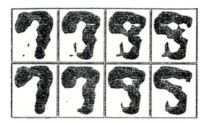

Early work on "generative models + active learning"

- Kevin J. Lang and Eric B. Baum. **Query Learning Can Work Poorly when a Human Oracle is Used**, 1992. In 1992, the authors used "synthesized learning queries and used human oracles" to train a neural network for handwritten character classification. The results were poor, because some of the generated images could not be recognized even by the human oracles (as shown in the figure below).

- Nicolas Papernot, Martín Abadi, Úlfar Erlingsson, Ian Goodfellow, and Kunal Talwar. Semi-supervised knowledge transfer for deep learning from private training data. … numerical results.

Generative models + semi-supervised learning

- Kamal Nigam, Andrew Kachites Mccallum, Sebastian Thrun, and Tom Mitchell. Text Classification from Labeled and Unlabeled Documents using EM. Mach. Learn., 39:103–134, 2000. In 2000, Nigam et al. proposed a semi-supervised method based on generative models for text classification.

- Timothy M. Hospedales, Shaogang Gong, and Tao Xiang. Finding rare classes: Active learning with generative and discriminative models. IEEE Trans. Knowl. Data Eng., 25(2):374–386, 2013. In 2013, Hospedales et al. introduced Gaussian mixture models into active learning, with the generative model serving as a classifier.

- Jost Tobias Springenberg. Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks. arXiv, (2009):1–20, 2015. In 2015: GAN + semi-supervised learning. The authors of the present paper stress the difference between active learning and semi-supervised learning.

Other:

- self-taught learning algorithm: Rajat Raina, Alexis Battle, Honglak Lee, Benjamin Packer, and Andrew Y. Ng. Self-taught Learning: Transfer Learning from Unlabeled Data. Proc. 24th Int. Conf. Mach. Learn., pages 759–766, 2007.

- **diversity strategy:** Multi-Class Active Learning by Uncertainty Sampling with Diversity Maximization; Semi-Supervised SVM Batch Mode Active Learning with Applications to Image Retrieval. ACM Trans. Inf. Syst., 27(16):24–26, 2009.

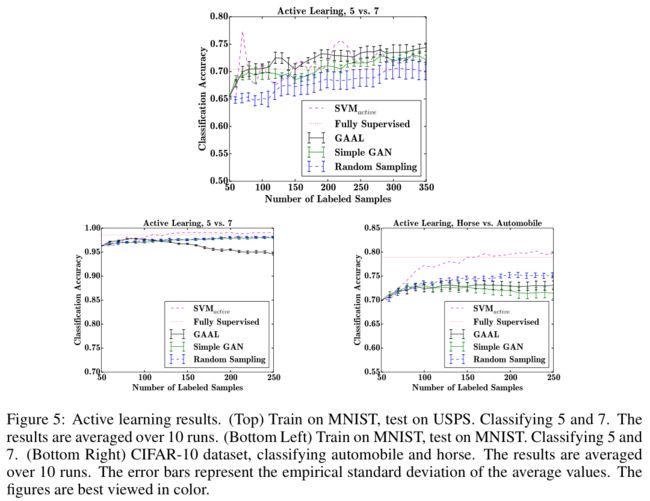

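Experiments:

- Method in this paper: DCGAN + active learning + linear SVM classifier (γ = 0.001);

- Task type: binary image classification; the authors mention that it could later be extended to multi-task problems and language-related problems;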

Main comparison experiments: comparison with other active learning methods

- Training data generated with an ordinary GAN (regular GAN, simple GAN);

- SVM active learning;

- Random selection from the unlabeled pool;

- self-taught learning;

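Datasets used in the experiments:

- MNIST+: only digits 5 and 7 are used (MNIST's test and train splits follow the same distribution, so pool-based active learning has a natural advantage on this kind of dataset; the authors therefore bring in USPS, a dataset similar to MNIST, and use it as the test set);

- CIFAR-10: only automobile and horse are used (cat and dog are rather ambiguous as a pair, so automobile and horse are chosen instead);

In the CIFAR-10 experiments, the dimensionality is higher than for MNIST, so the GAN is harder to train and some of the generated samples are of poor quality. The authors keep only the better-quality samples and discard the poor ones. They also mention some ways to improve the GAN, but leave them as future work.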

This paper's method vs. self-taught learning

Balancing exploitation and exploration

The authors try combining GAAL with random sampling and run experiments on the handwritten digit datasets (5 vs. 7, MNIST for training, USPS for test). The results show that the mixed method outperforms either method used alone.

A mixed scheme is able to achieve better performance than either using GAAL or random sampling alone. Therefore, it implies that GAAL, as an exploitation scheme, performs even better in combination with an exploration scheme. A detailed analysis of such mixed schemes will be an interesting future topic.
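
These notes do not record how the two schemes are mixed, so the sketch below is only one plausible reading: each query is drawn at random from the unlabeled pool with probability `explore_prob` (exploration) and synthesized otherwise (exploitation). `mixed_queries`, `explore_prob`, and the `synthesize` callback are hypothetical names for this example.

```python
import random

def mixed_queries(synthesize, pool, n_queries, explore_prob, rng):
    """Return (source, instance) pairs, mixing random pool draws with synthesis."""
    remaining = list(pool)  # copy so the caller's pool is not mutated
    queries = []
    for _ in range(n_queries):
        if remaining and rng.random() < explore_prob:
            # Exploration: take a random unlabeled instance out of the pool.
            queries.append(("random", remaining.pop(rng.randrange(len(remaining)))))
        else:
            # Exploitation: ask the synthesizer for a boundary-adapted instance.
            queries.append(("gaal", synthesize()))
    return queries

rng = random.Random(0)
pool = [[0.0, 1.0], [1.0, 0.0], [2.0, 2.0]]

only_gaal = mixed_queries(lambda: [0.0, 0.0], pool, 4, 0.0, rng)
only_random = mixed_queries(lambda: [0.0, 0.0], pool, 3, 1.0, rng)
```

Setting `explore_prob` to 0 or 1 recovers pure GAAL or pure random sampling, which makes the two baselines easy to compare against intermediate values.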

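Thoughts:

GAN:

- Dataset used to train the GAN:

  - Labeled dataset: the labels of the images generated by the GAN are also known.

  - Unlabeled dataset: the labels of the images generated by the GAN are unknown.

- How samples are generated: once the GAN is trained, are the N required samples generated in one go? Or in an online learning fashion, with new samples generated continually?

Follow-up papers

- "ADVERSARIAL SAMPLING FOR ACTIVE LEARNING, 2019": I will read this paper closely and write it up in detail later.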