微调StyleGAN2模型

目录

- 前言

- StyleGAN2

- 爬取图片

- 处理图片

- 裁剪

- waifu2x放大图片

- 鉴别器排序

- 人工筛选

- 创建数据集

- 进行训练

- 再次尝试

- 后续

前言

打算微调2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl这个StyleGAN2 512*512 的动漫人物生成模型为特定角色的生成模型.于是开始构建特定角色的数据集.

StyleGAN2

在这之前,首先得配置好StyleGAN2的运行环境,可以参考我前几篇博客(虽然是StyleGAN1的).

建议直接git clone我的储存库https://github.com/DLW3D/stylegan2encoder然后手动下载所需模型到models文件夹下.

-

预训练动漫头像生成模型

2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl

https://pan.baidu.com/s/1HVR_6D_BGXfpZbVtI3JEtg 提取码: 6b84 -

inception_v3_features.pkl

https://pan.baidu.com/s/1lnsvAawx3bCM35OXfQ4ajg 提取码: d5r8 -

vgg16_zhang_perceptual.pkl(这个不知道会不会用到,反正链接先给出来)

https://pan.baidu.com/s/1vP6NM9-w4s3Cy6l4T7QpbQ 提取码: 5qkp

模型的路径设置统一在config.py文件中的Model变量中

爬取图片

我用的是 https://github.com/Redcxx/Pikax 来爬取P站图片

git clone 后直接在项目根目录打开Python一步一步运行

from pikax import Pikax, settings, params

pixiv = Pikax()

pixiv = Pikax(settings.username, settings.password)

results = pixiv.search(keyword='列克星敦', match=params.Match.PARTIAL)

new_results = results.bookmarks > 20

pixiv.download(new_results)

由于网络问题最后一步可能要多运行几次,文件会下载到项目根目录的一个文件夹.

处理图片

裁剪

使用 https://github.com/nagadomi/lbpcascade_animeface 识别动漫人物脸.

按如下格式配置文件夹(如果git clone了我的储存库可以在cropy文件夹下找到)

下载 lbpcascade_animeface.xml

crop.py

import cv2

import sys

import os.path

def f2f(cascade_file, origin_dir, face_dir):

if not os.path.isfile(cascade_file):

raise RuntimeError("%s: not found" % cascade_file)

cascade = cv2.CascadeClassifier(cascade_file)

image_names = [files for root, dirs, files in os.walk(origin_dir)][0]

print('find %s files in %s' % (len(image_names), origin_dir))

for index, image_name in enumerate(image_names):

image_path = os.path.join(origin_dir, image_name)

image = cv2.imread(image_path)

if image is None:

print('error read image: %s\n' % image_name)

continue

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

gray = cv2.equalizeHist(gray)

faces = cascade.detectMultiScale(gray,

# detector options

scaleFactor=1.1,

minNeighbors=5,

minSize=(50, 50))

i = 0

for (x, y, w, h) in faces:

# cropped = image[y: y + h, x: x + w] # origin

# cropped = image[int(y * 0.25): y + h, int(x * 0.90): x + int(w * 1.25)] # gwern

top = max(y - int(h * 0.5), 0)

left = max(x - int(w * 0.25), 0)

cropped = image[top: top + int(h * 1.5), left: left + int(w * 1.5)] # math

cv2.imwrite(os.path.join(face_dir, "%s_%s.png" % (image_name, str(i))), cropped)

i += 1

print("\r", "%s/%s " % (index, len(image_names)), end="", flush=True)

print('Done!')

if len(sys.argv) != 4:

sys.stderr.write("usage: crop.py )

sys.exit(-1)

f2f(sys.argv[1], sys.argv[2], sys.argv[3])

将原图放入./origin文件夹中.

建议先批量重命名,因为文件名不能有中文字符,否则会出现

cv2.error: OpenCV(4.1.0) C:\projects\opencv-python\opencv\modules\imgproc\src\color.cpp:182: error: (-215:Assertion failed) !_src.empty() in function 'cv::cvtColor'.

运行裁剪

python crop.py lbpcascade_animeface.xml ./origin ./faces

在./faces查看裁剪结果

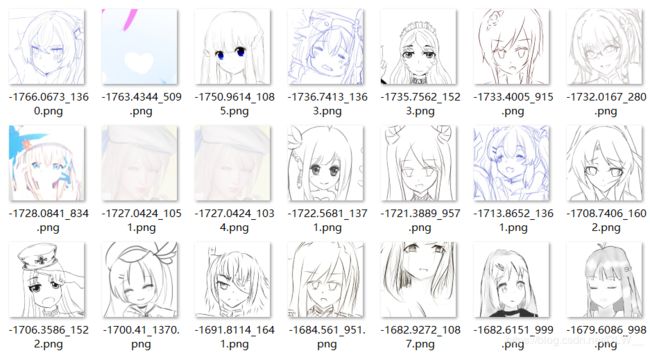

为什么我2408张原图只裁剪出1659张图片?

而且好像混进去了些奇怪的东西(例如萨拉托加)

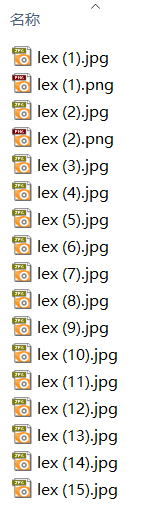

waifu2x放大图片

使用waifu2x将图片放大到512*512.

直接下载release(依赖cudnn)

https://github.com/lltcggie/waifu2x-caffe/releases

https://pan.baidu.com/s/1N7SUNU-KP-G6ymh1TNP7ww 密码:adw5

GUI是好文明.

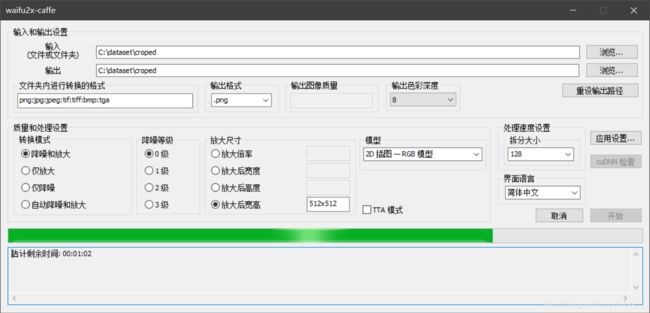

鉴别器排序

我们可以使用训练好的动漫人物头像生成模型的鉴别器对数据集进行筛选(要求数据集图片分辨率512*512).

ranker.py(放在StyleGAN2项目根目录下)

import os

import pickle

import numpy as np

import PIL.Image

import dnnlib

import dnnlib.tflib as tflib

import config

import sys

def main(origin_dir):

image_names = [files for root, dirs, files in os.walk(origin_dir)][0]

print('find %s files in %s' % (len(image_names), origin_dir))

tflib.init_tf()

_G, D, _Gs = pickle.load(open(config.Model, "rb"))

for index, image_name in enumerate(image_names):

image_path = os.path.join(origin_dir, image_name)

img = np.asarray(PIL.Image.open(image_path))

img = img.reshape(1, 3, 512, 512)

score = D.run(img, None)

os.rename(image_path, os.path.join(origin_dir, '%s_%s.png' % (score[0][0], index)))

print(image_name, score[0][0])

print('Done!')

if __name__ == "__main__":

main(sys.argv[1])

配置好模型路径,运行代码.对文件夹中的图片根据Discriminator的分数进行重命名.

python ranker.py .\cropy\faces

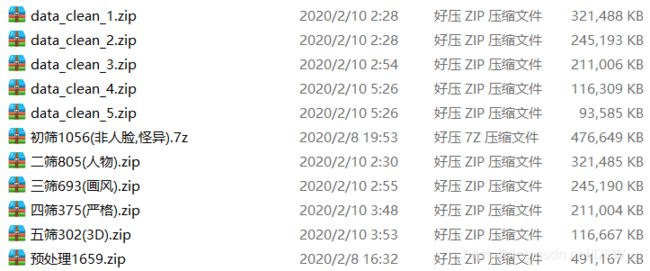

人工筛选

基本思路是先筛选掉[黑白][非人脸][人脸不完整][画风奇特][非特定角色]的图片

然后筛选出[有奇怪要素][画风差异较大]的图片

(分几步筛选是为了对比不同筛选程度训练得到的模型效果)

创建数据集

使用StyleGAN2项目根目录下的dataset_tool.py创建数据集

建议修改dataset_tool.py,在运行时打印log以便出现错误时找到导致出错的图片.

with TFRecordExporter(tfrecord_dir, len(image_filenames)) as tfr:

order = tfr.choose_shuffled_order() if shuffle else np.arange(len(image_filenames))

for idx in range(order.size):

print(image_filenames[order[idx]])

img = np.asarray(PIL.Image.open(image_filenames[order[idx]]))

if channels == 1:

img = img[np.newaxis, :, :] # HW => CHW

else:

img = img.transpose([2, 0, 1]) # HWC => CHW

tfr.add_image(img)

运行

python dataset_tool.py create_from_images ./datasets/mypictures ./cropy/faces

检查创建的数据集,以免混进去些奇怪的东西,按回车显示下一个.

python dataset_tool.py display ./datasets/mypictures

进行训练

python run_training.py --num-gpus=1 --data-dir=./datasets/ --config=config-f --dataset=mypictures --mirror-augment=true --total-kimg=10000 --resume-pkl=./models/2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl

并没有成功,因为OOM.

......

tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[256,511,511,1] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc

[[{{node GPU0/G_loss/D/512x512/Skip/UpFirDn2D}}]]

Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

......

我的GTX1060只有6G显存,而官方说最低所需显存为16G,哭了.

换了一台RTX2080(8G显存),尝试下能不能通过降低batch_size跑起来.

修改run_training.py

55 sched.minibatch_size_base = 1

56 sched.minibatch_gpu_base = 1

然后重新运行

python run_training.py --num-gpus=1 --data-dir=./datasets/ --config=config-f --dataset=mypictures --mirror-augment=true --total-kimg=10000 --resume-pkl=./models/2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl

报错

...

Building TensorFlow graph...

Initializing logs...

Training for 10000 kimg...

Traceback (most recent call last):

File "D:\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1356, in _do_call

return fn(*args)

File "D:\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1341, in _run_fn

options, feed_dict, fetch_list, target_list, run_metadata)

File "D:\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1429, in _call_tf_sessionrun

run_metadata)

tensorflow.python.framework.errors_impl.InternalError: cudaErrorInvalidConfiguration

[[{{node GPU0/G_loss/PathReg/G/G_synthesis/8x8/Upsample/UpFirDn2D}}]]

During handling of the above exception, another exception occurred:

...

Errors may have originated from an input operation.

Input Source operations connected to node GPU0/G_loss/PathReg/G/G_synthesis/8x8/Upsample/UpFirDn2D:

GPU0/G_loss/PathReg/G/G_synthesis/8x8/Upsample/Reshape (defined at D:\tencent\date\823239962\FileRecv\stylegan2encoder\stylegan2encoder\dnnlib\tflib\ops\upfirdn_2d.py:358)

GPU0/G_loss/PathReg/G/G_synthesis/8x8/Upsample/Const (defined at D:\tencent\date\823239962\FileRecv\stylegan2encoder\stylegan2encoder\dnnlib\tflib\ops\upfirdn_2d.py:123)

...

不是OOM了,但是这个报错我怎么查都查不到是怎么回事.

再次尝试

(这段是我完成了所有工作后才写的)

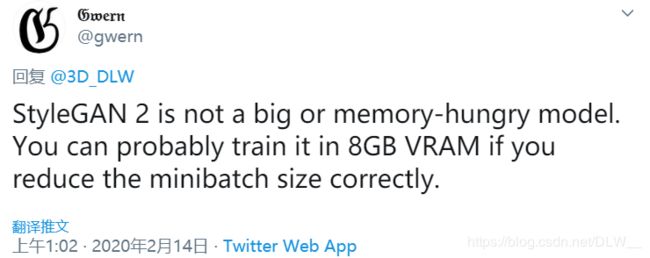

经gwern大佬提醒,8G显存也是可以跑StyleGAN2的,于是我重新拿起了RTX2080开始调试.

经过多次测试发现,cudaErrorInvalidConfiguration的原因是minibatch_size_base和minibatch_gpu_base的配置问题.

将这两个参数设置为 [1, 1], [2, 1], [4, 1], [3, 2] 都不行,设置为 [4, 2], [6, 2] 则可以

结论:

minibatch_gpu_base最低为2minibatch_size_base必需为minibatch_gpu_base的倍数.- RTX1080(8G显存) [256, 2], [192, 3] 可以[32, 4]不行.

- GTX1060(6G显存) [128, 2] 可以, [24, 3] 不行.

后续

微调StyleGAN2模型(二)使用Google Colab训练模型