Machine Learning: Support Vector Machines (SVM), Part 2

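This is my second set of notes on support vector machines. It covers SMO, the algorithm used to train an SVM, in a simplified version and a full version. Check-in: to be finished before July 1.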

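Contents

- I. Analysis of the optimization problem solved by SMO

- 1. Extremum of the unconstrained quadratic program

- 2. The feasible region under the constraints

- II. Choosing the two variables

- 1. Choosing the first variable

- 2. Choosing the second variable

- III. Simplified SMO algorithm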

I. Analysis of the optimization problem solved by SMO

SMO solves the dual of the convex quadratic program:

$$
\begin{aligned}
\min_{\alpha}\quad &\frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i\alpha_jy_iy_jK(x_i,x_j)-\sum_{i=1}^{N}\alpha_i\\
\text{s.t.}\quad &\sum_{i=1}^{N}\alpha_iy_i=0\\
&0\leqslant\alpha_i\leqslant C,\quad i=1,2,\dots,N
\end{aligned}
$$
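To make the objective and constraints concrete, here is a minimal NumPy sketch that evaluates the dual objective for a given $\alpha$ and checks feasibility. The function names, the linear kernel $K(x_i,x_j)=x_i\cdot x_j$, and the four toy points are illustrative assumptions, not part of the original post.

```python
import numpy as np

def dual_objective(alpha, y, K):
    """(1/2) * sum_ij alpha_i alpha_j y_i y_j K_ij - sum_i alpha_i."""
    ay = alpha * y                          # elementwise alpha_i * y_i
    return 0.5 * ay @ K @ ay - alpha.sum()

def is_feasible(alpha, y, C, tol=1e-9):
    """Check sum_i alpha_i y_i = 0 and 0 <= alpha_i <= C, up to tol."""
    return bool(abs(alpha @ y) <= tol and np.all(alpha >= -tol) and np.all(alpha <= C + tol))

# Toy data with a linear kernel K(x_i, x_j) = x_i . x_j (an assumption for illustration)
X = np.array([[1.0, 1.0], [2.0, 2.0], [-1.0, -1.0], [-2.0, -1.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
K = X @ X.T                                 # Gram matrix
alpha = np.array([0.1, 0.0, 0.1, 0.0])

print(dual_objective(alpha, y, K), is_feasible(alpha, y, C=1.0))
```

SMO never evaluates this full objective directly; it only ever works on a two-variable slice of it, as described next.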

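Note: while I was typing the formulas, the cursor started misbehaving. It used to be a thin line, so I could edit at any position, but for some reason it turned into a blue block, which made editing formulas very tedious.

Fix:

Press the Insert key to switch the cursor mode. On some laptops you need to press Fn + Insert together, which cycles through three states.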

SMO is a heuristic algorithm. Its main idea is to pick two variables $\alpha_1,\alpha_2$, fix all the others, and build a quadratic program in these two variables alone:

$$
\begin{aligned}
\min_{\alpha_1,\alpha_2}\ W(\alpha_1,\alpha_2)=\ &\frac{1}{2}K_{11}\alpha_1^2+\frac{1}{2}K_{22}\alpha_2^2+y_1y_2K_{12}\alpha_1\alpha_2-(\alpha_1+\alpha_2)\\
&+y_1\alpha_1\sum_{i=3}^{N}y_i\alpha_iK_{i1}+y_2\alpha_2\sum_{i=3}^{N}y_i\alpha_iK_{i2}\\
\text{s.t.}\quad &\alpha_1y_1+\alpha_2y_2=-\sum_{i=3}^{N}y_i\alpha_i=\varsigma\\
&0\leqslant\alpha_i\leqslant C,\quad i=1,2
\end{aligned}
$$

where $K_{ij}=K(x_i,x_j)$, $\varsigma$ is a constant, and constant terms that do not involve $\alpha_1,\alpha_2$ have been dropped.

1. Extremum of the unconstrained quadratic program

Here "unconstrained" means that the box constraint $0\leqslant\alpha_i\leqslant C$ is ignored for now. Let

$$
\nu_i=\sum_{j=3}^{N}\alpha_jy_jK(x_i,x_j)=g(x_i)-\sum_{j=1}^{2}\alpha_jy_jK(x_i,x_j)-b,\quad i=1,2
$$

where $g(x)=\sum_{i=1}^{N}\alpha_iy_iK(x_i,x)+b$ is the decision function.

The objective becomes:

$$
W(\alpha_1,\alpha_2)=\frac{1}{2}K_{11}\alpha_1^2+\frac{1}{2}K_{22}\alpha_2^2+y_1y_2K_{12}\alpha_1\alpha_2-(\alpha_1+\alpha_2)+y_1\nu_1\alpha_1+y_2\nu_2\alpha_2
$$

From $\alpha_1y_1=\varsigma-\alpha_2y_2$ and $y_1^2=1$, $\alpha_1$ can be written as:

$$
\alpha_1=(\varsigma-y_2\alpha_2)y_1
$$

Substituting this into $W(\alpha_1,\alpha_2)$ gives

$$
\begin{aligned}
W(\alpha_2)=\ &\frac{1}{2}K_{11}(\varsigma-y_2\alpha_2)^2+\frac{1}{2}K_{22}\alpha_2^2+y_2K_{12}(\varsigma-y_2\alpha_2)\alpha_2\\
&-(\varsigma-y_2\alpha_2)y_1-\alpha_2+\nu_1(\varsigma-y_2\alpha_2)+y_2\nu_2\alpha_2
\end{aligned}
$$

Taking the derivative with respect to $\alpha_2$:

$$
\begin{aligned}
\frac{\partial W}{\partial\alpha_2}=\ &K_{11}\alpha_2+K_{22}\alpha_2-2K_{12}\alpha_2-K_{11}\varsigma y_2+K_{12}\varsigma y_2\\
&+y_1y_2-1-\nu_1y_2+y_2\nu_2
\end{aligned}
$$

Setting it to 0 gives

$$
\begin{aligned}
(K_{11}+K_{22}-2K_{12})\alpha_2&=y_2(y_2-y_1+\varsigma K_{11}-\varsigma K_{12}+\nu_1-\nu_2)\\
&=y_2\Big[y_2-y_1+\varsigma K_{11}-\varsigma K_{12}+\Big(g(x_1)-\sum_{j=1}^{2}\alpha_jy_jK(x_1,x_j)-b\Big)\\
&\qquad-\Big(g(x_2)-\sum_{j=1}^{2}\alpha_jy_jK(x_2,x_j)-b\Big)\Big]
\end{aligned}
$$

Substituting $\varsigma=\alpha_1^{old}y_1+\alpha_2^{old}y_2$ gives

$$
\begin{aligned}
(K_{11}+K_{22}-2K_{12})\alpha_2^{new,unc}&=y_2\big((K_{11}+K_{22}-2K_{12})\alpha_2^{old}y_2+y_2-y_1+g(x_1)-g(x_2)\big)\\
&=(K_{11}+K_{22}-2K_{12})\alpha_2^{old}+y_2(E_1-E_2)
\end{aligned}
$$

where $E_i=g(x_i)-y_i$ is the difference between the prediction and the true label, and the superscript "unc" marks the solution before clipping to the box constraint. Writing $\eta=K_{11}+K_{22}-2K_{12}$, we obtain

$$
\alpha_2^{new,unc}=\alpha_2^{old}+\frac{y_2(E_1-E_2)}{\eta}
$$
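The closed-form step can be checked numerically. In this sketch, `alpha2_unclipped` and `W_along_line` are hypothetical helper names and all numbers are made up; the check confirms that the update sits at the minimum of $W(\alpha_2)$ along the constraint line when $\eta>0$.

```python
def alpha2_unclipped(alpha2_old, y2, E1, E2, K11, K22, K12):
    """alpha_2^{new,unc} = alpha_2^{old} + y_2 (E_1 - E_2) / eta, eta = K11 + K22 - 2*K12."""
    eta = K11 + K22 - 2.0 * K12
    if eta <= 0:
        raise ValueError("the closed-form step assumes eta > 0")
    return alpha2_old + y2 * (E1 - E2) / eta

def W_along_line(alpha2, zeta, y1, y2, K11, K22, K12, nu1, nu2):
    """Two-variable objective W after substituting alpha_1 = (zeta - y2*alpha2)*y1."""
    alpha1 = (zeta - y2 * alpha2) * y1
    return (0.5 * K11 * alpha1 ** 2 + 0.5 * K22 * alpha2 ** 2
            + y1 * y2 * K12 * alpha1 * alpha2 - (alpha1 + alpha2)
            + y1 * nu1 * alpha1 + y2 * nu2 * alpha2)

# Made-up numbers for illustration (b is taken as 0; it cancels in E1 - E2)
y1, y2 = 1.0, -1.0
a1_old, a2_old = 0.2, 0.3
K11, K22, K12 = 2.0, 3.0, 0.5
nu1, nu2 = 0.4, -0.1
zeta = a1_old * y1 + a2_old * y2
E1 = a1_old * y1 * K11 + a2_old * y2 * K12 + nu1 - y1   # g(x_1) - y_1
E2 = a1_old * y1 * K12 + a2_old * y2 * K22 + nu2 - y2   # g(x_2) - y_2
a2_new = alpha2_unclipped(a2_old, y2, E1, E2, K11, K22, K12)

# The closed-form value should give a lower W than its neighbours on the line
args = (zeta, y1, y2, K11, K22, K12, nu1, nu2)
print(a2_new, W_along_line(a2_new, *args) < W_along_line(a2_new + 1e-3, *args))
```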

2. The feasible region under the constraints

Since there are only two variables, the constraints can be drawn in the plane: $0\leqslant\alpha_1,\alpha_2\leqslant C$ is a square, and the equality constraint is a line segment inside it, as shown in the figure.

Suppose the initial feasible solution of this problem is $\alpha_1^{old},\alpha_2^{old}$ and the optimal solution is $\alpha_1^{new},\alpha_2^{new}$. Once one variable is fixed, the other is determined, because

$$
\alpha_1^{new}y_1+\alpha_2^{new}y_2=\alpha_1^{old}y_1+\alpha_2^{old}y_2
$$

Next we work out the feasible range of $\alpha_2$.

Start from $\alpha_1y_1+\alpha_2y_2=-\sum_{i=3}^{N}y_i\alpha_i=\varsigma$.

Case 1: if $y_1\neq y_2$, the constraint becomes $\alpha_1-\alpha_2=k$.

See the left panel of the figure.

1) When $k<0$, the line lies in the lower-right part of the square.

However the line is shifted within this case, the maximum of $\alpha_2$ is $C$, and the minimum is at the lower endpoint of the line, where it meets the $\alpha_2$ axis, i.e. where $\alpha_1=0$. Hence $0-\alpha_2^{new}=\alpha_1^{old}-\alpha_2^{old}$, that is, $\alpha_2^{new}=\alpha_2^{old}-\alpha_1^{old}$.

In this case, $\alpha_2\in[\alpha_2^{old}-\alpha_1^{old},\,C]$.

2) When $k>0$, the line lies in the upper-left part of the square.

However the line is shifted within this case, the minimum of $\alpha_2$ is 0, and the maximum is at the upper endpoint of the line, where it meets the edge $\alpha_1=C$ parallel to the $\alpha_2$ axis. Hence $C-\alpha_2^{new}=\alpha_1^{old}-\alpha_2^{old}$, that is, $\alpha_2^{new}=C+\alpha_2^{old}-\alpha_1^{old}$.

In this case, $\alpha_2\in[0,\,C+\alpha_2^{old}-\alpha_1^{old}]$.

Also, $0\leqslant\alpha_i\leqslant C$.

Let $L$ and $H$ be the lower and upper bounds of $\alpha_2$ respectively:

$$
L=\max(0,\ \alpha_2^{old}-\alpha_1^{old}),\qquad H=\min(C,\ C+\alpha_2^{old}-\alpha_1^{old})
$$

Case 2: if $y_1=y_2$, the constraint becomes $\alpha_1+\alpha_2=k$.

See the right panel of the figure.

1) When $0<k<C$, the line cuts off the lower-left corner of the square, so $\alpha_2$ ranges from 0 (where $\alpha_1=k$) up to $k$ (where $\alpha_1=0$).

In this case, $\alpha_2\in[0,\,\alpha_2^{old}+\alpha_1^{old}]$.

2) When $C<k<2C$, the line cuts off the upper-right corner of the square, so $\alpha_2$ ranges from $k-C$ (where $\alpha_1=C$) up to $C$.

In this case, $\alpha_2\in[\alpha_2^{old}+\alpha_1^{old}-C,\,C]$.

Again, since $0\leqslant\alpha_i\leqslant C$,

the lower and upper bounds of $\alpha_2$ are

$$
L=\max(0,\ \alpha_2^{old}+\alpha_1^{old}-C),\qquad H=\min(C,\ \alpha_2^{old}+\alpha_1^{old})
$$

Combining the analysis above, the clipped solution $\alpha_2^{new}$ is obtained from the unconstrained solution $\alpha_2^{new,unc}$ as

$$
\alpha_2^{new}=\begin{cases}H, & \alpha_2^{new,unc}>H\\ \alpha_2^{new,unc}, & L\leq\alpha_2^{new,unc}\leq H\\ L, & \alpha_2^{new,unc}<L\end{cases}
$$

and $\alpha_1^{new}$ then follows from the equality constraint: $\alpha_1^{new}=\alpha_1^{old}+y_1y_2(\alpha_2^{old}-\alpha_2^{new})$.
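Putting the bounds and the clipping rule together, here is a minimal sketch of one two-variable update after the unconstrained step. The helper names are hypothetical and the numbers are made up.

```python
def alpha2_bounds(a1_old, a2_old, y1, y2, C):
    """Return (L, H), the feasible range of alpha_2 on the constraint line."""
    if y1 != y2:                          # alpha_1 - alpha_2 = k
        return max(0.0, a2_old - a1_old), min(C, C + a2_old - a1_old)
    return max(0.0, a2_old + a1_old - C), min(C, a2_old + a1_old)   # alpha_1 + alpha_2 = k

def clip_alpha2(a2_unc, L, H):
    """alpha_2^{new}: H if above H, L if below L, unchanged otherwise."""
    return min(H, max(L, a2_unc))

def alpha1_new(a1_old, a2_old, a2_new, y1, y2):
    """alpha_1^{new} = alpha_1^{old} + y1*y2*(alpha_2^{old} - alpha_2^{new})."""
    return a1_old + y1 * y2 * (a2_old - a2_new)

# Usage: y1 != y2, old values (0.2, 0.3), C = 1, unclipped alpha_2 = 1.4
y1, y2, C = 1.0, -1.0, 1.0
L, H = alpha2_bounds(0.2, 0.3, y1, y2, C)
a2 = clip_alpha2(1.4, L, H)
a1 = alpha1_new(0.2, 0.3, a2, y1, y2)
print(L, H, a2, a1)
```

The last step is what keeps $\alpha_1y_1+\alpha_2y_2$ unchanged, so the pair never leaves the constraint line.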

II. Choosing the two variables

1. Choosing the first variable

SMO calls the search for the first variable the outer loop. The outer loop picks, from the training samples, the point that violates the KKT conditions most severely as the first variable. How do we tell whether a sample satisfies the KKT conditions?

The primal problem is a convex quadratic program, and its KKT conditions are

$$
\begin{aligned}
&\nabla_{w}L(w^*,b^*,\xi^*,\alpha^*,\mu^*)=w^*-\sum_{i=1}^{N}\alpha_i^*y_ix_i=0\\
&\nabla_{b}L(w^*,b^*,\xi^*,\alpha^*,\mu^*)=-\sum_{i=1}^{N}\alpha_i^*y_i=0\\
&\nabla_{\xi_i}L(w^*,b^*,\xi^*,\alpha^*,\mu^*)=C-\alpha_i^*-\mu_i^*=0\\
&\alpha_i^*\big(y_i(w^*\cdot x_i+b^*)-1+\xi_i^*\big)=0\\
&\mu_i^*\xi_i^*=0\\
&y_i(w^*\cdot x_i+b^*)-1+\xi_i^*\geq0\\
&\xi_i^*\geq0,\quad\alpha_i^*\geq0,\quad\mu_i^*\geq0,\qquad i=1,2,\dots,N
\end{aligned}
$$

Further, for each sample:

1. If $\alpha_i^*=0$, then $\mu_i^*=C>0$, so $\xi_i^*=0$ and $y_i(w^*\cdot x_i+b^*)-1\geq0$;

2. If $\alpha_i^*=C$, then $\mu_i^*=0$, so $\xi_i^*\geq0$ is free; since $\alpha_i^*>0$, complementary slackness gives $y_i(w^*\cdot x_i+b^*)-1+\xi_i^*=0$, so $y_i(w^*\cdot x_i+b^*)=1-\xi_i^*\leq1$;

3. If $0<\alpha_i^*<C$, then $\mu_i^*=C-\alpha_i^*>0$, so $\xi_i^*=0$; since $\alpha_i^*>0$, also $y_i(w^*\cdot x_i+b^*)-1+\xi_i^*=0$, so $y_i(w^*\cdot x_i+b^*)=1$.

In summary, with $w^*\cdot x_i+b^*=g(x_i)$ the decision value of sample $x_i$:

$$
\begin{aligned}
\alpha_i=0 &\iff y_i(w^*\cdot x_i+b^*)\geq1\\
\alpha_i=C &\iff y_i(w^*\cdot x_i+b^*)\leq1\\
0<\alpha_i<C &\iff y_i(w^*\cdot x_i+b^*)=1
\end{aligned}
$$
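A minimal sketch of how the three cases can be checked for one sample, assuming the decision value $g(x_i)=w^*\cdot x_i+b^*$ is already known. The name `satisfies_kkt` and the tolerance `eps` are illustrative assumptions; exact equality is replaced by a tolerance because the values are floating point.

```python
def satisfies_kkt(alpha_i: float, y_i: float, g_i: float, C: float, eps: float = 1e-3) -> bool:
    """Check the KKT case for one sample, up to a tolerance eps.

    alpha_i: dual variable, y_i: label (+1 or -1), g_i: decision value g(x_i).
    """
    margin = y_i * g_i                 # y_i (w* . x_i + b*)
    if alpha_i <= 0:                   # alpha_i = 0      ->  y_i g(x_i) >= 1
        return margin >= 1 - eps
    if alpha_i >= C:                   # alpha_i = C      ->  y_i g(x_i) <= 1
        return margin <= 1 + eps
    return abs(margin - 1) <= eps      # 0 < alpha_i < C  ->  y_i g(x_i) = 1

# Usage: (alpha_i, y_i, g_i) triples with C = 1
checks = [satisfies_kkt(a, y, g, C=1.0) for a, y, g in
          [(0.0, 1, 1.5), (0.0, 1, 0.5), (1.0, -1, 0.2), (0.5, 1, 1.0), (0.5, -1, -1.2)]]
print(checks)
```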

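The first variable is selected as follows:

- Loop over the whole training set, take an $\alpha_i$ that violates the KKT conditions as the first variable, choose the second variable by the rules described later, and optimize the pair.

- After the full pass, loop over the samples on the margin boundary (those with $0<\alpha_i<C$), again choosing the second variable by those rules and optimizing the pair.

- Go back to a full pass over the training set. In other words, alternate between the whole training set and the non-bound samples, until a full pass finds no $\alpha_i$ left to optimize; then exit the loop.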

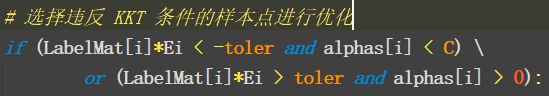
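The alternating schedule described in the list above can be sketched as follows. This is a minimal sketch in the spirit of the outer loop of Platt's SMO, not the full algorithm: `examine_example(i)` is a hypothetical callback assumed to choose the second variable, attempt the joint update, and return 1 if the pair changed and 0 otherwise, and `max_passes` is an added safety limit.

```python
from typing import Callable, Sequence

def outer_loop(alphas: Sequence[float], C: float,
               examine_example: Callable[[int], int],
               max_passes: int = 1000) -> int:
    """Alternate between full passes and passes over non-bound samples; return the pass count."""
    examine_all = True      # start with a pass over the whole training set
    num_changed = 0
    passes = 0
    while (num_changed > 0 or examine_all) and passes < max_passes:
        num_changed = 0
        if examine_all:
            indices = range(len(alphas))
        else:
            # samples on the margin boundary: 0 < alpha_i < C
            indices = [i for i in range(len(alphas)) if 0 < alphas[i] < C]
        for i in indices:
            num_changed += examine_example(i)
        passes += 1
        if examine_all:
            examine_all = False            # next: the non-bound samples
        elif num_changed == 0:
            examine_all = True             # non-bound pass found nothing: do a full pass

    return passes

# Usage with a fake callback that reports a change on its first 3 calls only
calls = []
def fake_examine(i):
    calls.append(i)
    return 1 if len(calls) <= 3 else 0

n_passes = outer_loop([0.0, 0.5, 1.0], 1.0, fake_examine)
print(n_passes, calls)
```

The loop stops only after a full pass changes nothing, which matches the exit condition in the list.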

*Machine Learning in Action* implements the choice of the first variable with the following test, which is also used in the simplified SMO code in Section III: `if (LabelMat[i]*Ei < -toler and alphas[i] < C) or (LabelMat[i]*Ei > toler and alphas[i] > 0):`

Why is it written this way?

We have already written down the KKT conditions above. A sample violates them when

$$
\begin{aligned}
\alpha_i=0 &\text{ and } y_i(w^*\cdot x_i+b^*)<1\\
\alpha_i=C &\text{ and } y_i(w^*\cdot x_i+b^*)>1\\
0<\alpha_i<C &\text{ and } y_i(w^*\cdot x_i+b^*)\neq1
\end{aligned}
$$

Regrouping by the value of $y_i(w^*\cdot x_i+b^*)$, a violation occurs when

$$
\begin{aligned}
&y_i(w^*\cdot x_i+b^*)>1\ \text{ and }\ 0<\alpha_i\leq C,\quad\text{or}\\
&y_i(w^*\cdot x_i+b^*)<1\ \text{ and }\ 0\leq\alpha_i<C.
\end{aligned}
$$

Now look at the expression in the code, where $E_i=g(x_i)-y_i$ and $g(x_i)=w^*\cdot x_i+b^*$ is the current prediction.

Fix a tolerance $\delta>0$ (`toler` in the code).

1. Case $\alpha_i<C$, i.e. $0\leq\alpha_i<C$: the test `LabelMat[i]*Ei < -toler` means

$$
y_i[g(x_i)-y_i]=y_ig(x_i)-y_i^2=y_ig(x_i)-1<-\delta\iff y_ig(x_i)<1-\delta,
$$

which is the second violation case above.

2. Case $\alpha_i>0$, i.e. $0<\alpha_i\leq C$: the test `LabelMat[i]*Ei > toler` means

$$
y_i[g(x_i)-y_i]=y_ig(x_i)-1>\delta\iff y_ig(x_i)>1+\delta,
$$

which is the first violation case.

Here $\delta$ is a tolerance: a sample is treated as a violator only when it misses the KKT condition by more than $\delta$.
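The same test in isolation, as a small sketch: `violates_kkt` is a hypothetical helper that repeats the condition from the simplified SMO code, and the sample values are made up to hit each case discussed above.

```python
def violates_kkt(alpha_i: float, y_i: float, E_i: float, C: float, toler: float) -> bool:
    """Same test as in smoSimple, with E_i = g(x_i) - y_i and toler playing the role of delta."""
    r = y_i * E_i                      # equals y_i g(x_i) - 1 because y_i**2 = 1
    return (r < -toler and alpha_i < C) or (r > toler and alpha_i > 0)

# Usage with C = 1 and toler = 0.001; each tuple is (alpha_i, y_i, g_i)
C, toler = 1.0, 0.001
samples = [(0.0, 1, 0.5),    # inside the margin but alpha_i = 0: violation
           (0.0, 1, 1.5),    # outside the margin with alpha_i = 0: fine
           (1.0, 1, 1.5),    # outside the margin but alpha_i = C: violation
           (0.5, -1, -1.0)]  # on the margin with 0 < alpha_i < C: fine
flags = [violates_kkt(a, y, g - y, C, toler) for a, y, g in samples]
print(flags)
```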

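2. Choosing the second variable

SMO calls the selection of the second variable the inner loop. The criterion is that $\alpha_2$ should change by a sufficiently large amount. Since the step is $\alpha_2^{new,unc}-\alpha_2^{old}=y_2(E_1-E_2)/\eta$, its size depends on $|E_1-E_2|$, so we choose the $\alpha_2$ that makes $|E_1-E_2|$ largest: if $E_1$ is positive, take the smallest $E_i$ as $E_2$; if $E_1$ is negative, take the largest $E_i$ as $E_2$. The $E_i$ of all samples are usually cached in a list. Because $\eta$ also depends on the second sample, maximizing $|E_1-E_2|$ only approximates maximizing the step size.

If the second variable chosen by this heuristic does not decrease the objective enough, then:

loop over the support vectors on the margin boundary and look for a point that does decrease the objective; if there is none, loop over the whole data set; if there is still no suitable $\alpha_2$, give up on this $\alpha_1$ and let the outer loop choose a new $\alpha_1$.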
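A minimal sketch of the $|E_1-E_2|$ heuristic, assuming the errors $E_i$ are cached in a list as described. `select_second` and its optional `candidates` argument (for restricting the search, e.g. to the non-bound samples) are hypothetical names. It covers only the heuristic choice, not the fallback of checking whether the objective actually decreased.

```python
import numpy as np

def select_second(i, E, candidates=None):
    """Return the index j != i that maximizes |E[i] - E[j]|, or None if no candidate is left.

    candidates: optional iterable of allowed indices; all samples are used when it is None.
    """
    E = np.asarray(E, dtype=float)
    idx = np.arange(len(E)) if candidates is None else np.asarray(list(candidates), dtype=int)
    idx = idx[idx != i]                       # j must differ from i
    if idx.size == 0:
        return None
    return int(idx[np.argmax(np.abs(E[i] - E[idx]))])

E_cache = [0.5, -0.3, 0.2, -0.9]              # cached errors E_i, one per sample
print(select_second(0, E_cache))              # E_1 > 0: picks the smallest E_i
print(select_second(3, E_cache))              # E_1 < 0: picks the largest E_i
print(select_second(0, E_cache, candidates=[1, 2]))
```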

III. Simplified SMO algorithm

```python
from numpy import *
import matplotlib.pyplot as plt

# Helper functions for the SMO algorithm

# Read the data file: the features go into DataMat and the labels into LabelMat
def loadDataSet(fireName):
    DataMat = []; LabelMat = []
    with open(fireName) as fr:  # the file is closed automatically
        for line in fr.readlines():
            LineArr = line.strip().split('\t')
            DataMat.append([float(LineArr[0]), float(LineArr[1])])
            LabelMat.append(float(LineArr[2]))
    return DataMat, LabelMat

# Randomly choose the index j of alpha_j, different from i
def SelectJrand(i, m):
    j = i
    while j == i:
        j = int(random.uniform(0, m))  # numpy.random, brought in by the star import
    return j

# Clip alpha to the interval [L, H]
def clipAlpha(aj, H, L):
    if aj > H:
        aj = H
    elif aj < L:
        aj = L
    return aj
```

The main SMO routine

```python
# Pseudocode of this function:
# create an alpha vector and initialize it to all zeros
# while the iteration count is below the maximum (outer loop):
#     for every data vector in the data set (inner loop):
#         if this data vector can be optimized:
#             pick another data vector at random
#             optimize the two vectors jointly
#             if the pair cannot be optimized, move on to the next vector
#     if no vector was optimized, increase the iteration count and loop again

def smoSimple(dataMatIn, classLabels, C, toler, MaxIter):
    # Convert the lists dataMatIn and classLabels to matrices to use matrix multiplication
    # (asmatrix instead of mat: np.mat was removed in NumPy 2.0)
    dataMatrix = asmatrix(dataMatIn)
    LabelMat = asmatrix(classLabels).transpose()  # .transpose() turns it into an m x 1 column
    # Initialize b in y = w*x + b to 0
    b = 0
    # Number of rows (samples) and columns (features) of dataMatrix
    m, n = shape(dataMatrix)
    # Initialize alphas to an m x 1 zero matrix
    alphas = asmatrix(zeros((m, 1)))
    iter = 0
    # Start iterating
    while iter < MaxIter:
        # Counts how many alpha pairs were changed in this pass
        alphaPairsChanged = 0
        # Loop over every sample in the data set
        for i in range(m):
            # Prediction g(x_i) for the first variable alphas[i] (linear kernel)
            gxi = float(multiply(alphas, LabelMat).T * (dataMatrix * dataMatrix[i, :].T)) + b
            # Error E_i = g(x_i) - y_i
            Ei = gxi - float(LabelMat[i])
            # Optimize only samples that violate the KKT conditions (explained in Section II)
            if (LabelMat[i] * Ei < -toler and alphas[i] < C) or (LabelMat[i] * Ei > toler and alphas[i] > 0):
                # With i chosen, pick the second variable j at random
                j = SelectJrand(i, m)
                # Prediction g(x_j) for the second variable
                gxj = float(multiply(alphas, LabelMat).T * (dataMatrix * dataMatrix[j, :].T)) + b
                # Error E_j = g(x_j) - y_j
                Ej = gxj - float(LabelMat[j])
                # Save the old alpha values (as copies, not views)
                alphaIold = alphas[i].copy()
                alphaJold = alphas[j].copy()
                # Lower bound L and upper bound H of alphas[j]
                if LabelMat[j] != LabelMat[i]:
                    H = min(C, C + alphas[j] - alphas[i])
                    L = max(0, alphas[j] - alphas[i])
                else:
                    H = min(C, alphas[j] + alphas[i])
                    L = max(0, alphas[j] + alphas[i] - C)
                # If L == H there is nothing to optimize: skip this i
                if L == H:
                    print("L == H")
                    continue
                # eta = K11 + K22 - 2*K12, the denominator of the alphas[j] update
                eta = dataMatrix[i, :] * dataMatrix[i, :].T + dataMatrix[j, :] * dataMatrix[j, :].T \
                    - 2.0 * dataMatrix[i, :] * dataMatrix[j, :].T
                # The closed-form step needs eta > 0; otherwise skip this i
                if eta <= 0:
                    print("eta <= 0")
                    continue
                # Unconstrained update of the second variable alphas[j]
                alphas[j] += LabelMat[j] * (Ei - Ej) / eta
                # Clip alphas[j] to [L, H]
                alphas[j] = clipAlpha(alphas[j], H, L)
                # If alphas[j] barely moved, skip this i
                if abs(alphas[j] - alphaJold) < 0.00001:
                    print("j not moving enough !")
                    continue
                # Update alphas[i] from alphas[j] so that alpha_i*y_i + alpha_j*y_j stays constant
                alphas[i] += LabelMat[i] * LabelMat[j] * (alphaJold - alphas[j])
                # Update b
                b1 = -Ei - LabelMat[i] * (alphas[i] - alphaIold) * (dataMatrix[i, :] * dataMatrix[i, :].T) \
                    - LabelMat[j] * (alphas[j] - alphaJold) * (dataMatrix[j, :] * dataMatrix[i, :].T) + b
                b2 = -Ej - LabelMat[i] * (alphas[i] - alphaIold) * (dataMatrix[i, :] * dataMatrix[j, :].T) \
                    - LabelMat[j] * (alphas[j] - alphaJold) * (dataMatrix[j, :] * dataMatrix[j, :].T) + b
                if alphas[i] > 0 and alphas[i] < C:
                    b = b1
                elif alphas[j] > 0 and alphas[j] < C:
                    b = b2
                else:
                    b = (b1 + b2) / 2.0
                alphaPairsChanged += 1
                print("iter:{0};i:{1};alpha pair changed:{2}".format(iter, i, alphaPairsChanged))
        # Count passes in which no pair could be optimized; reset after any change
        if alphaPairsChanged == 0:
            iter += 1
        else:
            iter = 0
        print("iteration number:%d" % iter)
    return b, alphas
```

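One point needs some explanation: `alphaIold = alphas[i].copy()` and `alphaJold = alphas[j].copy()`.

Why is `.copy()` needed?

This has to do with shallow copies in Python; see https://blog.csdn.net/weixin_40238600/article/details/93603747 for details. Concretely, `alphas` is a NumPy matrix, and `alphas[i]` returns a view that shares memory with `alphas` rather than an independent object.

With a plain assignment instead of `.copy()`, alphaIold and alphaJold would change as soon as alphas[i] or alphas[j] is updated in place, so differences such as `alphas[j] - alphaJold` would always be 0 and every pair would be rejected as "j not moving enough".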
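A minimal demonstration of the view-versus-copy behavior, using a 3×1 matrix laid out like `alphas` in smoSimple (the values are made up): `view_old` keeps tracking the matrix after an in-place update, while the `.copy()` does not.

```python
import numpy as np

alphas = np.asmatrix(np.zeros((3, 1)))   # m x 1 matrix, as in smoSimple

view_old = alphas[1]            # indexing a row returns a view into alphas
copy_old = alphas[1].copy()     # an independent copy of the current value

alphas[1] += 0.5                # in-place update, like alphas[j] += ... in smoSimple

print(view_old.item(), copy_old.item())   # the view follows the update, the copy does not
```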

```python
# Compute the weight vector w = sum_i alpha_i * y_i * x_i
def clacW(alphas, dataArr, LabelArr):
    dataMat = asmatrix(dataArr); labelMat = asmatrix(LabelArr).transpose()
    m, n = shape(dataMat)
    w = zeros((n, 1))
    for i in range(m):
        w += multiply(alphas[i] * labelMat[i], dataMat[i, :].T)
    return w
```
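Because the simplified SMO uses the linear kernel, $w=\sum_i\alpha_iy_ix_i$ can also be computed with a single matrix product instead of the explicit loop. A minimal sketch; `calc_w_vectorized` is a hypothetical name and the three toy points are made up.

```python
import numpy as np

def calc_w_vectorized(alphas, data, labels):
    """w = sum_i alpha_i y_i x_i computed as X^T (alpha * y); returns an n x 1 array like clacW."""
    X = np.asarray(data, dtype=float)               # m x n data matrix
    a = np.asarray(alphas, dtype=float).ravel()     # m alphas (an m x 1 matrix also works)
    y = np.asarray(labels, dtype=float).ravel()     # m labels
    return (X.T @ (a * y)).reshape(-1, 1)

w = calc_w_vectorized([0.5, 0.25, 0.0], [[1, 2], [3, 4], [5, 6]], [1, -1, 1])
print(w.ravel())
```

Only samples with $\alpha_i>0$ (the support vectors) contribute to the sum, which is why the third point has no effect here.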

Plotting the fitted separating line

```python
def plotBestFit(alphas, dataArr, labelArr, b):
    # Use the data passed in instead of re-reading testSet.txt
    dataArr = array(dataArr)
    # Number of rows of dataArr
    n = shape(dataArr)[0]
    xcord1 = []; ycord1 = []
    xcord2 = []; ycord2 = []
    for i in range(n):
        if labelArr[i] == 1:
            xcord1.append(dataArr[i, 0]); ycord1.append(dataArr[i, 1])
        else:
            xcord2.append(dataArr[i, 0]); ycord2.append(dataArr[i, 1])
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord1, ycord1, s=30, c='red', marker='s')
    ax.scatter(xcord2, ycord2, s=30, c='green')
    x = arange(2.0, 6.0, 0.1)
    w = clacW(alphas, dataArr, labelArr)
    print(type(b))
    print(type(w))
    print(type(x))
    y = (-b - w[0] * x) / w[1]  # from w1*x1 + w2*x2 + b = 0: x2 (i.e. y) = (-b - w1*x1) / w2
    ax.plot(x, y)
    plt.xlabel('x1'); plt.ylabel('x2')
    plt.show()
```

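Running this raises an error.

The cause is the type of `b`: smoSimple returns it as a 1×1 `numpy.matrix` rather than a scalar, so `-b - w[0]*x` becomes a matrix with a single row instead of a 1-D array, and its shape no longer matches `x` in `ax.plot(x, y)`.

Convert `b` to an array instead: `y = (-array(b)[0] - w[0]*x) / w[1]`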
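The shape problem can be reproduced without the data file. In this sketch `b` and `w` are made-up stand-ins for the values returned by smoSimple and clacW; the last line shows `b.item()` as an alternative way to get a plain Python float (a design choice, not from the original).

```python
import numpy as np

# Made-up stand-ins for the values returned by smoSimple and clacW
b = np.asmatrix([[-3.8]])           # b ends up as a 1x1 matrix
w = np.array([[0.8], [-0.3]])       # clacW returns an n x 1 ndarray
x = np.arange(2.0, 6.0, 0.1)

y_bad = (-b - w[0] * x) / w[1]                # matrix arithmetic: one row, shape (1, len(x))
y_fix = (-np.array(b)[0] - w[0] * x) / w[1]   # the fix from the text: shape (len(x),)
y_alt = (-b.item() - w[0] * x) / w[1]         # alternative: use b as a plain float

print(y_bad.shape, y_fix.shape, np.allclose(y_fix, y_alt))
```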

Running the main program:

```python
if __name__ == '__main__':
    dataArr, labelArr = loadDataSet('testSet.txt')
    b, alphas = smoSimple(dataArr, labelArr, 0.6, 0.001, 40)
    plotBestFit(alphas, dataArr, labelArr, b)
```

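References:

1. Li Hang, *Statistical Learning Methods* (《统计学习方法》)

2. Peter Harrington, *Machine Learning in Action* (《机器学习实战》)

3. [Machine Learning] SVM principles (4): the SMO algorithm: https://blog.csdn.net/made_in_china_too/article/details/79547296

4. Cursor modes and operations in the terminal: https://blog.csdn.net/Jeffxu_lib/article/details/84759961

5. [LaTeX] Line breaks in long formulas: https://blog.csdn.net/solidsanke54/article/details/45102397

6. Using braces in LaTeX: https://blog.csdn.net/l740450789/article/details/49487847

7. *Machine Learning in Action*, simplified SMO: interpreting the selection condition for the first alpha: https://blog.csdn.net/sky_kkk/article/details/79535060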