Self-Driving Car Engineer Study Notes (16): Project code: Advanced Lane Finding

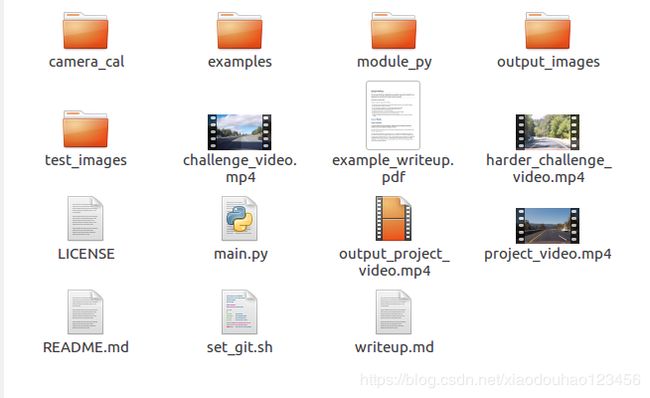

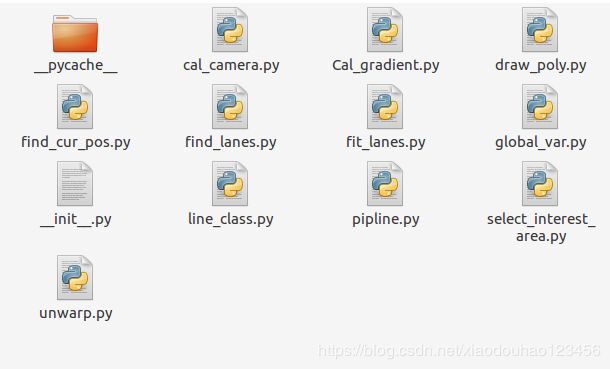

I wrote this "Advanced Lane Finding" project in Python. The program consists of `main.py` plus the sub-functions in the `module_py` package.

Below I walk through the logic of the code:


1. Camera calibration

I created `calibrate_cam()` in `module_py/cal_camera.py`, and also `undist_test()` to check the effect of the camera calibration matrix.

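Important steps:

- Define `objp`: the coordinates of the chessboard corners on an undistorted (ideal) board.
- Find `imgpoints`: the corners detected on the distorted chessboard images.

From these two sets of points, `cv2.calibrateCamera()` computes the camera's calibration parameters (camera matrix and distortion coefficients); `cv2.undistort()` then uses them to correct every image taken with this camera.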
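To make the `objp` construction concrete, here is a small stand-alone NumPy sketch that builds the ideal corner grid for a tiny 3 x 2 board. The helper name `make_object_points` is mine, not the project's; `calibrate_cam()` below builds the same array with the project's `nx`, `ny`.

```python
import numpy as np


def make_object_points(nx, ny):
    """Ideal chessboard corner coordinates (x, y, 0), one unit per square."""
    objp = np.zeros((nx * ny, 3), np.float32)
    # np.mgrid[0:nx, 0:ny] has shape (2, nx, ny); after .T it is (ny, nx, 2),
    # so reshape(-1, 2) lists (x, y) pairs with x varying fastest.
    objp[:, :2] = np.mgrid[0:nx, 0:ny].T.reshape(-1, 2)
    return objp


objp = make_object_points(3, 2)
print(objp[:, :2].astype(int).tolist())
# [[0, 0], [1, 0], [2, 0], [0, 1], [1, 1], [2, 1]]
```

Because z is always 0, the board is treated as the plane z = 0 in world space, and the units are "chessboard squares" rather than millimeters; that is enough for undistortion.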

```python
import cv2
import matplotlib.pyplot as plt
import numpy as np

from module_py.global_var import nx, ny  # inner-corner counts of the chessboard


def calibrate_cam(is_test=False):
    # Prepare object points, like (0,0,0), (1,0,0), (2,0,0), ..., (nx-1,ny-1,0)
    objp = np.zeros((nx * ny, 3), np.float32)
    objp[:, :2] = np.mgrid[0:nx, 0:ny].T.reshape(-1, 2)  # coordinates of every grid corner

    # Arrays to store object points and image points from all the images
    objpoints = []  # 3D points in real-world space
    imgpoints = []  # 2D points in the image plane

    # Step through the calibration images and search for chessboard corners
    for i in range(1, 21):
        fname = 'camera_cal/calibration{}.jpg'.format(i)  # path relative to main.py
        img = cv2.imread(fname)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

        # Find the chessboard corners
        ret, corners = cv2.findChessboardCorners(gray, (nx, ny), None)

        # If found, add object points and image points
        if ret:
            objpoints.append(objp)     # ideal coordinates of every corner
            imgpoints.append(corners)  # detected corner coordinates in this image
            # Draw the corners (only useful when inspecting or saving the image)
            cv2.drawChessboardCorners(img, (nx, ny), corners, ret)
            # cv2.imwrite('corners_found{}.jpg'.format(i), img)

    # All calibration images have the same size, so any one of them gives img_size
    img = cv2.imread('camera_cal/calibration2.jpg')
    img_size = (img.shape[1], img.shape[0])

    # Do camera calibration given object points and image points
    ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, img_size, None, None)
    return mtx, dist


def undist_test(img, mtx, dist):
    # Undistort a test image (e.g. camera_cal/calibration2.jpg)
    dst = cv2.undistort(img, mtx, dist, None, mtx)
    # cv2.imwrite('output_images/test_undist.jpg', dst)

    # Visualize the undistortion result
    f, (ax1, ax2) = plt.subplots(1, 2, figsize=(20, 10))
    ax1.imshow(img)
    ax1.set_title('Original Image', fontsize=15)
    ax2.imshow(dst)
    ax2.set_title('Undistorted Image', fontsize=15)
    plt.show()

    # Optionally save the calibration result for later use (rvecs / tvecs are not needed):
    # import pickle
    # pickle.dump({"mtx": mtx, "dist": dist}, open("wide_dist_pickle.p", "wb"))
```

2. Obtain a binary image of the pixels in the region of interest that satisfy the conditions

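The pixels must satisfy the following conditions:

- the grayscale gradient in x or y lies within a threshold range
- the gradient magnitude lies within a range
- the gradient direction lies within a range
- the value of a color channel (here the HLS S channel) lies within a range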

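Program steps:

- Gaussian blur
- Convert to HLS color space and separate the S channel:

```python
hls = cv2.cvtColor(img, cv2.COLOR_RGB2HLS)
s = hls[:, :, 2]
```

- Convert to grayscale:

```python
gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
```

- Filter the pixels by the conditions above (you can also define other conditions of your own):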

```python
# Apply each of the thresholding functions (ksize: Sobel kernel size, set elsewhere)
# Custom helper: gradient in x or y; pixels whose gradient is within the threshold become 1, others 0
gradx = abs_sobel_thresh(gray, orient='x', sobel_kernel=ksize, thresh=(10, 255))
grady = abs_sobel_thresh(gray, orient='y', sobel_kernel=ksize, thresh=(60, 255))
# Custom helper: gradient magnitude; pixels whose magnitude is within the range become 1
mag_binary = mag_thresh(gray, sobel_kernel=ksize, mag_thresh=(40, 255))
# Custom helper: gradient direction (radians); keep pixels whose direction is within the range
dir_binary = dir_threshold(gray, sobel_kernel=ksize, thresh=(.65, 1.05))

# Combine the gradient conditions: (x AND y gradient) OR (magnitude AND direction)
combined = np.zeros_like(dir_binary)
combined[((gradx == 1) & (grady == 1)) | ((mag_binary == 1) & (dir_binary == 1))] = 1

# Threshold the color channel: keep pixels whose S value is in the range of interest
s_binary = np.zeros_like(combined)
s_binary[(s > 160) & (s < 255)] = 1

# Final binary image: pixels that pass the gradient combination OR the S-channel threshold
color_binary = np.zeros_like(combined)
color_binary[(s_binary > 0) | (combined > 0)] = 1
```

- Keep only the points inside the region of interest:

```python
# Define the vertices of the region of interest: a polygon framing the left and right
# lane lines (the inner vertices cut out the area between the two lanes)
imshape = img.shape
left_bottom = (100, imshape[0])
right_bottom = (imshape[1] - 20, imshape[0])
apex1 = (610, 410)
apex2 = (680, 410)
inner_left_bottom = (310, imshape[0])
inner_right_bottom = (1150, imshape[0])
inner_apex1 = (700, 480)
inner_apex2 = (650, 480)
vertices = np.array([[left_bottom, apex1, apex2,
                      right_bottom, inner_right_bottom,
                      inner_apex1, inner_apex2, inner_left_bottom]], dtype=np.int32)

# Mask (custom helper): pixels equal to 1 inside the region stay 1, everything outside becomes 0
color_binary = region_of_interest(color_binary, vertices)
```

3. Perspective transform

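Apply a perspective transform to the image obtained above to get a top-down (bird's-eye) view, which makes the later work, such as fitting second-order polynomials to the lane lines, easier.

The key step is defining points in the current camera view and their corresponding points in the top-down view; how accurately these two sets of points correspond determines the accuracy of the transform.

From this correspondence we obtain the perspective transform matrix and the inverse perspective transform matrix, which warp the image into the top-down view and back.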
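To see why four point pairs are enough, here is a small NumPy sketch of the 3x3 matrix that `cv2.getPerspectiveTransform()` computes: the bottom-right entry is fixed to 1 and the remaining 8 unknowns are solved from the 8 linear equations given by 4 correspondences. The function names `perspective_matrix` / `warp_points` and the `src` coordinates are illustrative assumptions, not the project's values.

```python
import numpy as np


def perspective_matrix(src, dst):
    """3x3 matrix H (normalized so H[2, 2] = 1) that maps 4 src points onto 4 dst points.

    Each pair (x, y) -> (u, v) with u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1)
    and v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1) gives two linear equations,
    so 4 point pairs give 8 equations for the 8 unknowns h0..h7.
    """
    A, b = [], []
    for (x, y), (u, v) in zip(np.asarray(src, float), np.asarray(dst, float)):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A), np.array(b))
    return np.append(h, 1.0).reshape(3, 3)


def warp_points(H, pts):
    """Map (N, 2) points through H, dividing by the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    p = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return p[:, :2] / p[:, 2:]


# Hypothetical src trapezoid for a 1280x720 frame, listed in the same order as dst
src = np.float32([[585, 455], [705, 455], [1130, 720], [190, 720]])
# dst built like the project's code with offset1 = 200, offset2 = offset3 = 0
dst = np.float32([[200, 0], [1080, 0], [1080, 720], [200, 720]])

M = perspective_matrix(src, dst)     # camera view -> bird's-eye view
Minv = perspective_matrix(dst, src)  # bird's-eye view -> camera view
print(np.round(warp_points(M, src)))  # lands on the dst rectangle
```

Swapping the arguments gives the inverse mapping, which is how `Minv` is obtained for drawing the results back onto the camera view. The order of the four points matters: `src[i]` must correspond to `dst[i]`.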

```python
# Offsets used to place the destination points
offset1 = 200  # x offset of the dst points from the left/right image edges
offset2 = 0    # offset of the dst points' bottom y from the image bottom
offset3 = 0    # y value of the dst points' top edge

# Grab the image shape as (width, height)
img_size = (img.shape[1], img.shape[0])

# Source points: the four corners of the lane trapezoid (area_of_interest is defined
# elsewhere in the project; its corners must be listed in the same order as dst:
# top-left, top-right, bottom-right, bottom-left)
src = np.float32(area_of_interest)

# Destination points: a rectangle chosen so the warped result displays nicely
dst = np.float32([[offset1, offset3],
                  [img_size[0] - offset1, offset3],
                  [img_size[0] - offset1, img_size[1] - offset2],
                  [offset1, img_size[1] - offset2]])

# Given src and dst points, calculate the perspective transform matrix and its inverse
M = cv2.getPerspectiveTransform(src, dst)
Minv = cv2.getPerspectiveTransform(dst, src)

# Warp the undistorted image using OpenCV warpPerspective()
warped = cv2.warpPerspective(undist, M, img_size)
```

4. Find the lane lines

Steps 2 and 3 have given us a binary image of the qualifying pixels inside the region of interest, warped to a top-down view. We can now use this result as input to locate the lane lines.
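The post does not show `find_lanes()` itself. The sketch below follows the idea described in the comments of the next code block: cut the warped binary image into horizontal strips, take the peak of a column-sum histogram in each strip as a provisional lane center, refine it with the pixels around it, and collect those pixels. The names `find_lane_pixels`, `n_windows` and `margin` are hypothetical. It uses a single window scheme instead of accumulating the 4/6/8-window results, and it does not store centers in a `Line` class.

```python
import numpy as np


def find_lane_pixels(binary, n_windows=8, margin=50):
    """Collect the (x, y) pixels of the left and right lane lines in a warped binary image."""
    h, w = binary.shape
    ys, xs = binary.nonzero()  # coordinates of all nonzero pixels
    mid = w // 2
    left_x, left_y, right_x, right_y = [], [], [], []
    edges = np.linspace(h, 0, n_windows + 1).astype(int)  # strip boundaries, bottom to top
    for y_hi, y_lo in zip(edges[:-1], edges[1:]):
        hist = binary[y_lo:y_hi].sum(axis=0)  # column sums inside this strip
        in_strip = (ys >= y_lo) & (ys < y_hi)
        for lo, hi, out_x, out_y in ((0, mid, left_x, left_y), (mid, w, right_x, right_y)):
            if hist[lo:hi].max() == 0:
                continue  # no pixels of this line in this strip
            center = lo + int(np.argmax(hist[lo:hi]))  # provisional lane center
            sel = in_strip & (np.abs(xs - center) <= margin)
            center = int(round(xs[sel].mean()))  # refine the center with the pixels themselves
            sel = in_strip & (np.abs(xs - center) <= margin)
            out_x.extend(xs[sel].tolist())
            out_y.extend(ys[sel].tolist())
    return np.array(left_x), np.array(left_y), np.array(right_x), np.array(right_y)


# Synthetic warped binary image with two straight lane lines
binary = np.zeros((80, 200), np.uint8)
binary[:, 40] = 1
binary[:, 150] = 1
lx, ly, rx, ry = find_lane_pixels(binary, n_windows=4, margin=10)
left_fit = np.polyfit(ly, lx, 2)  # x = A*y**2 + B*y + C, as in the project
```

Fitting x as a function of y (not y of x) matters because lane lines are close to vertical in the warped view, where y = f(x) would not be a function.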

- Find the pixels that make up the lane lines:

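```python
# Split the image in y into n windows (n = 4, 6 or 8) to limit the lane's region of
# interest in y. In each window, compute a column-sum histogram and take the maximum
# within the allowed region as the provisional lane center line.
# 25 / 50 / 75: search range left and right of the lane center, i.e. the lane's
#   region of interest in x.
# lanes: indices of all nonzero elements of the warped binary image of the region of interest.
# left_lane_x, left_lane_y, right_lane_x, right_lane_y: coordinates of the lane pixels found.
# 0 / 1 / 2: index of the window-splitting scheme, used to tell the schemes apart when
#   the left/right line centers are stored in the window attribute of the Line class.
# The provisional center is then corrected using all pixels of `lanes` inside the current
# lane region; looping over all windows collects every pixel that makes up the lane line.
left_lane_x, left_lane_y, right_lane_x, right_lane_y = find_lanes(
    4, image, 25, lanes, left_lane_x, left_lane_y, right_lane_x, right_lane_y, 0)

# Accumulate the lane pixels with two more window schemes (three in total)
left_lane_x, left_lane_y, right_lane_x, right_lane_y = find_lanes(
    6, image, 50, lanes, left_lane_x, left_lane_y, right_lane_x, right_lane_y, 1)
left_lane_x, left_lane_y, right_lane_x, right_lane_y = find_lanes(
    8, image, 75, lanes, left_lane_x, left_lane_y, right_lane_x, right_lane_y, 2)

# Find the polynomial coefficients: fit x = f(y) to each lane's pixels, then evaluate
# the polynomial at yvals to get the fitted lane-line points
left_fit = np.polyfit(left_lane_y, left_lane_x, 2)
left_fitx = left_fit[0] * yvals**2 + left_fit[1] * yvals + left_fit[2]
right_fit = np.polyfit(right_lane_y, right_lane_x, 2)
right_fitx = right_fit[0] * yvals**2 + right_fit[1] * yvals + right_fit[2]
```

- Compute the radius of curvature of each lane line and check whether the detected lines are plausible: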
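`find_curvature()` is not listed in the post either. For a second-order fit x = Ay^2 + By + C, the standard radius of curvature at a given y is R = (1 + (2Ay + B)^2)^(3/2) / |2A|. A minimal sketch, assuming the function refits the fitted pixels in meters using the hypothetical conversion factors `YM_PER_PIX` and `XM_PER_PIX` (the post does not give the project's values) and evaluates R at the bottom row, closest to the car:

```python
import numpy as np

# Hypothetical pixel-to-meter factors (not given in the post)
YM_PER_PIX = 30 / 720    # meters per pixel along y
XM_PER_PIX = 3.7 / 700   # meters per pixel along x


def find_curvature(yvals, fitx, ym_per_pix=YM_PER_PIX, xm_per_pix=XM_PER_PIX):
    """Radius of curvature (in meters) at the bottom of the image (largest y).

    Refits x = A*y**2 + B*y + C in world units and evaluates
    R = (1 + (2*A*y + B)**2) ** 1.5 / |2*A|. A nearly straight line gives a very large radius.
    """
    y = np.asarray(yvals, dtype=float) * ym_per_pix
    x = np.asarray(fitx, dtype=float) * xm_per_pix
    A, B, _ = np.polyfit(y, x, 2)
    y_eval = y.max()  # the row closest to the car
    return (1 + (2 * A * y_eval + B) ** 2) ** 1.5 / abs(2 * A)


# With unit scale factors, x = 0.001*y**2 evaluated at y = 100 gives 1.04**1.5 / 0.002
y = np.arange(101)
r = find_curvature(y, 0.001 * y ** 2, ym_per_pix=1.0, xm_per_pix=1.0)
```

Refitting in meters, rather than converting a pixel-space radius afterwards, is needed because x and y have different pixel scales after the perspective warp.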

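```python
# Find curvatures: pass the fitted lane-line points to find_curvature(), which returns the radius of curvature
left_curverad = find_curvature(yvals, left_fitx)
right_curverad = find_curvature(yvals, right_fitx)

# Sanity check for the lanes: find_lanes() stored each lane's window centers in the window
# attribute of left_lane / right_lane; check whether the values we obtained are valid
left_fitx = sanity_check(left_lane, left_curverad, left_fitx, left_fit)
right_fitx = sanity_check(right_lane, right_curverad, right_fitx, right_fit)

# Returns: yvals, the x values of the left/right lane center lines, the points that make up
# the left/right lane regions, and the radius of curvature
```

5. Compose and display the result image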

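Now that we have the lane information, we can overlay it on the image for display.

The steps are:

- Overlay the drivable area on the main image
- Overlay the text annotations on the image
- Extract the small lane-line image (top right) and overlay it on the image
- Overlay the lane lines on the main image
- Overlay the small perspective-transformed image (top left) on the image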
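`find_position()` (imported from `module_py/find_cur_pos.py`) is not shown. Judging only from how `draw_poly()` calls it, with `pts = np.argwhere(newwarp[:,:,1])` and the image shape, and treating a negative result as "left of center", a plausible sketch is below. The camera-at-image-center assumption and the `XM_PER_PIX` factor are hypothetical.

```python
import numpy as np

XM_PER_PIX = 3.7 / 700  # hypothetical meters per pixel along x


def find_position(pts, image_shape, xm_per_pix=XM_PER_PIX):
    """Signed offset (m) of the car from the lane center; negative means left of center.

    pts: (row, col) pairs of the lane-area pixels, as returned by np.argwhere.
    Assumes the camera is mounted at the horizontal center of the image.
    """
    bottom = pts[:, 0].max()
    cols = pts[pts[:, 0] == bottom, 1]  # lane-area columns on the row nearest the car
    lane_center = (cols.min() + cols.max()) / 2
    car_center = image_shape[1] / 2
    return (car_center - lane_center) * xm_per_pix


# Synthetic lane area spanning columns 500..900 at the bottom of a 1280x720 frame
mask = np.zeros((720, 1280), dtype=bool)
mask[400:, 500:901] = True
offset = find_position(np.argwhere(mask), (720, 1280, 3))
```

Here the lane center is at x = 700 while the image center is at x = 640, so the car sits 60 px left of the lane center and the offset is negative; `draw_poly()` would print "Vehicle is 0.32 m left of center".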

The composited image looks like this:

```python
import cv2
import numpy as np

from module_py.find_cur_pos import find_position


# Draw the lane polygon and overlays on an image.
# Unlike the original draw_poly(image, warped, yvals, left_fitx, right_fitx, Minv),
# this version composes several images into one frame instead of showing one image.
#   image: original image; warped: perspective-transformed binary image
#   yvals: y values; left_fitx, right_fitx: x values of the left/right lane center lines
#   left_lane_x, left_lane_y, right_lane_x, right_lane_y: points of the left/right lane regions
#   Minv: inverse perspective matrix; curvature: radius of curvature (m)
#   img: binary image of the pixels in the region of interest that pass all thresholds
def draw_poly(image, warped, yvals, left_fitx, right_fitx,
              left_lane_x, left_lane_y, right_lane_x, right_lane_y, Minv, curvature, img):
    # Blank 3-channel image for the drivable area between the two lane center lines
    warp_zero = np.zeros_like(warped).astype(np.uint8)
    color_warp = np.dstack((warp_zero, warp_zero, warp_zero))

    # Recast the x and y points into a usable format for cv2.fillPoly()
    pts_left = np.array([np.transpose(np.vstack([left_fitx, yvals]))])
    pts_right = np.array([np.flipud(np.transpose(np.vstack([right_fitx, yvals])))])
    pts = np.hstack((pts_left, pts_right))

    # Fill the drivable area in green on the warped blank image
    cv2.fillPoly(color_warp, np.int_([pts]), (0, 255, 0))

    # Warp the blank back to original image space using the inverse perspective matrix (Minv)
    newwarp = cv2.warpPerspective(color_warp, Minv, (image.shape[1], image.shape[0]))
    # Overlay the drivable area on the original image with weight 0.3
    result = cv2.addWeighted(image, 1, newwarp, 0.3, 0)

    # Put text on the image
    font = cv2.FONT_HERSHEY_SIMPLEX
    text = "Radius of Curvature: {} m".format(int(curvature))
    cv2.putText(result, text, (400, 100), font, 1, (255, 255, 255), 2)

    # Find the position of the car relative to the lane center
    pts = np.argwhere(newwarp[:, :, 1])
    position = find_position(pts, image.shape)
    if position < 0:
        text = "Vehicle is {:.2f} m left of center".format(-position)
    else:
        text = "Vehicle is {:.2f} m right of center".format(position)
    cv2.putText(result, text, (400, 150), font, 1, (255, 255, 255), 2)

    # Assemble the thumbnails: scale the binary images to 0..255 and stack to 3 channels
    img = img / np.max(img) * 255
    warped = warped / np.max(warped) * 255
    img_stacked = np.dstack((img, img, img))
    warped_stacked = np.dstack((warped, warped, warped))
    warped_stacked_line = np.dstack((warped, warped, warped))

    pts_left = pts_left.reshape((-1, 1, 2))
    pts_right = pts_right.reshape((-1, 1, 2))

    # Draw the lane lines segment by segment (points 0-1, 2-3, ...): thin lines on the
    # top-right thumbnail, thick red lines on the image that is warped back onto the main view
    for lane_pts, thumb_color in ((pts_left, [0, 100, 0]), (pts_right, [0, 0, 255])):
        for first_line, end_line in zip(lane_pts[0::2], lane_pts[1::2]):
            p1 = (int(first_line[0][0]), int(first_line[0][1]))
            p2 = (int(end_line[0][0]), int(end_line[0][1]))
            cv2.line(warped_stacked, p1, p2, thumb_color, 15)
            cv2.line(warped_stacked_line, p1, p2, [0, 0, 255], 50)

    # Draw the lane lines on the main image
    new_warped_stacked = cv2.warpPerspective(warped_stacked_line, Minv,
                                             (image.shape[1], image.shape[0]))
    result1 = cv2.addWeighted(np.uint8(new_warped_stacked), 0.5, result, 1, 0)

    # Combine the main image and the two thumbnails into one 1280x720 frame
    combined = np.zeros((720, 1280, 3), dtype=np.uint8)
    combined[:, 0:1280] = result1
    combined[:160, :320] = cv2.resize(img_stacked, (320, 160))
    combined[:160, 960:] = cv2.resize(warped_stacked, (320, 160))

    # Label the thumbnails just below them, inside the 1280-px-wide frame
    cv2.putText(combined, "Masked Image", (10, 190), font, 1, (255, 255, 255), 2)
    cv2.putText(combined, "Warped Image", (970, 190), font, 1, (255, 255, 255), 2)
    return combined
```