SparkSQL源码解读1.6

总的流程入下:

1.通过Sqlparse 转成unresolved Logicplan

2.通过Analyzer转成 resolved Logicplan

3.通过optimizer转成 optimzed Logicplan

4.通过sparkplanner转成physical Logicplan

5.通过prepareForExecution 转成executable logicplan

6.通过toRDD等方法执行executedplan去调用tree的doExecute

SparkSQL执行入口:sqlContext.sql()

//SparkSQL执行入口

/**

* Executes a SQL query using Spark, returning the result as a [[DataFrame]]. The dialect that is

* used for SQL parsing can be configured with 'spark.sql.dialect'.

*

* @group basic

* @since 1.3.0

*/

def sql(sqlText: String): DataFrame = {

DataFrame(this, parseSql(sqlText))

}parseSql(sqlText)往里点,直到AbstractSparkSQLParser:

def parse(input: String): LogicalPlan = synchronized {

// Initialize the Keywords.

initLexical

phrase(start)(new lexical.Scanner(input)) match {

case Success(plan, _) => plan

case failureOrError => sys.error(failureOrError.toString)

}

}这里再往start 里点 protected def start: Parser[LogicalPlan]这是个接口,所以得往回找实现类,找到:

protected[sql] def parseSql(sql: String): LogicalPlan = ddlParser.parse(sql, false)然后再ddlParse里面找到start:

protected lazy val ddl: Parser[LogicalPlan] = createTable | describeTable | refreshTable

protected def start: Parser[LogicalPlan] = ddl假如我们执行的sql语句为:

SELECT fcustnumber,FCREATETIME FROM clue 显然不在:createTable | describeTable | refreshTable里面,前面parse抛异常,再往前翻,然后执行parseQuery(input)

def parse(input: String, exceptionOnError: Boolean): LogicalPlan = {

try {

parse(input)

} catch {

case ddlException: DDLException => throw ddlException

case _ if !exceptionOnError => parseQuery(input)

case x: Throwable => throw x

}

}class DDLParser(parseQuery: String => LogicalPlan)从上面这行代码可以看出,parseQuery是从new DDLParser对象时传入的,再往前翻

protected[sql] def parseSql(sql: String): LogicalPlan = ddlParser.parse(sql, false)再看ddlParser是怎么来的:

@transient

protected[sql] val ddlParser = new DDLParser(sqlParser.parse(_))

@transient

protected[sql] val sqlParser = new SparkSQLParser(getSQLDialect().parse(_))

从上面可以看出,其实parseQuery(input) 执行的就是sqlParser.parse(_) , sqlParser是SparkSQLParser的一个对象。而SparkSQLParser中并没有重写parse方法,执行的还是父类AbstractSparkSQLParser中的parse方法,又到了

def parse(input: String): LogicalPlan = synchronized {

// Initialize the Keywords.

initLexical

phrase(start)(new lexical.Scanner(input)) match {

case Success(plan, _) => plan

case failureOrError => sys.error(failureOrError.toString)

}

}又看start,不过此时的解释器为SparkSQLParser,

override protected lazy val start: Parser[LogicalPlan] =

cache | uncache | set | show | desc | others根据我们需要执行的sql,显然属于others

private lazy val others: Parser[LogicalPlan] =

wholeInput ^^ {

case input => fallback(input)

}这里的fallback方法是创建SparkSQLParser对象是传入的

class SparkSQLParser(fallback: String => LogicalPlan) extends AbstractSparkSQLParser如下:

@transient

protected[sql] val sqlParser = new SparkSQLParser(getSQLDialect().parse(_))

protected[sql] def getSQLDialect(): ParserDialect = {

try {

val clazz = Utils.classForName(dialectClassName)

clazz.newInstance().asInstanceOf[ParserDialect]

} catch {

case NonFatal(e) =>

// Since we didn't find the available SQL Dialect, it will fail even for SET command:

// SET spark.sql.dialect=sql; Let's reset as default dialect automatically.

val dialect = conf.dialect

// reset the sql dialect

conf.unsetConf(SQLConf.DIALECT)

// throw out the exception, and the default sql dialect will take effect for next query.

throw new DialectException(

s"""Instantiating dialect '$dialect' failed.

|Reverting to default dialect '${conf.dialect}'""".stripMargin, e)

}

}点进parse方法,发现也是个接口,找到对应的实现类DefaultParserDialect

private[spark] class DefaultParserDialect extends ParserDialect {

@transient

protected val sqlParser = SqlParser

override def parse(sqlText: String): LogicalPlan = {

sqlParser.parse(sqlText)

}

}此时的sqlParser是SqlParser的一个对象,再点入parse方法,发现又回到这里了(有点绕)

def parse(input: String): LogicalPlan = synchronized {

// Initialize the Keywords.

initLexical

phrase(start)(new lexical.Scanner(input)) match {

case Success(plan, _) => plan

case failureOrError => sys.error(failureOrError.toString)

}

}找到SqlParse的start

protected lazy val start: Parser[LogicalPlan] =

start1 | insert | cte

protected lazy val start1: Parser[LogicalPlan] =

(select | ("(" ~> select <~ ")")) *

( UNION ~ ALL ^^^ { (q1: LogicalPlan, q2: LogicalPlan) => Union(q1, q2) }

| INTERSECT ^^^ { (q1: LogicalPlan, q2: LogicalPlan) => Intersect(q1, q2) }

| EXCEPT ^^^ { (q1: LogicalPlan, q2: LogicalPlan) => Except(q1, q2)}

| UNION ~ DISTINCT.? ^^^ { (q1: LogicalPlan, q2: LogicalPlan) => Distinct(Union(q1, q2)) }

)返回一个unresolved Logicplan ,至此,parseSql(sqlText)方法解读完了。接着

def sql(sqlText: String): DataFrame = {

DataFrame(this, parseSql(sqlText))

}一直往里点,直到

/**

* The primary workflow for executing relational queries using Spark. Designed to allow easy

* access to the intermediate phases of query execution for developers.

*

* While this is not a public class, we should avoid changing the function names for the sake of

* changing them, because a lot of developers use the feature for debugging.

*/

class QueryExecution(val sqlContext: SQLContext, val logical: LogicalPlan) {

def assertAnalyzed(): Unit = sqlContext.analyzer.checkAnalysis(analyzed)

lazy val analyzed: LogicalPlan = sqlContext.analyzer.execute(logical)

lazy val withCachedData: LogicalPlan = {

assertAnalyzed()

sqlContext.cacheManager.useCachedData(analyzed)

}

lazy val optimizedPlan: LogicalPlan = sqlContext.optimizer.execute(withCachedData)

lazy val sparkPlan: SparkPlan = {

SQLContext.setActive(sqlContext)

sqlContext.planner.plan(optimizedPlan).next()

}

// executedPlan should not be used to initialize any SparkPlan. It should be

// only used for execution.

lazy val executedPlan: SparkPlan = sqlContext.prepareForExecution.execute(sparkPlan)

/** Internal version of the RDD. Avoids copies and has no schema */

lazy val toRdd: RDD[InternalRow] = executedPlan.execute()

protected def stringOrError[A](f: => A): String =

try f.toString catch { case e: Throwable => e.toString }

def simpleString: String = {

s"""== Physical Plan ==

|${stringOrError(executedPlan)}

""".stripMargin.trim

}

override def toString: String = {

def output =

analyzed.output.map(o => s"${o.name}: ${o.dataType.simpleString}").mkString(", ")

s"""== Parsed Logical Plan ==

|${stringOrError(logical)}

|== Analyzed Logical Plan ==

|${stringOrError(output)}

|${stringOrError(analyzed)}

|== Optimized Logical Plan ==

|${stringOrError(optimizedPlan)}

|== Physical Plan ==

|${stringOrError(executedPlan)}

""".stripMargin.trim

}

}QueryExecution这个类是SQL解析的主流程。下面一步步来解析这个主流程。首先来看

//通过Analyzer转成 resolved Logicplan

lazy val analyzed: LogicalPlan = sqlContext.analyzer.execute(logical)往里点,发现execute这个方法里面在遍历batches这个集合,而batches是Analyzer里面的

lazy val batches: Seq[Batch] = Seq(

Batch("Substitution", fixedPoint,

CTESubstitution,

WindowsSubstitution),

Batch("Resolution", fixedPoint,

ResolveRelations ::

ResolveReferences ::

ResolveGroupingAnalytics ::

ResolvePivot ::

ResolveUpCast ::

ResolveSortReferences ::

ResolveGenerate ::

ResolveFunctions ::

ResolveAliases ::

ExtractWindowExpressions ::

GlobalAggregates ::

ResolveAggregateFunctions ::

DistinctAggregationRewriter(conf) ::

HiveTypeCoercion.typeCoercionRules ++

extendedResolutionRules : _*),

Batch("Nondeterministic", Once,

PullOutNondeterministic,

ComputeCurrentTime),

Batch("UDF", Once,

HandleNullInputsForUDF),

Batch("Cleanup", fixedPoint,

CleanupAliases)

)大概可以看出,这里面是一个个规则,粗略找了个规则ResolveSortReferences

object ResolveSortReferences extends Rule[LogicalPlan] {

def apply(plan: LogicalPlan): LogicalPlan = plan resolveOperators {

case s @ Sort(ordering, global, p @ Project(projectList, child))

if !s.resolved && p.resolved =>

val (newOrdering, missing) = resolveAndFindMissing(ordering, p, child)

// If this rule was not a no-op, return the transformed plan, otherwise return the original.

if (missing.nonEmpty) {

// Add missing attributes and then project them away after the sort.

Project(p.output,

Sort(newOrdering, global,

Project(projectList ++ missing, child)))

} else {

logDebug(s"Failed to find $missing in ${p.output.mkString(", ")}")

s // Nothing we can do here. Return original plan.

}

}大致可以看出返回的是一个resolve logicalPlan。然后再回到sqlContext.analyzer.execute(logical)方法

def execute(plan: TreeType): TreeType = {

var curPlan = plan

batches.foreach { batch =>

val batchStartPlan = curPlan

var iteration = 1

var lastPlan = curPlan

var continue = true

// Run until fix point (or the max number of iterations as specified in the strategy.

while (continue) {

curPlan = batch.rules.foldLeft(curPlan) {

case (plan, rule) =>

val startTime = System.nanoTime()

val result = rule(plan) // unresolved Logic plan 转成 resolved Logic plan

val runTime = System.nanoTime() - startTime

RuleExecutor.timeMap.addAndGet(rule.ruleName, runTime)

if (!result.fastEquals(plan)) {

logTrace(

s"""

|=== Applying Rule ${rule.ruleName} ===

|${sideBySide(plan.treeString, result.treeString).mkString("\n")}

""".stripMargin)

}

result

}

iteration += 1

if (iteration > batch.strategy.maxIterations) {

// Only log if this is a rule that is supposed to run more than once.

if (iteration != 2) {

logInfo(s"Max iterations (${iteration - 1}) reached for batch ${batch.name}")

}

continue = false

}

if (curPlan.fastEquals(lastPlan)) {

logTrace(

s"Fixed point reached for batch ${batch.name} after ${iteration - 1} iterations.")

continue = false

}

lastPlan = curPlan

}

if (!batchStartPlan.fastEquals(curPlan)) {

logDebug(

s"""

|=== Result of Batch ${batch.name} ===

|${sideBySide(plan.treeString, curPlan.treeString).mkString("\n")}

""".stripMargin)

} else {

logTrace(s"Batch ${batch.name} has no effect.")

}

}

curPlan

}这个方法还是比较复杂的,实际上将unresolve logicPlan转为resolve logicPlan是在这一步

val result = rule(plan) // unresolved Logic plan 转成 resolved Logic plan将unresolve logicPlan转为resolve logicPlan之后,然后检查哪些表缓存起来了,用缓存起来的logicPlan替换掉原有的logicPlan

lazy val withCachedData: LogicalPlan = {

assertAnalyzed()

sqlContext.cacheManager.useCachedData(analyzed)

}接下来是优化器,它的执行过程和unresolve logicPlan转为resolve logicPlan的过程一样。

//通过optimizer转成 optimzed Logicplan (优化器)

lazy val optimizedPlan: LogicalPlan = sqlContext.optimizer.execute(withCachedData)接下来就是通过sparkplanner转成physical Logicplan (物理逻辑计划)

//.通过sparkplanner转成physical Logicplan (物理逻辑计划)

lazy val sparkPlan: SparkPlan = {

SQLContext.setActive(sqlContext)

sqlContext.planner.plan(optimizedPlan).next()

}通过prepareForExecution 转成executable logicplan 可执行逻辑计划(这一步和之前的unresolve logicePlan 转 resolve logicPlan的过程一样)

// only used for execution. 通过prepareForExecution 转成executable logicplan 可执行逻辑计划

lazy val executedPlan: SparkPlan = sqlContext.prepareForExecution.execute(sparkPlan)通过toRDD等方法执行executedplan去调用tree的doExecute

/** Internal version of the RDD. Avoids copies and has no schema 通过toRDD等方法执行executedplan去调用tree的doExecute */

lazy val toRdd: RDD[InternalRow] = executedPlan.execute() final def execute(): RDD[InternalRow] = {

if (children.nonEmpty) {

val hasUnsafeInputs = children.exists(_.outputsUnsafeRows)

val hasSafeInputs = children.exists(!_.outputsUnsafeRows)

assert(!(hasSafeInputs && hasUnsafeInputs),

"Child operators should output rows in the same format")

assert(canProcessSafeRows || canProcessUnsafeRows,

"Operator must be able to process at least one row format")

assert(!hasSafeInputs || canProcessSafeRows,

"Operator will receive safe rows as input but cannot process safe rows")

assert(!hasUnsafeInputs || canProcessUnsafeRows,

"Operator will receive unsafe rows as input but cannot process unsafe rows")

}

RDDOperationScope.withScope(sparkContext, nodeName, false, true) {

prepare()

doExecute()

}

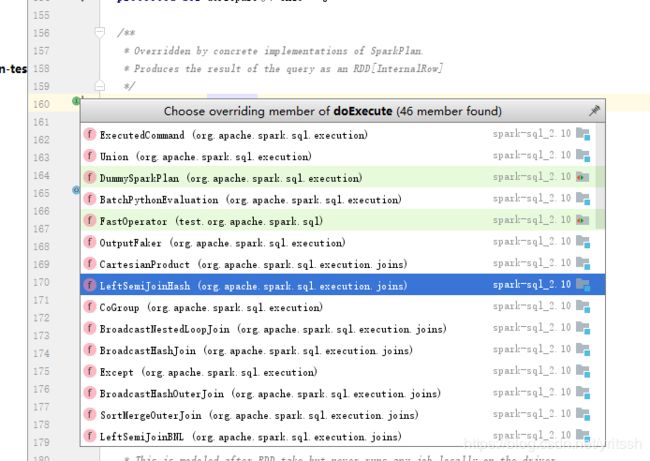

}然后发现doExecute的实现类都是一些sql语法里的关键字 ,返回的都是rdd

随便找了个实现类LeftSemiJoinHash

case class LeftSemiJoinHash(

leftKeys: Seq[Expression],

rightKeys: Seq[Expression],

left: SparkPlan,

right: SparkPlan,

condition: Option[Expression]) extends BinaryNode with HashSemiJoin {

override private[sql] lazy val metrics = Map(

"numLeftRows" -> SQLMetrics.createLongMetric(sparkContext, "number of left rows"),

"numRightRows" -> SQLMetrics.createLongMetric(sparkContext, "number of right rows"),

"numOutputRows" -> SQLMetrics.createLongMetric(sparkContext, "number of output rows"))

override def outputPartitioning: Partitioning = left.outputPartitioning

override def requiredChildDistribution: Seq[Distribution] =

ClusteredDistribution(leftKeys) :: ClusteredDistribution(rightKeys) :: Nil

protected override def doExecute(): RDD[InternalRow] = {

val numLeftRows = longMetric("numLeftRows")

val numRightRows = longMetric("numRightRows")

val numOutputRows = longMetric("numOutputRows")

right.execute().zipPartitions(left.execute()) { (buildIter, streamIter) =>

if (condition.isEmpty) {

val hashSet = buildKeyHashSet(buildIter, numRightRows)

hashSemiJoin(streamIter, numLeftRows, hashSet, numOutputRows)

} else {

val hashRelation = HashedRelation(buildIter, numRightRows, rightKeyGenerator)

hashSemiJoin(streamIter, numLeftRows, hashRelation, numOutputRows)

}

}

}

}简单看了下发现里面都是一些算子的操作。

可以发现,前面这些都是懒加载操作,所以没有遇到action算子是不会触发任务的。SparkSQL粗略的解析过程就是这样了。