Spark 2.4.0 Source Code Analysis (4): Spark Netty RPC Implementation and Endpoint

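Contents

- I. The Main Line: NettyRpcEnv

- 1. Overall Netty RPC flow

- 2. Creating NettyRpcEnv

- 3. NettyRpcEnv member variables and methods by category

- II. Client-side sending and outboxes

- 1. The sending interface of NettyRpcEndpointRef

- 2. postToOutbox(): submitting data to outboxes

- 3. drainOutbox(): sending data to the remote end

- III. Server-side receiving, the dispatcher, and the inbox

- 1. Where server-side dispatch ends: an overview of RpcEndpoint

- 2. Message dispatch in the Dispatcher

- 3. Polling inbox data in MessageLoop

- 4. How the Endpoint responds to inbox data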

I. The Main Line: NettyRpcEnv

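In the previous article we analyzed how Spark Netty implements the data transport layer, along with the Transport-related classes: TransportConf, TransportContext, TransportServer, TransportClientFactory, and TransportChannelHandler. These classes are all put to use inside NettyRpcEnv, most of them as member variables.

In this article we analyze how NettyRpcEnv wraps and uses these classes, that is, how RPC is implemented on top of them.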

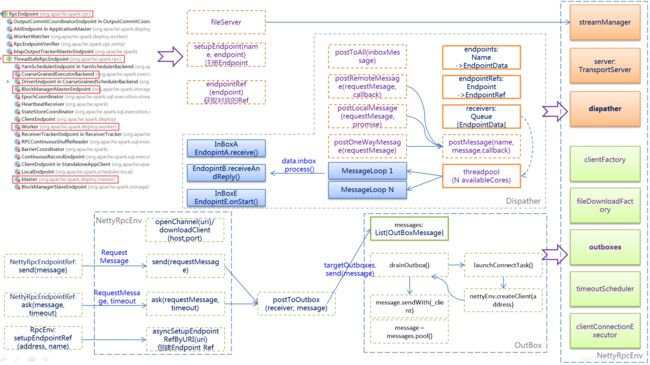

1. Overall Netty RPC flow

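Step ① is the special case of sending a message locally: the ask and send methods of NettyRpcEndpointRef send a message to an RpcEndpoint on the local node. Because sender and receiver are on the same node, the Dispatcher's postLocalMessage or postOneWayMessage method is called directly, which puts the message into the messages list of the Inbox inside the EndpointData. A MessageLoop thread eventually picks the message up and hands it to the corresponding RpcEndpoint.

Step ② is the flow for sending a message to a remote node: the ask and send methods of NettyRpcEndpointRef send a message to an RpcEndpoint on a remote node. In this case the message is first wrapped in an OutboxMessage and then placed in the messages list of the Outbox that corresponds to the remote RpcEndpoint's address.

Step ③ shows each Outbox's drainOutbox method looping to take OutboxMessage objects from the messages list.

Step ④ shows each Outbox's drainOutbox method using its internal TransportClient to send the OutboxMessage obtained in step ③ to the remote NettyRpcEnv.

Step ⑤ shows what happens to the request sent in step ④ once a connection to the remote NettyRpcEnv's TransportServer exists (launchConnectTask() creates the TransportClient). The request first passes through the Netty pipeline and then reaches NettyRpcHandler. NettyRpcHandler's receive method calls the Dispatcher's postRemoteMessage or postOneWayMessage method, which puts the message into the messages list of the Inbox inside the EndpointData. A MessageLoop thread eventually processes the message and hands it to the corresponding RpcEndpoint.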
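The choice between steps ① and ② comes down to comparing the receiver's address with the local address. The following is a minimal sketch of that decision only; `Address`, `Route`, `ToLocalInbox`, and `ToOutbox` are illustrative names invented here, not Spark classes.

```scala
object RoutingSketch {
  // Hypothetical stand-in for Spark's RpcAddress.
  final case class Address(host: String, port: Int)

  // Where a message goes next; both cases are illustrative names.
  sealed trait Route
  case object ToLocalInbox extends Route
  final case class ToOutbox(remote: Address) extends Route

  // Same node: deliver through the dispatcher into an Inbox.
  // Different node: queue in the Outbox kept for that remote address.
  def route(localAddress: Address, receiver: Address): Route =
    if (receiver == localAddress) ToLocalInbox else ToOutbox(receiver)
}
```

For example, `RoutingSketch.route(local, local)` yields `ToLocalInbox`, while any other receiver address yields `ToOutbox(receiver)`.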

2. Creating NettyRpcEnv

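NettyRpcEnv is the class that ties everything together. Starting from it, we can trace:

- How the client calls postToOutbox() to hand data to outboxes for delivery.

- How the server-side TransportChannelHandler receives data and calls NettyRpcHandler's receive() method, which passes the data to the dispatcher.

- NettyRpcEnv holds both server-related and client-related members and interface methods, which readers easily mix up on first contact.

- In fact, a NettyRpcEnv exists in the SparkEnv created by the driver and also in the SparkEnv created by each executor. On the driver, a TransportServer is started by default. Keeping this in mind makes the class easier to understand.

Label ① in the figure above shows that both the executor and the driver create a NettyRpcEnv, so the driver can also use the client-side features, for example to send messages to other remote servers. It is enough to be aware of this here.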

    private[rpc] class NettyRpcEnvFactory extends RpcEnvFactory with Logging {

      def create(config: RpcEnvConfig): RpcEnv = {
        val sparkConf = config.conf
        val javaSerializerInstance =
          new JavaSerializer(sparkConf).newInstance().asInstanceOf[JavaSerializerInstance]
        val nettyEnv =
          new NettyRpcEnv(sparkConf, javaSerializerInstance, config.advertiseAddress,
            config.securityManager, config.numUsableCores)
        // Only an environment that is not in client mode starts a server.
        if (!config.clientMode) {
          val startNettyRpcEnv: Int => (NettyRpcEnv, Int) = { actualPort =>
            nettyEnv.startServer(config.bindAddress, actualPort)
            (nettyEnv, nettyEnv.address.port)
          }
          try {
            Utils.startServiceOnPort(config.port, startNettyRpcEnv, sparkConf, config.name)._1
          } catch {
            case NonFatal(e) =>
              nettyEnv.shutdown()
              throw e
          }
        }
        nettyEnv
      }
    }
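The factory passes a function of type `Int => (NettyRpcEnv, Int)` to Utils.startServiceOnPort, which calls it with a candidate port. The sketch below illustrates only that calling pattern with a simple retry-on-next-port policy; `startOnPort` and its `retriesLeft` parameter are hypothetical, and Spark's real method takes its retry limit from configuration and handles more cases than shown here.

```scala
import java.net.BindException
import scala.annotation.tailrec

object PortRetrySketch {
  // Calls startService(port); on a BindException retries on the next port
  // until retriesLeft is exhausted. Returns the service and the bound port.
  @tailrec
  def startOnPort[T](port: Int, retriesLeft: Int)(startService: Int => (T, Int)): (T, Int) = {
    val attempt: Option[(T, Int)] =
      try Some(startService(port))
      catch { case _: BindException if retriesLeft > 0 => None }
    attempt match {
      case Some(result) => result
      case None => startOnPort(port + 1, retriesLeft - 1)(startService)
    }
  }
}
```

Passing the start logic as a function keeps the retry policy separate from the service being started, which is presumably why the factory wraps nettyEnv.startServer in the startNettyRpcEnv closure.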

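3. NettyRpcEnv member variables and methods by category

NettyRpcEnv has both server and client features. Following the figure, they can be grouped as follows:

- The upper part of the figure covers the server side: streamManager, transportServer, and dispatcher.

- The lower part of the figure covers the client side: clientFactory, outboxes, and clientConnectionExecutor.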

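II. Client-side sending and outboxes

1. The sending interface of NettyRpcEndpointRef

NettyRpcEndpointRef provides send() and ask(), which send the server a one-way message and an RPC message that expects a reply, respectively. Internally they call the corresponding methods of NettyRpcEnv: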

    private[netty] class NettyRpcEndpointRef(
        private val conf: SparkConf,
        private val endpointAddress: RpcEndpointAddress,
        private var nettyEnv: NettyRpcEnv) extends RpcEndpointRef(conf) {

      override def ask[T: ClassTag](message: Any, timeout: RpcTimeout): Future[T] = {
        nettyEnv.ask(new RequestMessage(nettyEnv.address, this, message), timeout)
      }

      override def send(message: Any): Unit = {
        require(message != null, "Message is null")
        nettyEnv.send(new RequestMessage(nettyEnv.address, this, message))
      }
    }

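As the code above shows, ask() and send() on NettyRpcEndpointRef simply wrap the message in a RequestMessage and delegate to the methods of the same name on NettyRpcEnv: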

    private[netty] class NettyRpcEnv(
        val conf: SparkConf,
        javaSerializerInstance: JavaSerializerInstance,
        host: String,
        ...) extends RpcEnv(conf) with Logging {

      // One-way send: no reply is expected
      private[netty] def send(message: RequestMessage): Unit = {
        val remoteAddr = message.receiver.address
        if (remoteAddr == address) {
          // Local case
          // Message to a local RPC endpoint.
          try {
            dispatcher.postOneWayMessage(message)
          } catch {
            case e: RpcEnvStoppedException => logDebug(e.getMessage)
          }
        } else {
          // Remote case
          // Message to a remote RPC endpoint.
          postToOutbox(message.receiver, OneWayOutboxMessage(message.serialize(this)))
        }
      }

      // Send a message and obtain the reply as a Future
      private[netty] def ask[T: ClassTag](message: RequestMessage, timeout: RpcTimeout): Future[T] = {
        val promise = Promise[Any]()
        val remoteAddr = message.receiver.address

        def onFailure(e: Throwable): Unit = {
          if (!promise.tryFailure(e)) {
            ...
          }
        }

        def onSuccess(reply: Any): Unit = reply match {
          case RpcFailure(e) => onFailure(e)
          case rpcReply =>
            if (!promise.trySuccess(rpcReply)) {
              logWarning(s"Ignored message: $reply")
            }
        }

        try {
          // Local case
          if (remoteAddr == address) {
            val p = Promise[Any]()
            p.future.onComplete {
              case Success(response) => onSuccess(response)
              case Failure(e) => onFailure(e)
            }(ThreadUtils.sameThread)
            dispatcher.postLocalMessage(message, p)
          } else {
            // Remote case
            val rpcMessage = RpcOutboxMessage(message.serialize(this),
              onFailure,
              (client, response) => onSuccess(deserialize[Any](client, response)))
            postToOutbox(message.receiver, rpcMessage)
            promise.future.failed.foreach {
              case _: TimeoutException => rpcMessage.onTimeout()
              case _ =>
            }(ThreadUtils.sameThread)
          }

          // Schedule the timeout
          val timeoutCancelable = timeoutScheduler.schedule(new Runnable {
            override def run(): Unit = {
              onFailure(new TimeoutException(s"Cannot receive any reply from ${remoteAddr} " +
                s"in ${timeout.duration}"))
            }
          }, timeout.duration.toNanos, TimeUnit.NANOSECONDS)
          // Cancel the pending timeout task once the promise completes
          promise.future.onComplete { v =>
            timeoutCancelable.cancel(true)
          }(ThreadUtils.sameThread)
        } catch {
          case NonFatal(e) =>
            onFailure(e)
        }
        promise.future.mapTo[T].recover(timeout.addMessageIfTimeout)(ThreadUtils.sameThread)
      }
    }

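The send() and ask() methods of NettyRpcEnv each distinguish a local case and a remote case:

- The local case calls the dispatcher's postOneWayMessage() or postLocalMessage() directly.

- The remote case calls postToOutbox(), which looks up the Outbox in outboxes that matches the remote address and then calls targetOutbox.send(message) to send the message.

In ask(), note how timeoutScheduler schedules a task, timeoutCancelable, to run once the timeout has elapsed; its run() method fails the RPC with a TimeoutException. If the RPC completes first, timeoutCancelable is cancelled.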
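The timeout mechanism can be reproduced in isolation with a Promise and a scheduled executor. This is a minimal sketch under stated assumptions: `AskTimeoutSketch` and its `ask` signature are invented for illustration, replies are plain strings, and the caller completes the returned promise by hand where Spark would do so from the transport layer.

```scala
import java.util.concurrent.{Executors, ThreadFactory, TimeUnit, TimeoutException}
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.concurrent.duration.FiniteDuration

object AskTimeoutSketch {
  // Daemon thread so the scheduler never keeps the JVM alive.
  private val timeoutScheduler = Executors.newSingleThreadScheduledExecutor(new ThreadFactory {
    override def newThread(r: Runnable): Thread = {
      val t = new Thread(r, "toy-timeout-scheduler")
      t.setDaemon(true)
      t
    }
  })

  // Returns the promise a (hypothetical) transport would complete, plus the caller's future.
  def ask(timeout: FiniteDuration): (Promise[String], Future[String]) = {
    val promise = Promise[String]()
    val timeoutCancelable = timeoutScheduler.schedule(new Runnable {
      override def run(): Unit = {
        // tryFailure does nothing if a reply already completed the promise.
        promise.tryFailure(new TimeoutException(s"No reply in $timeout"))
      }
    }, timeout.toNanos, TimeUnit.NANOSECONDS)
    // Whatever completes the promise first also cancels the pending timeout task.
    promise.future.onComplete(_ => timeoutCancelable.cancel(true))(ExecutionContext.global)
    (promise, promise.future)
  }
}
```

Because both the reply path and the timeout path use the `try` variants (`trySuccess`, `tryFailure`), whichever arrives second is silently ignored, so the race between reply and timeout needs no extra locking.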

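2. postToOutbox(): submitting data to outboxes

postToOutbox() is the method that submits data to outboxes.

If the receiver already carries a client (receiver.client != null), the message is sent with that client directly. Otherwise the method first looks up targetOutbox and then calls targetOutbox.send(message):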

    private[netty] class NettyRpcEnv(
        val conf: SparkConf,
        javaSerializerInstance: JavaSerializerInstance,
        host: String,
        ...) extends RpcEnv(conf) with Logging {

      private def postToOutbox(receiver: NettyRpcEndpointRef, message: OutboxMessage): Unit = {
        if (receiver.client != null) {
          message.sendWith(receiver.client)
        } else {
          val targetOutbox = {
            val outbox = outboxes.get(receiver.address)
            if (outbox == null) {
              val newOutbox = new Outbox(this, receiver.address)
              val oldOutbox = outboxes.putIfAbsent(receiver.address, newOutbox)
              ...
            } else {
              outbox
            }
          }
          if (stopped.get) {
            ...
          } else {
            // This is where the message is handed to the Outbox
            targetOutbox.send(message)
          }
        }
      }
    }
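The lookup of targetOutbox is a get-or-create on a concurrent map. The excerpt above elides what follows putIfAbsent, and that part is not reconstructed here; the sketch below only shows the usual way this idiom is completed, using hypothetical names (`ToyOutbox`, `outboxFor`) and string addresses.

```scala
import java.util.concurrent.ConcurrentHashMap

object OutboxLookupSketch {
  // Hypothetical stand-in for Outbox; it only remembers its address.
  final class ToyOutbox(val address: String)

  private val outboxes = new ConcurrentHashMap[String, ToyOutbox]()

  def outboxFor(address: String): ToyOutbox = {
    val existing = outboxes.get(address)
    if (existing != null) {
      existing
    } else {
      val fresh = new ToyOutbox(address)
      // putIfAbsent returns the previous value, or null if `fresh` was stored.
      val raced = outboxes.putIfAbsent(address, fresh)
      // If another thread stored an outbox first, use theirs and drop ours.
      if (raced == null) fresh else raced
    }
  }
}
```

The point of the idiom is that two threads sending to the same address at the same time still end up sharing one outbox, so message order per address is decided in a single queue.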

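3. drainOutbox(): sending data to the remote end

- Continuing from the previous subsection: postToOutbox() calls targetOutbox.send(message), which appends the message to the messages list and then calls drainOutbox() to send everything currently in that list.

- Note that near the start of drainOutbox(), if client is null, launchConnectTask() is called to create the client connection and drainOutbox() returns without sending. Internally, launchConnectTask() calls nettyEnv.createClient(address), which in turn calls clientFactory.createClient(address.host, address.port) to obtain a cached connection or to resolve DNS and create a new one. This is covered in the TransportClientFactory section of the previous article.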

    private[netty] class Outbox(nettyEnv: NettyRpcEnv, val address: RpcAddress) {

      private val messages = new java.util.LinkedList[OutboxMessage]

      def send(message: OutboxMessage): Unit = {
        val dropped = synchronized {
          if (stopped) {
            true
          } else {
            messages.add(message)
            false
          }
        }
        if (dropped) {
          message.onFailure(new SparkException("Message is dropped because Outbox is stopped"))
        } else {
          drainOutbox()
        }
      }

      private def drainOutbox(): Unit = {
        var message: OutboxMessage = null
        synchronized {
          ...
          if (client == null) {
            // There is no connect task but client is null, so we need to launch the connect task.
            launchConnectTask()
            return
          }
          ...
          message = messages.poll()
          ...
          draining = true
        }
        while (true) {
          try {
            val _client = synchronized { client }
            if (_client != null) {
              message.sendWith(_client)
            } else {
              assert(stopped == true)
            }
          } catch {
            case NonFatal(e) =>
              handleNetworkFailure(e)
              return
          }
          synchronized {
            if (stopped) {
              return
            }
            message = messages.poll()
            if (message == null) {
              draining = false
              return
            }
          }
        }
      }
    }
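The shape of drainOutbox(), taking one message under the lock, sending it outside the lock, and letting only one caller drain at a time, can be tried out in a reduced model. The sketch assumes the `draining` flag acts as a single-drainer guard; `ToyOutbox` and its `deliver` hook are hypothetical, and connection setup, stopping, and failure handling are left out.

```scala
import java.util.LinkedList

object OutboxDrainSketch {
  // `deliver` is a hypothetical hook standing in for message.sendWith(client).
  final class ToyOutbox(deliver: String => Unit) {
    private val messages = new LinkedList[String]()
    private var draining = false

    def send(message: String): Unit = {
      synchronized { messages.add(message) }
      drainOutbox()
    }

    private def drainOutbox(): Unit = {
      var message: String = null
      synchronized {
        if (draining) return            // someone else is already draining
        message = messages.poll()
        if (message == null) return     // nothing queued
        draining = true
      }
      while (true) {
        deliver(message)                // sending happens outside the lock
        synchronized {
          message = messages.poll()
          if (message == null) {
            draining = false
            return
          }
        }
      }
    }
  }
}
```

A send() issued while a drain is in progress only enqueues its message; the active drainer picks it up on its next poll(), which keeps delivery in FIFO order.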

III. Server-side receiving, the dispatcher, and the inbox

1. Where server-side dispatch ends: an overview of RpcEndpoint

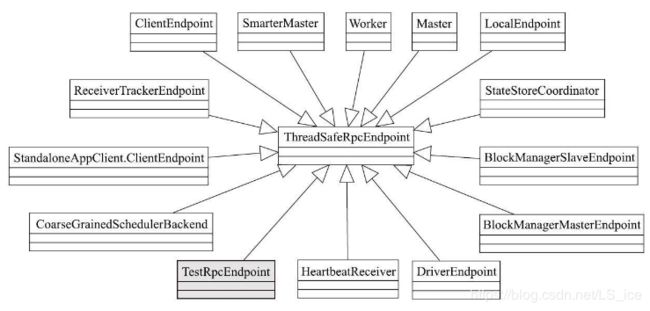

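The figure above shows the RpcEndpoint class hierarchy. receive() handles one-way messages and receiveAndReply() handles RPC messages that need a reply; these are the key methods each application endpoint implements to process messages.

ThreadSafeRpcEndpoint is a trait that marks a thread-safe endpoint: messages within one such endpoint are processed in order, one at a time, while messages for different endpoints are processed concurrently.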
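Both handler methods return a PartialFunction, so an endpoint lists only the messages it understands and the caller decides what to do with the rest. The following is a minimal sketch of that contract; `ToyEndpoint`, `ToyCallContext`, `CounterEndpoint`, and `deliverOneWay` are illustrative stand-ins, not Spark's RpcEndpoint API.

```scala
object EndpointSketch {
  // Hypothetical, much-reduced stand-in for RpcCallContext.
  trait ToyCallContext { def reply(response: Any): Unit }

  // Hypothetical, much-reduced stand-in for RpcEndpoint.
  trait ToyEndpoint {
    def receive: PartialFunction[Any, Unit]
    def receiveAndReply(context: ToyCallContext): PartialFunction[Any, Unit]
  }

  final class CounterEndpoint extends ToyEndpoint {
    private var count = 0

    // One-way messages: no reply is sent.
    override def receive: PartialFunction[Any, Unit] = {
      case "increment" => count += 1
    }

    // Request/reply messages: the answer goes back through the context.
    override def receiveAndReply(context: ToyCallContext): PartialFunction[Any, Unit] = {
      case "get" => context.reply(count)
    }
  }

  // Rejects any message the partial function does not cover.
  def deliverOneWay(endpoint: ToyEndpoint, message: Any): Unit =
    endpoint.receive.applyOrElse[Any, Unit](message, { msg =>
      throw new IllegalArgumentException(s"Unsupported message $msg")
    })
}
```

`CounterEndpoint` keeps unsynchronized mutable state, which is only safe if its messages are handled one at a time; that is the guarantee a thread-safe endpoint relies on.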

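2. Message dispatch in the Dispatcher

The Dispatcher is created in NettyRpcEnv and improves NettyRpcEnv's ability to process messages asynchronously and in parallel.

Using multiple threads, the Dispatcher routes each RPC message to the RpcEndpoint that must handle it.

Dispatcher member variables, with comments (including the inner class EndpointData):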

    private[netty] class Dispatcher(nettyEnv: NettyRpcEnv, numUsableCores: Int) extends Logging {

      // Per-endpoint data: the endpoint name, the endpoint instance, and its reference (ref)
      private class EndpointData(
          val name: String,
          val endpoint: RpcEndpoint,
          val ref: NettyRpcEndpointRef) {
        // The inbox of this endpoint; it holds a message queue, messages: LinkedList[InboxMessage]
        // Inbox offers post() and process() to accept and handle messages, much like the client-side Outbox
        val inbox = new Inbox(ref, endpoint)
      }

      // Maps an endpoint name to its EndpointData
      private val endpoints: ConcurrentMap[String, EndpointData] =
        new ConcurrentHashMap[String, EndpointData]

      // Maps an endpoint to its RpcEndpointRef, which callers use to ask() and send()
      private val endpointRefs: ConcurrentMap[RpcEndpoint, RpcEndpointRef] =
        new ConcurrentHashMap[RpcEndpoint, RpcEndpointRef]

      // After a message is posted to an EndpointData's inbox, the EndpointData itself is offered to
      // receivers, so that MessageLoop, which loops on receivers.take(), will process it
      // Usage: data.inbox.post(message); receivers.offer(data)
      private val receivers = new LinkedBlockingQueue[EndpointData]
    }

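Dispatcher exposes the methods postRemoteMessage(), postLocalMessage(), and postOneWayMessage().

These methods are called mainly by NettyRpcHandler on the server side. They can also be reached from an RpcEndpointRef to deliver local messages.

NettyRpcHandler is a member of the server-side TransportRequestHandler. For both one-way messages and RPC requests arriving on a Netty channel, NettyRpcHandler's receive() method is called to put the message into the dispatcher.

The Dispatcher's in-memory model:

Step ① calls the Inbox's post() method to put the message into the Inbox's messages queue.

Step ② puts the EndpointData associated with that Inbox into receivers.

Step ③ shows MessageLoop, on each iteration, first taking an EndpointData from receivers.

Step ④ runs the process() method of the Inbox in that EndpointData to actually handle the messages.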
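Steps ① and ② can be modeled with two plain Java collections. This is a sketch under simplifying assumptions: messages are strings, `ToyDispatcher` and `ToyEndpointData` are hypothetical names, and the inbox is a bare LinkedList rather than Spark's Inbox.

```scala
import java.util.LinkedList
import java.util.concurrent.{ConcurrentHashMap, LinkedBlockingQueue}

object DispatcherSketch {
  // Hypothetical stand-in for EndpointData: a name plus its message queue.
  final class ToyEndpointData(val name: String) {
    val inbox = new LinkedList[String]()
  }

  final class ToyDispatcher {
    private val endpoints = new ConcurrentHashMap[String, ToyEndpointData]()
    // Endpoints that currently have something to process.
    val receivers = new LinkedBlockingQueue[ToyEndpointData]()

    def register(name: String): Unit = {
      endpoints.putIfAbsent(name, new ToyEndpointData(name))
    }

    // Returns false when no endpoint is registered under endpointName.
    def post(endpointName: String, message: String): Boolean = {
      val data = endpoints.get(endpointName)
      if (data == null) {
        false
      } else {
        data.inbox.synchronized { data.inbox.add(message) } // step 1: into the inbox
        receivers.offer(data)                               // step 2: mark endpoint as ready
        true
      }
    }
  }
}
```

Queuing the endpoint rather than the message in `receivers` is what lets a worker thread pick up an endpoint and then work through that endpoint's own inbox.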

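3. Polling inbox data in MessageLoop

MessageLoop is an inner class of Dispatcher that implements Java's Runnable interface. Instances run continuously in the dispatcher's thread pool, whose size is configured by spark.rpc.netty.dispatcher.numThreads.

MessageLoop is simple and has only a run() method:

- Call receivers.take() to obtain an endpoint.

- Call that endpoint's inbox.process() to handle all of the endpoint's pending data.

- Call receivers.take() again to obtain the next endpoint.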

    private[netty] class Dispatcher(nettyEnv: NettyRpcEnv, numUsableCores: Int) extends Logging {

      private class MessageLoop extends Runnable {
        override def run(): Unit = {
          while (true) {
            try {
              val data = receivers.take()
              if (data == PoisonPill) {
                // Put PoisonPill back so that other MessageLoops can see it.
                receivers.offer(PoisonPill)
                return
              }
              data.inbox.process(Dispatcher.this)
            } catch {
              ...
            }
          }
          ...
        }
      }
    }
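The PoisonPill handling is a general shutdown pattern for several consumers sharing one blocking queue: whoever takes the pill puts it back before exiting, so every other loop sees it too. Below is a self-contained sketch with plain Runnable tasks in place of EndpointData; `MessageLoopSketch` and `runAll` are names made up for this example.

```scala
import java.util.concurrent.{Executors, LinkedBlockingQueue, TimeUnit}

object MessageLoopSketch {
  // Sentinel that tells every loop to exit; compared by reference.
  private val PoisonPill: Runnable = new Runnable { override def run(): Unit = () }

  // Runs all tasks on numThreads loops, then stops the loops with one poison pill.
  // Returns true if every loop terminated within the wait time.
  def runAll(tasks: Seq[Runnable], numThreads: Int): Boolean = {
    val receivers = new LinkedBlockingQueue[Runnable]()
    val pool = Executors.newFixedThreadPool(numThreads)
    val loop = new Runnable {
      override def run(): Unit = {
        var running = true
        while (running) {
          val task = receivers.take()
          if (task eq PoisonPill) {
            receivers.offer(PoisonPill) // put it back so the other loops also stop
            running = false
          } else {
            task.run()
          }
        }
      }
    }
    (1 to numThreads).foreach(_ => pool.execute(loop))
    tasks.foreach(t => receivers.offer(t))
    receivers.offer(PoisonPill) // queued after all tasks, so it is taken last
    pool.shutdown()
    pool.awaitTermination(10, TimeUnit.SECONDS)
  }
}
```

A single pill is enough for any number of loops because it is never consumed, only passed along.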

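4. How the Endpoint responds to inbox data

Inbox members and methods:

- Member: messages, the message queue.

- Method: post(), called by Dispatcher's postMessage(), appends a message to the messages queue.

- Method: process(), takes messages from the messages queue and handles them.

How process() handles messages:

- It checks numActiveThreads first. If another MessageLoop is already processing this endpoint's Inbox, that is, (!enableConcurrent && numActiveThreads != 0), it returns immediately, which preserves the ThreadSafeRpcEndpoint guarantee. Note that a single call to Inbox.process() handles every message in the messages queue, one after another.

- It polls messages from the queue in a loop and handles each according to its type: RpcMessage, OneWayMessage, OnStart, RemoteProcessConnected, and so on.

- Once all messages have been handled, it decrements numActiveThreads by 1 and this call to process() returns.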
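Before reading the real implementation, the numActiveThreads guard can be tried out in a reduced model. In this sketch `ToyInbox` is hypothetical, messages are strings, `handle` stands in for the endpoint callbacks, and enableConcurrent is fixed at construction time instead of being switched on by an OnStart message.

```scala
import java.util.LinkedList

object InboxGuardSketch {
  final class ToyInbox(enableConcurrent: Boolean, handle: String => Unit) {
    private val messages = new LinkedList[String]()
    private var numActiveThreads = 0

    def post(message: String): Unit = synchronized { messages.add(message) }

    def process(): Unit = {
      var message: String = null
      synchronized {
        // Guard: a non-concurrent inbox admits only one active process() call.
        if (!enableConcurrent && numActiveThreads != 0) return
        message = messages.poll()
        if (message == null) return
        numActiveThreads += 1
      }
      while (true) {
        handle(message) // runs outside the lock
        synchronized {
          if (!enableConcurrent && numActiveThreads != 1) {
            numActiveThreads -= 1
            return
          }
          message = messages.poll()
          if (message == null) {
            numActiveThreads -= 1
            return
          }
        }
      }
    }
  }
}
```

Calling process() again from inside `handle` imitates a second MessageLoop arriving while the first is still busy: with enableConcurrent set to false the inner call returns at the guard, and with true it starts handling the next message itself.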

    private[netty] class Inbox(
        val endpointRef: NettyRpcEndpointRef,
        val endpoint: RpcEndpoint)
      extends Logging {

      inbox =>  // Self alias, so that inbox.synchronized below refers to this instance

      protected val messages = new java.util.LinkedList[InboxMessage]()

      // For endpoints that are NOT ThreadSafeRpcEndpoint, enableConcurrent is set to true
      // when process() handles the OnStart message
      private var enableConcurrent = false

      def post(message: InboxMessage): Unit = inbox.synchronized {
        if (stopped) {
          ...
        } else {
          messages.add(message)
          false
        }
      }

      def process(dispatcher: Dispatcher): Unit = {
        var message: InboxMessage = null
        inbox.synchronized {
          if (!enableConcurrent && numActiveThreads != 0) {
            return
          }
          message = messages.poll()
          if (message != null) {
            numActiveThreads += 1
          } else {
            return
          }
        }
        while (true) {
          safelyCall(endpoint) {
            message match {
              case RpcMessage(_sender, content, context) =>
                try {
                  endpoint.receiveAndReply(context).applyOrElse[Any, Unit](content, { msg =>
                    throw new SparkException(s"Unsupported message $message from ${_sender}")
                  })
                } catch {
                  case NonFatal(e) =>
                    context.sendFailure(e)
                    throw e
                }

              case OneWayMessage(_sender, content) =>
                endpoint.receive.applyOrElse[Any, Unit](content, { msg =>
                  throw new SparkException(s"Unsupported message $message from ${_sender}")
                })

              case OnStart =>
                endpoint.onStart()
                if (!endpoint.isInstanceOf[ThreadSafeRpcEndpoint]) {
                  inbox.synchronized {
                    if (!stopped) {
                      enableConcurrent = true
                    }
                  }
                }

              case OnStop =>
                val activeThreads = inbox.synchronized { inbox.numActiveThreads }
                ...
                dispatcher.removeRpcEndpointRef(endpoint)
                endpoint.onStop()
                ...

              case RemoteProcessConnected(remoteAddress) =>
                endpoint.onConnected(remoteAddress)

              case RemoteProcessDisconnected(remoteAddress) =>
                endpoint.onDisconnected(remoteAddress)

              case RemoteProcessConnectionError(cause, remoteAddress) =>
                endpoint.onNetworkError(cause, remoteAddress)
            }
          }

          inbox.synchronized {
            if (!enableConcurrent && numActiveThreads != 1) {
              // If we are not the only one worker, exit
              numActiveThreads -= 1
              return
            }
            message = messages.poll()
            if (message == null) {
              numActiveThreads -= 1
              return
            }
          }
        }
      }
    }

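- Key point: the RpcMessage and OneWayMessage branches call endpoint.receiveAndReply(context) and endpoint.receive respectively. This is where the application-level endpoint is invoked to handle the message.

- Example of an endpoint handling messages: BlockManagerMasterEndpoint, the master endpoint of the cluster's block manager: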

    class BlockManagerMasterEndpoint(
        override val rpcEnv: RpcEnv,
        val isLocal: Boolean,
        conf: SparkConf,
        listenerBus: LiveListenerBus)
      extends ThreadSafeRpcEndpoint with Logging {

      override def receiveAndReply(context: RpcCallContext): PartialFunction[Any, Unit] = {
        case RegisterBlockManager(blockManagerId, maxOnHeapMemSize, maxOffHeapMemSize, slaveEndpoint) =>
          context.reply(register(blockManagerId, maxOnHeapMemSize, maxOffHeapMemSize, slaveEndpoint))

        case _updateBlockInfo @
        ...

        case GetLocations(blockId) =>
          context.reply(getLocations(blockId))

        case GetLocationsAndStatus(blockId) =>
          context.reply(getLocationsAndStatus(blockId))

        case GetLocationsMultipleBlockIds(blockIds) =>
          context.reply(getLocationsMultipleBlockIds(blockIds))
      }